Evaluating Efficacy in Evolution Education: Evidence-Based Approaches for Scientific Training

Abstract

This article synthesizes current research on the efficacy of various evolution education approaches, targeting researchers and drug development professionals for whom evolutionary theory is a foundational scientific framework. It explores persistent conceptual challenges and the pedagogical content knowledge required for effective instruction. The analysis covers innovative methodologies, from active learning strategies to digital tools like concept mapping and AI-driven personalized learning. The article further investigates solutions for common learning barriers and provides a comparative evaluation of educational interventions, concluding with implications for cultivating the critical and complex thinking skills essential for biomedical research and innovation.

Understanding the Landscape: Core Challenges and Knowledge Gaps in Evolution Education

Identifying Persistent Student Misconceptions and Cognitive Biases

Understanding and addressing persistent student misconceptions and cognitive biases is fundamental to improving the efficacy of evolution education. Although evolution is a foundational concept in biology, a significant proportion of students and the general public struggle to accept its principles, particularly human evolution [1]. Research indicates that around half of the American public does not agree that humans evolved from non-human species, and approximately one-third of undergraduate biology students sampled across the United States do not accept that all life shares a common ancestor [1]. This rejection persists even among students enrolled in biology courses, presenting a substantial challenge for educators. Identifying and addressing these misconceptions is not merely an academic exercise; it has real-world implications for scientific literacy, public policy, and professional practice in fields ranging from medicine to education [1]. This section systematically compares the efficacy of different evolution education approaches, providing researchers and educators with evidence-based strategies for overcoming the most stubborn cognitive obstacles to understanding evolution.

Theoretical Framework: Cognitive Obstacles to Understanding Evolution

Psychological Biases in Evolution Learning

Decades of research in cognitive psychology, developmental psychology, and science education have revealed that students regularly misunderstand what evolution is and how it occurs [2]. These misunderstandings are not solely the result of religious or cultural resistance; they also stem from fundamental cognitive biases that pose substantial obstacles to understanding biological change.

Essentialist Reasoning: Psychological essentialism is the belief that members of a category (e.g., a species) are united by a common, unchanging essence [2]. This leads to the assumption that species are immutable and discrete, which is fundamentally incompatible with the evolutionary concept of gradual change and common ancestry. Essentialist thinking results in "boundary intensification," making it difficult for students to discern relationships among species or understand variation within a species [2].

Teleological Reasoning: Teleology is the tendency to explain natural phenomena by reference to purpose or design [2]. Students often assume that evolution is a forward-looking, goal-directed process, such as believing that giraffes developed long necks "in order to" reach high leaves. This contradicts the evolutionary logic of blind variation and selective retention. This "promiscuous teleology" may emerge from a naïve theory of mind that inappropriately attributes intentional origins to natural objects and processes [2].

Existential Anxiety: Beyond cognitive biases, evolutionary theory can invoke existential anxiety in some students, which presents an additional barrier to acceptance [2]. The idea that humans are the product of natural processes rather than purposeful design can be deeply unsettling, leading to motivated rejection of evolutionary concepts.

The Religious Conflict Factor

In the United States, variables related to religion are the strongest predictors of evolution rejection [1]. Both an individual's religious affiliation and their religiosity correlate strongly with their evolution acceptance. More than 65% of undergraduate biology students from large samples across the nation identify as religious, and over 50% specifically identify as Christian [1]. However, research shows that the strongest predictor of religious students' evolution acceptance is their perceived conflict between evolution and religion, which helps explain the relationship between religious identity and evolution rejection [1]. Many students operate under the misconception that one must be an atheist to accept evolution, a perception that strongly predicts rejection among religious students [1].

Comparative Analysis of Educational Approaches

The following section provides a systematic comparison of different educational interventions designed to address persistent misconceptions and biases in evolution education. The table below summarizes key experimental findings from recent studies.

Table 1: Efficacy Comparison of Evolution Education Approaches

| Educational Approach | Target Population | Key Outcome Measures | Reported Efficacy | Implementation Context |
|---|---|---|---|---|
| Human vs. Non-Human Examples [3] | Introductory high school biology students (Alabama) | Understanding and acceptance of evolution | Both approaches increased understanding & acceptance in >70% of students; human examples potentially more effective for common ancestry | Curriculum units using BSCS 5E instructional model (Engage, Explore, Explain, Elaborate, Evaluate) |
| Conflict-Reducing Practices [1] | Undergraduate students in 19 biology courses (2,623 participants) | Perceived conflict, compatibility, acceptance of human evolution | Significant decreases in conflict, increases in compatibility & acceptance compared to control | Short evolution video with embedded conflict-reducing messages |
| Cultural & Religious Sensitivity (CRS) [3] | Introductory high school biology students (Alabama) | Student comfort, perceived respect for views | Overwhelmingly positive feedback; helped religious students feel more comfortable | Dedicated classroom activity acknowledging and respecting diverse views on evolution |
| Instructor Religious Identity with Conflict-Reducing Practices [1] | Undergraduate biology students | Perceived compatibility, evolution acceptance | Christian and non-religious instructors equally effective (except atheist students responded better to non-religious instructors) | Evolution video presenting conflict-reducing practices delivered by instructors of different stated religious identities |

Analysis of Comparative Efficacy

The experimental data reveal several important patterns for educators and researchers. Integrating human examples into the evolution curriculum appears to be at least as effective as using non-human examples and may offer specific advantages for teaching challenging concepts such as common ancestry [3]. This finding is significant given that some educators may avoid human examples due to perceived controversy.

Furthermore, structured approaches to address religion and evolution show consistent, positive outcomes across multiple studies. Conflict-reducing practices and Cultural and Religious Sensitivity (CRS) activities significantly improve outcomes for religious students without compromising scientific content [3] [1]. Importantly, the efficacy of these practices in a controlled, randomized study design provides strong evidence for their causal impact [1].

A particularly noteworthy finding for teacher training is that an instructor's personal religious identity does not appear to be a barrier to implementing these strategies successfully. Christian and non-religious instructors were equally effective at delivering conflict-reducing messages, with the one exception that atheist students responded better to non-religious instructors, which makes this a widely applicable approach [1].

Experimental Protocols and Methodologies

Protocol: Curriculum Development and Testing (LUDA Project)

The LUDA project provides a rigorous model for developing and testing evolution curriculum materials [3].

- Curriculum Design: The process was based on Understanding by Design, beginning with granular learning objectives aligned with state science standards. The team proposed more detailed learning objectives than the broad standards outlined in the Alabama Course of Study.

- Instructional Model: The BSCS 5E instructional model was applied at the lesson level. This constructivist-based strategy sequences lessons into five stages:

  - Engage: Activities surface students' prior ideas and generate interest.

  - Explore: Students participate in common experiences to build initial explanations.

  - Explain: Students formally construct explanations with teacher feedback.

  - Elaborate: Students extend and apply concepts to new situations.

  - Evaluate: Students assess their understanding and demonstrate it to others.

- Materials Development: The team developed draft assessments and lesson outlines, which were reviewed by an advisory board. Short films from HHMI BioInteractive were incorporated into many lessons.

- Iterative Testing: Teachers used the lessons in two rounds of field tests. The materials were revised based on feedback from both teachers and students before full implementation.

Table 2: Research Reagent Solutions for Evolution Education Research

| Research Tool | Function/Application | Example from Studies |
|---|---|---|
| Evolution Acceptance Instruments | Quantitatively measure students' acceptance of evolutionary concepts | Multiple instruments exist, but require careful selection for religious populations [4] |
| Conceptual Assessments | Evaluate understanding of core evolutionary mechanisms | Assessments targeting natural selection, common ancestry, and variation [2] |
| Cultural & Religious Sensitivity (CRS) Resources | Provide structured activities to reduce perceived conflict between religion and science | Classroom activity acknowledging diverse views and discussing compatibility [3] |
| Comparative Curriculum Units | Isolate the effect of specific instructional examples (e.g., human vs. non-human) | "Eagle" unit (with human examples) vs. "Elephant" unit (non-human only) [3] |
| Stimulated Recall Discussions | Elicit teacher cognition and decision-making processes | Used with pre-service teachers to explore belief development [5] |

Protocol: Measuring Intervention Impact

Robust measurement is critical for evaluating the efficacy of educational approaches.

- Instrument Selection: Researchers must carefully select evolution acceptance instruments, paying close attention to content validity for religious populations [4]. Some instrument items may reference specific religious texts (e.g., the Bible) or concepts (e.g., God), which can create construct-irrelevant variance for students from different religious backgrounds [4].

- Multi-faceted Assessment: Studies should employ a combination of quantitative and qualitative measures.

- Control for Covariates: Analyses should account for potential confounding variables such as prior evolution knowledge, school socioeconomic status, student religiosity, and parental education levels [3].

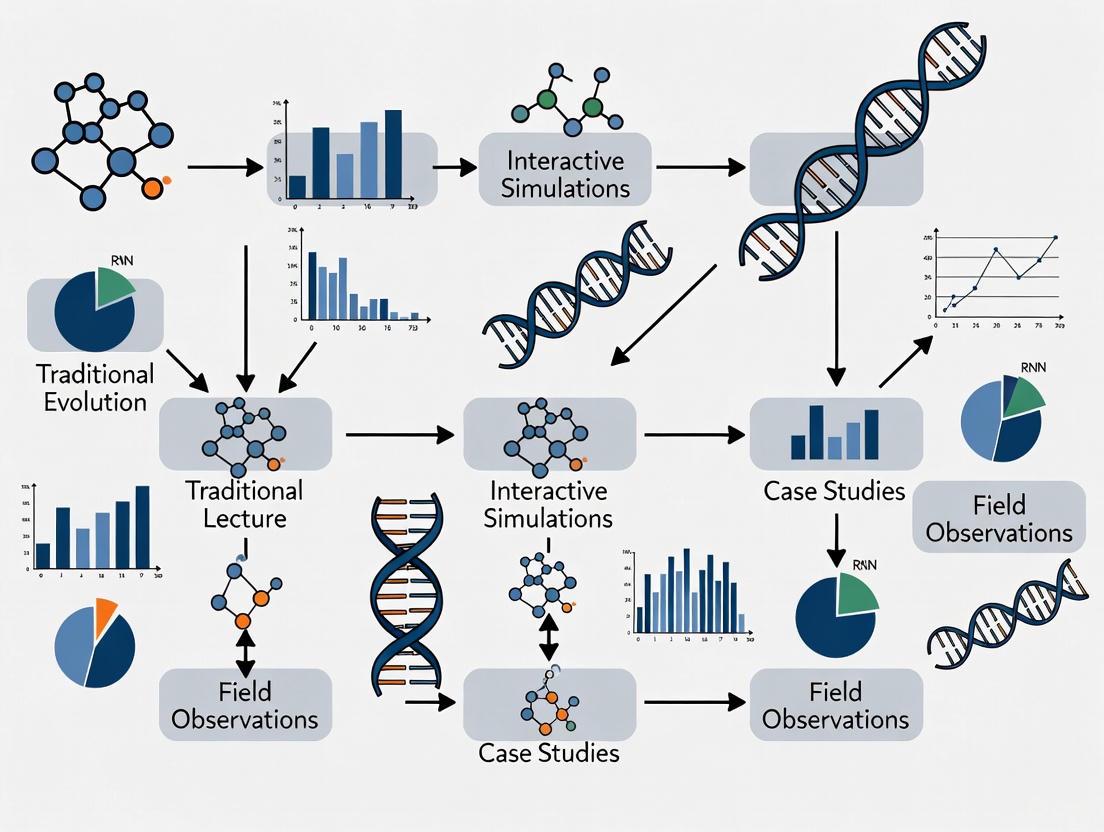

The diagram below illustrates the core workflow for developing and testing evolution education interventions, from identifying cognitive obstacles to measuring outcomes.

Discussion and Research Implications

The comparative analysis of evolution education approaches reveals several critical implications for researchers and educators. First, the most effective strategies address both cognitive and affective barriers to learning evolution. While clarifying scientific concepts is necessary, it is insufficient if students' cognitive biases (essentialism, teleology) and personal concerns (religious conflict) are not simultaneously addressed [1] [2]. The success of conflict-reducing practices demonstrates that explicitly discussing the relationship between science and religion, without compromising scientific content, can significantly improve educational outcomes for religious students [3] [1].

Second, the context of examples used in teaching matters. The finding that human examples can be as effective as, or more effective than, non-human examples for teaching certain concepts challenges any default avoidance of human evolution [3]. However, research also suggests that students with lower prior knowledge might benefit more initially from non-human examples [3], indicating the potential value of a strategic progression in example selection.

A significant methodological implication concerns assessment. The field requires more consistent and validated measurement instruments, particularly those with demonstrated validity across diverse religious populations [4]. Inconsistent instrument use across studies creates challenges for comparing results and building a coherent evidence base.

Future research should explore the long-term retention of benefits from these interventions and their efficacy across different cultural and educational contexts. Additionally, more work is needed to integrate these strategies effectively into teacher education programs, ensuring that pre-service teachers are equipped to implement evidence-based practices for evolution education [5].

Analyzing Gaps in Collective Pedagogical Content Knowledge (PCK)

Collective Pedagogical Content Knowledge (PCK) represents the shared understanding and effective teaching practices that educators within a community develop for making specific subject matter comprehensible to students. In the context of evolution education, robust collective PCK is particularly vital yet challenging to establish. Evolution serves as the foundational unifying theory for all biological sciences, yet it faces substantial educational barriers including conceptual complexities, cultural objections, and persistent student misconceptions [6]. Despite over 150 years of scientific acceptance, evolution remains poorly understood and frequently rejected by the public, with nearly one-third of Americans not fully endorsing either evolutionary or creationist perspectives [7]. This educational crisis stems partly from insufficient collective PCK, leaving educators underprepared to address the unique conceptual and pedagogical challenges of evolution instruction.

Research indicates that teachers often avoid teaching evolution or dedicate minimal instructional time to it, even when national standards emphasize its importance [7]. Many educators demonstrate limited understanding of evolutionary concepts and struggle with the nature of science fundamentals where most misconceptions originate [8]. This gap in collective PCK has direct consequences for student learning, as evidenced by studies showing that even biology majors often retain significant misconceptions about evolution after instruction [7]. This comparative guide analyzes the efficacy of different evolution education approaches to identify evidence-based strategies for strengthening collective PCK in this critical domain.

Comparative Analysis of Evolution Education Approaches

Quantitative Comparison of Pedagogical Efficacy

Table 1: Comparative effectiveness of pedagogical approaches in evolution education

| Pedagogical Approach | Cognitive Gain Effect Size | Affective Gain Impact | Behavioral Gain Impact | Key Measured Outcomes |
|---|---|---|---|---|
| Problem-Based Learning | d = 0.89 [9] | Moderate improvement | Significant improvement | Enhanced critical thinking, problem-solving skills, knowledge application [9] |
| Project-Based Learning | d = 0.95-1.36 [9] | Moderate improvement | Significant improvement | Improved conceptual understanding, application skills, civic engagement [9] |
| Inquiry-Based Learning | d = 0.35-1.26 [9] | Moderate improvement | Moderate improvement | Better student achievement, metacognitive skills, science process skills [9] |
| Evolutionary Psychology Course | Significant increase (p<0.001) [7] | Significant improvement | Not measured | Increased knowledge/relevance, decreased creationist reasoning and misconceptions [7] |
| Traditional Biology Course | No significant change [7] | No significant change | Not measured | Increased evolutionary misconceptions in some cases [7] |
| Cosmos-Evidence-Ideas Model | Moderate improvement [6] | Mild improvement | Not measured | Slightly greater performance increases compared to standard approaches [6] |

Specialized Interventions for Evolution Education

Table 2: Specialized interventions and their impacts on evolution education

| Intervention Type | Target Audience | Duration | Key Outcomes | Limitations |
|---|---|---|---|---|
| Hands-on PD with 3D Printing [8] | K-12 Science Teachers | 3-day workshop | Significant improvement in teacher self-efficacy and perception of evolution teaching | Limited long-term follow-up data |
| STEAM Professional Development [10] | Pre-service STEM Teachers | 5-stage framework (18 months) | Enhanced teacher self-efficacy in integrating arts/humanities with STEM | Complex implementation requirements |
| "Ways of Knowing" Discussions [8] | Teachers & Students | Integrated with curriculum | Addresses worldview conflicts, supports conceptual change | Requires specialized facilitator training |

Experimental Protocols and Methodologies

Curriculum Intervention Studies

Protocol 1: Comparative Course Efficacy Assessment [7]

- Research Design: Pre-test/post-test control group design with multiple cohorts

- Participants: 868 students across evolutionary psychology, biology with evolutionary content, and political science (control) courses

- Assessment Tool: Evolutionary Attitudes and Literacy Survey (EALS) measuring:

  - Knowledge/Relevance subscale

  - Creationist Reasoning subscale

  - Evolutionary Misconceptions subscale

  - Exposure to Evolution subscale

- Implementation: Multiple group repeated measures confirmatory factor analysis to examine latent mean differences

- Duration: Full academic semester with pre-test during first week and post-test during final week

- Data Analysis: Latent mean differences calculation with statistical significance testing at p<0.05 level
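As a much simpler stand-in for the latent mean comparison described above, the sketch below computes an observed-score pre/post change and a paired t statistic for one subscale. It is not the confirmatory factor analysis used in the study [7]; the function name `paired_change` and the scores are hypothetical and serve only to illustrate the pre-test/post-test logic.

```python
import math
from statistics import mean, stdev


def paired_change(pre, post):
    """Return (mean change, SD of change, paired t statistic) for matched scores.

    Assumes each position in `pre` and `post` belongs to the same student
    and that the change scores are not all identical (SD > 0).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need matched pre/post scores for at least two students")
    diffs = [after - before for before, after in zip(pre, post)]
    d_mean = mean(diffs)
    d_sd = stdev(diffs)  # sample standard deviation of the change scores
    t_stat = d_mean / (d_sd / math.sqrt(len(diffs)))
    return d_mean, d_sd, t_stat


# Hypothetical subscale scores for five students (illustrative, not study data).
pre_scores = [3.0, 4.0, 2.5, 3.5, 4.0]
post_scores = [3.5, 4.5, 3.5, 3.5, 5.0]

change, sd, t = paired_change(pre_scores, post_scores)
print(f"mean change = {change:.2f}, SD = {sd:.2f}, t({len(pre_scores) - 1}) = {t:.2f}")
```

The t statistic would then be compared against a t distribution with n - 1 degrees of freedom at the chosen significance level; a latent-variable model additionally accounts for measurement error, which this observed-score sketch ignores.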

Protocol 2: Professional Development Impact Assessment [8]

- Research Design: Mixed-methods approach with pre-test/post-test surveys and semi-structured focus groups

- Participants: K-12 science teachers participating in human evolution professional development

- Intervention Components:

  - Paleontology and human origins content knowledge building

  - Direct engagement with professional paleoanthropologists

  - Implementation strategy discussions with evolution education specialists

  - 3D printing technology integration for fossil replication

  - "Ways of knowing" discussions addressing cultural and religious concerns

- Duration: Intensive three-day workshop with lesson plan development component

- Data Collection: Validated surveys measuring teacher self-efficacy administered pre-workshop and post-workshop, followed by focus group interviews

- Analysis: Quantitative analysis of survey results with qualitative coding of interview transcripts

Meta-Analytical Methodology

Protocol 3: Pedagogical Impact Meta-Analysis [9]

- Literature Search: PRISMA methodology for systematic review of 32 eligible studies

- Inclusion Criteria: Studies investigating pedagogies in mixed-ability high school biology classrooms with measurable learning gains

- Outcome Measures:

  - Cognitive gains (comprehension, information retention, critical thinking)

  - Affective gains (attitudes, confidence, motivation)

  - Behavioral gains (engagement, leadership skills, teamwork)

- Effect Size Calculation: Standardized mean differences (Cohen's d) with confidence intervals

- Heterogeneity Assessment: I² statistic to quantify variability across studies

- Moderator Analysis: Examination of pedagogical models as potential sources of systematic variation
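The effect size and heterogeneity steps above can be sketched in a few lines. The code below is a minimal illustration, assuming two independent groups per study, the pooled-standard-deviation form of Cohen's d, a common large-sample approximation for its variance, and inverse-variance weights for Cochran's Q. All scores, effect sizes, and variances are hypothetical and are not taken from the meta-analysis [9].

```python
import math
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * variance(treatment)
                  + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / math.sqrt(pooled_var)


def d_variance(d, n1, n2):
    """Large-sample approximation to the sampling variance of Cohen's d."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))


def i_squared(effects, variances):
    """I^2 in percent, derived from Cochran's Q with inverse-variance weights."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    return 0.0 if q <= 0 else max(0.0, (q - df) / q) * 100.0


# Hypothetical post-test scores for one study (illustrative only).
d = cohens_d([78, 85, 90, 72, 88], [70, 75, 80, 68, 77])
print(f"d = {d:.2f}, var(d) = {d_variance(d, 5, 5):.3f}")

# Hypothetical per-study effect sizes and their variances (illustrative only).
i2 = i_squared([0.30, 0.80, 1.20], [0.04, 0.05, 0.06])
print(f"I^2 = {i2:.1f}%")
```

Higher I² values indicate that more of the observed variation in effect sizes reflects real differences between studies rather than sampling error, which is the situation in which a moderator analysis by pedagogical model becomes informative.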

Visualization of Evolution Education Approaches

Figure: Experimental Workflow for Evolution Education Research (diagram not reproduced)

Figure: Knowledge Integration Pathways in Evolution Education (diagram not reproduced)

Research Reagent Solutions for Evolution Education

Table 3: Essential research instruments and materials for evolution education studies

| Research Tool | Type/Format | Primary Application | Key Features & Functions |
|---|---|---|---|
| Evolutionary Attitudes and Literacy Survey (EALS) [7] | Validated Assessment Instrument | Measuring knowledge, attitudes, and misconceptions | Multiple subscales (Knowledge/Relevance, Creationist Reasoning, Evolutionary Misconceptions); Pre-test/post-test capability |
| 3D Fossil Replicas & Printing Technology [8] | Physical/Digital Manipulatives | Hands-on paleontological instruction | Provides tactile access to fossil evidence; Overcomes limited access to original fossils; Supports inquiry-based learning |
| Professional Development Framework [10] | Structured Intervention Protocol | Teacher self-efficacy building | Five-stage framework; 18-month longitudinal implementation; Integrates content knowledge with pedagogical skills |
| PRISMA Methodology [9] | Systematic Review Protocol | Meta-analytical research | Standardized literature screening and selection; Quality assessment of studies; Effect size aggregation |
| Cosmos-Evidence-Ideas Model [6] | Conceptual Teaching Framework | Evolution curriculum design | Structured approach to teaching evolutionary theory; Emphasizes scientific methodology and evidence evaluation |

Discussion and Research Implications

The comparative analysis reveals significant disparities in the efficacy of different evolution education approaches, highlighting substantial gaps in current collective PCK. Problem-based and project-based learning demonstrate notably large effect sizes (d = 0.89-1.36) for cognitive gains [9], suggesting these approaches effectively address conceptual barriers in evolution understanding. Particularly striking is the finding that evolutionary psychology courses produce significant improvements in knowledge/relevance while decreasing creationist reasoning and misconceptions, whereas traditional biology courses show no significant change in knowledge/relevance and sometimes increase misconceptions [7]. This suggests that how evolution is taught matters more than simply including it in the curriculum.

Professional development interventions that specifically address pedagogical content knowledge gaps show promise for enhancing evolution education. Workshops incorporating 3D printing technology, "ways of knowing" discussions, and direct scientist engagement significantly improve teacher self-efficacy [8], which is crucial for effective implementation. The five-stage STEAM professional development framework demonstrates that sustained support over 18 months positively impacts both teacher self-efficacy and student outcomes [10]. These findings suggest that building collective PCK requires moving beyond content knowledge to address pedagogical strategies, technological integration, and worldview considerations specific to evolution education.

The persistence of evolutionary misconceptions despite traditional instruction points to critical gaps in how educators understand and address conceptual barriers like essentialism and teleology [6]. Effective approaches explicitly confront these intuitive but incorrect ways of thinking through conceptual conflict strategies, contextualized examples, and multidisciplinary connections. Future research should prioritize developing more sophisticated assessment tools that measure nuanced aspects of evolution understanding and identify specific PCK components that differentiate highly effective evolution educators from their less effective counterparts.

The Impact of Intuitive Conceptions on Understanding Evolutionary Mechanisms

Understanding evolutionary mechanisms remains a significant challenge in biology education, primarily due to persistent intuitive conceptions that conflict with scientific principles. Research across diverse populations reveals that intuitive reasoning patterns—teleological, essentialist, and anthropocentric thinking—consistently impair comprehension of natural selection and evolutionary processes [11]. These cognitive frameworks operate as default reasoning modes that individuals maintain from childhood through advanced education and even into professional scientific careers [11]. The impact of these intuitive conceptions extends beyond academic settings, influencing how researchers and drug development professionals interpret evolutionary patterns in pathogens, cancer development, and therapeutic resistance [11] [1].

The challenge is particularly pronounced in understanding antibiotic resistance, where studies show undergraduate students frequently produce and agree with misconceptions rooted in intuitive reasoning [11]. Despite formal education, these deep-seated cognitive patterns continue to shape biological understanding, suggesting that effective evolution education requires specifically targeted approaches that address these foundational conceptual barriers [11] [12]. This analysis compares the efficacy of various educational interventions designed to overcome intuitive conceptions and improve understanding of evolutionary mechanisms.

Defining Intuitive Reasoning Patterns in Evolutionary Biology

Cognitive psychology research has established that humans develop early intuitive assumptions to make sense of biological phenomena, and these patterns persist well beyond childhood into high school, undergraduate education, and professional practice [11]. Three primary forms of intuitive reasoning have been identified as particularly problematic for understanding evolution.

Table 1: Core Intuitive Reasoning Patterns and Their Characteristics

| Reasoning Pattern | Definition | Manifestation in Evolution | Prevalence in Student Populations |
|---|---|---|---|
| Teleological Reasoning | Attributing purpose or goals as causal agents for changes or events | "Finches diversified in order to survive"; "Bacteria evolve resistance to deal with antibiotics" | Present in nearly all students' written explanations [11] |
| Essentialist Reasoning | Assuming category members share uniform, static "essences" while ignoring variability | "The moths gradually became darker" (population transformation rather than variational change) | Strongly associated with transformational evolutionary views [11] |
| Anthropocentric Reasoning | Reasoning by analogy to humans or exaggerating human importance | "Plants want to bend toward the light"; viewing humans as biologically discontinuous from other animals | Particularly common in Western industrialized populations [11] |

Acceptance of a specific misconception is significantly associated with production of its corresponding intuitive reasoning form (all p ≤ 0.05) [11]. These intuitive reasoning patterns represent subtly appealing linguistic shorthand that can persist even when individuals possess formal knowledge of evolutionary mechanisms [11].

Quantitative Assessment of Misconception Prevalence and Intervention Efficacy

Prevalence of Evolutionary Misconceptions Across Populations

Research demonstrates that intuitive misconceptions about evolutionary mechanisms persist across diverse educational levels and geographic contexts. Studies of undergraduate students' understanding of antibiotic resistance reveal that a majority produce and agree with misconceptions, with intuitive reasoning present in nearly all students' written explanations [11]. Acceptance of misconceptions shows significant association with specific forms of intuitive thinking, highlighting the cognitive underpinnings of these conceptual errors [11].

Table 2: Evolution Acceptance and Knowledge Across Different Populations

| Population | Region | Acceptance Level (MATE) | Knowledge Level (KEE) | Primary Influencing Factors |
|---|---|---|---|---|
| Pre-service Teachers | Ecuador | 67.5/100 (Low) | 3.1/10 (Very Low) | Religiosity, Knowledge Deficits [13] |
| Undergraduate Biology Students | United States | ~65% (Moderate) | Variable | Religiosity, Perceived Conflict [1] |
| High School Students | Brazil | Lower than Italy | Lower than Italy | Economic & Sociocultural Factors [13] |
| High School Students | Mexico | Moderate to High | Not Reported | Religiosity (Negative Influence) [13] |

Global studies reveal significant variation in evolution acceptance, with religious affiliation and religiosity consistently correlating with evolution rejection [13] [1]. In the United States, approximately half of the public disagrees that humans evolved from non-human species, and around one-third of undergraduate biology students sampled nationwide do not accept that all life shares a common ancestor [1]. This rejection has practical implications for biomedical fields, as professionals who resist evolutionary thinking may be less likely to apply evolutionary medicine principles to human health and disease [1].

Efficacy of Educational Interventions

Recent controlled studies have quantified the impact of specific educational interventions on overcoming intuitive conceptions and improving evolution understanding.

Table 3: Efficacy of Evolution Education Interventions

| Intervention Type | Study Design | Key Outcome Measures | Results |
|---|---|---|---|
| Conflict-Reducing Practices | Randomized controlled trial with 2,623 undergraduates in 19 biology courses [1] | Perceived conflict, religion-evolution compatibility, evolution acceptance | Significant decreases in conflict, increases in compatibility and acceptance of human evolution compared to control [1] |
| Instructor Identity Effects | Same RCT comparing Christian vs. non-religious instructors [1] | Same as above | Christian and non-religious instructors equally effective except atheist students responded better to non-religious instructors for compatibility [1] |
| Comparison-Based Learning | Experiments with 4- to 8-year-olds learning animal adaptation (N=240) [14] | Memory and generalization of perceptual vs. relational information | Children struggled to generalize relational information immediately (β = -.496, p < .001) and over time; language prompts improved relational generalization (β = .236, p = .005) [14] |
| VIST Framework (Museums) | Evaluation of 12 natural history museums [12] | Teleological reasoning, understanding of natural selection | Focus on natural selection alone reinforced teleological thinking; visitors maintained "survival of the fittest" mentality and progressive evolution views [12] |

Conflict-reducing practices, which explicitly acknowledge that while conflict exists between certain religious beliefs and evolution, it is possible to believe in a higher power and accept evolution, have demonstrated particular effectiveness in randomized controlled designs [1]. These practices significantly improve outcomes for religious students without compromising scientific accuracy [1].

Experimental Protocols and Methodologies

Protocol: Assessing Intuitive Reasoning in Antibiotic Resistance Understanding

Research Objective: To investigate relationships between intuitive reasoning patterns and misconceptions of antibiotic resistance among undergraduate populations [11].

Participant Groups:

- Entering biology majors (EBM)

- Advanced biology majors (ABM)

- Non-biology majors (NBM)

- Biology faculty (BF) as reference [11]

Assessment Tool: Written assessment evaluating:

- Agreement with common misconceptions of antibiotic resistance

- Use of intuitive reasoning in written explanations

- Application of evolutionary knowledge to antibiotic resistance [11]

Coding Framework:

- Teleological statements: Coded for attribution of purpose or need as causal agent (e.g., "bacteria developed resistance to survive")

- Essentialist statements: Coded for assumptions of uniform population transformation (e.g., "the bacteria became resistant" without variation)

- Anthropocentric statements: Coded for human-centered analogies or attributions of human-like cognition [11]

Statistical Analysis: Acceptance of each misconception was tested for association with production of the hypothesized form of intuitive reasoning; all reported associations were significant at p ≤ 0.05 [11].
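The source does not name the specific tests used. As one plausible choice, the sketch below runs a Pearson chi-square test of independence on a 2×2 table that crosses agreement with a misconception against use of one intuitive reasoning form. The function name `chi_square_2x2` and all counts are hypothetical and are not taken from the cited study.

```python
import math

def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table of counts.

    table[i][j]: i = agrees with the misconception (0 = no, 1 = yes),
                 j = used the intuitive reasoning form (0 = no, 1 = yes).
    Returns (statistic, p_value) for 1 degree of freedom, without continuity correction.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (table[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-square survival function is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value

# Hypothetical counts, invented for illustration only.
counts = [
    [40, 10],  # disagrees with misconception: 40 without teleology, 10 with
    [15, 35],  # agrees with misconception:    15 without teleology, 35 with
]
stat, p = chi_square_2x2(counts)
print(f"chi2 = {stat:.2f}, p = {p:.3g}")
```

With small expected cell counts, an exact test would be a safer choice than the chi-square approximation used here.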

Protocol: Conflict-Reducing Practices Randomized Controlled Trial

Research Objective: To test the efficacy of conflict-reducing practices during evolution instruction in a randomized controlled design [1].

Participant Recruitment: 2623 undergraduate students enrolled in 19 biology courses across multiple states [1].

Randomization: Students randomly assigned to one of three conditions:

- Evolution video with no conflict-reducing practices

- Evolution video with conflict-reducing practices implemented by non-religious instructor

- Evolution video with conflict-reducing practices implemented by Christian instructor [1]

Conflict-Reducing Practices Implementation:

- Explicit statement that one can accept evolution and maintain religious faith

- Acknowledgement that multiple interpretations exist regarding evolution-religion relationship

- Avoidance of religion negativity or jokes about religious beliefs [1]

Outcome Measures:

- Perceived conflict between evolution and religion

- Perceived compatibility between evolution and religion

- Acceptance of human evolution [1]

Data Collection: Pre-post intervention assessments with validated instruments measuring outcome variables [1].
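The three-arm assignment described in this protocol can be sketched as a seeded shuffle followed by round-robin allocation. This is an illustration only: the source does not say how randomization was implemented (for example, whether it was stratified by course), and the condition labels and the function `assign_conditions` are hypothetical names.

```python
import random
from collections import Counter

# Hypothetical labels for the three conditions described in the protocol.
CONDITIONS = (
    "control_video",
    "conflict_reducing_nonreligious_instructor",
    "conflict_reducing_christian_instructor",
)

def assign_conditions(student_ids, seed=0):
    """Randomly assign students to the three conditions in near-equal numbers."""
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    # Deal shuffled students round-robin so group sizes differ by at most one.
    return {sid: CONDITIONS[i % len(CONDITIONS)] for i, sid in enumerate(shuffled)}

assignment = assign_conditions(range(2623), seed=42)
group_sizes = Counter(assignment.values())
print(group_sizes)
```

Recording the seed alongside the assignment table lets the allocation be audited later.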

Conceptual Framework: Relationship Between Intuitive Reasoning and Educational Outcomes

The diagram below illustrates the conceptual relationships between intuitive reasoning patterns, their cognitive characteristics, and the educational interventions that effectively address them.

Research Reagent Solutions: Key Assessment Tools and Analytical Approaches

Table 4: Essential Research Instruments for Studying Intuitive Conceptions

| Research Tool | Primary Function | Application Context | Key Features |

|---|---|---|---|

| Written Assessment Protocols [11] | Qualitative coding of intuitive reasoning | Analyzing student explanations of antibiotic resistance | Identifies teleological, essentialist, and anthropocentric reasoning patterns |

| MATE Instrument [13] [1] | Measure evolution acceptance | Pre-post testing for intervention efficacy | Validated instrument assessing agreement with core evolutionary principles |

| KEE Assessment [13] | Evaluate knowledge of evolution | Assessing conceptual understanding separate from acceptance | Tests core evolutionary concepts and mechanisms |

| DUREL Scale [13] | Measure religiosity | Examining religion-evolution conflict | Assesses organizational, non-organizational, and intrinsic religiosity |

| PsiPartition Tool [15] | Genomic data analysis for phylogenetic studies | Evolutionary relationships between species | Accounts for site heterogeneity in evolutionary rates; improves tree accuracy |

| Comparison-Based Learning Protocols [14] | Structural alignment assessment | Testing relational understanding in children | Examines generalization of perceptual vs. relational information |

These research tools enable rigorous investigation of intuitive conceptions and their impact on understanding evolutionary mechanisms. The written assessment protocols specifically allow researchers to identify and categorize intuitive reasoning patterns in qualitative responses [11], while standardized instruments like MATE and KEE provide quantitative measures of acceptance and knowledge [13]. Advanced computational tools like PsiPartition represent cutting-edge approaches to evolutionary analysis that can complement educational research by providing accurate phylogenetic frameworks [15].

Discussion: Implications for Research and Professional Practice

The persistence of intuitive conceptions presents significant challenges for evolution education, but evidence-based interventions demonstrate promising approaches for addressing these barriers. Conflict-reducing practices have proven particularly effective in randomized controlled trials, significantly decreasing perceived conflict between evolution and religion while increasing evolution acceptance [1]. The finding that both Christian and non-religious instructors can effectively implement these practices (with minor exceptions for atheist students) suggests broad applicability across educational contexts [1].

For researchers and drug development professionals, understanding these intuitive barriers has practical importance beyond education. Professionals who maintain essentialist thinking may struggle with population-based approaches to antibiotic resistance or cancer evolution, while those exhibiting teleological reasoning may misinterpret selective pressures in evolutionary dynamics [11]. Incorporating explicit instruction about multiple evolutionary forces—including stochastic processes like genetic drift—can counterbalance the overemphasis on natural selection that reinforces teleological thinking [12].

Future research should continue to develop and test interventions that specifically target the cognitive mechanisms underlying intuitive reasoning, particularly for professionals in biomedical fields where evolutionary thinking informs research approaches and therapeutic development. The integration of these evidence-based educational strategies into graduate and professional training represents a promising direction for enhancing evolutionary understanding across scientific disciplines.

Bridging the Gap Between Student Acceptance and Conceptual Understanding

A significant challenge in science education lies in the disconnect between a student's acceptance of evolutionary theory and their deep conceptual understanding of its mechanisms. While acceptance is a necessary first step, it does not automatically translate into the ability to apply evolutionary principles to solve novel problems or to integrate these concepts into a coherent scientific framework [13]. This gap is observed globally; for instance, in Ecuador, teachers demonstrate enthusiasm for evolution but lack clear knowledge of its foundational principles [13]. The efficacy of evolution education, therefore, depends on bridging this divide through pedagogical approaches that simultaneously address affective barriers, such as religiosity, and cognitive hurdles, such as counterintuitive concepts [16] [13]. This guide objectively compares the performance of different educational interventions, evaluating their success in fostering both acceptance and robust conceptual understanding based on current empirical evidence.

Comparative Analysis of Evolution Education Approaches

A growing body of research investigates the relationship between instructional methods and key educational outcomes in evolution, namely instructional time, classroom presentation of evolution as credible science, and topic emphasis. The table below synthesizes findings from a nationally representative survey of U.S. high school biology teachers, analyzing how specific types of pre-service coursework correlate with these outcomes [16].

Table 1: Impact of Pre-service Coursework on Evolution Teaching Practices

| Type of Pre-service Coursework | Instructional Time Devoted to Evolution | Classroom Characterization of Evolution/Creationism | Emphasis on Key Topics (e.g., Common Ancestry, Human Evolution) |

|---|---|---|---|

| Evolution-Focused Coursework | Significant positive association; more class hours devoted to evolution [16] | Positive association; evolution, not creationism, presented as scientifically credible [16] | Positive association; prioritization of common ancestry, human evolution, and the origin of life [16] |

| Coursework Containing Some Evolution | Not specified | Positive association; evolution presented as scientifically credible [16] | Positive association; prioritization of common ancestry [16] |

| Methods: Problem-Based Learning (PBL) | Not specified | Negative association; creationism presented as scientifically credible alongside evolution [16] | Negative association; prioritization of biblical perspectives [16] |

| Methods: Teaching Controversial Topics | Not specified | Negative association; creationism presented as scientifically credible [16] | Not specified |

The data reveal a clear trend: content-focused coursework in evolution is consistently associated with teaching practices that align with scientific consensus. In contrast, certain types of pedagogy-focused methods coursework, particularly those dealing with problem-based learning and teaching controversial topics, showed unexpected negative associations: teachers with this preparation were more likely to present creationism as scientifically credible [16]. Because these are survey associations rather than experimental effects, they do not establish causation, but they suggest that without a solid foundational knowledge of evolutionary theory, pedagogical strategies alone may be insufficient or even counterproductive.

Beyond teacher preparation, the learning environment itself is a critical variable. Research comparing contact (face-to-face) and online biology teaching reveals that each modality offers distinct advantages that can influence conceptual understanding [17].

Table 2: Comparative Analysis of Contact vs. Online Teaching Modalities in Biology

| Factor | Contact (Face-to-Face) Teaching | Online Teaching |

|---|---|---|

| Student Preferences | Problem-solving, direct teacher guidance, and a stimulating learning environment [17] | Low-stress lessons, interesting content, and room for independent work [17] |

| Inherent Strengths | Richer social interaction, immediate feedback, and easier maintenance of student concentration and motivation [17] | Flexibility, self-paced learning, constant access to materials, and development of digital skills [17] |

| Impact on Conceptual Understanding | More effective for developing conceptual understanding, particularly in tasks requiring knowledge integration and problem-solving [17] | Lower student performance on post-instruction assessments of conceptual understanding compared to contact teaching [17] |

These findings indicate that while online learning offers valuable flexibility, the structured, interactive environment of contact teaching may be more effective in promoting the deep cognitive engagement required for conceptual understanding in a complex subject like biology [17].

Experimental Protocols and Methodologies

National Survey on Teacher Preparation and Classroom Practices

The data presented in Table 1 come from a research design intended to isolate the associations between pre-service coursework and teaching practice [16].

- Objective: To investigate associations between types of pre-service coursework and teachers' attitudes and classroom practices regarding evolution.

- Methodology: A nationally representative probability survey of U.S. public high school biology teachers.

- Data Collection: Data were collected on seven categories of pre-service coursework (independent variables) and five categories of teaching attitudes and practices (dependent variables). The dependent variables included personal acceptance of evolution, perception of scientific consensus, instructional time devoted to evolution, classroom characterization of evolution and creationism, and emphasis on specific evolutionary topics.

- Analysis: Researchers conducted a series of regression analyses to isolate the effects of coursework preparation, controlling for potential confounding variables such as teacher seniority, gender, and the nature of their state’s science education standards. This robust statistical approach strengthens the validity of the findings by accounting for other factors that could influence teaching practices [16].

Comparative Study of Teaching Modalities

The comparative findings in Table 2 were derived from a large-scale study examining the effectiveness of different teaching modalities [17].

- Objective: To assess student performance and gather perceptions on the effectiveness of contact versus online biology teaching.

- Study Population and Period: Conducted in autumn 2021 with 3035 students, 124 biology teachers, and 719 parents.

- Methodology: The study combined a post-instruction assessment of student performance with questionnaires. Student assessments evaluated both knowledge reproduction and conceptual understanding.

- Analysis: A CHAID-based decision tree model was applied to questionnaire responses to investigate how various teaching-related factors influence the perceived understanding of biological content. This mixed-methods approach provided both quantitative performance data and qualitative insights from key stakeholders [17].

Visualizing Research Workflows and Conceptual Models

Experimental Workflow for Comparative Education Research

The following diagram visualizes the methodology for a comparative study of educational approaches, illustrating the process from participant recruitment to data synthesis.

Conceptual Model of Factors Influencing Evolution Acceptance

This diagram maps the key factors that influence the acceptance of evolutionary theory, as identified in cross-cultural research, and their interrelationships.

The Scientist's Toolkit: Key Research Reagents and Materials

The following table details essential "research reagents" — the core methodological components and tools — required for conducting rigorous research in evolution education.

Table 3: Essential Methodological Components for Evolution Education Research

| Research Component | Function in Evolution Education Research |

|---|---|

| Validated Survey Instruments (e.g., MATE, KEE) | Standardized tools like the Measure of Acceptance of the Theory of Evolution (MATE) and Knowledge of Evolution Exam (KEE) provide reliable, quantifiable metrics for cross-sectional and longitudinal studies of acceptance and understanding [13]. |

| Polygenic Indices (PGIs) | Used in gene-environment interaction studies, PGIs help quantify genetic predispositions for educational outcomes. This allows researchers to investigate how school quality can compensate for genetic disadvantages in learning [18]. |

| Value-Added Measures (VAM) of School Quality | These metrics, derived from administrative data, quantify a school's contribution to student learning outcomes, independent of student background. They are crucial for studying how institutional quality moderates other factors [18]. |

| CHAID Decision Tree Model | A statistical technique used to identify the most significant factors influencing an outcome (e.g., understanding). It is valuable for analyzing complex questionnaire data from multiple stakeholders (students, teachers, parents) [17]. |

| Mixed-Methods Research Framework | An approach that integrates quantitative data (e.g., test scores) with qualitative data (e.g., interviews, open-ended responses). This provides a more comprehensive understanding of both the "what" and "why" behind educational phenomena [17] [19]. |

Innovative Pedagogies in Action: From Active Learning to Digital Tools

This guide provides a comparative analysis of three prominent active learning approaches—Case Studies, Simulations, and Problem-Based Learning (PBL). It is designed to assist researchers and educators in selecting and implementing evidence-based pedagogies, with a specific focus on applications within evolution education.

Comparative Efficacy: Quantitative Outcomes

Extensive research demonstrates that active learning strategies consistently outperform traditional lecture-based methods. The table below summarizes key quantitative findings on the efficacy of different approaches.

Table 1: Comparative Quantitative Outcomes of Learning Approaches

| Learning Approach | Key Efficacy Metrics | Comparative Performance & Contextual Notes |

|---|---|---|

| Active Learning (Overall) | 54% higher test scores than traditional lectures [20]; 1.5x lower failure rate compared to lecture courses [20]; 33% reduction in achievement gaps on examinations [20] | A study of over 100,000 students is underway to further explore the interactive factors influencing efficacy [21]. |

| Case Method | Excels in developing strategic thinking and leadership judgment [22]; highly effective for decision-making under uncertainty and ethical reasoning [22] | Best for short-to-medium timeframes; ideal for executive education and analyzing ambiguous, high-stakes scenarios [22]. |

| Simulations & Gamification | Immerses learners in dynamic, real-world settings [23]; fosters strategic thinking, resilience, and decision-making skills [23] | Effective in high-stakes or fast-paced learning environments; allows for practical application of knowledge [23]. |

| Problem-Based Learning (PBL) | Drives development of critical thinking and self-directed learning [24]; promotes deeper, contextualized understanding of subject matter [24] | A long-term, process-oriented approach ideal for tackling open-ended, real-world problems [22] [24]. |

| AI-Powered Tutoring | Significant learning gains: students learned more in less time (median 49 min vs. 60 min) [25]; higher student engagement and motivation compared to in-class active learning [25] | A recent RCT found it outperformed in-class active learning, offering a scalable model for personalized instruction [25]. |

Experimental Protocols and Methodologies

Protocol: Randomized Controlled Trial (RCT) on AI Tutoring vs. Active Learning

A recent RCT at Harvard University provides a robust model for comparing innovative learning tools with established teaching methods [25].

- Objective: To measure differences in learning gains and student perceptions between an AI tutor and an in-class active learning lesson.

- Population: 194 undergraduate students in a physics course.

- Design: A crossover study where students were divided into two groups. Each group experienced both the AI tutor (at home) and the in-class active learning lesson (in person) for different topics over two consecutive weeks.

- Interventions:

- AI Tutor Group: Interacted with a custom-designed, generative AI-powered tutoring system. The system was engineered to adhere to pedagogical best practices, including facilitating active learning, managing cognitive load, and providing timely, adaptive feedback [25].

- In-Class Active Learning Group: Participated in a 75-minute instructor-led active learning session that incorporated peer instruction and small-group activities, based on the same core pedagogical principles as the AI tutor [25].

- Measures:

- Content Mastery: Identical pre-tests and post-tests were administered for each topic.

- Time on Task: Platform analytics tracked time spent for the AI group; in-class learning time was fixed at 60 minutes.

- Student Perceptions: Surveys measured engagement, enjoyment, motivation, and growth mindset on a 5-point Likert scale [25].

- Analysis: Linear regression was used, controlling for pre-test scores, prior physics proficiency, time on task, and other variables [25].
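To illustrate what "controlling for pre-test scores" means in such an analysis, the sketch below fits an ordinary least squares model of post-test score on pre-test score and a condition indicator. It is a simplified stand-in, not the study's model: it omits the other covariates listed above, the helper `ols` is a hypothetical name, and the six records are invented and deliberately noise-free (post = 2 + 0.5·pre + 1·AI) so that the recovered coefficients can be checked by hand.

```python
def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    X: list of rows, each already including a leading 1.0 for the intercept.
    Returns the list of coefficients. Intended for small, well-conditioned problems.
    """
    k = len(X[0])
    # Build the augmented matrix [X'X | X'y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

# Hypothetical, noise-free records: (pre_test, used_ai_tutor, post_test).
records = [(3, 0, 3.5), (5, 1, 5.5), (4, 0, 4.0), (6, 1, 6.0), (2, 1, 4.0), (7, 0, 5.5)]
X = [[1.0, pre, ai] for pre, ai, _ in records]
y = [post for _, _, post in records]
intercept, b_pre, b_ai = ols(X, y)
print(f"adjusted AI-tutor effect = {b_ai:.2f}")
```

The coefficient `b_ai` is the difference in post-test score between conditions for students with the same pre-test score, which is the quantity a covariate-adjusted comparison reports.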

Protocol: Implementing the Case Method

The case method is a well-established pedagogy for developing analytical and decision-making skills [22].

- Objective: To immerse students in a real-world business scenario and guide them through a structured analysis.

- Framework:

- Case Presentation: Learners are given a detailed, narrative case study describing a real or fictional dilemma faced by an organization [22].

- Individual Analysis: Learners analyze the data, context, and key players to identify the core problem [22].

- Option Weighing: Learners develop and evaluate multiple courses of action, considering trade-offs and potential outcomes [22].

- Recommendation & Defense: Learners make a final recommendation and justify their decision, often through discussion or debate [22].

- Instructor Role: Acts as a facilitator of dialogue, guiding discussion and challenging assumptions rather than lecturing [22].

Protocol: Implementing Problem-Based Learning (PBL)

PBL is a student-centered approach designed to foster inquiry and problem-solving skills [24].

- Objective: To engage students in solving an authentic, open-ended problem.

- Framework:

- Problem Introduction: Students are presented with a complex, ill-structured problem without a single correct solution [24].

- Knowledge Gap Identification: In small groups, students determine what they already know and what they need to learn to solve the problem [24].

- Self-Directed Research: Students independently research the identified learning gaps [24].

- Solution Application & Refinement: Groups apply their new knowledge to develop and refine a viable solution [24].

- Instructor Role: Acts as a facilitator or coach, providing resources and asking probing questions without giving direct answers [22] [24].

Workflow Visualization of Pedagogical Approaches

The following diagrams illustrate the logical workflows for two key active learning strategies.

Problem-Based Learning Workflow

Case Method Analysis Workflow

The Scientist's Toolkit: Key Research Reagents and Materials

Table 2: Essential Materials for Active Learning Implementation

| Item/Solution | Function in Educational Research |

|---|---|

| Validated Assessment Instruments | Quantify learning gains, conceptual understanding, and attitudinal shifts. Examples include the Measure of Acceptance of Theory of Evolution (MATE) and Knowledge of Evolution Exam (KEE) [13]. |

| Learning Management System (LMS) | Platforms like Canvas serve as the foundational infrastructure for deploying learning materials, collecting assignment data, and integrating with other tools [21]. |

| Generative AI Tutoring Platform | A system like Active L@S provides adaptive, personalized learning prompts and immediate feedback at scale, leveraging Large Language Models (LLMs) [21] [25]. |

| Classroom Observation Protocols | Standardized rubrics for quantifying the fidelity of implementation of active learning strategies (e.g., types and frequency of student-instructor interactions). |

| Student Perception Surveys | 5-point Likert scale questionnaires to measure self-reported engagement, motivation, enjoyment, and growth mindset [25]. |

| Data Analytics & NLP Tools | Machine learning and natural language processing techniques are used to analyze large-scale educational data, including written assignments and discussion board posts [21]. |

Evolution education presents a unique challenge for researchers and instructors, as it is a conceptually complex domain where students often grapple with persistent misconceptions and must integrate multiple key and threshold concepts into a coherent understanding [26]. The rise of digital learning environments has created new opportunities for capturing rich data on student learning processes, moving beyond simple fact-recall to assessing complex knowledge structures and their development over time [26] [27]. Two particularly powerful approaches for this are concept mapping and Learning Progression Analytics (LPA). Concept maps are node-link diagrams that allow students to visually represent their conceptual understanding, making their knowledge structures explicit and analyzable [26]. LPA is an emerging methodology that uses data from students' interactions with digital learning environments to trace their conceptual development along empirically validated learning progressions—descriptions of how understanding of a "big idea" typically develops under supportive educational conditions [27]. This guide provides a comparative analysis of these two approaches within the specific context of evolution education research, detailing their experimental applications, methodological considerations, and efficacy for assessing conceptual change.

Comparative Analysis: Concept Maps vs. Learning Progression Analytics

The table below provides a structured comparison of concept mapping and Learning Progression Analytics as applied to evolution education research.

Table 1: Comparison of Digital Assessment Approaches in Evolution Education

| Feature | Concept Mapping | Learning Progression Analytics (LPA) |

|---|---|---|

| Primary Function | Assess static and dynamic knowledge structures [26] | Trace progression along a hypothesized model of conceptual development [27] |

| Data Collected | Nodes (concepts), links (relationships), propositions; network metrics (e.g., average degree, number of edges) [26] | Process data from student interactions with digital tasks; performance on LP-aligned assessments [27] |

| Key Metrics | Similarity to expert maps, concept scores, number of nodes/links, average degree [26] | LP level attainment, evidence of knowledge integration, ability to explain phenomena [27] |

| Strengths | Visualizes student mental models; captures conceptual change over time; potential for automated analysis [26] | Provides a developmental model for instruction; can be automated for real-time feedback; links assessment to learning theory [27] |

| Limitations | Qualitative analysis is time-intensive; requires careful task design to be valid [26] | LPs are hypothetical and require extensive validation; performance assessments are resource-intensive to score [27] |

| Tech Dependencies | Digital concept mapping software | Digital learning environments; AI/Machine Learning for automated scoring [27] |

Supporting Experimental Data: A 2025 study on evolution learning collected five digital concept maps from each of 250 high school students over a ten-week unit. The analysis found that two quantitative metrics, the average degree (average number of connections per node) and the number of edges (connections), differed significantly between students with high, medium, and low learning gains at multiple measurement points. This suggests these metrics are promising for automated tracking of conceptual growth [26].
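The two metrics named above are straightforward to compute from a map's link list. The sketch below assumes each map is exported as pairs of linked concepts and treats links as undirected; the function `map_metrics` and the example map are hypothetical, and the cited study's exact data format is not described in the source.

```python
def map_metrics(edges):
    """Return node count, edge count, and average degree for an undirected concept map.

    edges: iterable of (concept_a, concept_b) pairs. Duplicate links, link direction,
    and self-links are ignored. Concepts with no links are not counted as nodes.
    """
    unique_edges = {frozenset(pair) for pair in edges if pair[0] != pair[1]}
    nodes = {concept for edge in unique_edges for concept in edge}
    n_nodes, n_edges = len(nodes), len(unique_edges)
    # Each undirected edge adds one to the degree of two nodes.
    avg_degree = 2 * n_edges / n_nodes if n_nodes else 0.0
    return {"nodes": n_nodes, "edges": n_edges, "average_degree": avg_degree}

# Hypothetical student map, invented for illustration.
student_map = [
    ("mutation", "variation"),
    ("variation", "natural selection"),
    ("natural selection", "adaptation"),
    ("genetic drift", "variation"),
]
metrics = map_metrics(student_map)
print(metrics)  # {'nodes': 5, 'edges': 4, 'average_degree': 1.6}
```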

Experimental Protocols for Evolution Education Research

Protocol for Digital Concept Mapping Studies

This protocol is adapted from a study investigating conceptual understanding of evolutionary factors [26].

Table 2: Key Reagents and Tools for Concept Mapping Research

| Research Reagent/Tool | Function in Experiment |

|---|---|

| Digital Concept Mapping Platform | Provides the interface for students to create, revise, and submit maps; enables digital data capture of nodes and links [26]. |

| Pre-defined Concept List | A standardized set of key concepts (e.g., mutation, natural selection, genetic drift) ensures all students are mapping the same core ideas, facilitating comparison [26]. |

| Expert Reference Map | A concept map created by a domain expert; serves as a benchmark for calculating similarity scores to an ideal knowledge structure [26]. |

| Network Analysis Software | Calculates quantitative metrics from the concept map data (e.g., number of nodes/links, average degree, centrality measures) [26]. |

| Conceptual Inventory | A standardized test (e.g., pre/posttest) to measure overall conceptual understanding and learning gains independently of the map analysis [26]. |
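The source does not specify how similarity to the expert reference map is scored. One simple possibility, shown here purely as an assumption, is the Jaccard index over the sets of linked concept pairs; `proposition_set`, `jaccard_similarity`, and both example maps are hypothetical.

```python
def proposition_set(edges):
    """Normalise (concept, concept) links into an order- and case-independent set."""
    return {frozenset((a.strip().lower(), b.strip().lower())) for a, b in edges}

def jaccard_similarity(student_edges, expert_edges):
    """Shared links divided by all distinct links across both maps (0.0 to 1.0)."""
    student = proposition_set(student_edges)
    expert = proposition_set(expert_edges)
    union = student | expert
    return len(student & expert) / len(union) if union else 1.0

# Hypothetical maps, invented for illustration.
expert_map = [
    ("mutation", "variation"),
    ("variation", "natural selection"),
    ("natural selection", "adaptation"),
    ("genetic drift", "allele frequency"),
]
student_map = [
    ("Variation", "Mutation"),
    ("natural selection", "adaptation"),
    ("mutation", "adaptation"),
]
similarity = jaccard_similarity(student_map, expert_map)
print(f"similarity to expert map = {similarity:.2f}")
```

This measure ignores link labels and direction, so a scoring scheme that evaluates the wording of each proposition would need a richer comparison.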

Methodology Details:

- Participant Recruitment & Grouping: Recruit a sample of students (e.g., N=250 high school students). Split them into comparison groups post-study based on their gain scores from a pre/post conceptual inventory [26].

- Pre-test & Orientation: Administer a conceptual inventory as a pre-test. Introduce students to the concept mapping tool and task, providing a list of core concepts to be used [26].

- Repeated Map Creation: Integrate concept mapping tasks at multiple points (e.g., five times) throughout a teaching unit on evolution. Students should revise and rework their previous maps at each stage [26].

- Data Extraction & Metric Calculation: For each submitted map, extract structural data. Calculate metrics for each student at each time point, including the number of nodes, the number of edges (links), the average degree, concept scores, and similarity to the expert reference map [26].

- Data Analysis: Use statistical tests (e.g., ANOVA) to analyze differences in map metrics (a) between consecutive measurement points to track overall progress, and (b) between the gain-score achievement groups (high/medium/low gain) at each measurement point to identify metrics that distinguish learning trajectories [26].
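As a minimal sketch of the between-group comparison in the final step, the code below computes a one-way ANOVA F statistic for one metric at one measurement point. The function `one_way_anova_f` and the average-degree values are hypothetical; obtaining a p-value requires the F distribution, for which a statistics package (for example, SciPy's `scipy.stats.f_oneway`) would normally be used.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over lists of values."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical average-degree values at one measurement point, by gain group.
high_gain = [2.4, 2.6, 2.8]
medium_gain = [2.0, 2.2, 2.4]
low_gain = [1.6, 1.8, 2.0]
f_stat, df_b, df_w = one_way_anova_f([high_gain, medium_gain, low_gain])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

Comparisons between consecutive measurement points involve the same students measured repeatedly, so a repeated-measures analysis would be more appropriate there than this independent-groups version.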

Diagram 1: Concept Mapping Experimental Workflow

Protocol for Learning Progression Analytics (LPA) Studies

This protocol outlines the LPA approach, which uses an evidence-centered design to automate the assessment of complex competencies [27].

Methodology Details:

- Define Hypothetical Learning Progression (LP): Establish a hypothesized model of how student understanding evolves for a specific evolutionary concept (e.g., natural selection). This LP should have multiple levels, from novice to expert-like understanding, describing the increasing sophistication of knowledge and practice integration [27].

- Design LP-Aligned Performance Assessments: Create tasks, often constructed-response (CR), that require students to apply their knowledge to explain evolutionary phenomena or solve problems. These tasks must be designed to provide evidence of a student's position on the LP [27].

- Develop Automated Scoring Models: Train machine learning (ML) or AI models using a large set of previously scored student responses. For early LP validation, unsupervised or semi-supervised ML can identify patterns. For advanced LPs, supervised ML and Generative AI (GAI) can be used for more accurate scoring [27].

- Implement in Digital Learning Environment: Deploy the LP-aligned assessments within a digital platform that can capture student interaction data and responses.

- Analyze Learning Pathways & Provide Feedback: The AI system scores student responses and places them on the LP. This data provides feedback to researchers and teachers on class-wide and individual student progress, enabling the study of learning pathways and the tailoring of instruction [27].
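Once responses have been scored, the final step reduces to bookkeeping over assigned levels. The sketch below assumes the scoring model has already placed each response on a three-level progression and shows how individual pathways and a class-wide distribution might be summarised. All names and records are hypothetical, and the automated scoring itself is not implemented here.

```python
from collections import Counter

# Hypothetical records: (student_id, time_point, LP level assigned by the scoring model).
scored = [
    ("s1", 1, 1), ("s1", 2, 2), ("s1", 3, 3),
    ("s2", 1, 1), ("s2", 2, 1), ("s2", 3, 2),
    ("s3", 1, 2), ("s3", 2, 2), ("s3", 3, 2),
]

def pathways(records):
    """Group LP levels by student, ordered by time point."""
    by_student = {}
    for student, _time_point, level in sorted(records, key=lambda r: (r[0], r[1])):
        by_student.setdefault(student, []).append(level)
    return by_student

def class_distribution(records, time_point):
    """Count how many students sit at each LP level at one time point."""
    return Counter(level for _, t, level in records if t == time_point)

paths = pathways(scored)
# Students whose final level is no higher than their first may need targeted support.
stalled = [student for student, levels in paths.items() if levels[-1] <= levels[0]]
print(paths)
print("stalled:", stalled)
print("levels at time 3:", dict(class_distribution(scored, 3)))
```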

Diagram 2: LPA Development and Validation Cycle

The Scientist's Toolkit: Key Reagents for Digital Assessment Research

Table 3: Essential Research Reagents and Digital Tools

| Item/Reagent | Function/Explanation |

|---|---|

| Validated Conceptual Inventory | A pre- and post-test to establish a baseline of understanding and measure overall learning gains, providing a criterion for validating other assessment methods [26]. |

| Hypothesized Learning Progression (LP) | The cognitive model against which student development is measured. It must be empirically validated for the specific topic (e.g., natural selection) [27]. |

| Digital Learning Platform (LMS) | Ecosystems like Google Classroom or Canvas are essential for delivering assessments, collecting interaction data, and managing the learning process [28] [29]. |

| Performance Assessments | Constructed-response tasks that require knowledge application, such as explaining evolutionary phenomena, which provide rich evidence for LP level diagnosis [27]. |

| Machine Learning (ML) Models | AI tools (supervised, unsupervised, generative) for automatically scoring complex student responses and diagnosing their LP level from performance data [27]. |

| Network Analysis Algorithms | Software scripts that calculate quantitative metrics (e.g., centrality, density) from digital concept maps to objectively evaluate knowledge structure complexity [26]. |

The integration of digital assessments like concept maps and LPA is transforming efficacy research in evolution education. Concept mapping offers a visual method for capturing and quantifying dynamic knowledge structures, with metrics like average degree and edge count showing particular promise for automated tracking of conceptual growth [26]. In parallel, LPA provides a theory-driven framework for understanding student learning as a developmental pathway, increasingly enabled by AI for scalable and timely assessment [27]. Whether to use one method or combine them depends on the research goals: concept maps are ideal for analyzing the structure of student knowledge at a granular level, while LPA is suited for tracking progression against a predefined model of increasing competency. Together, they allow researchers to move beyond traditional assessments and examine in depth how students learn, and often struggle with, the complex concepts of evolutionary biology.
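
As a minimal sketch of the structural metrics named above (node count, edge count, average degree, density), the following assumes a concept map exported as a list of undirected concept pairs. The function name and the toy map are hypothetical, and concepts with no links would not appear in such an edge list.

```python
from collections import defaultdict


def concept_map_metrics(edges):
    """Compute basic structural metrics for an undirected concept map.

    `edges` is an iterable of (concept_a, concept_b) pairs. Duplicate links
    and self-links are ignored; isolated concepts are not represented.
    """
    neighbors = defaultdict(set)
    for a, b in edges:
        if a == b:
            continue  # skip self-links
        neighbors[a].add(b)
        neighbors[b].add(a)

    n_nodes = len(neighbors)
    # Each undirected edge is stored twice, once per endpoint.
    n_edges = sum(len(v) for v in neighbors.values()) // 2
    avg_degree = 2 * n_edges / n_nodes if n_nodes else 0.0
    max_edges = n_nodes * (n_nodes - 1) / 2
    density = n_edges / max_edges if max_edges else 0.0
    return {
        "nodes": n_nodes,
        "edges": n_edges,
        "average_degree": avg_degree,
        "density": density,
    }


# Hypothetical student map with five concepts and four links.
student_map = [
    ("mutation", "variation"),
    ("variation", "selection"),
    ("selection", "adaptation"),
    ("genetic drift", "variation"),
]
metrics = concept_map_metrics(student_map)
print(metrics)
```

Computing the same dictionary for each of a student's successive maps gives a simple time series of structural growth that can be compared across groups.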

Adopting an Interdisciplinary, Trait-Centered Approach to Evolution

This guide compares the efficacy of different interdisciplinary approaches in evolution education and research, focusing on quantitative outcomes, experimental protocols, and practical implementation resources.

Comparative Efficacy of Interdisciplinary Approaches

The table below summarizes the performance of various interdisciplinary approaches based on key quantitative metrics from empirical studies.

Table 1: Comparative Performance of Interdisciplinary Evolution Approaches

| Approach Name | Core Interdisciplinary Elements | Key Efficacy Metrics | Reported Outcomes | Sample Size & Context |
|---|---|---|---|---|
| Digital Concept Mapping Learning Progression [26] | Biology Education, Learning Analytics, Network Science | Concept score similarity to expert maps, Number of nodes/edges, Average degree of concept network | Significant differences in average degree and number of edges between high/low gain students; maps showed significant development across measurement points [26]. | 250 high school students; 10-week hybrid teaching unit on evolutionary factors [26] |
| Interdisciplinary Evolution & Sustainability Course [30] | Evolutionary Biology, Sociology, Sustainability Science, Ethics | Change in evolution acceptance scores, Understanding of interdisciplinary application (survey & open-ended writing) | Increased student acceptance of evolutionary theory; expanded perspective on interdisciplinary application of evolutionary theory [30]. | Undergraduate non-science majors; 15-week semester course [30] |
| Evolutionary Sparse Learning (ESL-PSC) [31] | Computer Science, Machine Learning, Genomics, Phylogenetics | Model Fit Score (MFS), Predictive accuracy for convergent traits, Functional enrichment significance (e.g., for hearing genes) | Genetic models highly predictive of C4 photosynthesis; genes for echolocation enriched for hearing/sound perception functions [31]. | Proteome-scale analysis; 64-species alignment for C4 photosynthesis [31] |

Detailed Experimental Protocols

Protocol: Digital Concept Mapping for Learning Progression

This methodology assesses conceptual change in evolution understanding through repeated concept map creation [26].

- Procedure:

  - Pre-test: Administer a conceptual inventory before the teaching unit.

  - Intervention: Conduct a ten-week teaching unit on five factors of evolution (mutation, selection, genetic drift, etc.).

  - Repeated Mapping: Students create a total of five concept maps throughout the unit, repeatedly revising and reworking their previous maps.

  - Post-test: Administer the same conceptual inventory after the unit.

  - Data Extraction: For each student map, calculate quantitative metrics, including:

    - Structural Metrics: Number of nodes (concepts), number of edges (links), and average degree (average number of links per node).

    - Quality Metrics: Similarity to an expert-derived concept map and a "concept score" based on the use of key terms.

  - Group Analysis: Split students into three groups (high, medium, low) based on pre-to-post-test gain scores.

  - Statistical Comparison: Analyze differences in map metrics (a) between consecutive measurement points and (b) between the gain-score groups at each point.
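
The group-analysis step can be sketched as follows. The protocol does not state how the three groups are cut, so this example assumes raw gain (post minus pre) and a split into rank-based thirds; the student IDs and scores are hypothetical.

```python
def split_by_gain(scores):
    """Assign each student to a low, medium, or high gain group.

    `scores` maps student_id -> (pre_score, post_score). Students are ranked
    by raw gain and split into thirds by rank (an assumed cut rule). Ties in
    gain are broken by student_id, which is arbitrary.
    """
    gains = {sid: post - pre for sid, (pre, post) in scores.items()}
    ranked = sorted(gains, key=lambda sid: (gains[sid], sid))
    n = len(ranked)
    groups = {}
    for rank, sid in enumerate(ranked):
        if rank < n / 3:
            groups[sid] = "low"
        elif rank < 2 * n / 3:
            groups[sid] = "medium"
        else:
            groups[sid] = "high"
    return groups


# Hypothetical pre/post conceptual inventory scores.
scores = {
    "s1": (10, 12),
    "s2": (8, 15),
    "s3": (12, 13),
    "s4": (9, 14),
    "s5": (11, 11),
    "s6": (7, 16),
}
groups = split_by_gain(scores)
print(groups)
```

Raw gain favors students who start low; a normalized gain or residualized score may be preferable depending on the study design, which the source does not specify.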

Protocol: Interdisciplinary Course on Evolution and Sustainability

This protocol evaluates the impact of an interdisciplinary course on evolution acceptance and application understanding [30].

- Procedure:

  - Course Design: Structure a 15-week course around three interdisciplinary modules:

    - Module 1: Honey bee biology and evolution, featuring hands-on beekeeping and DNA analysis.

    - Module 2: Native plants and community education on sustainability, involving habitat restoration proposals.

    - Module 3: Evolution of human behavior and subjective free will, with ties to sustainability implications.

  - Core Readings: Use interdisciplinary texts, including Darwin's original works, David Sloan Wilson's "Evolution for Everyone," and academic articles on module topics.

  - Major Assignments: Implement group and individual projects, such as research proposals and community engagement plans.

  - Assessment:

    - Evolution Acceptance: Measure changes using a standardized instrument.

    - Learning Objectives: Assess basic knowledge of evolutionary theory and ability to apply it to other disciplines via group and individual assignments.

    - Interdisciplinary Perspective: Use closed-ended survey questions and analysis of open-ended writing to gauge students' understanding of interdisciplinary application.
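
One way to quantify the pre/post change in evolution acceptance described in this protocol is a paired mean difference with a standardized effect size. The sketch below assumes each student completes the same instrument twice and uses Cohen's d for paired data (mean difference divided by the standard deviation of the differences); the scores are hypothetical and the source does not report which statistic was used.

```python
from statistics import mean, stdev


def paired_change(pre, post):
    """Summarize pre-to-post change for paired acceptance scores.

    Returns the mean change, the standard deviation of the individual
    changes, and d_z (mean change divided by that standard deviation).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [after - before for before, after in zip(pre, post)]
    sd = stdev(diffs)
    d_z = mean(diffs) / sd if sd > 0 else float("nan")
    return {"mean_change": mean(diffs), "sd_change": sd, "d_z": d_z}


# Hypothetical acceptance scores for five students.
pre_scores = [60, 72, 55, 80, 68]
post_scores = [66, 75, 63, 82, 74]
summary = paired_change(pre_scores, post_scores)
print(summary)
```

Without a comparison group, a positive change cannot be attributed to the course alone, so the effect size is best read as descriptive.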

Protocol: Evolutionary Sparse Learning with Paired Species Contrast (ESL-PSC)

This computational method builds predictive genetic models for convergent trait evolution [31].

- Procedure:

  - Data Selection (PSC Design):

    - Identify a balanced set of species: an equal number of trait-positive (e.g., echolocating) and trait-negative (e.g., non-echolocating) species.

    - Pair each trait-positive species with a closely related trait-negative species.

    - Ensure evolutionary independence between all pairs (the Most Recent Common Ancestor of one pair is not an ancestor of any other pair).

  - Sequence Alignment: Compile a multiple sequence alignment of protein sequences for all selected species.

  - Model Building (Sparse Learning):

    - Use Evolutionary Sparse Learning (ESL), specifically a Sparse Group LASSO model.

    - The algorithm regresses species' trait status (+1/-1) against the presence/absence of every possible amino acid residue in the alignment.

    - A sparsity penalty is applied to include only the most predictive sites and genes in the final model, preventing overfitting.

  - Model Evaluation: Select the optimal model using a Model Fit Score (MFS), analogous to a Brier score.

  - Validation:

    - Prediction: Test the model's ability to predict trait status in species not used in model building.

    - Functional Enrichment: Perform Gene Ontology (GO) enrichment analysis on the genes included in the model to test for biological relevance to the trait.
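
Two pieces of this protocol can be illustrated without the sparse learning step itself: the presence/absence encoding of residues that the regression operates on, and a Brier score as the kind of quantity the MFS is said to resemble. The sketch below is not the ESL-PSC implementation. The species names and sequences are toy values, the exact MFS formula is not given in the source, and dropping invariant columns is an assumed simplification.

```python
def one_hot_alignment(alignment):
    """Encode an alignment as residue presence/absence features.

    `alignment` maps species name -> aligned protein sequence of equal length.
    Each feature is a (position, residue) pair; a species gets 1 if it carries
    that residue at that position, else 0. Invariant columns and gap
    characters are skipped because they cannot separate the two trait classes.
    """
    species = sorted(alignment)
    length = len(alignment[species[0]])
    if any(len(alignment[s]) != length for s in species):
        raise ValueError("aligned sequences must have equal length")

    features = []
    for pos in range(length):
        residues = sorted({alignment[s][pos] for s in species} - {"-"})
        if len(residues) > 1:
            features.extend((pos, r) for r in residues)

    rows = {
        s: [1 if alignment[s][pos] == r else 0 for pos, r in features]
        for s in species
    }
    return features, rows


def brier_score(predicted_probs, outcomes):
    """Mean squared difference between predicted probabilities and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(predicted_probs, outcomes)) / len(outcomes)


# Toy paired design: two trait-positive species, each with a close trait-negative relative.
toy_alignment = {
    "bat_echo": "MKTA",
    "bat_non": "MKSA",
    "whale_echo": "MRTA",
    "whale_non": "MRSA",
}
features, rows = one_hot_alignment(toy_alignment)
score = brier_score([0.9, 0.2, 0.8, 0.1], [1, 0, 1, 0])
print(features, rows["bat_echo"], score)
```

In the toy data, position 2 separates trait-positive from trait-negative species in both pairs, while position 1 only tracks lineage; a sparsity penalty is meant to keep features of the first kind and discard the second.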

Workflow and Relationship Visualizations

ESL-PSC Research Workflow

Interdisciplinary Education Research Framework

The Scientist's Toolkit: Key Research Reagents & Materials

Table 2: Essential Reagents and Materials for Interdisciplinary Evolution Research

| Item Name | Function/Application | Representative Use Case |
|---|---|---|
| Digital Concept Mapping Software | Allows students/researchers to create and revise node-link diagrams representing their conceptual knowledge. Enables quantitative extraction of network metrics [26]. | Assessing conceptual change and knowledge integration in evolution education studies [26]. |
| Standardized Evolution Acceptance Instrument | A validated survey tool to quantify an individual's acceptance of evolutionary theory, allowing for pre/post-intervention comparison [30]. | Measuring the impact of an interdisciplinary course on student attitudes toward evolution [30]. |
| Paired Species Contrast (PSC) Dataset | A curated set of genomic or proteomic sequences from trait-positive and closely related trait-negative species, structured to ensure phylogenetic independence [31]. | Building predictive genetic models for convergent traits like C4 photosynthesis or echolocation using ESL-PSC [31]. |
| Evolutionary Sparse Learning (ESL) Algorithm | A supervised machine learning method (Sparse Group LASSO) that identifies a minimal set of genomic features predictive of a trait from sequence alignments [31]. | Identifying genes and sites associated with independent origins of complex traits [31]. |
| Cryopreserved Fossil Record | Samples (e.g., microbial populations) stored at ultra-low temperatures throughout a long-term experiment, creating a living archive of evolutionary history [32]. | Resurrecting ancestral states in long-term evolution experiments (LTEE) to retrospectively test evolutionary hypotheses [32]. |

Integrating AI and Personalized Learning Technologies for Adaptive Feedback

The integration of artificial intelligence (AI) into educational technologies has catalyzed a significant shift from standardized instruction to personalized learning experiences. In scientific and professional education specifically, adaptive learning systems have emerged as tools that dynamically adjust educational content and feedback based on individual learner performance and needs. These technologies are particularly relevant for researchers, scientists, and drug development professionals who require efficient, effective continuing education in rapidly evolving fields. By leveraging machine learning algorithms and data analytics, these systems can provide tailored educational pathways that respond in real time to learner interactions, creating a customized learning environment that traditional one-size-fits-all approaches cannot match [33].

The distinction between adaptive and personalized learning matters when evaluating their efficacy. Adaptive learning is a technology-driven, algorithm-controlled method that adjusts educational content in real time based on learner performance metrics. In contrast, personalized learning is a broader, human-guided approach that tailors educational experiences to individual goals, preferences, and styles, often incorporating instructor curation [34]. When these approaches converge with AI technologies, the resulting systems can deliver adaptive feedback that is both immediate and highly specific to individual learning gaps and progression patterns. This synthesis is a significant advance in educational technology, particularly for complex scientific domains where precision and accuracy are paramount.

Comparative Performance Data: Quantitative Analysis of AI-Driven Learning Technologies

The efficacy of AI-integrated learning technologies is supported by substantial empirical evidence across multiple educational contexts. The following tables summarize key quantitative findings from recent research and implementation studies.

Table 1: Learning Outcome Improvements with AI-Powered Adaptive Technologies

| Metric | Improvement | Context | Source |
|---|---|---|---|
| Test scores | 54% higher | AI-enhanced active learning vs. traditional environments | [35] |
| Knowledge retention | 30% improvement | Personalized AI learning vs. traditional approaches | [35] |
| Learning efficiency | 57% increase | AI-powered corporate training | [35] |
| Course completion | 70% better rates | AI-personalized learning vs. traditional approaches | [35] |
| Feedback speed | 10 times faster | AI-powered assessment vs. traditional methods | [35] |
| Student motivation | 75% vs. 30% in traditional | Personalized AI learning environments | [36] |

Table 2: Implementation Metrics for Adaptive Learning Technologies

| Metric | Finding | Context | Source |
|---|---|---|---|
| AI adoption in education | 86% of institutions | Highest adoption rate of any industry | [35] |
| Teacher time savings | 44% reduction | Using AI for administrative tasks | [35] |
| Market growth | 46% increase (2024-2025) | Global AI in education market | [35] |
| Student usage | 89% of students | Using ChatGPT for homework assignments | [35] |
| Engagement generation | 10 times more engagement | AI-powered active learning vs. passive methods | [35] |
| Reduction in dropout rates | 15% decrease | Schools implementing AI early warning systems | [35] |

A recent meta-analysis of personalized technology-enhanced learning (TEL) in higher education contexts provides further evidence for the effectiveness of these approaches. The analysis found that personalized TEL can improve students' cognitive skills and non-cognitive characteristics with a medium effect size, and that research setting, delivery mode, and modelled factors influence the effect on non-cognitive traits [37]. This meta-analysis underscores the potential of adaptive learning technologies to address multiple dimensions of the learning process simultaneously.

Experimental Protocols and Methodologies

Protocol 1: Implementation of Closed-Loop Adaptive Learning Systems

The foundational architecture for most adaptive learning systems follows a closed-loop feedback mechanism that continuously responds to learner inputs [33]. The implementation protocol involves four key phases: