Metacognitive Exercises for Evolution Education: Enhancing Conceptual Understanding for Research and Clinical Applications

Abstract

This article provides a comprehensive framework for implementing metacognitive exercises in evolution education, tailored for researchers, scientists, and drug development professionals. It explores the foundational theory of metacognition as a tool to overcome persistent intuitive conceptual barriers, such as essentialism, that hinder the deep understanding of evolutionary principles. The content outlines practical, evidence-based methodologies for classroom and laboratory application, addresses common cognitive and motivational challenges like mental load and self-efficacy, and reviews validation studies demonstrating improved conceptual knowledge and self-regulatory accuracy. By fostering metaconceptual awareness, this approach aims to enhance scientific reasoning and its application in biomedical research, including antimicrobial resistance and cancer evolution.

The Foundational Role of Metacognition in Overcoming Evolutionary Misconceptions

Defining Metacognitive and Metaconceptual Thinking in Science Education

Within science education, fostering higher-order thinking is paramount for developing competent future scientists. Metacognitive thinking refers to the awareness and control of one's own learning processes [1]. In a scientific context, this involves a researcher's ability to plan their investigation strategy, monitor their comprehension during experimentation, and evaluate their problem-solving effectiveness [2] [3]. Metaconceptual thinking, by extension, involves explicit awareness and control of one's conceptual understanding, which is crucial for mastering complex scientific theories and models [4]. These cognitive skills are particularly vital in evolution education, where overcoming deeply rooted misconceptions requires learners to not only grasp new concepts but also to consciously restructure their existing conceptual frameworks.

The distinction between metacognitive knowledge (awareness) and metacognitive regulation (control) provides a critical framework for understanding these processes [1] [5]. Metacognitive knowledge encompasses what individuals know about their own cognitive processes, different learning strategies, and the demands of a specific task [5]. Metacognitive regulation involves the active management of one's cognitive processes through planning, monitoring, and evaluating learning activities [2]. For evolution education, this means students must develop awareness of their own conceptual models of evolutionary processes and learn to regulate their understanding as they encounter new evidence.

Quantitative Assessment Data in Science Education

Table 1 summarizes key metrics and assessment methodologies used to evaluate metacognitive and metaconceptual processes in science education research. These quantitative tools provide researchers with empirical data on the effectiveness of educational interventions.

Table 1: Quantitative Assessment Methods for Metacognitive and Metaconceptual Processes

| Assessment Method | Measured Construct | Key Metrics | Typical Results in Intervention Studies |
|---|---|---|---|
| Metacognitive Sensitivity Tasks [1] | Ability to discriminate correct from incorrect judgements | Meta-d'/d' ratio; Confidence-accuracy correlation | Post-intervention ratios show 0.1-0.3 increase, indicating improved self-monitoring [1] |
| Pre/Post Conceptual Assessments [6] | Conceptual knowledge and restructuring | Concept inventory scores; Misconception frequency | Significant gains (effect sizes 0.4-0.7) in conceptual understanding after meta-learning interventions [6] |
| Strategy Use Inventories [5] [3] | Application of metacognitive strategies | Self-reported frequency; Strategy variety | Increased report of planning and monitoring strategies; higher strategy diversity correlates with better performance (r ≈ 0.35) [5] |
| Think-Aloud Protocols [7] [8] | Online cognitive processing | Instances of self-correction; Question generation | 40-60% more monitoring statements and conceptual queries in intervention groups [7] |
| Exam Wrappers & Reflection [3] | Learning self-evaluation | Error pattern recognition; Study plan adaptation | 75% of students demonstrate improved study strategy adaptation after repeated use [3] |

Experimental Protocols for Evolution Education Research

Protocol: Eliciting Metaconceptual Reflection Through Conceptual Models

This protocol is designed to explicitly trigger metaconceptual thinking by having students externalize and reflect on their mental models of natural selection.

Application Context: Suitable for undergraduate evolution courses after initial instruction on natural selection mechanisms.

Materials:

- Whiteboards or large drawing paper

- Markers of various colors

- Pre-defined evolutionary scenarios (e.g., antibiotic resistance, beak adaptation)

- Pre- and post-intervention concept inventories

Procedure:

- Pre-Assessment: Administer a concept inventory (e.g., Conceptual Inventory of Natural Selection) to establish a baseline [6].

- Model Elicitation: In small groups, provide students with an evolutionary scenario. Instruct them to collaboratively draw a detailed conceptual model explaining the process, using arrows and labels to denote causal relationships [8].

- Think-Aloud Modeling: The instructor models a think-aloud while drawing a sample model for a different scenario, verbalizing their conceptual reasoning (e.g., "I'm showing variation here because that's the raw material for selection...") [7] [9].

- Peer Explanation and Challenge: Groups present their models to the class. The audience is required to ask clarifying questions that probe conceptual understanding (e.g., "Why did you show the environment directly causing a trait?") [7].

- Expert Model Comparison: Provide students with a scientifically accurate model of the same scenario. Guide a structured comparison with their own model, focusing on key conceptual differences.

- Model Revision: Groups revise their original models based on new insights and discussions.

- Metaconceptual Journaling: Individually, students write a reflection addressing: "How did my understanding of the concept of natural selection change during this activity? Which of my ideas were confirmed, and which were challenged?" [3].

- Post-Assessment: Re-administer the concept inventory after a delay (e.g., 2-3 weeks) to measure conceptual change retention.
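The pre/post comparison in this protocol can be summarized with a paired effect size and a class-average normalized gain. The sketch below is one way to compute both, assuming scores are counts of correct items out of a hypothetical 20-item inventory; the sample scores and function names are invented for illustration. Note that the paired d_z it returns is scaled by the SD of the gains, so it is not directly comparable to between-group effect sizes such as those quoted in Table 1.

```python
"""Pre/post concept-inventory summary (illustrative sketch).

Assumptions: each student has a baseline score and a delayed post-test score,
both counted out of MAX_SCORE items. MAX_SCORE and the sample data are
hypothetical.
"""
from statistics import mean, stdev

MAX_SCORE = 20  # hypothetical number of inventory items


def paired_cohens_d(pre, post):
    """Cohen's d_z: mean paired gain divided by the SD of the paired gains."""
    diffs = [b - a for a, b in zip(pre, post)]
    return mean(diffs) / stdev(diffs)


def normalized_gain(pre, post, max_score=MAX_SCORE):
    """Class-average normalized gain: (post - pre) / (max - pre), on mean scores."""
    pre_mean, post_mean = mean(pre), mean(post)
    return (post_mean - pre_mean) / (max_score - pre_mean)


if __name__ == "__main__":
    pre = [8, 10, 7, 12, 9, 11]     # hypothetical baseline scores
    post = [12, 13, 9, 15, 12, 14]  # hypothetical delayed post-test scores
    print(f"d_z = {paired_cohens_d(pre, post):.2f}")
    print(f"<g> = {normalized_gain(pre, post):.2f}")
```

The normalized gain expresses improvement as a share of the room left above each cohort's baseline, which makes cohorts with different starting scores easier to compare.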

Protocol: Metacognitive Judgement Training for Problem-Solving

This protocol adapts cognitive neuroscience methods to train and assess students' ability to monitor their own understanding during evolutionary problem-solving [1].

Application Context: Can be integrated into weekly problem-solving sessions in an evolution course.

Materials:

- Set of evolutionary problems of varying difficulty

- Confidence rating scales (e.g., 0-100% sure)

- Software for data collection (e.g., online surveys that record response and confidence)

Procedure:

- Baseline Calibration: Students complete a set of problems, providing an answer and a confidence rating for each. Calculate their Brier scores and meta-d'/d' ratios to establish a baseline metacognitive efficiency [1].

- Explicit Instruction: Teach students about the concepts of metacognitive monitoring (judging your learning) and control (regulating your learning) [1] [5].

- Guided Practice with Feedback: Students solve problems in class. After each solution but before feedback, they must rate their confidence in the answer on the same scale used at baseline.

- Distractor Analysis: For incorrect answers with high confidence, guide students to analyze the source of their error (e.g., misapplication of a principle, reliance on a misconception) [9].

- Strategy Development: Students maintain a "strategy journal" where they document which problem-solving approaches (e.g., drawing a population diagram, identifying selective pressure) were most effective for different problem types [7] [9].

- Post-Training Assessment: Repeat the baseline calibration phase with new problems. Analyze changes in metacognitive efficiency (meta-d'/d') and the correlation between confidence and accuracy.

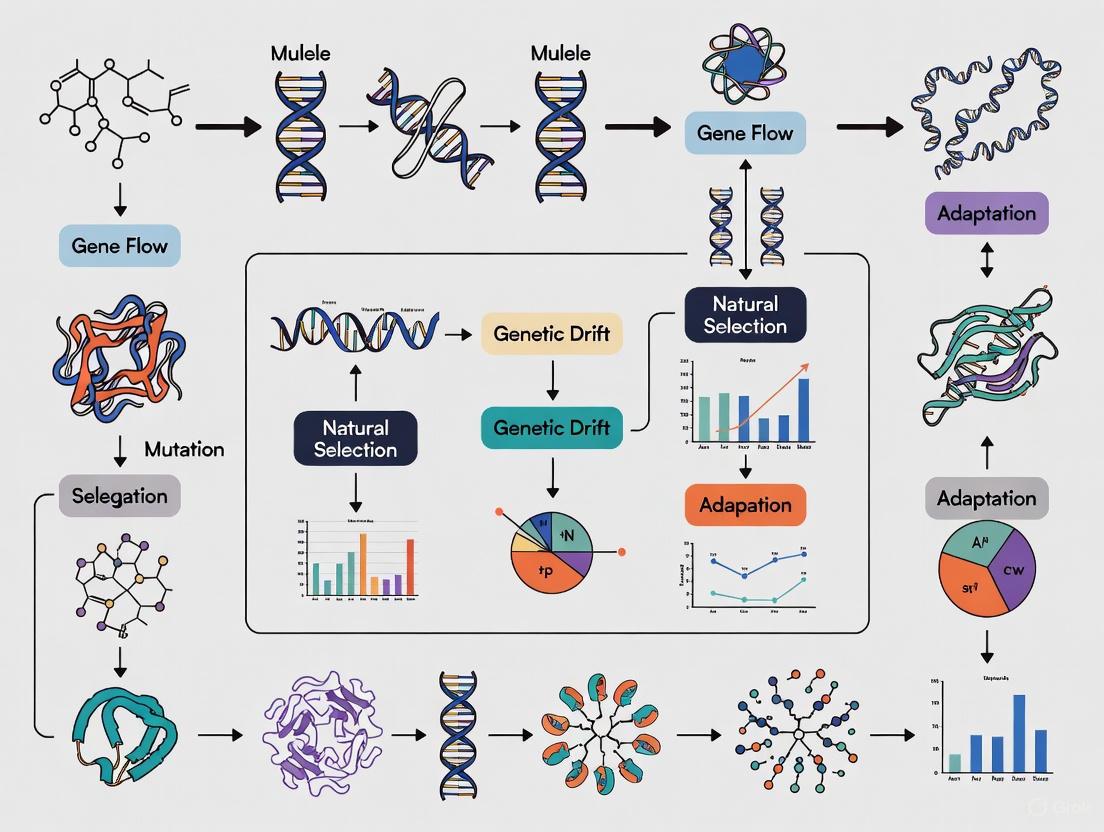

Visualizing the Metaconceptual Change Process

The following diagram illustrates the iterative cognitive process a learner undergoes during metaconceptual change, particularly when confronting and restructuring scientific misconceptions.

The Researcher's Toolkit: Key Reagents and Instruments

Table 2 catalogs essential "research reagents" — the validated instruments and tools — for conducting rigorous research on metacognition and metaconceptual change in science education.

Table 2: Research Reagent Solutions for Metacognition and Metaconceptual Studies

| Tool/Reagent Name | Function in Research | Application Context | Key Considerations |
|---|---|---|---|
| Meta-d' / d' Analysis [1] | Quantifies metacognitive sensitivity independent of task performance. | Ideal for controlled experiments on monitoring skills using 2-AFC tasks on scientific content. | Requires specialized model-fitting code (e.g., in MATLAB); estimates become unreliable with few trials. |
| Concept Inventories (CIs) [6] | Measures specific conceptual understanding and identifies prevalent misconceptions. | Essential pre/post tool for studies on conceptual change (e.g., Evolution CI, Genetics CI). | Must ensure alignment between CI content and intervention content. |
| Structured Reflection Prompts [2] [3] | Elicits metacognitive knowledge and regulatory processes. | Can be embedded in courses as "exam wrappers" or journaling prompts for qualitative analysis. | Coding responses requires inter-rater reliability checks; can be time-consuming. |
| Think-Aloud Protocol Guides [7] [5] | Captures real-time cognitive and metaconceptual processes during problem-solving. | Used for in-depth qualitative analysis of reasoning pathways and monitoring instances. | Requires audio/video recording and transcription; data analysis is resource-intensive. |
| Motivated Strategies for Learning Questionnaire (MSLQ) | Assesses students' self-regulated learning strategies and motivational orientations. | Provides complementary data on motivational factors influencing metacognitive strategy use. | A self-report instrument; best used in conjunction with performance-based measures. |

The explicit integration of metacognitive and metaconceptual thinking into evolution education represents a powerful approach for achieving deep, conceptual learning. The protocols and tools detailed herein provide a framework for researchers to systematically investigate and foster these critical competencies. By focusing on how students think about and regulate their understanding of evolutionary concepts—moving beyond mere content delivery—educators can empower learners to restructure their knowledge frameworks, overcome persistent misconceptions, and cultivate the lifelong learning skills essential for scientific literacy. Future research should continue to refine these assessment methodologies and explore their longitudinal impact on students' abilities to navigate complex scientific information.

Essentialism as a Primary Epistemological Obstacle in Evolution Education

The theory of evolution by natural selection represents a foundational yet challenging concept in biological education. Despite its central unifying role in the life sciences, many students struggle to achieve a conceptual understanding of evolutionary principles [10]. A significant body of research indicates that this difficulty stems not only from the conceptual complexity of evolution but also from deeply ingrained epistemological obstacles, among which psychological essentialism stands as a primary barrier [11] [10].

Essentialism constitutes a pre-scientific cognitive default that leads students to view species as discrete, unchanging categories defined by underlying essences rather than as populations of variable individuals connected by common descent [11]. This intuitive biological thinking directly contradicts the fundamental tenets of evolutionary theory, creating robust misconceptions that persist even after formal instruction. This article explores the manifestations of essentialist reasoning in evolution education and provides detailed protocols for metacognitive interventions designed to explicitly address these barriers through targeted cognitive training.

Theoretical Framework: Essentialism as an Epistemological Obstacle

Cognitive Foundations of Essentialist Reasoning

Psychological essentialism is an intuitive cognitive bias in which individuals perceive category members as sharing an underlying, immutable essence that determines their identity and observable properties [11]. In biological contexts, this manifests through several key characteristics:

- Belief in species immutability: The assumption that species boundaries are fixed and absolute, preventing conceptualization of evolutionary transitions [11]

- Discontinuity thinking: Difficulty recognizing relationships among species and variation within species due to perceived categorical boundaries [11]

- Teleological explanations: Tendency to attribute evolutionary changes to purposeful mechanisms or inherent needs rather than to natural selection acting on undirected variation [10]

This essentialist bias functions as an epistemological obstacle because it represents a way of thinking that is functionally adaptive in everyday contexts but fundamentally misaligned with evolutionary biology's core principles [11]. Essentialist thinking provides cognitive shortcuts for rapid category-based reasoning but becomes counterproductive when learning population thinking and common descent.

Interaction with Other Cognitive Biases

Essentialism rarely operates in isolation but interacts with other cognitive obstacles in evolution education. Research indicates it frequently co-occurs with:

- Teleological reasoning: The tendency to explain natural phenomena by reference to purpose or design [11] [10]

- Existential anxieties: Concerns related to identity, mortality, and meaning that evolution can evoke [10]

- Analogous obstacles in social sciences: Resistance to evolutionary explanations in human behavior studies [11]

Table 1: Primary Cognitive Biases in Evolution Education

| Bias Type | Definition | Manifestation in Evolution |
|---|---|---|
| Essentialism | Belief in fixed, underlying essences defining categories | Inability to conceptualize speciation and within-species variation |
| Teleology | Explanation by reference to purpose or end-goals | "Giraffes got long necks to reach high leaves" |
| Existential Resistance | Anxiety triggered by implications of evolutionary theory | Discomfort with human-animal continuity and mortality |

Metacognitive Protocols for Addressing Essentialism

Metacognition—the awareness and regulation of one's cognitive processes—provides a powerful framework for addressing essentialist obstacles [1]. The following protocols employ metacognitive strategies to help students recognize and override intuitive essentialist reasoning.

Protocol 1: Essentialism Identification Exercise

Objective: To develop students' awareness of their own essentialist thinking patterns when reasoning about biological categories.

Materials:

- Species transition sets (images showing intermediate forms)

- Concept mapping software or physical manipulatives

- Reflective journal template

Procedure:

- Pre-assessment: Present students with transitional sequences (e.g., theropod dinosaur → Archaeopteryx → modern bird) and ask them to categorize each adjacent pair as the "same kind" or a "different kind" of organism

- Think-aloud protocol: Students verbalize their reasoning while making categorization decisions, with prompts to identify "defining features"

- Pattern recognition: Guide students to identify moments when they appealed to "essences" or "defining traits" in their reasoning

- Contrastive cases: Present ring species or other boundary-challenging examples that defy essentialist categorization

- Metacognitive reflection: Students complete journal responses identifying instances where their intuitive categorization conflicted with evolutionary relationships

Implementation Context: This protocol fits well within introductory evolution modules, requiring 45-60 minutes for full implementation. The exercise can be adapted for both undergraduate and advanced high school levels.

Protocol 2: Variation Mapping for Population Thinking

Objective: To counteract essentialist discreteness by visualizing continuous variation within populations.

Materials:

- Quantitative trait datasets (e.g., beak depth, limb length)

- Data visualization tools (physical or digital)

- Statistical analysis software (optional)

Procedure:

- Trait selection: Identify 3-5 measurable traits within a single species

- Data collection: Students measure traits from sample images or real specimens

- Distribution visualization: Create frequency histograms for each trait, emphasizing continuous variation

- Between-species comparison: Repeat for related species to show overlapping trait distributions

- Metacognitive discussion: Facilitate reflection on how visualizing continuous variation challenges essentialist boundaries

Table 2: Sample Trait Variation Data Template

| Specimen ID | Trait 1 Measurement | Trait 2 Measurement | Trait 3 Measurement | Classification Attempt |
|---|---|---|---|---|
| SP-01 | 12.5 mm | 24.8 mm | 8.3 mm | Species A |
| SP-02 | 13.1 mm | 25.2 mm | 8.1 mm | Species A/B? |
| SP-03 | 14.2 mm | 26.7 mm | 8.9 mm | Species B |
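The distribution-visualization and between-species comparison steps can be run on data in the template's format. The sketch below assumes one list of measurements (in mm) per species and an instructor-chosen bin width; the sample values and function names are hypothetical. It prints text histograms (including empty bins, so gaps and continuity are both visible) and estimates distribution overlap as the histogram-based overlapping coefficient: the sum, over shared bins, of the smaller relative frequency.

```python
"""Histogram-based variation mapping (illustrative sketch).

Assumptions: measurements are in mm, one list per species, and the bin width
is chosen by the instructor; the sample values below are hypothetical.
Overlap is the histogram estimate of the overlapping coefficient
(0 = no shared bins, 1 = identical distributions).
"""
import math
from collections import Counter


def bin_counts(values, bin_width):
    """Count measurements per bin; keys are integer bin indices."""
    return Counter(math.floor(v / bin_width) for v in values)


def overlap_coefficient(a, b, bin_width):
    """Shared area of the two relative-frequency histograms."""
    ca, cb = bin_counts(a, bin_width), bin_counts(b, bin_width)
    return sum(min(ca[i] / len(a), cb[i] / len(b)) for i in ca.keys() & cb.keys())


def print_histogram(values, bin_width, label):
    """Text histogram with one '#' per specimen; empty bins are shown too."""
    print(label)
    counts = bin_counts(values, bin_width)
    for i in range(min(counts), max(counts) + 1):
        lo = i * bin_width
        print(f"  {lo:5.1f}-{lo + bin_width:5.1f} mm | {'#' * counts[i]}")


if __name__ == "__main__":
    species_a = [12.5, 13.1, 12.8, 13.4, 12.2, 13.0]  # hypothetical Trait 1 (mm)
    species_b = [14.2, 13.6, 13.9, 14.5, 13.3, 14.0]  # hypothetical Trait 1 (mm)
    print_histogram(species_a, 0.5, "Species A")
    print_histogram(species_b, 0.5, "Species B")
    print(f"Overlap: {overlap_coefficient(species_a, species_b, 0.5):.2f}")
```

Because the overlap estimate depends on the bin width, it is worth showing students how it changes with wider or narrower bins; that sensitivity is itself a useful prompt for the metacognitive discussion about where category boundaries come from.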

Protocol 3: Historical Narrative Development

Objective: To address essentialist immutability beliefs by constructing evolutionary lineages.

Materials:

- Fossil images or replicas across temporal sequences

- Timeline software or physical timeline materials

- Character matrix datasets

Procedure:

- Lineage selection: Choose well-documented evolutionary sequences (e.g., equids, cetaceans)

- Character identification: Identify key traits that change across the sequence

- Gradual transition mapping: Plot trait changes across geological time

- Arbitrary boundary exercise: Students attempt to identify the "exact moment" of speciation, then reflect on why no such moment can be pinpointed

- Metacognitive wrap-up: Students write reflections on how gradual change challenges essentialist thinking
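The gradual transition mapping and arbitrary boundary steps can also be made numerical: when a trait changes in small increments, the "moment" of speciation moves wherever the chosen cut-off moves. The sketch below is a hypothetical illustration, not a method from the source; the lineage values are invented rather than real equid or cetacean data, and the function names are placeholders.

```python
"""Arbitrary-boundary exercise on a gradual lineage (illustrative sketch).

Assumptions: the lineage is a list of (age_ma, trait_value) pairs ordered from
oldest to youngest; the trait values are hypothetical.
"""


def boundary_age(series, threshold):
    """Age (Ma) of the first sample whose trait value reaches the threshold."""
    for age_ma, value in series:
        if value >= threshold:
            return age_ma
    return None


def largest_step_fraction(series):
    """Largest change between consecutive samples, as a share of total change."""
    values = [value for _, value in series]
    steps = [abs(b - a) for a, b in zip(values, values[1:])]
    return max(steps) / abs(values[-1] - values[0])


if __name__ == "__main__":
    lineage = [(55, 10), (50, 12), (45, 15), (40, 17),
               (35, 20), (30, 22), (25, 26), (20, 29)]
    for threshold in (15, 20, 25):
        print(f"'New species' if trait >= {threshold}: "
              f"boundary at {boundary_age(lineage, threshold)} Ma")
    print(f"Largest single step: {largest_step_fraction(lineage):.0%} of total change")
```

Each threshold places the "speciation event" at a different age, while no single step accounts for most of the change; students can then be asked what, other than their own choice of threshold, determines where the boundary falls.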

Assessment Methods for Essentialist Reasoning

Validated assessment tools are essential for evaluating the effectiveness of metacognitive interventions. The following methods provide quantitative and qualitative data on essentialist thinking patterns.

Concept Inventory Assessments

Measure: Categorical versus Population Thinking (CPT) Scale

Components:

- 15-item Likert scale assessing agreement with essentialist statements

- 10 forced-choice items presenting categorical versus populational explanations

- 5 open-response items analyzing reasoning patterns

Administration: Pre- and post-intervention to measure conceptual shift

Validation: Pilot testing yielded a Cronbach's α of 0.79 for internal consistency

Clinical Interview Protocols

Semi-structured interviews provide nuanced data on students' conceptual frameworks:

- Species concept probe: "What makes a dog a dog and not a cat?"

- Boundary probe: "At what point in evolution did dinosaurs become birds?"

- Variation probe: "Are all members of a species essentially the same?"

- Metacognitive awareness probe: "Can you describe how your thinking about species has changed?"

The Researcher's Toolkit: Essential Materials

Table 3: Research Reagent Solutions for Evolution Education Research

| Reagent/Tool | Function | Example Application |
|---|---|---|
| ACORNS (Assessing Contextual Reasoning about Natural Selection) | Measures evolutionary reasoning patterns | Pre-post assessment of essentialist thinking |
| MATE (Measure of Acceptance of the Theory of Evolution) | Assesses acceptance versus understanding | Controlling for attitudinal factors in intervention studies |
| Concept Mapping Software | Visualizes conceptual relationships | Identifying essentialist patterns in student knowledge structures |
| Eye-Tracking Systems | Measures attention to variation | Studying perceptual components of essentialist reasoning |
| fMRI-Compatible Tasks | Probes neural correlates of essentialism | Identifying brain activity associated with categorical thinking |

Integration with Broader Metacognitive Frameworks

The metacognitive protocols described align with broader theoretical frameworks for self-regulated learning [12] [1]. Effective implementation requires embedding these exercises within comprehensive metacognitive support that includes:

- Planning strategies: Explicit identification of potential essentialist pitfalls before learning activities [3]

- Monitoring techniques: Real-time awareness of essentialist reasoning during problem-solving [1]

- Evaluation protocols: Reflection on overcoming essentialist biases after learning activities [3]

This integrated approach helps students develop the metacognitive habits necessary to recognize and regulate the intuitive cognitions that impede evolution understanding [12].

Visualizing the Conceptual Transition from Essentialism to Population Thinking

The following diagram illustrates the conceptual shift that metacognitive interventions aim to facilitate, showing the transition from essentialist to population-based reasoning:

Essentialism represents a fundamental epistemological obstacle in evolution education that requires targeted metacognitive interventions rather than mere factual correction. The protocols outlined provide research-ready methodologies for addressing this barrier through structured activities that make essentialist thinking visible and subject to conscious regulation. For researchers in science education and cognitive science, these approaches offer validated pathways for investigating conceptual change in evolutionary biology.

Future research directions should include longitudinal studies tracking the persistence of metacognitive gains, cross-cultural investigations of essentialist thinking patterns, and neurocognitive studies examining the neural correlates of conceptual change about evolutionary concepts. By treating essentialism as a primary epistemological obstacle requiring metacognitive solutions, evolution education can move beyond information delivery to facilitate genuine conceptual transformation.

The Critical Link Between Metaconceptual Awareness and Conceptual Change

Within the specific domain of evolution education research, fostering conceptual change is a primary objective. Students often enter the classroom with robust intuitive conceptions about the natural world that are not aligned with scientific understanding [13]. Metaconceptual awareness—the conscious awareness and control of one's own conceptual understandings—is a critical facilitator of this conceptual change. This document provides structured Application Notes and detailed Experimental Protocols to equip researchers and scientists with the tools necessary to rigorously investigate and apply principles of metaconceptual awareness to achieve lasting conceptual change in evolution education.

Application Notes: Quantitative Insights

The following tables synthesize key quantitative findings from recent research, providing an evidence-based foundation for designing interventions.

Table 1: Baseline Metaconceptual Awareness and Academic Achievement in Teacher Trainees

| Metric | Overall Cohort (n not specified) | Male Students | Female Students | Statistical Significance (Gender) |
|---|---|---|---|---|
| Metaconceptual Awareness | 60% above average [14] | No significant difference [14] | No significant difference [14] | Not significant (p > 0.05) [14] |
| Academic Achievement | Diverse; 40% below average [14] | No significant difference [14] | No significant difference [14] | Not significant (p > 0.05) [14] |
| MAI-Achievement Correlation | Very weak positive correlation [14] | - | - | Not significant [14] |

Table 2: Temporal Change in Metacognitive Strategy Use in a Computer-Based Learning Environment (CBLE)

| Factor | Impact on Metacognitive Strategy Use | Statistical Role | Key Finding on Change over Time |
|---|---|---|---|
| Task Value | Positive predictor [15] | Partially explains individual differences in change over time [15] | Use increases from Day 1 to Day 2, then stabilizes [15] |
| Prior Domain Knowledge | Positive predictor [15] | Partially explains individual differences in change over time [15] | Trajectories of strategic behavior vary across individuals [15] |
| Self-Efficacy | No effect [15] | Not a significant predictor [15] | - |

Experimental Protocols

Protocol: Quantitative Assessment of Metaconceptual Awareness

This protocol details the use of the Metacognitive Awareness Inventory (MAI) for large-scale, quantitative assessment.

- Objective: To rapidly assess the metacognitive awareness of a cohort of students at the beginning of a course or intervention study [16].

- Primary Instrument: Metacognitive Awareness Inventory (MAI) [14] [16].

- Description: A 52-item self-report questionnaire that uses a Likert-scale response format [16].

- What it Measures: Purports to measure metacognition directly, assessing both metacognitive knowledge and metacognitive regulation [14] [16].

- Rationale for Selection: It is a free instrument of moderate length that has been used in correlational studies with academic success metrics [16].

- Procedure:

- Administration: Distribute the MAI at the study's baseline (e.g., first day of class). Scantron sheets or online forms can be used for efficient data collection [16].

- Data Processing: Score the responses according to the instrument's guidelines. With machine scoring, an entire class can be processed in about 5 minutes [16].

- Data Analysis:

- Calculate total and subscale scores for the cohort.

- Use percentage analysis to categorize students (e.g., above-average, average, below-average) [14].

- Employ independent samples t-tests to compare mean scores across genders or other demographic groups [14].

- Perform Pearson’s correlation analysis to explore the relationship between MAI scores and academic achievement scores (e.g., GPA, course grades) [14].

- Notes of Caution: Self-report measures like the MAI are not always accurate and should not be used as the sole measure of metacognitive development. Researchers have reported instances where MAI data showed no relationship with other qualitative measures or student success metrics [16].

Protocol: Qualitative Assessment of Conceptual Change

This protocol complements quantitative data by providing rich, nuanced insights into students' conceptual evolution.

- Objective: To gain a deeper, nuanced understanding of students' conceptual frameworks and how they change over time through direct analysis of their written or verbal responses [16].

- Primary Instrument: Open-Ended Prompts.

- Description: Carefully constructed questions that require students to explain evolutionary concepts in their own words (e.g., "Explain how natural selection leads to the evolution of antibiotic resistance in bacteria.") [13].

- What it Measures: Reveals the complexity of student explanations, specific misconceptions (e.g., teleological reasoning), and the use of accurate scientific models [13].

- Procedure:

- Data Collection: Administer open-ended prompts at multiple time points (pre-intervention, post-intervention, and delayed post-intervention) to track conceptual change.

- Coding Scheme Development: Develop a coding rubric based on established frameworks. For evolution, this could include:

- Key Concepts: Identify presence of core ideas like variation, inheritance, selection, and time [13].

- Misconceptions: Code for common errors, such as attributing evolutionary change to "need" or individual effort [13].

- Model Complexity: Score the sophistication and integration of concepts in the explanation [13].

- Analysis: Code all student responses using the rubric. This process is labor-intensive and can take months to complete [16]. Use qualitative data analysis software (e.g., NVivo) to manage and identify patterns.

- Integration with Quantitative Data: Triangulate findings from the qualitative analysis with MAI scores and academic grades to develop a more complete picture of the relationship between metaconceptual awareness and conceptual change [16].
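Because rubric coding is labor-intensive and subjective, agreement between coders is commonly checked before analysis. The protocol does not name a statistic; Cohen's kappa, swapped in here as one standard choice, corrects raw agreement for agreement expected by chance: κ = (p_o - p_e) / (1 - p_e). The sketch assumes two raters each assign one code per response; the code labels are hypothetical rubric categories. For presence/absence codes (e.g., "variation mentioned: yes/no"), the same function can be applied once per code. `sklearn.metrics.cohen_kappa_score` offers an equivalent library implementation.

```python
"""Inter-rater agreement for rubric coding: Cohen's kappa (sketch).

Assumptions: two raters each assign exactly one code per response; the code
labels below are hypothetical rubric categories.
"""
from collections import Counter


def cohens_kappa(rater1, rater2):
    """kappa = (p_o - p_e) / (1 - p_e) for two raters on nominal codes."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("raters must code the same, non-empty set of responses")
    n = len(rater1)
    p_o = sum(a == b for a, b in zip(rater1, rater2)) / n  # observed agreement
    c1, c2 = Counter(rater1), Counter(rater2)
    # Chance agreement from each rater's marginal code frequencies.
    p_e = sum(c1[code] * c2[code] for code in c1.keys() | c2.keys()) / n ** 2
    if p_e == 1:  # both raters used one identical code throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical codes: NEED = need-based misconception, VAR = variation,
    # SEL = selection, INH = inheritance.
    r1 = ["NEED", "VAR", "SEL", "SEL", "INH", "NEED", "VAR", "SEL"]
    r2 = ["NEED", "VAR", "SEL", "VAR", "INH", "NEED", "VAR", "SEL"]
    print(f"kappa = {cohens_kappa(r1, r2):.2f}")
```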

Visualization of Theoretical Framework

The following diagram illustrates the integrated relationship between metaconceptual awareness and the process of conceptual change, grounded in the literature.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Instruments and Materials for Metaconceptual Awareness Research

| Item Name | Type/Format | Primary Function in Research |
|---|---|---|
| Metacognitive Awareness Inventory (MAI) | Quantitative Survey (52-item) [16] | Provides a rapid, quantitative baseline measure of a learner's metacognitive knowledge and regulation [14] [16]. |
| Open-Ended Conceptual Prompts | Qualitative Assessment Tool [16] [13] | Elicits rich, nuanced data on students' conceptual frameworks and the process of conceptual change, allowing for coding of misconceptions and model complexity [16] [13]. |
| Coding Rubric for Conceptual Understanding | Analytical Framework [13] | Enables systematic, reliable qualitative analysis of open-ended responses by defining levels of understanding and key misconceptions [13]. |
| Pedagogical Content Knowledge (PCK) Framework | Theoretical Framework [13] | Guides the design of instruction and research by organizing knowledge of student thinking, assessment, and instructional strategies for specific topics like natural selection [13]. |
| Conceptual Inventory of Natural Selection (CINS) | Quantitative Diagnostic [13] | A forced-response instrument specifically designed to assess understanding of key natural selection concepts and identify common misconceptions [13]. |
| Avida-ED | Digital Learning Platform [13] | An open-ended software platform in which students design experiments and observe evolution in populations of digital organisms, providing an authentic context for applying and monitoring conceptual understanding [13]. |

The challenge of fostering robust evolution acceptance among students extends beyond simple knowledge transfer, requiring instead the development of sophisticated cognitive and metacognitive capacities. This application note explores the theoretical progression from structured Self-Regulated Learning (SRL) models toward a state of adaptive metacognitive vigilance, with specific application to evolution education research. We provide researchers and course designers with experimentally validated protocols and analytical frameworks to cultivate the metacognitive capabilities necessary for navigating the complex conceptual and epistemological challenges inherent in evolutionary biology. The integration of these frameworks addresses not only knowledge acquisition but also the motivational and self-evaluative processes crucial for reconciling scientific understanding with personal beliefs.

Theoretical Foundations and Key Concepts

The design of effective metacognitive interventions requires a grounding in both general and domain-specific self-regulation theories. The following table summarizes the core theoretical models relevant to this progression.

Table 1: Foundational Theoretical Models in Self-Regulated Learning

| Model Name | Key Architect | Core Phases/Components | Relevance to Metacognitive Vigilance |
|---|---|---|---|
| Cyclical Model | Zimmerman [17] | Forethought, Performance, Self-Reflection | Provides a three-phase iterative structure for embedding metacognitive checks. |
| Dual Processing Model | Boekaerts [17] | Growth Pathway, Well-Being Pathway | Highlights the role of emotion and domain-specific knowledge as a gateway to SRL. |
| Information Processing Model | Winne & Hadwin [17] | Task Definition, Goal Setting & Planning, Strategy Use, Metacognitive Adaptation | Emphasizes feedback loops and metacognitive monitoring within cognitive architecture. |
| Domain-Specific CMHI Model | - [17] | Cognitive & Metacognitive Activities in Historical Inquiry | Demonstrates domain-specific SRL adaptation; a template for evolution education. |

The concept of metacognitive vigilance extends these models, describing a sustained, adaptive state of awareness in which learners actively monitor their own understanding, evaluate emerging cognitive conflicts, and regulate their learning strategies in real time. This is particularly critical in evolution education, where students often encounter concepts that challenge their pre-existing worldviews. From an evolutionary perspective, metacognition itself can be framed as a functional adaptation for dealing with uncertainties across multiple spatio-temporal scales [18]. This framework positions metacognitive vigilance not as a luxury, but as a fundamental cognitive tool for navigating complex and changing information environments.

Empirical studies consistently reveal the positive impact of SRL and metacognitive interventions on academic outcomes. The following table synthesizes key findings from recent research on writing quality, metacognitive strategy use, evolution understanding and acceptance, and the generality of SRL.

Table 2: Empirical Evidence on SRL and Metacognitive Intervention Outcomes

| Study Focus | Population | Key Intervention | Major Quantitative Findings | Citation |
|---|---|---|---|---|
| Writing Quality & Planning | 4th & 5th Graders | Self-Regulated Strategy Development (SRSD) | SRSD students produced higher-quality texts and evaluated their work more accurately. Progress was mediated by improved planning skills. Students with poor working memory struggled with strategy implementation. | [19] |
| Metacognitive Strategy Use | 6th Graders | Learning in "Betty's Brain" (CBLE) | Metacognitive strategy use increased from the first to the second day, then stabilized. Task value and prior domain knowledge positively predicted metacognitive strategy use, while self-efficacy did not. | [15] |
| Evolution Understanding & Acceptance | 11,409 College Biology Students (U.S.) | N/A (Cross-sectional survey) | Students were most accepting of microevolution and least accepting of common ancestry of life. For highly religious students, evolution understanding was not related to acceptance of common ancestry. | [20] |
| Generality of SRL | Academically Successful High Schoolers | N/A (Interview-based) | Self-Regulated Learning was found to be both a general characteristic and a domain-specific one, involving a complex process that subsumes both attributes. | [17] |

Application Notes and Experimental Protocols

The following section provides detailed, actionable protocols for implementing and researching SRL and metacognitive vigilance in evolution education.

Protocol 1: Self-Regulated Strategy Development (SRSD) for Evolution Argumentation

This protocol adapts the established SRSD model [19] to help students formulate written arguments about evolutionary concepts, thereby enhancing both writing quality and conceptual understanding.

- Objective: To explicitly teach students self-regulation strategies for planning and composing evidence-based arguments on evolutionary topics (e.g., natural selection, common descent).

- Materials:

  - Graphic Organizers: For concept mapping and structuring arguments (e.g., T-charts for evidence, outline templates).

  - Model Texts: High-quality exemplar essays that argue for evolutionary concepts.

  - Self-Monitoring Checklist: A sheet with prompts for goal-setting, planning, and self-evaluation.

  - Writing Prompts: Content-specific prompts (e.g., "Using evidence from comparative anatomy and genetics, argue for the common ancestry of whales and even-toed ungulates.").

- Procedure:

  - Develop Background Knowledge: Pre-teach the content knowledge and vocabulary needed for the writing prompt and evolutionary topic.

  - Discuss It: Collaboratively examine and critique model texts, identifying key argument components and the author's strategies.

  - Model It: The instructor thinks aloud while writing a sample argument, explicitly verbalizing the planning process (e.g., "My goal is to convince a skeptical reader. I will first state the claim of common ancestry, then present fossil evidence, followed by genetic evidence..."), formulation, and self-correction strategies.

  - Memorize It: Students memorize the mnemonic "POW + TREE" (Pick my idea, Organize my notes, Write and say more; Topic sentence, Reasons, Ending, Examine) or a similar structured strategy.

  - Support It: Students co-create a first draft with instructor/peer support, using their graphic organizers and checklists. Provide feedback focused on the use of the strategy and the logical structure.

  - Independent Performance: Students write independently, using the self-regulation strategies without scaffolds. The goal is to foster autonomous use in new contexts.

- Evaluation Metrics:

  - Text Quality Rubric: Score arguments based on structure, coherence, relevance and quantity of ideas, and use of evidence.

  - Planning Time and Quality: Measure the time spent planning before writing and analyze the complexity of the resulting outlines or concept maps.

  - Self-Evaluation Accuracy: Compare students' self-scores on the rubric with instructor scores.
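Self-evaluation accuracy can be summarized in several ways. The sketch below is a minimal illustration, not a procedure specified in [19]. It assumes that each student self-score and the matching instructor score are rubric totals on the same scale. It reports three indices, chosen here for illustration: signed bias (positive means students rate themselves higher), mean absolute error, and the share of texts a student over-rated.

```python
from statistics import mean


def self_evaluation_accuracy(self_scores, instructor_scores):
    """Compare student self-scores with instructor scores for the same texts.

    Both arguments are equal-length sequences of rubric totals on one scale.
    """
    if not self_scores or len(self_scores) != len(instructor_scores):
        raise ValueError("need two non-empty score lists of equal length")
    # Signed difference per text: positive means the student rated higher.
    diffs = [s - i for s, i in zip(self_scores, instructor_scores)]
    return {
        "bias": mean(diffs),                             # systematic over-/under-rating
        "mean_abs_error": mean(abs(d) for d in diffs),   # overall calibration error
        "overestimate_rate": sum(d > 0 for d in diffs) / len(diffs),
    }


# Hypothetical rubric totals for four student texts.
print(self_evaluation_accuracy([12, 15, 9, 14], [10, 15, 11, 12]))
```

Reporting bias and absolute error separately matters because a class can show near-zero bias while individual students still misjudge their texts in both directions.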

Protocol 2: Cultivating Metacognitive Vigilance in Open-Ended Learning

Adapted from research on metacognitive strategy use in computer-based learning environments [15], this protocol uses structured reflection and predictive monitoring to foster vigilance.

- Objective: To train students to continuously monitor their understanding of evolutionary concepts within open-ended learning tasks (e.g., inquiry-based labs, analysis of phylogenetic trees).

- Materials:

  - Betty's Brain CBLE or a similar simulation: An environment where students teach a virtual agent about a complex system such as natural selection.

  - Metacognitive prompting software or a structured learning journal.

  - Pre- and post-tests on the target evolutionary concepts.

- Procedure:

  - Baseline Assessment: Administer a prior domain knowledge test and a motivation (task value) questionnaire [15].

  - Integrated Metacognitive Prompts: During the learning task, present automated, non-intrusive prompts at key decision points. Examples include:

    - "Before you proceed, what is your current goal?"

    - "Based on what you just learned, how would you now explain genetic drift to Betty?"

    - "How confident are you in the causal map you have built? What part are you least sure about?"

  - Predictive Self-Monitoring: At intervals, ask students to predict their performance on a future quiz question related to the current sub-topic. After the task, they compare their predictions with their actual performance.

  - Structured Reflection Logs: After the learning session, students complete a log with prompts such as:

    - "Describe a moment today when you realized you did not understand a concept. What did you do next?"

    - "What strategy was most effective for your learning today? Will you use it again?"

- Evaluation Metrics:

  - Metacognitive Behavior Rate: Log the frequency of student-initiated strategy use (e.g., self-quizzing, concept map editing) within the CBLE [15].

  - Prediction Accuracy: Calculate the correlation between predicted and actual quiz scores.

  - Learning Gain: Normalized change from pre- to post-test scores.
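Once the data are exported, the three evaluation metrics reduce to simple computations. The sketch below rests on three assumptions. Logged actions are available as text labels; the labels in the example are hypothetical and do not reflect Betty's Brain's actual log format. Predicted and actual quiz scores are paired. "Normalized change" is operationalized as Hake's normalized gain, which is one common choice among several.

```python
from math import sqrt


def metacognitive_behavior_rate(actions, strategy_actions, session_minutes):
    """Strategy-use events per minute, from a list of logged action labels."""
    if session_minutes <= 0:
        raise ValueError("session length must be positive")
    return sum(a in strategy_actions for a in actions) / session_minutes


def prediction_accuracy(predicted, actual):
    """Pearson correlation between predicted and actual quiz scores."""
    n = len(predicted)
    if n != len(actual) or n < 2:
        raise ValueError("need at least two paired scores")
    mp, ma = sum(predicted) / n, sum(actual) / n
    sxy = sum((p - mp) * (a - ma) for p, a in zip(predicted, actual))
    sxx = sum((p - mp) ** 2 for p in predicted)
    syy = sum((a - ma) ** 2 for a in actual)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def normalized_gain(pre, post, max_score):
    """Hake-style normalized gain: achieved gain divided by maximum possible gain."""
    if pre >= max_score:
        raise ValueError("no room for gain when the pre-test is at ceiling")
    return (post - pre) / (max_score - pre)


# Hypothetical log labels for one 30-minute session.
log = ["self_quiz", "edit_map", "read_resource", "edit_map"]
print(metacognitive_behavior_rate(log, {"self_quiz", "edit_map"}, 30))
print(prediction_accuracy([3, 4, 2, 5], [2, 4, 3, 5]))
print(normalized_gain(pre=40, post=70, max_score=100))
```

A normalized gain is undefined for students who score at ceiling on the pre-test. For that reason, the sketch raises an error instead of returning a misleading value.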

Visualization of Theoretical Frameworks and Workflows

The following diagrams illustrate the core models and protocols discussed.

Zimmerman's Cyclical Model of SRL

SRSD Intervention Protocol Workflow
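The diagrams themselves are not reproduced in this text. The sketch below shows one way to regenerate them. It emits Graphviz DOT source for a linear or cyclic sequence of stages, using the three phases of Zimmerman's model from Table 1 and the six SRSD stages from Protocol 1. The function name `stages_to_dot` is hypothetical, and the sketch applies no color palette. The output can be rendered with, for example, `dot -Tpng model.dot -o model.png`.

```python
def stages_to_dot(title, stages, cyclic=False):
    """Return Graphviz DOT source that links the stages from left to right."""
    lines = [f'digraph "{title}" {{', "  rankdir=LR;", "  node [shape=box];"]
    for src, dst in zip(stages, stages[1:]):
        lines.append(f'  "{src}" -> "{dst}";')
    if cyclic and len(stages) > 1:
        # For a cyclical model, link the last stage back to the first.
        lines.append(f'  "{stages[-1]}" -> "{stages[0]}";')
    lines.append("}")
    return "\n".join(lines)


ZIMMERMAN_PHASES = ["Forethought", "Performance", "Self-Reflection"]
SRSD_STAGES = [
    "Develop Background Knowledge", "Discuss It", "Model It",
    "Memorize It", "Support It", "Independent Performance",
]

print(stages_to_dot("Zimmerman's Cyclical Model of SRL", ZIMMERMAN_PHASES, cyclic=True))
print(stages_to_dot("SRSD Intervention Protocol Workflow", SRSD_STAGES))
```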

The Scientist's Toolkit: Research Reagent Solutions

This table details essential materials and their functions for implementing and studying the proposed protocols.

Table 3: Essential Research Reagents and Materials for SRL and Metacognition Studies

| Item Name/Category | Function/Application in Research | Exemplars & Notes |

|---|---|---|

| Structured Writing Rubrics | Quantitatively assesses the quality of written arguments produced during SRSD interventions. | Use domain-specific rubrics evaluating idea coherence, use of evidence, and argument structure. |

| Metacognitive Prompting Software | Integrates into learning environments to deliver timed, context-sensitive prompts that stimulate self-monitoring. | Can be implemented in platforms like Betty's Brain [15] or custom online learning modules. |

| Self-Report Motivation Scales | Assesses learners' initial task value and self-efficacy, which are moderators of metacognitive strategy use [15]. | Adapt standardized questionnaires (e.g., focusing on Intrinsic Goal Orientation and Task Value). |

| Computer-Based Learning Environments (CBLEs) | Provides an open-ended platform for authentic inquiry where metacognitive behaviors can be logged and analyzed [15]. | Betty's Brain; simulations of evolutionary processes (e.g., Natural Selection). |

| Prior Knowledge Assessments | Establishes a baseline of domain-specific knowledge, a key predictor of SRL strategy deployment [15] [17]. | Standardized concept inventories (e.g., Conceptual Inventory of Natural Selection (CINS)). |

| Structured Interview Protocols | Qualitatively explores the domain-specific and general aspects of students' SRL processes [17]. | Semi-structured protocols asking students to compare their learning strategies across different subjects. |

This document provides application notes and protocols for identifying and addressing two specific intuitive conceptions—teleology and typologism—within professional research environments, particularly those focused on evolution education and metacognitive exercises. These preconceptions can influence scientific reasoning, experimental design, and the interpretation of data. The following sections offer structured methodologies for identifying these conceptions and integrating corrective metacognitive strategies into research practices.

Teleology is the explanation of phenomena by reference to a purpose, end, or goal, rather than solely by antecedent causes [21]. The term derives from the Greek words telos (end, purpose) and logos (reason, explanation) [22] [23]. Teleological language is often appropriate for describing intentional human action (e.g., a researcher conducts an experiment to test a hypothesis). Applied to natural biological processes (e.g., "giraffes evolved long necks in order to reach high leaves"), however, it becomes a misleading intuitive conception. It implies that evolution is forward-looking and purposeful, whereas natural selection is a mechanistic process acting on existing variation [23] [21].

Typologism (or Essentialism), in a scientific context, is the cognitive tendency to categorize variable natural populations into discrete, fixed types based on a perceived underlying "essence" [24]. This mode of thinking can obscure the continuous variation present within populations, which is the fundamental substrate upon which evolutionary forces like natural selection act. In professional practice, this can manifest as an over-reliance on rigid classifications or an underestimation of population diversity.

Quantitative Data on Conception Prevalence and Impact

The following tables synthesize quantitative findings and influential factors related to these intuitive conceptions, drawn from current education research.

Table 1. Impact of Targeted Curriculum Units on Evolution Understanding & Acceptance

| Curriculum Intervention | Student Group | Key Outcome Measure | Result | Citation |
|---|---|---|---|---|
| "H&NH" Unit (Human & Non-Human examples) | Introductory High School Biology (Alabama) | Understanding of Common Ancestry | More effective at increasing understanding than the "ONH" unit | [25] |
| "ONH" Unit (Only Non-Human examples) | Introductory High School Biology (Alabama) | Understanding of Common Ancestry | Less effective than the "H&NH" unit | [25] |
| Both "H&NH" & "ONH" Units | Introductory High School Biology (Alabama) | General Understanding & Acceptance of Evolution | Increase in over 70% of individual students | [25] |

Table 2. Factors Influencing Acceptance of Evolutionary Concepts

| Factor Category | Specific Factor | Impact on Evolution Acceptance/Understanding | Citation |
|---|---|---|---|
| Religious & Cultural | Perceived Conflict with Religion | Strongest negative predictor of acceptance | [25] [26] |
| Religious & Cultural | Cultural & Religious Sensitivity (CRS) Teaching | Reduced student discomfort; helped religious students feel their views were respected | [25] |
| Educational | Inclusion of Human Examples | Aided understanding of common ancestry; effective when combined with non-human examples | [25] |
| Educational | Prior Evolution Knowledge | Positively correlated with higher post-intervention understanding scores | [25] |
| Socio-Economic | School Socio-Economic Status | Students at schools with a lower percentage of economically disadvantaged students had higher scores | [25] |

Experimental Protocols for Identification and Intervention

Protocol 1: Identifying Teleological Reasoning in Explanations

This protocol is designed to detect implicit teleological language in verbal or written explanations of biological phenomena.

I. Materials and Setup

- Participants: Researchers or students in a training context.

- Stimuli: A set of prompts describing evolutionary traits or biological processes (e.g., "Explain why antibiotic resistance develops in bacteria," or "Why do some animals have camouflage?").

- Environment: Quiet room for individual written response or audio-recorded interview.

II. Procedure

- Stimulus Presentation: Provide the prompts to participants.

- Data Collection: Ask participants to provide their explanations verbally or in writing. Do not prompt or guide their responses.

- Data Analysis (Coding):

  - Transcribe all explanations verbatim.

  - Code the explanations for the presence of key teleological markers using the following criteria:

    - Explicit Goal-Oriented Language: Look for phrases such as "in order to," "so that," "for the purpose of," and "to achieve" when they refer to non-conscious biological entities or processes.

    - Anthropomorphism: Attribution of human-like intention, foresight, or needs to natural selection or organisms (e.g., "the bacteria wanted to survive," "the species decided to adapt").

  - Categorize explanations as "Mechanistic" (referring to random variation, selection pressures, differential survival) or "Teleological" (containing the coded markers).
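Keyword matching cannot replace trained human coders. Phrases such as "so that" or "need" can appear in legitimate mechanistic sentences. It can, however, serve as a first-pass screen that flags transcripts for review. The sketch below is minimal. The goal-oriented phrases come from the criteria above. The anthropomorphic verb list and the mechanistic cue words are hypothetical starting points. The "Unclassified" label is an addition for responses that fit neither category.

```python
import re

# Goal-oriented phrases taken from the coding criteria.
GOAL_PATTERNS = [r"\bin order to\b", r"\bso that\b", r"\bfor the purpose of\b", r"\bto achieve\b"]
# Hypothetical, non-exhaustive anthropomorphic verb patterns.
ANTHRO_PATTERNS = [r"\bwant(?:s|ed)?\b", r"\bdecide[sd]?\b", r"\bneed(?:s|ed)?\b", r"\btr(?:y|ies|ied)\b"]
# Hypothetical cue words suggesting a mechanistic explanation.
MECHANISTIC_CUES = ["variation", "mutation", "selection", "differential survival", "reproduc"]


def find_matches(patterns, text):
    """Return the matched text for each pattern found in `text`."""
    hits = []
    for pattern in patterns:
        match = re.search(pattern, text)
        if match:
            hits.append(match.group(0))
    return hits


def code_explanation(text):
    """First-pass label for one transcribed explanation."""
    lowered = text.lower()
    goal = find_matches(GOAL_PATTERNS, lowered)
    anthro = find_matches(ANTHRO_PATTERNS, lowered)
    if goal or anthro:
        label = "Teleological"
    elif any(cue in lowered for cue in MECHANISTIC_CUES):
        label = "Mechanistic"
    else:
        label = "Unclassified"  # neither category: route to a human coder
    return {"label": label, "goal_markers": goal, "anthropomorphism": anthro}


print(code_explanation("Giraffes evolved long necks in order to reach high leaves."))
```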

III. Metacognitive Exercise

Following the analysis, conduct a guided debriefing session. Present participants with anonymized examples of teleological explanations (including their own, with permission) and contrast them with mechanistic explanations. Facilitate a discussion that contrasts the usefulness of teleological thinking for describing human action with its pitfalls for explaining evolutionary mechanisms [27].

Protocol 2: Assessing Typological Thinking in Classification Tasks

This protocol assesses the tendency towards essentialist thinking when participants are asked to group biological specimens or data.

I. Materials and Setup

- Participants: Researchers or students.

- Stimuli: Species cards with images and data on various characteristics for a range of organisms. The set should include populations with high intra-species variation and sibling species with subtle differences [25]. Include human examples where appropriate.

- Materials: Supplies for creating graphical representations (e.g., pipe cleaners, drawing tools) [25].

II. Procedure

- Task Instruction: Ask participants to examine the species cards and develop a classification system based on the available data.

- Grouping and Representation: Participants must group the organisms and create a visual representation of their classification (e.g., a phylogenetic tree or a cladogram using pipe cleaners) [25].

- Data Collection:

  - Collect the final classifications and representations.

  - Conduct a short interview asking participants to justify their groupings and explain how they decided where to draw boundaries between categories.

III. Data Analysis

- Classification Rigidity: Analyze the representations for a "ladder-of-life" progression versus a branching tree structure. A linear progression suggests typological thinking.

- Boundary Justification: Code interview transcripts for mentions of "ideal types" or dismissal of continuous variation as "noise." Look for evidence that participants recognize variation within groups as being as important as differences between groups.

- Impact of Human Examples: Compare responses between groups that classified sets including humans versus those that did not, to see if self-relevance reduces typological bias [25].
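The classification-rigidity check can be made reproducible once each pipe-cleaner or drawn representation is transcribed into a simple structure. The sketch below is minimal and assumes a hypothetical encoding: a coder records, for every node, the nodes drawn directly after or below it. The sketch only separates single chains from branching diagrams. A branching drawing with humans at the tip of a main axis may still encode a ladder, so interview coding remains necessary.

```python
def classify_structure(children):
    """Classify a transcribed classification diagram as linear or branching.

    `children` maps each node to the list of nodes drawn directly after it.
    If no node has more than one child, the diagram is a single chain
    (a "ladder of life"); any node with two or more children is a branch point.
    """
    branch_points = sorted(node for node, kids in children.items() if len(kids) > 1)
    shape = "branching" if branch_points else "linear"
    return shape, branch_points


# Hypothetical transcriptions of two participants' representations.
ladder = {"sponge": ["fish"], "fish": ["frog"], "frog": ["human"], "human": []}
tree = {"ancestor": ["fish", "n1"], "n1": ["frog", "human"], "fish": [], "frog": [], "human": []}
print(classify_structure(ladder))
print(classify_structure(tree))
```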

Visualization of Conceptual Frameworks and Workflows

Teleological Reasoning Identification Pathway

Typologism Assessment Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3. Essential Methodological Reagents for Investigating Intuitive Conceptions

| Research 'Reagent' | Type/Format | Primary Function in Research |
|---|---|---|
| Cultural & Religious Sensitivity (CRS) Activity | Structured classroom discussion or guided resource [25] | Reduces perceived conflict between science and religion; creates a supportive environment for learning evolution, thereby allowing for more accurate assessment of core conceptions [25]. |
| Human & Non-Human (H&NH) Case Study Unit | Curriculum units (e.g., LUDA Project "Eagle" unit) [25] | Serves as both an intervention and assessment tool. The inclusion of human examples is particularly effective for teaching concepts like common ancestry and challenging anthropocentric biases [25]. |
| Classification Task with Species Cards | Physical cards with morphological/genetic data [25] | Elicits underlying typological or essentialist reasoning patterns through a hands-on sorting task, revealing how individuals categorize biological variation [25]. |
| Perceived Conflict between Evolution and Religion (PCoRE) Measure | Validated survey instrument [26] | Quantifies the level of conflict a participant perceives, which is a critical confounding variable that must be measured and controlled for in studies of evolution acceptance [25] [26]. |
| Metacognitive Prompt Library | Set of standardized interview or reflection questions [9] | Facilitates the "metacognitive exercise" component. Prompts (e.g., "What was your reasoning? How did you distinguish categories?") guide participants to reflect on and articulate their own thought processes [9]. |

Evidence-Based Metacognitive Exercises for Evolution Mastery

Implementing Conception Self-Assessments with Criteria-Based Checklists

Within the specific context of evolution education research, the initial conception phase of a study is critical. This stage determines the foundational framework upon which all subsequent research is built. Implementing conception self-assessments with criteria-based checklists provides a structured metacognitive exercise for researchers, enabling them to systematically evaluate and refine their research questions and theoretical frameworks before committing to a specific design. This proactive approach aligns with broader goals of enhancing research quality and rigor through critical self-reflection, a cornerstone of metacognitive strategy development [28] [29]. This protocol outlines the application of such checklists, adapted from interdisciplinary research frameworks, to foster a more disciplined and self-regulated approach to launching research in evolution education [30].

Background and Rationale

The efficacy of self-assessment as a learning and development tool is well-documented. When implemented with adequate support and clear guidelines, self-assessment activities positively affect learners' attitudes, skills, and behavioral changes, as categorized by Kirkpatrick's model of evaluation [29]. In research, metacognition—the "higher-order thinking that enables understanding, analysis, and control of one’s cognitive processes"—is equally vital [9]. It empowers researchers to understand their own strengths and weaknesses, recognize the most productive strategies for a given problem, and avoid previously unproductive paths.

A key benefit of a structured checklist is its role in making implicit thought processes explicit. It guides researchers to "examine their learning experiences" and formalize the strategies that lead to success [9]. For research teams, this practice fosters a shared vision and mission, crucial for creating a supportive and forward-thinking collaborative environment [30] [31]. In evolution education, where projects may integrate perspectives from paleontology, genetics, developmental biology, and science education, a conception checklist ensures that the interdisciplinary nature of the project is not just aspirational but clearly articulated and justified from the outset [30].

Application Notes

The following application notes detail the core components and considerations for deploying the self-assessment checklist.

Key Metacognitive Objectives

The primary objective of the checklist is to trigger critical self-reflection and team dialogue during the conception phase. It aims to:

- Clarify Positioning: Force a precise definition of the research approach within the spectrum of scientific inquiry.

- Identify Motivations and Barriers: Surface the team's underlying expectations, strengths, and potential weaknesses.

- Establish a Shared Foundation: Ensure all team members collectively define and prioritize research objectives, leading to a genuine integration of knowledge and methods [30].

Context for Use in Evolution Education

This protocol is designed for use by individual researchers or teams at the very beginning of a project lifecycle, prior to detailed experimental or study design. In evolution education research, this is particularly relevant for:

- Grant Proposal Development: Strengthening the theoretical grounding and methodological justification of funding applications.

- Graduate Research Projects: Providing a structured framework for students to develop and refine dissertation topics.

- Interdisciplinary Collaborations: Facilitating dialogue between biologists, education specialists, and cognitive scientists to establish a common language and shared mission.

Experimental Protocol: Conception Phase Self-Assessment

This protocol provides a step-by-step methodology for conducting a conception self-assessment, adapted from the interdisciplinary research checklist developed for project leaders and their teams [30].

Pre-Assessment Preparation

- Team Assembly: Gather all key investigators and team members involved in the project's conceptualization.

- Materials: Distribute the Conception Self-Assessment Checklist (Table 1 below) to all participants. Ensure access to the project's preliminary abstract or concept note.

- Time Allocation: Schedule a dedicated 75-90 minute session, matching the combined duration of the individual rating, group discussion, and synthesis steps. The process requires uninterrupted, critical thinking.

Assessment Procedure

- Individual Rating (15 minutes): Each team member independently completes the checklist, rating each criterion and making brief notes on justifications and ideas for improvement.

- Facilitated Group Discussion (45-60 minutes): A facilitator (e.g., the principal investigator or a neutral party) guides the team through each checklist item.

  - For each item, the facilitator solicits ratings and rationale from each member.

  - The team discusses discrepancies in ratings to understand different perspectives.

  - The goal is not to force consensus but to identify areas of alignment and misalignment, and to collectively refine the research conception.

- Synthesis and Action Plan (15 minutes): The facilitator summarizes key discussion points, identified strengths, and critical gaps. The team agrees on specific actions to address the gaps before moving to the project design phase.

Conception Self-Assessment Checklist

This checklist is the core reagent for the protocol. Researchers should evaluate their project concept against the following criteria.

Table 1: Conception Self-Assessment Checklist for Evolution Education Research

| Criterion | Rating (1-5) | Justification & Notes for Improvement |
|---|---|---|
| 1. Definition & Positioning: How is the project positioned relative to a robust definition of interdisciplinarity (e.g., integrating information, data, techniques, tools, perspectives, concepts, and/or theories from two or more bodies of specialized knowledge)? [30] | | |
| 2. Team Definition: Has the team explicitly formulated its own definition of interdisciplinarity and its specific approach (e.g., endogenous/close vs. exogenous/wide)? [30] | | |
| 3. Strength & Weakness Analysis: Have the team's collective strengths and weaknesses in executing this specific interdisciplinary approach been identified? [30] | | |
| 4. Motivation & Barriers: Are the core motivations for an interdisciplinary approach, as well as potential barriers, clearly understood? [30] | | |
| 5. Strategic Articulation: Can the team's interdisciplinary research strategy and priorities be concisely formulated? [30] | | |
| 6. Metacognitive Integration: Does the conception explicitly include plans for promoting metacognitive strategies among the research team or within the eventual educational intervention? [32] [9] | | |

Rating Scale: 1 = Not Addressed, 2 = Poorly Addressed, 3 = Partially Addressed, 4 = Well Addressed, 5 = Excellent
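The individual ratings collected in the first step can be tabulated before the group discussion. This lets the facilitator start with the items where members disagree most or where the concept is weakest. The sketch below is minimal and assumes each member submits six ratings in checklist order. The function name and both thresholds are hypothetical defaults: a spread of 2 or more points counts as a discrepancy, and a mean below 3 ("Partially Addressed") counts as a gap.

```python
from statistics import mean

CRITERIA = [
    "Definition & Positioning", "Team Definition", "Strength & Weakness Analysis",
    "Motivation & Barriers", "Strategic Articulation", "Metacognitive Integration",
]


def summarize_ratings(ratings, spread_threshold=2, gap_below=3):
    """Aggregate individual checklist ratings to guide the group discussion.

    `ratings` maps each team member to six 1-5 ratings in CRITERIA order.
    An item is 'discrepant' when the highest and lowest ratings differ by at
    least `spread_threshold`, and a 'gap' when its mean is below `gap_below`.
    """
    if not ratings:
        raise ValueError("no ratings supplied")
    for member, scores in ratings.items():
        if len(scores) != len(CRITERIA) or any(s not in range(1, 6) for s in scores):
            raise ValueError(f"invalid ratings for {member}")
    summary = []
    for i, criterion in enumerate(CRITERIA):
        scores = [member_scores[i] for member_scores in ratings.values()]
        avg = mean(scores)
        spread = max(scores) - min(scores)
        summary.append({
            "criterion": criterion,
            "mean": avg,
            "spread": spread,
            "discrepant": spread >= spread_threshold,
            "gap": avg < gap_below,
        })
    return summary


# Hypothetical ratings from a three-person team.
team = {"PI": [5, 4, 3, 2, 4, 1], "Biologist": [4, 4, 5, 2, 3, 2], "Educator": [5, 3, 4, 3, 4, 1]}
for row in summarize_ratings(team):
    if row["discrepant"] or row["gap"]:
        print(row["criterion"], round(row["mean"], 2), row["spread"])
```

Flagged items are prompts for discussion, not verdicts. The protocol's aim of surfacing alignment and misalignment still depends on members explaining their ratings.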

Outcome Evaluation

The success of the self-assessment intervention can be evaluated using an adapted Kirkpatrick model, as applied in medical education self-assessment studies [29].

Table 2: Framework for Evaluating Self-Assessment Outcomes

| Kirkpatrick Level | Evaluation Focus | Measurement Method (Examples) |
|---|---|---|
| Level 1: Reaction | Researchers' views on the utility and acceptability of the self-assessment process. | Post-session feedback survey; qualitative interviews. |
| Level 2: Learning | Changes in researchers' understanding of their project's conceptual strengths and gaps. | Pre- and post-assessment ratings of conceptual clarity; analysis of discussion notes. |
| Level 3: Behavior | Observable changes in the research proposal or concept note based on assessment findings. | Document analysis comparing pre- and post-assessment project descriptions; tracking of implemented actions. |

The Scientist's Toolkit: Research Reagent Solutions

The following table details the essential materials and conceptual "reagents" required to implement this protocol effectively.

Table 3: Essential Research Reagents for Conception Self-Assessment

| Item | Function/Explanation |
|---|---|
| Structured Checklist | The core tool (e.g., Table 1) that operationalizes abstract criteria into evaluable items, guiding and standardizing the self-reflection process. |
| Facilitator Guide | A protocol for the session leader to ensure productive discussion, manage conflicting viewpoints, and keep the team focused on constructive critique. |
| Project Concept Note | A brief (1-2 page) written summary of the research idea, providing the concrete subject matter for the assessment. |
| Metacognitive Framework | A shared understanding of metacognition (e.g., as planning, monitoring, and reflecting on one's learning/thinking processes) to inform Criterion 6 [32] [9]. |
| Definitional References | Foundational texts providing robust definitions of key concepts like "interdisciplinarity" to anchor Criterion 1 and prevent ambiguous interpretations [30]. |

Workflow Visualization

The diagram below illustrates the logical sequence and iterative nature of the conception self-assessment protocol.

Conditional metaconceptual knowledge enables learners to understand why and in which contexts specific conceptions are appropriate or not, which is particularly crucial in evolution education where students frequently hold intuitive conceptions that differ from scientific explanations [33]. This approach moves beyond simply presenting correct scientific concepts by fostering students' metacognitive awareness and self-regulation of their own ideas [34]. Within evolution education research, developing conditional metaconceptual knowledge represents a powerful metacognitive exercise that helps students navigate the complex conceptual landscape of evolutionary theory while acknowledging and regulating their intuitive conceptions [33].

The theoretical foundation for this approach integrates conceptual change theory and knowledge integration perspectives, recognizing that students hold multiple conceptions simultaneously and must learn to selectively activate appropriate ideas based on context [35] [33]. For evolution concepts, this is particularly relevant as many student misconceptions stem from intuitive cognitive biases that may be functional in everyday contexts but are inappropriate in scientific explanations of evolutionary processes [33].

Experimental Evidence and Quantitative Findings

Recent intervention studies have demonstrated the effectiveness of conditional metaconceptual knowledge approaches in evolution education. The table below summarizes key quantitative findings from experimental research:

Table 1: Quantitative Outcomes of Metaconceptual Interventions in Evolution Education

| Intervention Component | Effect Size/Statistical Significance | Impact on Conceptual Knowledge | Effect on Self-Efficacy | Cognitive Load Findings |
|---|---|---|---|---|
| Self-assessment of conceptions | Medium effect sizes (η² = 0.06-0.11) | Significant improvement (p < .001) [33] | No negative impact; enabled more accurate ability beliefs [33] | Increased mental load, potentially suppressing benefits [33] |
| Conditional metaconceptual instruction | Not reported | Significant gains (p < .001) [33] | Supported accurate self-assessment | No significant increase in mental load [33] |
| Combined intervention | Largest effects | Greatest conceptual gains | Most balanced outcomes | Managed load through distributed practice |
| Traditional instruction (control) | Baseline | Moderate improvements | Variable effects | Lowest reported load |
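The η² values in the table can be read as follows. In a one-way comparison, η² is the share of total score variance attributable to group membership, η² = SS_between / SS_total. Cohen's conventional benchmarks for η² are roughly .01 (small), .06 (medium), and .14 (large), so 0.06-0.11 falls in the medium range. The sketch below is minimal and assumes a single grouping factor. Factorial analyses often report partial η² (SS_effect / (SS_effect + SS_error)) instead, which this function does not compute.

```python
def eta_squared(groups):
    """One-way eta squared: between-group sum of squares over total sum of squares."""
    scores = [x for group in groups for x in group]
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    grand_mean = sum(scores) / len(scores)
    ss_total = sum((x - grand_mean) ** 2 for x in scores)
    if ss_total == 0:
        raise ValueError("eta squared is undefined when all scores are equal")
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    return ss_between / ss_total


def cohen_label(eta2):
    """Map eta squared onto Cohen's conventional benchmarks."""
    if eta2 >= 0.14:
        return "large"
    if eta2 >= 0.06:
        return "medium"
    if eta2 >= 0.01:
        return "small"
    return "negligible"


print(cohen_label(0.06), cohen_label(0.11))
```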

Table 2: Accuracy of Student Self-Assessment of Conceptions

| Assessment Dimension | Accuracy Level | Predicting Factors |
|---|---|---|
| Identification of intuitive conceptions | Moderate | Prior conceptual knowledge of scientific concepts [34] |
| Identification of scientific conceptions | Moderate | Prior conceptual knowledge and self-efficacy [34] |
| Overall self-assessment accuracy | Moderate with over-assessment tendency | Scientific knowledge strongest predictor [34] |
| Context-appropriate application | Improved with conditional knowledge | Explicit instruction on contextual appropriateness [33] |
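Accuracy and over-assessment can be quantified once each student statement carries two labels: the student's own "intuitive"/"scientific" judgment and an expert's coding. The sketch below is minimal and uses hypothetical operationalizations that need not match those used in [34]. Accuracy is simple agreement. The over-assessment rate is the share of expert-coded intuitive statements that the student labeled scientific.

```python
def conception_self_assessment(student_labels, expert_labels):
    """Agreement between student self-classification and expert coding.

    Labels are "intuitive" or "scientific", one pair per student statement.
    """
    if not student_labels or len(student_labels) != len(expert_labels):
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(student_labels, expert_labels))
    accuracy = sum(s == e for s, e in pairs) / len(pairs)
    # Student judgments on statements the expert coded as intuitive.
    on_intuitive = [s for s, e in pairs if e == "intuitive"]
    over = (sum(s == "scientific" for s in on_intuitive) / len(on_intuitive)
            if on_intuitive else None)
    return {"accuracy": accuracy, "over_assessment_rate": over}


# Hypothetical labels for four statements from one student.
print(conception_self_assessment(
    ["scientific", "scientific", "intuitive", "scientific"],
    ["scientific", "intuitive", "intuitive", "intuitive"],
))
```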

Core Protocol: Developing Conditional Metaconceptual Knowledge in Evolution

This protocol provides a detailed methodology for implementing conditional metaconceptual knowledge interventions in evolution education research settings, adaptable for various age groups and institutional contexts.

Primary Research Question: How does explicit instruction in conditional metaconceptual knowledge affect students' ability to appropriately apply intuitive versus scientific conceptions in evolutionary explanations?

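Experimental Design: 2×2 factorial design crossing self-assessment of conceptions (present/absent) with conditional metaconceptual knowledge instruction (present/absent), yielding four conditions: (1) self-assessment of conceptions only, (2) conditional metaconceptual knowledge instruction only, (3) combined intervention, and (4) control group receiving traditional evolution instruction [33].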

Participant Recruitment: Target N = 600+ for adequate statistical power. Recruit upper secondary biology students (ages 16-19) with basic evolution knowledge but documented alternative conceptions [33]. Ensure diverse demographic representation and obtain institutional IRB approval.
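The sketch below illustrates how the sample needed for the 2×2 design depends on the assumed effect size, on which effect must be detected (a main effect or the interaction), and on classroom clustering. It uses a two-sided normal approximation, which slightly understates the sample needed compared with exact noncentral-F calculations, so a dedicated tool such as G*Power or the power module in statsmodels is preferable for a real study. All function names are hypothetical, converting η² to Cohen's d via d = 2√(η²/(1 − η²)) assumes a two-level contrast, and the sketch does not reproduce how the 600+ target above was derived.

```python
from math import ceil, sqrt
from statistics import NormalDist


def _z_total(alpha: float, power: float) -> float:
    """z_(1 - alpha/2) + z_(power) for a two-sided test."""
    z = NormalDist()
    return z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)


def eta_sq_to_d(eta_sq: float) -> float:
    """Convert eta-squared to Cohen's d for a two-level contrast (d = 2f)."""
    return 2 * sqrt(eta_sq / (1 - eta_sq))


def n_per_cell_main_effect(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Cell size for a 2x2 main effect: each factor level pools two cells."""
    n_per_level = 2 * _z_total(alpha, power) ** 2 / d ** 2
    return ceil(n_per_level / 2)


def n_per_cell_interaction(delta: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Cell size for the 2x2 interaction contrast (difference of the two simple
    effects, in SD units); that contrast has variance 4 * sigma^2 / n."""
    return ceil(4 * _z_total(alpha, power) ** 2 / delta ** 2)


def design_effect(cluster_size: int, icc: float) -> float:
    """Variance inflation when students are sampled in intact classes."""
    return 1 + (cluster_size - 1) * icc


# Hypothetical inputs: the lower eta-squared bound from Table 1, classes of 25,
# and an assumed intraclass correlation of 0.05
d = eta_sq_to_d(0.06)
print(n_per_cell_main_effect(d), n_per_cell_interaction(d), design_effect(25, 0.05))
```

The interaction contrast needs roughly four times the cell size of a main effect of the same magnitude, and the design effect then multiplies the total, which is why factorial classroom studies tend to need large samples.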

Implementation Timeline: 6-8 week intervention integrated into the standard evolution curriculum, with pre-testing, post-testing, and a delayed post-test administered 8-12 weeks later to assess retention.
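A minimal scheduling sketch, assuming the phases are counted in whole teaching weeks from a chosen start date and, as one interpretation of the 8-12 week delay, that the delayed post-test is timed from the last day of Phase 4. Phase 3 runs weekly alongside the intervention, so it is not given its own block. `PHASES` and `build_schedule` are hypothetical names.

```python
from datetime import date, timedelta

# Hypothetical phase plan mirroring the protocol (1-based teaching weeks)
PHASES = [
    ("Phase 1: pre-assessment", 1, 1),
    ("Phase 2: intervention", 2, 5),
    ("Phase 4: post-assessment and analysis", 6, 8),
]


def build_schedule(start: date, delay_weeks: int = 8):
    """Return (label, first_day, last_day) tuples for each phase plus the
    delayed post-test, counted (by assumption) from the end of Phase 4."""
    if not 8 <= delay_weeks <= 12:
        raise ValueError("the protocol specifies an 8-12 week delay")
    schedule = []
    for label, first_week, last_week in PHASES:
        first_day = start + timedelta(weeks=first_week - 1)
        last_day = start + timedelta(weeks=last_week) - timedelta(days=1)
        schedule.append((label, first_day, last_day))
    delayed = schedule[-1][2] + timedelta(weeks=delay_weeks)
    schedule.append(("Delayed post-test", delayed, delayed))
    return schedule


for label, first, last in build_schedule(date(2025, 1, 6)):
    print(f"{label}: {first} to {last}")
```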

Phase 1: Pre-Assessment and Baseline Data Collection (Week 1)

Conceptual Knowledge Measurement:

- Administer validated evolution understanding instruments (e.g., Concept Inventory of Natural Selection)

- Include open-ended evolutionary scenarios that elicit both intuitive and scientific explanations

- Measure ability to identify appropriate contexts for different conceptions

Metaconceptual Awareness Assessment:

- Implement think-aloud protocols during evolution problem-solving

- Administer metaconceptual thinking scales adapted from established instruments

- Assess self-regulation strategies through scenario-based questionnaires

Affective and Cognitive Measures:

- Administer self-efficacy scales specific to evolution understanding

- Implement cognitive load measures using established instruments (e.g., the 9-point subjective rating scale listed in Table 3)

- Assess religiosity and evolution acceptance when relevant to research questions [20]

Phase 2: Intervention Implementation (Weeks 2-5)

Conditional Metaconceptual Knowledge Instruction:

- Conduct explicit teaching sessions comparing intuitive and scientific conceptions

- Implement contrasting cases showing appropriate and inappropriate contexts for different conceptions

- Facilitate structured discussions about conditions when intuitive thinking is useful versus when scientific conceptions are necessary

- Use worked examples demonstrating context-appropriate reasoning

Self-Assessment Protocol:

- Provide criterion-referenced self-assessment sheets with clear descriptions of intuitive and scientific conceptions

- Implement guided practice with authentic student explanations (anonymized)

- Conduct peer-assessment exercises with structured feedback protocols

- Facilitate metacognitive reflection on self-assessment accuracy

Integrated Activities:

- Implement "conception logs" in which students track their own use of ideas across contexts

- Conduct role-playing activities where students defend different conceptions in appropriate contexts

- Create conceptual maps showing relationships between ideas and their domains of applicability [35]

Phase 3: Formative Assessment and Progress Monitoring (Weekly)

Progress Tracking:

- Collect and analyze conception logs for patterns of metaconceptual development

- Administer brief metaconceptual awareness checks

- Conduct short cognitive load measurements during demanding tasks

- Implement self-efficacy monitoring for specific evolution topics
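Conception logs lend themselves to a simple longitudinal summary. The sketch below assumes a hypothetical log schema in which each entry records the student, teaching week, context, the conception used (intuitive or scientific), and a coder's judgement of whether that conception suited the context; it then reports, for each student and week, the share of entries judged context-appropriate. The schema and function names are illustrative, not taken from [33].

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEntry:
    student_id: str
    week: int
    context: str        # e.g. "everyday talk", "exam explanation"
    conception: str     # "intuitive" or "scientific"
    appropriate: bool   # coder's judgement of context fit


def appropriateness_by_week(entries):
    """Return {(student_id, week): share of entries judged appropriate}."""
    counts = defaultdict(lambda: [0, 0])  # key -> [appropriate, total]
    for e in entries:
        tally = counts[(e.student_id, e.week)]
        tally[0] += int(e.appropriate)
        tally[1] += 1
    return {key: hits / total for key, (hits, total) in sorted(counts.items())}


log = [
    LogEntry("s1", 2, "exam explanation", "intuitive", False),
    LogEntry("s1", 2, "everyday talk", "intuitive", True),
    LogEntry("s1", 3, "exam explanation", "scientific", True),
]
print(appropriateness_by_week(log))
```

A rising share across weeks for a student would be one indicator of developing conditional metaconceptual knowledge; the raw entries remain the material for qualitative pattern analysis.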

Intervention Fidelity:

- Audio-record instructional sessions for fidelity checks

- Use standardized observation protocols to document implementation quality

- Collect instructor reflections on intervention adaptations

Phase 4: Post-Assessment and Data Analysis (Weeks 6-8)

Outcome Measures:

- Readminister conceptual knowledge measures

- Implement transfer tasks assessing context-appropriate conception use

- Administer metaconceptual awareness and regulation assessments

- Measure self-efficacy and cognitive load

Qualitative Data Collection:

- Conduct semi-structured interviews with purposively selected participants

- Implement stimulated recall using students' own assessments

- Collect written reflections on conceptual development

Data Analysis Plan:

- Employ multivariate analyses of covariance controlling for pre-test scores

- Conduct mediation analyses examining mechanisms of change

- Implement qualitative content analysis of interview data

- Use mixed-methods approaches to integrate quantitative and qualitative findings
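For a single outcome, the core of the covariance adjustment can be shown with the standard one-way ANCOVA adjusted means, ȳ_g − b_w(x̄_g − x̄), where b_w is the pooled within-group slope of post-test on pre-test. This is a univariate simplification of the multivariate plan above and omits significance tests and the homogeneity-of-slopes check; in practice a statistics package (for example an OLS model `post ~ C(group) + pre` fitted with the statsmodels formula API) would be used. The function name and example data are hypothetical.

```python
from statistics import mean


def adjusted_means(groups):
    """groups: {name: [(pre, post), ...]} -> {name: covariate-adjusted post mean}.

    Uses the pooled within-group slope b_w, as in a one-way ANCOVA with the
    pre-test as the single covariate.
    """
    grand_pre = mean(pre for rows in groups.values() for pre, _ in rows)
    sxy = sxx = 0.0
    group_means = {}
    for name, rows in groups.items():
        pre_mean = mean(p for p, _ in rows)
        post_mean = mean(q for _, q in rows)
        group_means[name] = (pre_mean, post_mean)
        sxy += sum((p - pre_mean) * (q - post_mean) for p, q in rows)
        sxx += sum((p - pre_mean) ** 2 for p, _ in rows)
    if sxx == 0:
        raise ValueError("pre-test scores do not vary within groups")
    b_within = sxy / sxx
    return {
        name: post_mean - b_within * (pre_mean - grand_pre)
        for name, (pre_mean, post_mean) in group_means.items()
    }


data = {
    "A": [(1, 3), (2, 4), (3, 5)],  # lower pre-test, gains of 2
    "B": [(3, 4), (4, 5), (5, 6)],  # higher pre-test, gains of 1
}
print(adjusted_means(data))  # → {'A': 5.0, 'B': 4.0}
```

In the example, group B has the higher raw post-test mean (5 vs. 4), but after adjusting for its higher pre-test scores group A comes out ahead, which is exactly the confound that controlling for pre-test scores removes.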

Visualization of Theoretical Framework and Mechanisms

Figure: Theoretical framework of conditional metaconceptual knowledge development

Experimental Workflow and Implementation Protocol

Figure: Experimental workflow for the metaconceptual knowledge intervention

Research Instruments and Essential Materials

Table 3: Essential Research Materials and Assessment Tools

| Tool/Instrument | Primary Function | Implementation Specifications | Validation Evidence |
|---|---|---|---|
| Criterion-Referenced Self-Assessment Sheets | Formative assessment of intuitive and scientific conceptions | 4-point scales with exemplars; administered weekly | High inter-rater reliability (Cohen's κ > 0.80) [33] |
| Conceptual Knowledge Tests (CINS, MUM) | Measure evolution understanding | Pre-post-delayed design; counterbalanced forms | Established validity and reliability in evolution education [20] |
| Metaconceptual Awareness Scale | Assess awareness of one's conceptions | 20-item Likert scale; measures monitoring and evaluation | Good internal consistency (α = 0.82-0.89) [33] |
| Cognitive Load Measure | Assess mental load during tasks | 9-point subjective rating scale; multiple time points | Validated in educational psychology research [33] |
| Self-Efficacy Scales | Measure confidence in evolution understanding | Domain-specific 6-item scale; pre-post administration | Strong psychometric properties (α = 0.88) [33] |
| Conception Logs | Track conception use across contexts | Structured templates for weekly reflection | Qualitative validation through think-aloud protocols |
| Conditional Knowledge Assessment | Measure context-appropriate reasoning | Scenario-based with explanation prompts | Content validity established through expert review |
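Table 3 reports Cohen's κ for the self-assessment sheets and Cronbach's α for the scales. The sketch below computes both from their standard definitions, κ = (p_o − p_e)/(1 − p_e) and α = k/(k − 1) · (1 − Σσ²_item / σ²_total), so that reliability can be checked on a project's own data. Function names and example codes are hypothetical; sample variances are used throughout.

```python
from collections import Counter
from statistics import variance


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters' category codes."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("need two non-empty code lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance from each rater's marginal frequencies
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa undefined: both raters used one identical category")
    return (observed - expected) / (1 - expected)


def cronbach_alpha(rows):
    """rows: one list of item scores per respondent, items in the same order."""
    if len(rows) < 2 or len(rows[0]) < 2:
        raise ValueError("need at least two items and two respondents")
    k = len(rows[0])
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    total_var = variance([sum(r) for r in rows])
    if total_var == 0:
        raise ValueError("total scores do not vary")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# "I" = intuitive, "S" = scientific conception, as coded by two raters
kappa = cohens_kappa(["I", "I", "S", "S"], ["I", "S", "S", "S"])
alpha = cronbach_alpha([[1, 1], [2, 3], [3, 2]])
print(round(kappa, 3), round(alpha, 3))
```

Here the raters agree on three of four codes (p_o = 0.75), but their marginals imply p_e = 0.5, so κ is only 0.5; this gap between raw agreement and κ is why the table reports the chance-corrected statistic.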

Adaptation and Implementation Guidelines

Contextual Adaptations

For Highly Religious Student Populations: Research indicates religiosity moderates the relationship between evolution understanding and acceptance [20]. For these populations, emphasize the conditional nature of scientific explanations and explicitly address perceived conflicts between religious and scientific ways of knowing. Focus on microevolutionary concepts initially, as these typically show higher acceptance across religiosity levels [20].

For Different Educational Levels:

- Secondary students: Use concrete examples and gradual introduction of metaconceptual language

- Undergraduate biology majors: Integrate with experimental design and research experiences [36]

- Graduate and professional populations: Focus on application to research practice and experimental interpretation

For Various Evolution Topics:

- Natural selection: Contrast intentionality-based vs. selection-based explanations

- Macroevolution: Address essentialist vs. population-based thinking

- Human evolution: Explicitly tackle anthropocentric reasoning patterns

Implementation Quality Indicators

High-Fidelity Implementation Markers:

- Consistent use of metaconceptual language by instructors

- Regular, criterion-referenced self-assessment

- Explicit discussion of contextual appropriateness

- Integration of conditional knowledge across evolution topics

- Appropriate pacing to manage cognitive load

Common Implementation Challenges:

- Student resistance to examining intuitive conceptions

- Instructor tendency to revert to traditional instruction

- Underestimation of time needed for metacognitive processes

- Difficulty maintaining intervention fidelity across multiple instructors

- Challenge of balancing conceptual coverage with metaconceptual depth

The development of conditional metaconceptual knowledge represents a significant advancement in evolution education research, addressing the core challenge of helping students appropriately regulate their intuitive conceptions in scientific contexts. The experimental protocols outlined here provide a structured methodology for investigating and implementing this approach across diverse educational settings.

Future research directions should include longitudinal studies tracking the development of metaconceptual competence over extended periods, investigations of neural correlates of metaconceptual thinking using neuroimaging methods, and development of technology-enhanced tools for supporting metaconceptual awareness. Additionally, research exploring the transfer of metaconceptual abilities across biological subdisciplines and into professional practice would strengthen our understanding of the broader impacts of this approach.

For drug development professionals and research scientists, these protocols offer methodologies for enhancing conceptual sophistication and context-appropriate reasoning in complex biological systems. The emphasis on conditional knowledge and self-regulation aligns with the cognitive demands of interdisciplinary research and evidence-based decision making in pharmaceutical development and clinical applications.

Designing Pre-Assessments and Reflective Journals to Activate Prior Knowledge

Application Notes: Theoretical and Practical Foundations

Activating prior knowledge is a critical metacognitive exercise in evolution education research, enabling researchers to establish baseline understanding and scaffold complex concepts like natural selection, genetic drift, and phylogenetic analysis. The pedagogical approach of pre-teaching provides a theoretical framework for designing pre-assessments, emphasizing that meaningful learning occurs when new information integrates into existing cognitive structures [37]. This connection is vital for evolution education, where learners often hold persistent misconceptions that must be identified and addressed before introducing advanced research methodologies.

For scientific professionals, these tools serve dual purposes: they function as formative assessment mechanisms that reveal knowledge gaps, and they activate the relevant prior knowledge on which complex experimental protocols can then build. The INVO model (integrating cognition, motivation, volition, and emotion) offers a comprehensive framework for designing these research tools, ensuring they address not only cognitive dimensions but also the motivational-volitional components essential for sustained engagement with challenging evolutionary concepts [37].

Reflective journals serve as powerful metacognitive instruments that extend beyond simple documentation. When implemented with structured prompts, they foster critical thinking about one's own learning process and conceptual development, a crucial capacity for researchers navigating the paradigm-shifting nature of evolutionary biology [38]. The cyclical process of recording experiences, reflecting on them, and analyzing them for deeper learning can foster new scientific understanding and innovation [39].

Experimental Protocols

Protocol for Pre-Assessment Design and Implementation

Objective: To create and administer pre-assessments that effectively activate and diagnose prior knowledge in evolution education research contexts.

Materials:

- Pre-assessment questions targeting key evolutionary concepts

- Response recording system (digital or paper-based)

- Data analysis framework for knowledge gap identification

Procedure:

Diagnostic Question Development (Duration: 3-5 days)

- Identify 5-7 core evolutionary concepts essential for understanding the upcoming research module (e.g., mechanisms of speciation, molecular clock calculations, selective pressure analysis)

- Formulate open-ended questions that require explanation rather than simple recall

- Sequence questions from fundamental to complex to scaffold thinking

- Validate questions with subject matter experts for content accuracy

Pre-Assessment Administration (Duration: 45-60 minutes)

- Provide context by explaining the diagnostic purpose to participants

- Instruct participants to answer all questions without external resources

- Emphasize that responses should reflect current understanding and will not be penalized

- Collect responses through appropriate medium (digital platform preferred for data analysis)

Response Analysis and Categorization (Duration: 2-3 days)

- Create a scoring rubric with the following categories:

  - Accurate Understanding: Scientifically correct explanations

  - Partial Understanding: Mixed accurate and inaccurate elements

  - Misconceptions: Persistent incorrect evolutionary beliefs

  - Knowledge Gaps: Omitted or unrecognized concepts

- Code all responses according to the established rubric

- Quantify frequencies across categories for each evolutionary concept
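Once responses are coded, the frequency step above amounts to a cross-tabulation of concept by rubric category. A minimal sketch, assuming coded responses are stored as (concept, category) pairs; the function name and example data are hypothetical.

```python
from collections import Counter, defaultdict

# The four rubric categories used for coding
CATEGORIES = (
    "Accurate Understanding",
    "Partial Understanding",
    "Misconceptions",
    "Knowledge Gaps",
)


def tally_by_concept(coded_responses):
    """coded_responses: iterable of (concept, category) pairs.

    Returns {concept: {category: count}} with every category present, so
    concepts can be compared row by row when planning the intervention.
    """
    table = defaultdict(Counter)
    for concept, category in coded_responses:
        if category not in CATEGORIES:
            raise ValueError(f"unknown rubric category: {category!r}")
        table[concept][category] += 1
    return {
        concept: {cat: counts[cat] for cat in CATEGORIES}
        for concept, counts in table.items()
    }


coded = [
    ("speciation", "Misconceptions"),
    ("speciation", "Partial Understanding"),
    ("speciation", "Misconceptions"),
    ("molecular clock", "Knowledge Gaps"),
]
for concept, row in tally_by_concept(coded).items():
    print(concept, row)
```

Rejecting unknown category labels guards against coding typos silently creating a fifth category.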

Intervention Planning (Duration: 1-2 days)

- Design targeted instructional materials addressing identified misconceptions

- Develop concept inventories for ongoing monitoring