Assessing the Power of Introgression Detection Methods: A Comparative Guide for Genomic Researchers

Abstract

Accurately detecting the direction of introgression—the transfer of genetic material between species or populations—is crucial for evolutionary biology, drug target discovery, and understanding disease genetics. This article provides a comprehensive assessment of the power and limitations of modern introgression detection algorithms. We explore the foundational principles of 12 representative methods, including tree-based, statistical, and signal-processing approaches like S*, D-statistics, IBDmix, and IntroMap. For a research-focused audience, we detail methodological applications, troubleshoot common pitfalls like false positives from homoplasy, and present a rigorous validation framework. A key finding from recent research is that downstream analyses can yield different conclusions depending on the introgression map used, underscoring the need for a multi-method approach to ensure robust, reproducible results in biomedical research.

The Introgression Landscape: Core Concepts and Methodological Foundations

Introgression, the transfer of genetic material between species or distinct populations through hybridization and repeated backcrossing, represents a powerful evolutionary force with far-reaching implications across the tree of life. Once considered primarily a homogenizing process, research over the past decade has revealed that introgression serves as a significant mechanism for adaptation, enabling species to acquire beneficial alleles that facilitate rapid response to environmental challenges [1]. This process has been documented extensively in eukaryotes—most famously through Neanderthal introgression in modern humans—and increasingly in bacteria, where it challenges traditional concepts of species boundaries [2] [3].

The detection and analysis of introgressed genomic regions have become sophisticated endeavors, employing diverse methodological approaches including summary statistics, probabilistic modeling, and supervised learning [4]. Each method offers distinct advantages and limitations, with performance varying across evolutionary scenarios, taxonomic groups, and genomic contexts. This guide provides a systematic comparison of introgression detection methods, their experimental protocols, and their applications across biological systems—from archaic hominin DNA to bacterial core genomes—enabling researchers to select optimal approaches for their specific study systems.

Methodological Approaches for Detecting Introgression

Categories of Detection Methods

Current methods for identifying introgressed sequences fall into three primary categories, each with distinct theoretical foundations and implementation requirements. Summary statistics-based methods utilize population genetic metrics such as D-statistics and fd statistics to detect signatures of introgression from patterns of allele sharing [4]. These approaches benefit from computational efficiency and minimal demographic assumptions but offer limited power for pinpointing exact introgressed tracts. Probabilistic modeling methods employ hidden Markov models (HMMs) and related frameworks to infer introgression based on explicit demographic models. diCal-admix exemplifies this category, modeling the genealogical process along genomes to detect introgressed tracts while accounting for population history [5]. Supervised machine learning approaches such as VolcanoFinder, Genomatnn, and MaLAdapt leverage training datasets to classify genomic regions as introgressed or non-introgressed based on multiple features [6]. These methods can capture complex patterns but require extensive training data and may be sensitive to model misspecification.

Performance Comparison Across Methods

Comprehensive evaluations reveal that method performance varies significantly across evolutionary scenarios. A recent benchmark study tested VolcanoFinder, Genomatnn, and MaLAdapt on simulated datasets reflecting diverse divergence and migration times inspired by human, wall lizard (Podarcis), and bear (Ursus) lineages [6]. The results, summarized in Table 1, indicate that methods based on the Q95 summary statistic generally offer the best balance of power and precision for exploratory studies, particularly when accounting for the hitchhiking effects of adaptively introgressed mutations on flanking regions [6].

Table 1: Performance Comparison of Introgression Detection Methods

| Method | Category | Optimal Scenario | Strengths | Limitations |
|---|---|---|---|---|
| diCal-admix | Probabilistic modeling | Model-based detection in known demographic histories | Explicit demographic modeling; accurate tract length estimation | Performance depends on correct demographic model [5] |
| VolcanoFinder | Supervised learning | Adaptive introgression detection | Effectiveness in detecting selective sweeps from introgression | Variable performance across divergence times [6] |
| Genomatnn | Supervised learning | Complex introgression scenarios | Handles various introgression scenarios | Performance varies across evolutionary scenarios [6] |
| MaLAdapt | Supervised learning | Limited training data | Efficient with limited data | Lower power in some scenarios [6] |
| Q95-based methods | Summary statistics | Exploratory studies | Balanced performance; minimal assumptions | Less precise for tract boundary identification [6] |

Performance depends critically on evolutionary parameters including divergence time, migration rate, population size, selection strength, and recombination landscape [6]. Methods generally perform better with recent introgression events and stronger selection coefficients, while performance declines with increasing divergence between source and recipient populations. The genomic context of introgressed regions also significantly impacts detection power, with methods struggling more in low-recombination regions and near selective sweeps [6].

Introgression Across Biological Systems

Hominin Introgression

The study of Neanderthal introgression in modern humans represents a paradigm for understanding archaic introgression patterns and functional consequences. Genomic analyses reveal that 1-4% of genomes of present-day people outside Africa derive from Neanderthal ancestors, with these introgressed regions exhibiting distinct evolutionary fates [7]. Some Neanderthal alleles facilitated human adaptation to novel environments, including climate conditions, UV exposure levels, and pathogens, while others had deleterious consequences and were selectively removed [7].

Application of diCal-admix to 1000 Genomes Project data has revealed long regions depleted of Neanderthal ancestry that are enriched for genes, consistent with weak selection against Neanderthal variants [5]. This pattern appears driven primarily by higher genetic load in Neanderthals resulting from small effective population size rather than widespread Dobzhansky-Müller incompatibilities [5]. Notably, the X-chromosome shows particularly low levels of introgression, though the mechanistic basis for this pattern remains debated [5] [7]. Conversely, Neanderthal ancestry shows significant enrichment in genes related to hair and skin traits (keratin pathways), suggesting adaptive introgression helped modern humans adapt to non-African environments [5] [7].

Bacterial Introgression

While bacteria reproduce asexually, homologous recombination facilitates pervasive gene flow that shapes their evolution. Quantitative analyses across 50 major bacterial lineages reveal that introgression—defined here as gene flow between core genomes of distinct species—averages 2% of core genes but reaches 14% in highly recombinogenic genera like Escherichia-Shigella [2]. This challenges operational species definitions based solely on sequence identity thresholds (e.g., 95% ANI), as interruption of gene flow occurs across a range of sequence identities (90-98%) depending on the lineage [3].

Table 2: Introgression Patterns Across Bacterial Lineages

| Bacterial Group | Average Introgression Level | Notable Features | Implications for Species Definition |
|---|---|---|---|
| Escherichia–Shigella | Up to 14% of core genes | High recombination frequency | Porous species boundaries [2] |
| Campylobacter | ~20% of genome in some species | Gene flow between highly divergent species | Fuzzy species borders [2] |
| Neisseria | Variable | Recombinogenic nature | Historically noted "fuzzy" species [2] |
| Cronobacter | High levels | Extensive introgression | Challenges species delimitation [2] |
| Endosymbionts | Minimal | Clonal evolution | Clear species borders [3] |

Truly clonal bacterial species are remarkably rare, with only 2.6% of analyzed species showing no evidence of recombination [3]. These exceptional cases primarily include endosymbionts like Buchnera aphidicola with restricted access to exogenous DNA [3]. For most bacteria, homologous recombination maintains species cohesiveness while occasional introgression introduces adaptive variation across species boundaries, analogous to processes in sexual organisms [2] [3].

Experimental evolution studies with Escherichia coli demonstrate that high rates of conjugation-mediated recombination can sometimes overwhelm selection: donor DNA segments reach fixation because they are physically linked to transfer origins, not because they confer a selective advantage [8]. This highlights how the mechanistic features of bacterial gene transfer can produce evolutionary outcomes distinct from eukaryotic introgression.

Experimental Protocols for Introgression Analysis

Probabilistic Modeling with diCal-admix

The diCal-admix method employs a hidden Markov model framework to detect introgressed tracts while explicitly incorporating demographic history [5]. The protocol begins with data preparation, requiring genomic sequences from the target population, putative source population, and outgroup. For Neanderthal introgression studies, this typically includes modern non-African individuals, Neanderthal reference genomes, and African individuals as an outgroup [5].

Next, model parameterization establishes key demographic parameters including divergence times, population sizes, migration rates, and introgression timing. For human-Neanderthal analyses, standard parameters include a Neanderthal-modern human divergence at 26,000 generations (650 kya), an African/non-African split at 4,000 generations (100 kya), an introgression event at 2,000 generations (50 kya), and an introgression coefficient of 3% [5]; the conversions to years correspond to a generation time of 25 years. The HMM implementation then computes the probability of introgression along genomic windows based on patterns of haplotype sharing and differentiation, generating posterior probabilities for Neanderthal ancestry across the genome [5].

Validation through extensive simulations confirms method robustness to parameter misspecification, though accurate demographic modeling significantly enhances performance [5]. The output consists of genomic tracts with high posterior probability of introgression, which can be further analyzed for functional enrichment and selective signatures.

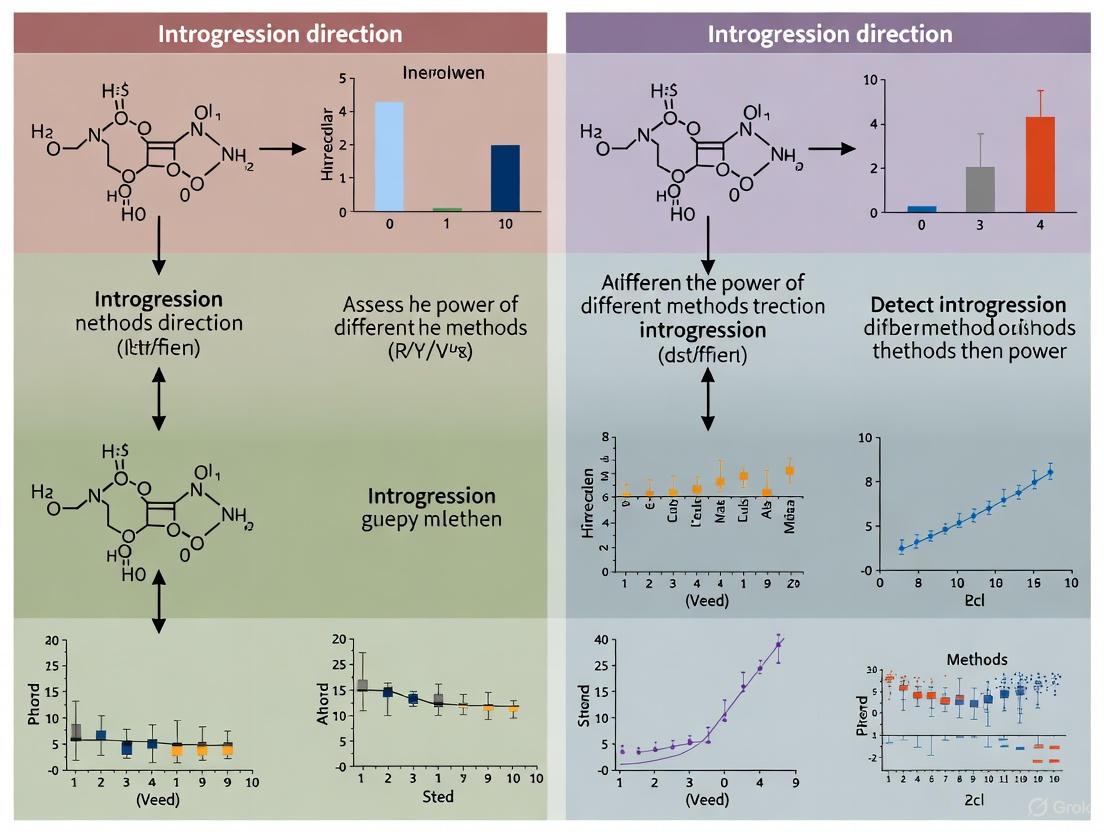

Figure 1: Workflow for model-based introgression detection using diCal-admix and related probabilistic approaches
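To make the posterior-probability step concrete, the following is a minimal sketch of forward-backward decoding in a two-state HMM (state 0 = non-introgressed, state 1 = introgressed). It is a toy illustration, not the diCal-admix model: the transition and emission values, the binary "matches archaic reference" observation, and all function names are assumptions chosen for readability. Only the 3% prior is taken from the introgression coefficient quoted above.

```python
def forward_backward(obs, init, trans, emit):
    """Posterior state probabilities for a discrete HMM.

    obs   : list of observation indices, one per genomic window
    init  : init[s]     = prior probability of state s in the first window
    trans : trans[r][s] = probability of moving from state r to state s
    emit  : emit[s][o]  = probability of observing o in state s
    Returns post[t][s], the posterior probability of state s in window t.
    """
    n, k = len(obs), len(init)

    # Forward pass, rescaled at every step to avoid numerical underflow.
    fwd = []
    cur = [init[s] * emit[s][obs[0]] for s in range(k)]
    total = sum(cur)
    fwd.append([c / total for c in cur])
    for t in range(1, n):
        cur = [
            emit[s][obs[t]] * sum(fwd[t - 1][r] * trans[r][s] for r in range(k))
            for s in range(k)
        ]
        total = sum(cur)
        fwd.append([c / total for c in cur])

    # Backward pass, also rescaled.
    bwd = [[1.0] * k for _ in range(n)]
    for t in range(n - 2, -1, -1):
        cur = [
            sum(trans[s][r] * emit[r][obs[t + 1]] * bwd[t + 1][r] for r in range(k))
            for s in range(k)
        ]
        total = sum(cur)
        bwd[t] = [c / total for c in cur]

    # Combine and normalize per window.
    post = []
    for t in range(n):
        weights = [fwd[t][s] * bwd[t][s] for s in range(k)]
        total = sum(weights)
        post.append([w / total for w in weights])
    return post


# Hypothetical parameters (assumptions for illustration only).
init = [0.97, 0.03]                   # 3% prior for the introgressed state
trans = [[0.99, 0.01], [0.05, 0.95]]  # tracts are rare but persist once entered
emit = [[0.9, 0.1], [0.2, 0.8]]       # observation 1 = window matches archaic reference
obs = [0, 0, 1, 1, 1, 1, 0, 0]

posterior = forward_backward(obs, init, trans, emit)
for window, probs in enumerate(posterior):
    print(window, round(probs[1], 3))
```

Windows whose posterior for state 1 exceeds a chosen cutoff would be merged into candidate tracts. A real analysis would use emissions and transitions derived from the demographic model, not fixed constants.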

Bacterial Introgression Detection

Protocols for detecting introgression in bacteria differ significantly from eukaryotic approaches due to distinct genetic system properties. The standard workflow begins with genome collection and core gene identification, assembling a comprehensive dataset of bacterial genomes within a target lineage and identifying orthologous core genes present in most strains [2].

Next, species delineation employs Average Nucleotide Identity (ANI) thresholds (typically 94-96%) to classify genomes into operational species units, followed by phylogenomic reconstruction using maximum likelihood methods on concatenated core genome alignments [2]. The core analytical step involves phylogenetic incongruence analysis, where individual gene trees are compared against the species tree to identify potential introgression events [2].

A gene is considered introgressed when it satisfies two criteria: (1) it forms a monophyletic clade with sequences from a different species that is inconsistent with the core genome phylogeny, and (2) it is statistically more similar to sequences from a different species than to sequences from its own species [2]. Finally, biological species concept refinement adjusts initial ANI-based species boundaries based on patterns of gene flow, reducing inflated introgression estimates between recently diverged populations [2].
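The two criteria can be sketched as a simple filter. This is an illustrative simplification, not the published pipeline: the gene tree is represented as a list of clades (sets of strain names), species are assumed to be monophyletic in the core genome tree, and the statistical comparison in criterion (2) is reduced to a comparison of mean distances. All names and values are hypothetical.

```python
from statistics import mean


def smallest_clade_with(clades, leaf):
    """Return the smallest multi-leaf clade of the gene tree that contains `leaf`."""
    candidates = [c for c in clades if leaf in c and len(c) > 1]
    return min(candidates, key=len) if candidates else None


def is_candidate_introgressed(focal, species_of, gene_tree_clades, distances):
    """Apply simplified versions of the two criteria to one gene of one strain."""
    own = species_of[focal]

    # Criterion 1 (simplified): in the gene tree, the focal sequence groups
    # exclusively with sequences from a single other species.
    clade = smallest_clade_with(gene_tree_clades, focal)
    if clade is None:
        return False
    partners = {species_of[leaf] for leaf in clade if leaf != focal}
    if len(partners) != 1 or own in partners:
        return False
    other = next(iter(partners))

    # Criterion 2 (simplified): the focal sequence is on average closer to the
    # other species than to its own. A real analysis would use a statistical test.
    d_own = [distances[frozenset((focal, s))] for s in species_of
             if s != focal and species_of[s] == own]
    d_other = [distances[frozenset((focal, s))] for s in species_of
               if species_of[s] == other]
    if not d_own or not d_other:
        return False
    return mean(d_other) < mean(d_own)


# Hypothetical toy data: strain a3 (species A) carries a B-like copy of the gene.
species_of = {"a1": "A", "a2": "A", "a3": "A", "b1": "B", "b2": "B"}
gene_tree_clades = [{"a3", "b1"}, {"a3", "b1", "b2"}, {"a1", "a2"}]
distances = {
    frozenset(("a3", "b1")): 0.01,
    frozenset(("a3", "b2")): 0.02,
    frozenset(("a3", "a1")): 0.08,
    frozenset(("a3", "a2")): 0.09,
}

print(is_candidate_introgressed("a3", species_of, gene_tree_clades, distances))
print(is_candidate_introgressed("a1", species_of, gene_tree_clades, distances))
```

In this toy data set the gene copy in a3 is flagged, while a1, which groups with its own species, is not.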

Research Reagent Solutions

Successful introgression studies require specialized analytical tools and genomic resources. Key reagents and their applications across study systems include:

Table 3: Essential Research Reagents for Introgression Studies

| Reagent/Tool | Category | Function | Application Examples |
|---|---|---|---|
| Reference Genomes | Genomic data | Provides basis for sequence alignment and variant calling | Neanderthal genome (Altai); Bacterial reference strains [5] [2] |
| Outgroup Sequences | Genomic data | Enables polarization of ancestral/derived alleles | African genomes for Neanderthal introgression; Distantly related bacterial species [5] [3] |
| diCal-admix Software | Analytical tool | HMM-based introgression detection | Neanderthal tract identification in 1000 Genomes data [5] |
| VolcanoFinder | Analytical tool | Machine learning approach for adaptive introgression | Performance testing across multiple lineages [6] |
| ANI Calculator | Bioinformatics tool | Species delineation in bacteria | Defining bacterial species boundaries [2] [3] |
| Phylogenetic Software | Analytical tool | Species and gene tree reconstruction | Detecting phylogenetic incongruence in bacterial genes [2] |

Introgression represents a fundamental evolutionary process with comparable importance across biological domains, from Neanderthal DNA in modern humans to core genome exchanges in bacteria. Detection methods perform variably across evolutionary scenarios, with summary statistics (particularly Q95-based approaches) offering robust exploratory power, while model-based methods like diCal-admix provide finer-scale inference when demographic history is well-characterized [6] [5]. Bacterial systems present unique challenges and opportunities, with homologous recombination maintaining species cohesion while permitting adaptive introgression across porous species boundaries [2] [3].

Future methodological development should focus on improving detection power for ancient introgression events, distinguishing adaptive from neutral introgression, and integrating across taxonomic divides to develop unified theoretical frameworks. The continued expansion of genomic datasets across diverse taxa, coupled with benchmarking studies under realistic evolutionary scenarios, will further refine our ability to decode genomic landscapes of introgression and understand its creative role in evolution [4].

Statistical power, defined as the probability that a test will correctly reject a false null hypothesis, is a foundational concept that critically influences the reliability of research in both evolutionary genetics and biomedical science. Low statistical power significantly increases the likelihood that statistically significant findings represent false positive results and inflates the estimated magnitude of true effects when they are discovered [9]. In evolutionary biology, this translates to uncertainty in detecting introgression and inferring evolutionary history, while in biomedical research, it undermines the validity of associations between biological parameters and disease. Evidence suggests that underpowered studies are widespread, with one analysis of biomedical literature revealing that approximately 50% of studies have statistical power in the 0-20% range, far below the conventional 80% threshold considered adequate [9]. This review compares the performance of various methods for detecting introgression, with a specific focus on their statistical power and practical applications, providing researchers with a framework for selecting appropriate methodologies based on their specific investigative needs and constraints.
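As a worked illustration of the definition, the sketch below computes the power of a two-sided z-test from the ratio of the true effect to its standard error. It is a generic textbook calculation, not a procedure taken from the cited studies; the function name and the example values are assumptions. It applies wherever a test statistic is approximately normal, for example a statistic divided by its jackknife standard error.

```python
from statistics import NormalDist


def z_test_power(effect, standard_error, alpha=0.05):
    """Power of a two-sided z-test when the true effect is `effect`.

    Under the alternative, the Z-score is normal with mean effect / SE and
    unit variance; power is the probability that |Z| exceeds the critical value.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect / standard_error
    return std_normal.cdf(-z_crit - shift) + 1 - std_normal.cdf(z_crit - shift)


# With no true effect, the rejection rate equals the false positive rate alpha.
print(round(z_test_power(0.0, 1.0), 3))

# Power for a range of hypothetical effect sizes, in units of the standard error.
for shift in (0.5, 1.0, 2.0, 2.8, 4.0):
    print(shift, round(z_test_power(shift, 1.0), 3))
```

An effect of about 2.8 standard errors is needed to reach the conventional 80% power at alpha = 0.05. Studies whose expected effect is well below that fall into the underpowered range described above.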

Comparative Performance of Introgression Detection Methods

The detection of introgression—the transfer of genetic material between species or populations through hybridization—relies on identifying genomic regions that show unexpected similarity between taxa. Different methods have been developed to detect these patterns, each with varying strengths, power, and susceptibility to confounding factors. The table below summarizes the key characteristics of several prominent methods.

Table 1: Comparison of Methods for Detecting Introgression

| Method | Underlying Principle | Data Requirements | Power & Strengths | Limitations & Vulnerabilities |
|---|---|---|---|---|
| D-statistic (ABBA-BABA) | Compares frequencies of discordant site patterns to detect gene tree heterogeneity [10]. | A minimum of four lineages (e.g., P1, P2, P3, Outgroup); works with a single sequence per species [10]. | High power to detect introgression between non-sister lineages; robust to selection [10]. | Requires an outgroup; cannot be used for sister species pairs [11]. |
| dXY | Measures the average pairwise sequence divergence between two populations [11]. | Can use single or multiple sequences per species; does not require phased data or an outgroup. | Robust to the effects of linked selection; provides an intuitive measure of divergence [11]. | Low sensitivity to recent or low-frequency introgression; confounded by variation in mutation rate [11]. |
| dmin | Identifies the minimum sequence distance between any pair of haplotypes from two taxa [11]. | Requires phased haplotypes from multiple individuals per species. | High power to detect rare introgressed lineages, as it focuses on the most similar haplotypes [11]. | Highly sensitive to variation in the neutral mutation rate; requires accurate phasing. |
| Gmin | The ratio of dmin to dXY, normalizing for background divergence [11]. | Requires phased haplotypes from multiple individuals per species. | More robust to mutation rate variation than dmin alone while retaining sensitivity to recent migration [11]. | Still requires phased data; power can be reduced by high background divergence. |
| RNDmin | A modified dmin statistic normalized by divergence to an outgroup [11]. | Requires an outgroup species; works with phased data from multiple individuals. | Robust to variation in mutation rate and inaccurate divergence time estimates [11]. | Modest power increase over related tests; requires an outgroup. |
| Convolutional Neural Networks (CNNs) | Deep learning models trained on genotype matrices to identify complex patterns of introgression and selection [12]. | Genomic windows with data from donor, recipient, and outgroup populations; can use unphased data. | Very high accuracy (~95%); can jointly model introgression and positive selection (adaptive introgression) [12]. | "Black box" nature makes it difficult to interpret which features drive the prediction; requires extensive training data. |

Experimental Protocols for Key Methods

Protocol for D-Statistic Analysis

The D-statistic is a powerful, widely used method for detecting introgression, based on counting discordant site patterns in an alignment. The following workflow outlines its key steps [10]:

- Sequence Alignment and Filtering: Generate a whole-genome alignment for the three ingroup populations (P1, P2, P3) and an outgroup (O). P1 and P2 are the tested sister species, and P3 is the potential introgressing lineage. Filter for neutral, biallelic sites to avoid confounding effects from selection.

- Site Pattern Counting: For each site, polarize the alleles against the outgroup (A = ancestral, B = derived) and count the two informative discordant patterns, written in the order P1, P2, P3, O:

  - ABBA: P2 and P3 share the derived allele, while P1 and O carry the ancestral allele.

  - BABA: P1 and P3 share the derived allele, while P2 and O carry the ancestral allele.

- Calculate the D-statistic: Compute the statistic across all considered sites using the formula:

  D = (∑ABBA - ∑BABA) / (∑ABBA + ∑BABA)

  A significant deviation from zero (often assessed via block jackknifing) indicates an excess of gene tree discordance, which is consistent with introgression between P2 and P3 (if D > 0) or P1 and P3 (if D < 0).

- Account for Incomplete Lineage Sorting (ILS): The null hypothesis of the D-statistic is that all gene tree discordance is due to ILS. The test is powerful because it expects the two discordant topologies (ABBA and BABA) to be equally frequent under ILS alone; introgression causes a systematic skew in their frequencies [10].
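The counting and jackknife steps above can be sketched in a few lines. This is a minimal illustration under simplifying assumptions: one sequence per lineage, alleles already coded as 0 (ancestral) or 1 (derived) with respect to the outgroup, and an unweighted delete-one block jackknife (production tools typically weight blocks by size). Function names and the toy counts are hypothetical.

```python
import math


def count_patterns(sites):
    """Count ABBA and BABA sites.

    Each site is a tuple (p1, p2, p3, outgroup) of 0/1 alleles, where 0 is the
    ancestral (outgroup) state and 1 the derived state.
    """
    abba = baba = 0
    for p1, p2, p3, out in sites:
        if out != 0:
            continue  # site is not polarized by the outgroup; skip it
        if (p1, p2, p3) == (0, 1, 1):
            abba += 1
        elif (p1, p2, p3) == (1, 0, 1):
            baba += 1
    return abba, baba


def d_statistic(abba, baba):
    """D = (ABBA - BABA) / (ABBA + BABA); defined as 0.0 if no informative sites."""
    total = abba + baba
    return (abba - baba) / total if total else 0.0


def block_jackknife(blocks):
    """Delete-one block jackknife over per-block (abba, baba) counts.

    Returns (D, standard error, Z-score). Blocks are weighted equally here.
    """
    n = len(blocks)
    total_abba = sum(a for a, _ in blocks)
    total_baba = sum(b for _, b in blocks)
    d_full = d_statistic(total_abba, total_baba)
    # Recompute D with each block left out in turn.
    leave_one_out = [d_statistic(total_abba - a, total_baba - b) for a, b in blocks]
    mean_loo = sum(leave_one_out) / n
    variance = (n - 1) / n * sum((d - mean_loo) ** 2 for d in leave_one_out)
    se = math.sqrt(variance)
    z = d_full / se if se > 0 else float("nan")
    return d_full, se, z


# Toy alignment: three ABBA sites, one BABA site, one uninformative BBAA site.
sites = [(0, 1, 1, 0)] * 3 + [(1, 0, 1, 0)] + [(1, 1, 0, 0)]
abba, baba = count_patterns(sites)
print(abba, baba, d_statistic(abba, baba))  # 3 1 0.5

# Hypothetical per-block counts from four genomic blocks.
blocks = [(10, 5), (12, 6), (8, 4), (11, 5)]
d, se, z = block_jackknife(blocks)
print(round(d, 3), round(se, 4), round(z, 1))
```

A positive D with a large Z-score would point to excess allele sharing between P2 and P3. With only four blocks the standard error is not meaningful; real analyses use many blocks that are long relative to the scale of linkage disequilibrium.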

Protocol for RNDmin Analysis

The RNDmin method is particularly useful for detecting introgression between sister species. The protocol below details its implementation [11]:

- Data Preparation and Phasing: Obtain whole-genome sequence data from multiple individuals for the two sister species (X and Y) and an outgroup (O). Phase the haplotypes to resolve individual chromosomes.

- Calculate Minimum Divergence (dmin): For a given genomic window, compute the number of sequence differences $d_{x,y}$ for every possible pairing of a haplotype $x$ from species X with a haplotype $y$ from species Y. Find dmin, which is the smallest of these pairwise distances:

  $$d_{\min} = \min_{x \in X,\, y \in Y} \{ d_{x,y} \}$$

- Calculate Outgroup Divergence (dout): Compute the average sequence distance between species X and the outgroup O ($d_{XO}$) and between species Y and the outgroup O ($d_{YO}$). Then calculate dout:

  $$d_{\text{out}} = \frac{d_{XO} + d_{YO}}{2}$$

- Compute RNDmin:

  $$\mathrm{RND}_{\min} = \frac{d_{\min}}{d_{\text{out}}}$$

- Identify Outliers: Calculate RNDmin for windows across the genome. Genomic regions with exceptionally low RNDmin values are candidate introgressed loci, as they indicate haplotypes in the sister species that are more similar to each other than expected given their collective divergence from the outgroup. The significance of candidates is typically assessed by comparing them to a null distribution generated through coalescent simulations.
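The per-window calculation can be sketched as follows. This is a minimal illustration, assuming phased haplotypes given as equal-length strings for a single window and distances measured as raw difference counts (the window length cancels in the ratio). Function names and the toy haplotypes are hypothetical; Gmin (dmin divided by dXY) is included for comparison with the summary table earlier in this section.

```python
from itertools import product


def hamming(a, b):
    """Number of differing positions between two aligned haplotypes."""
    return sum(1 for x, y in zip(a, b) if x != y)


def mean_distance(haps_a, haps_b):
    """Average pairwise distance between two sets of haplotypes."""
    pairs = list(product(haps_a, haps_b))
    return sum(hamming(a, b) for a, b in pairs) / len(pairs)


def d_min(haps_x, haps_y):
    """Smallest distance between any haplotype from X and any from Y."""
    return min(hamming(x, y) for x, y in product(haps_x, haps_y))


def rnd_min(haps_x, haps_y, haps_out):
    """RNDmin = dmin / dout, with dout the mean divergence to the outgroup."""
    d_out = (mean_distance(haps_x, haps_out) + mean_distance(haps_y, haps_out)) / 2
    return d_min(haps_x, haps_y) / d_out


def g_min(haps_x, haps_y):
    """Gmin = dmin / dXY."""
    return d_min(haps_x, haps_y) / mean_distance(haps_x, haps_y)


# Toy window of 10 sites. The second haplotype of X is nearly identical to the
# first haplotype of Y, mimicking a recently introgressed lineage.
species_x = ["AAAAAAAAAA", "AAAAACCCCA"]
species_y = ["AAAAACCCCC", "CCCCCCCCCC"]
outgroup = ["TTTTTTTTTT"]

print(d_min(species_x, species_y))                  # 1
print(mean_distance(species_x, species_y))          # 5.5
print(rnd_min(species_x, species_y, outgroup))      # 0.1
print(round(g_min(species_x, species_y), 3))        # 0.182
```

Scanning this calculation across windows and comparing the resulting values with a simulated null distribution corresponds to the outlier step described above.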

Protocol for CNN-Based Detection of Adaptive Introgression

Convolutional Neural Networks (CNNs) represent a model-free approach that can detect complex patterns of adaptive introgression. The following methodology is based on the genomatnn framework [12]:

- Data Matrix Construction:

  - Windowing: Partition the genome from the donor, recipient, and an unadmixed outgroup population into windows (e.g., 100 kbp).

  - Binning and Encoding: Within each window, divide the sequence into equally sized bins. For each bin in each individual, encode the data as a count of minor alleles, creating a genotype matrix.

  - Sorting and Concatenation: Sort the pseudo-haplotypes or genotypes within each population by their similarity to the donor population. Concatenate the matrices from the three populations into a single input matrix for the CNN.

- Model Training (Pre-training): Train the CNN using simulated data. Simulations must encompass a wide range of evolutionary scenarios, including:

  - Neutral evolution with ILS.

  - Selective sweeps without introgression.

  - Adaptive introgression (AI) with varying selection coefficients and times of selection onset.

- Application and Inference: Apply the trained CNN to real genomic data. The network takes the genotype matrix for each window as input and outputs a probability score that the region underwent adaptive introgression. High-probability regions are considered strong AI candidates.

- Model Interpretation (Saliency Maps): To interpret the "black box" model, generate saliency maps that highlight which parts of the input genotype matrix most influenced the CNN's prediction, providing biological insight into the features of AI [12].
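The data matrix construction step can be sketched in plain Python. This is an illustrative approximation, not the genomatnn implementation: the similarity measure (squared Euclidean distance to the donor's mean bin profile), the function names, and the toy genotypes are all assumptions. The resulting matrix is what a CNN would receive as input for one window.

```python
def bin_counts(positions, genotypes, window_start, window_size, n_bins):
    """Sum minor-allele counts per bin for each individual in one window.

    positions : site coordinates, one per column of `genotypes`
    genotypes : genotypes[i][j] = minor-allele count of individual i at site j
    Returns a matrix with one row per individual and one column per bin.
    """
    bin_size = window_size / n_bins
    matrix = [[0] * n_bins for _ in genotypes]
    for j, pos in enumerate(positions):
        if not window_start <= pos < window_start + window_size:
            continue  # site lies outside this window
        b = min(int((pos - window_start) / bin_size), n_bins - 1)
        for i, row in enumerate(genotypes):
            matrix[i][b] += row[j]
    return matrix


def mean_profile(matrix):
    """Column-wise mean across individuals."""
    n = len(matrix)
    return [sum(column) / n for column in zip(*matrix)]


def sort_by_similarity(matrix, reference_profile):
    """Order rows from most to least similar to the reference profile."""
    def squared_distance(row):
        return sum((a - b) ** 2 for a, b in zip(row, reference_profile))
    return sorted(matrix, key=squared_distance)


def build_input(donor, recipient, outgroup):
    """Sort each population by similarity to the donor, then stack the rows."""
    reference = mean_profile(donor)
    return (sort_by_similarity(donor, reference)
            + sort_by_similarity(recipient, reference)
            + sort_by_similarity(outgroup, reference))


# Toy window of 100 bp split into 2 bins, with four variable sites.
positions = [10, 20, 60, 95]
donor = bin_counts(positions, [[2, 2, 0, 0]], 0, 100, 2)
recipient = bin_counts(positions, [[0, 0, 1, 1], [2, 1, 0, 0]], 0, 100, 2)
outgroup = bin_counts(positions, [[0, 0, 0, 1]], 0, 100, 2)

for row in build_input(donor, recipient, outgroup):
    print(row)
```

In the toy output the donor-like recipient individual is sorted ahead of the other one, so donor-like haplotypes end up adjacent to the donor block in the stacked matrix.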

Visual Guide to Method Selection

The following diagram illustrates the logical decision process for selecting an appropriate introgression detection method based on the research question and data availability.

Diagram 1: A flow chart for selecting an introgression detection method.

The Scientist's Toolkit: Key Research Reagents and Solutions

Successful detection of introgression relies on a combination of bioinformatic tools, genomic resources, and analytical frameworks. The table below details essential components of the modern introgression research toolkit.

Table 2: Research Reagent Solutions for Introgression Studies

| Tool/Reagent | Type | Primary Function |
|---|---|---|
| Whole-Genome Sequencing Data | Genomic Data | Provides the raw nucleotide variation data required for all downstream analyses. Can be derived from a single individual or multiple individuals per species/population. |
| Phased Haplotypes | Processed Data | Resolved sequences of alleles on individual chromosomes, which are essential for methods like dmin, Gmin, and RNDmin that rely on pairwise haplotype comparisons [11]. |
| Reference Genome & Annotation | Genomic Resource | Serves as a coordinate system for alignment and allows for the functional interpretation of candidate introgressed regions (e.g., identifying genes). |
| stdpopsim & SLiM | Simulation Software | Provides a standardized framework for generating realistic genomic data under complex evolutionary models, which is critical for training CNNs and creating null distributions for summary statistics [12]. |
| genomatnn (CNN Framework) | Software/Method | A dedicated convolutional neural network pipeline for detecting adaptive introgression from genotype data, offering high accuracy even on unphased genomes [12]. |
| Outgroup Genome | Genomic Data | A genome from a lineage known to not have hybridized with the study species, which is required for polarizing alleles (D-statistic) and normalizing divergence (RNDmin) [10] [11]. |

The choice of method for detecting introgression has profound implications for the power, accuracy, and biological validity of evolutionary inferences. Summary statistics like the D-statistic and RNDmin offer powerful, intuitive, and computationally efficient approaches for specific phylogenetic contexts, while emerging deep learning techniques like CNNs provide unparalleled ability to detect complex patterns of adaptive introgression by leveraging the full information content of genomic data. The pervasive issue of low statistical power in biological research underscores the necessity of selecting methods with high discriminatory power and of designing studies with adequate sample sizes and sequencing depth. By carefully matching the methodological approach to the biological question and available data, researchers can more reliably uncover the historical and adaptive significance of introgression in shaping biodiversity.

The detection of introgressed genomic regions—where genetic material has been transferred between species or populations through hybridization and backcrossing—has become a fundamental analysis in evolutionary genetics. As genomic datasets expand across diverse taxa, the methodological landscape for identifying introgression has diversified into three major algorithmic families: reference-based, reference-free, and simulation-based methods [4]. Each approach offers distinct advantages and limitations, with performance varying significantly across different evolutionary scenarios.

Understanding the power of these methods to correctly identify the direction of introgression—which population donated genetic material and which received it—is particularly crucial for reconstructing accurate evolutionary histories [13]. This guide provides a systematic comparison of these methodological families, focusing on their underlying principles, experimental requirements, and empirical performance based on published benchmarking studies.

Methodological Families for Introgression Detection

Reference-Based Methods

Reference-based methods require genomic data from the putative introgressing (donor) population, which is used as a reference to identify foreign haplotypes in a target population.

IntroMap exemplifies this approach by employing signal processing techniques on next-generation sequencing data aligned to a reference genome. The pipeline identifies introgressed regions by detecting significant divergence in sequence homology without requiring variant calling or genome annotation. The method converts alignment information into a binary representation of matches/mismatches, applies signal averaging to reduce noise, and uses statistical thresholding to call introgressed regions [14]. This method is particularly valuable in plant breeding programs where one parental genome is available as a reference.
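The signal-processing idea can be sketched as follows. This is a simplified illustration, not IntroMap's actual implementation: it assumes a per-base binary match vector (1 = read base matches the reference, 0 = mismatch), uses a plain moving average as the smoothing filter, and applies a fixed cutoff in place of the statistical thresholding described above. Function names and parameter values are hypothetical.

```python
def moving_average(signal, width):
    """Smooth a signal with a centered moving average (shorter at the edges)."""
    half = width // 2
    smoothed = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        smoothed.append(sum(signal[lo:hi]) / (hi - lo))
    return smoothed


def call_regions(match_vector, width, threshold):
    """Return half-open (start, end) intervals where smoothed homology < threshold."""
    smoothed = moving_average(match_vector, width)
    regions, start = [], None
    for i, value in enumerate(smoothed):
        if value < threshold and start is None:
            start = i                      # homology drops: open a region
        elif value >= threshold and start is not None:
            regions.append((start, i))     # homology recovers: close the region
            start = None
    if start is not None:
        regions.append((start, len(smoothed)))
    return regions


# Toy alignment: 20 matching bases, 10 mismatching bases, 20 matching bases.
match_vector = [1] * 20 + [0] * 10 + [1] * 20
print(call_regions(match_vector, width=5, threshold=0.5))  # [(20, 30)]
```

Real alignments contain isolated mismatches from sequencing error and natural polymorphism, which is why the smoothing width and threshold matter. Both would need to be tuned to read depth and the expected divergence between the parental genomes.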

Key advantage: High accuracy when suitable reference genomes are available.

Primary limitation: Limited applicability to scenarios involving "ghost" lineages or unsampled extinct populations.

Reference-Free Methods

Reference-free methods detect introgression without direct comparison to archaic reference genomes, instead leveraging population genetic patterns characteristic of admixed haplotypes.

ArchIE (ARCHaic Introgression Explorer) employs a logistic regression model trained on population genetic summary statistics to infer archaic local ancestry. The method combines multiple features including the individual frequency spectrum (IFS), pairwise haplotype distances, and their statistical moments to distinguish introgressed from non-introgressed regions [15]. This approach is particularly valuable for detecting introgression from unknown or unsampled archaic populations.

The S*-statistic is another reference-free method that identifies introgressed regions by detecting clusters of highly diverged single nucleotide polymorphisms (SNPs) in high linkage disequilibrium [15]. However, its power is generally lower than model-based approaches, especially for ancient introgression events [15].

Key advantage: Applicable to cases where reference genomes from donor populations are unavailable.

Primary limitation: Generally lower power compared to reference-based approaches.

Simulation-Based Methods

Simulation-based approaches use training data generated under explicit evolutionary models to distinguish different introgression scenarios.

genomatnn implements a convolutional neural network (CNN) framework that takes genotype matrices as input to identify regions under adaptive introgression. The method uses a series of convolution layers to extract features informative of both introgression and selection, outputting the probability that a genomic region underwent adaptive introgression [16]. The CNN is trained on simulated data encompassing a wide range of selection coefficients and timing parameters, enabling detection of complete or incomplete sweeps at any time after gene flow.

MaLAdapt is another machine learning method that employs a random forest classifier trained on summary statistics to detect adaptive introgression. Its performance varies across different evolutionary scenarios but shows particular strength in cases of strong selection [6].

Key advantage: Can jointly model complex processes like introgression and selection.

Primary limitation: Performance depends on the match between simulated training data and real evolutionary history.

Comparative Performance Analysis

Performance Across Evolutionary Scenarios

Recent benchmarking studies have evaluated these method families across diverse evolutionary scenarios, including those inspired by human, wall lizard (Podarcis), and bear (Ursus) lineages [6]. These lineages represent different combinations of divergence times and migration histories, providing a robust framework for comparing methodological performance.

Table 1: Performance Metrics Across Method Families

| Method Family | Example Tools | Power | False Discovery Rate | Direction Detection | Optimal Scenario |

|---|---|---|---|---|---|

| Reference-based | IntroMap | High [14] | Low [14] | High [14] | Donor genome available |

| Reference-free | ArchIE, S* | Moderate [15] | Variable [15] [17] | Limited [15] | Ghost introgression |

| Simulation-based | Genomatnn, MaLAdapt, VolcanoFinder | High [16] [6] | Low [16] | Moderate [16] | Complex introgression |

Impact of Genomic Context on Performance

A critical finding from comparative studies is that the genomic context of introgressed regions significantly impacts detection accuracy across all methods. The "hitchhiking effect" of an adaptively introgressed mutation affects flanking regions, making it challenging to discriminate between truly adaptive windows and adjacent neutral regions [6]. Performance metrics improve substantially when methods are trained to account for this effect by including adjacent windows in training data [6].

Table 2: Power Analysis Under Different Selection Strengths (Q95 Statistic) [6]

| Selection Coefficient | Divergence Time (Generations) | Power (Strongly Asymmetric Migration) | Power (Symmetric Migration) |

|---|---|---|---|

| 0.01 | 60,000 | 0.92 | 0.85 |

| 0.01 | 120,000 | 0.89 | 0.81 |

| 0.001 | 60,000 | 0.87 | 0.79 |

| 0.001 | 120,000 | 0.83 | 0.75 |

| 0.0001 | 60,000 | 0.75 | 0.68 |

| 0.0001 | 120,000 | 0.71 | 0.64 |

Performance in Direction Detection

Accurately determining the direction of introgression remains challenging for many methods. Full-likelihood approaches under the multispecies coalescent (MSC) framework generally provide the most reliable inference of directionality [13]. However, even these methods can produce biased estimates when gene flow is incorrectly assigned to ancestral rather than daughter lineages [13].

Summary statistic methods like the D-statistic (ABBA-BABA test) often struggle with direction detection, particularly for gene flow between sister lineages [13]. In comparative studies, the D-statistic demonstrated high false discovery rates, especially under scenarios with high incomplete lineage sorting [17].

Experimental Protocols and Workflows

Reference-Based Detection Workflow (IntroMap)

The IntroMap pipeline employs the following methodology [14]:

Sequence Alignment: NGS reads are aligned to a reference genome using standard tools (e.g., bowtie2) to produce BAM format alignment files.

Binary Representation: The MD tags in BAM files are parsed to create binary vectors for each read position, where 1 represents a match and 0 represents a mismatch/deletion.

Matrix Construction: Binary vectors are assembled into a sparse matrix C[d,l] where d represents read depth and l represents nucleotide position.

Signal Processing: Per-base calling scores are computed and smoothed using a low-pass filter convolution with a window vector of length w.

Homology Estimation: A locally weighted linear regression fit generates a homology signal hc, with values in the range [0, 1] representing the degree of homology at each position.

Threshold Detection: A threshold function T(hc,t) identifies regions where homology scores drop significantly, indicating potential introgression.
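The signal-processing steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not IntroMap's implementation: the function names are hypothetical, a plain moving average stands in for the low-pass filter, and the locally weighted regression step is omitted.

```python
from typing import List, Optional, Tuple

def per_base_scores(matrix: List[List[Optional[int]]]) -> List[float]:
    """Mean match value per position; None marks positions a read does not cover."""
    scores = []
    for pos in range(len(matrix[0])):
        column = [row[pos] for row in matrix if row[pos] is not None]
        # Assumption: uncovered positions get a score of 0.0.
        scores.append(sum(column) / len(column) if column else 0.0)
    return scores

def low_pass(scores: List[float], w: int) -> List[float]:
    """Moving average of width w; windows are truncated at the sequence edges."""
    half = w // 2
    smoothed = []
    for i in range(len(scores)):
        window = scores[max(0, i - half): i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed

def regions_below(signal: List[float], t: float) -> List[Tuple[int, int]]:
    """Half-open (start, end) runs where the smoothed signal falls below t."""
    regions, start = [], None
    for i, value in enumerate(signal):
        if value < t and start is None:
            start = i
        elif value >= t and start is not None:
            regions.append((start, i))
            start = None
    if start is not None:
        regions.append((start, len(signal)))
    return regions
```

For example, `regions_below(low_pass(per_base_scores(matrix), w=3), t=0.5)` returns candidate intervals whose smoothed match rate drops below 0.5; both the window length and the threshold are illustrative values, not recommended settings.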

Reference-Free Detection Workflow (ArchIE)

The ArchIE methodology employs the following steps [15]:

Training Data Simulation: Coalescent simulations (e.g., using ms) generate genomic data under specified demographic models with known introgression events.

Feature Calculation: For each genomic window, multiple summary statistics are computed:

- Individual Frequency Spectrum (IFS)

- Pairwise haplotype distances and their moments (mean, variance, skew, kurtosis)

- Population differentiation metrics

Model Training: A logistic regression classifier is trained on the simulated data to distinguish introgressed from non-introgressed windows.

Application to Empirical Data: The trained model is applied to empirical genomic data to infer posterior probabilities of introgression.
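The feature-calculation step can be illustrated with a short sketch. The code below is an assumption-laden example rather than ArchIE's own code: haplotypes are lists of 0/1 alleles (1 = derived), the function names are hypothetical, and the individual frequency spectrum follows one plausible reading of the definition (per haplotype, the number of derived alleles it carries at each sample-wide derived count). Population differentiation metrics and the classifier itself are left out.

```python
from itertools import combinations
from statistics import mean, pvariance
from typing import Dict, List

def hamming(a: List[int], b: List[int]) -> int:
    """Number of sites at which two haplotypes differ."""
    return sum(x != y for x, y in zip(a, b))

def distance_moments(haplotypes: List[List[int]]) -> Dict[str, float]:
    """Mean, variance, skew and kurtosis of all pairwise haplotype distances."""
    dists = [hamming(a, b) for a, b in combinations(haplotypes, 2)]
    mu = mean(dists)
    var = pvariance(dists, mu)
    if var == 0:
        # Standardized moments are undefined without variation; report zeros.
        return {"mean": mu, "variance": 0.0, "skew": 0.0, "kurtosis": 0.0}
    sd = var ** 0.5
    skew = mean([((d - mu) / sd) ** 3 for d in dists])
    kurt = mean([((d - mu) / sd) ** 4 for d in dists])
    return {"mean": mu, "variance": var, "skew": skew, "kurtosis": kurt}

def individual_frequency_spectrum(haplotypes: List[List[int]]) -> List[List[int]]:
    """Row i, column k-1: derived alleles on haplotype i seen k times in the sample."""
    n = len(haplotypes)
    derived_counts = [sum(column) for column in zip(*haplotypes)]
    spectrum = [[0] * n for _ in range(n)]
    for i, hap in enumerate(haplotypes):
        for allele, count in zip(hap, derived_counts):
            if allele == 1:
                spectrum[i][count - 1] += 1
    return spectrum
```

In a full pipeline these per-window features would be concatenated into one vector per window and passed to the classifier trained on simulated data.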

Simulation-Based CNN Workflow (genomatnn)

The genomatnn framework implements the following protocol [16]:

Data Preparation: Genotype matrices are constructed from donor, recipient, and unadmixed outgroup populations for each genomic window (typically 100 kbp).

Matrix Sorting: Haplotypes within each population are sorted by similarity to the donor population.

Input Construction: Sorted matrices are concatenated into a single input matrix for the CNN.

CNN Architecture: The network uses:

- Multiple convolutional layers with 2×2 step size (instead of pooling layers)

- Feature extraction through successive dimensionality reduction

- Final classification layer outputting AI probability

Model Interpretation: Saliency maps identify genomic regions most influential to predictions.
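The matrix sorting and input construction steps can be sketched as follows. This is an illustrative sketch only: the source does not specify the similarity measure, so the example assumes similarity means a small absolute distance to the donor's per-site derived allele frequencies, and all function names are hypothetical rather than part of genomatnn.

```python
from typing import List

Haplotype = List[int]  # 0 = ancestral, 1 = derived

def donor_profile(donor: List[Haplotype]) -> List[float]:
    """Per-site derived allele frequency in the donor population."""
    n = len(donor)
    return [sum(column) / n for column in zip(*donor)]

def sort_by_donor_similarity(population: List[Haplotype],
                             profile: List[float]) -> List[Haplotype]:
    """Order haplotypes from most to least similar to the donor profile."""
    def distance(hap: Haplotype) -> float:
        return sum(abs(allele - freq) for allele, freq in zip(hap, profile))
    return sorted(population, key=distance)

def build_input(donor: List[Haplotype], recipient: List[Haplotype],
                outgroup: List[Haplotype]) -> List[Haplotype]:
    """Sort each population separately, then stack them into one matrix."""
    profile = donor_profile(donor)
    return (sort_by_donor_similarity(donor, profile)
            + sort_by_donor_similarity(recipient, profile)
            + sort_by_donor_similarity(outgroup, profile))
```

Sorting within each population gives the convolutional filters a consistent row ordering, so donor-like haplotypes in the recipient population appear as a contiguous block rather than being scattered across the matrix.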

The Scientist's Toolkit

Table 3: Essential Research Reagents and Computational Tools

| Tool Category | Specific Tools | Function | Application Context |

|---|---|---|---|

| Sequence Aligners | bowtie2 | Alignment of NGS reads to reference genomes | Reference-based methods [14] |

| Coalescent Simulators | ms, msprime, stdpopsim | Generate simulated genomic data under evolutionary models | Training data for reference-free and simulation methods [15] [16] [18] |

| Population Genetics Frameworks | Python, R, Scientific Python | Compute summary statistics and implement custom analyses | All methodological families [14] [15] |

| Machine Learning Libraries | TensorFlow, PyTorch | Implement neural networks for classification | Simulation-based methods [16] [18] |

| Visualization Tools | matplotlib, ggplot2 | Create publication-quality figures | Results presentation and quality control [14] |

The three major algorithmic families for introgression detection each offer complementary strengths for different research scenarios. Reference-based methods provide the highest accuracy when reference genomes from donor populations are available. Reference-free approaches enable detection of introgression from unknown or unsampled populations. Simulation-based methods offer powerful frameworks for detecting complex evolutionary scenarios like adaptive introgression.

For researchers specifically interested in determining the direction of introgression, full-likelihood methods under the multispecies coalescent framework currently provide the most reliable inference, despite their computational cost [13]. The choice of method should be guided by data availability, evolutionary context, and specific research questions, with particular attention to recent benchmarking studies that validate performance across diverse scenarios.

The accurate detection of introgression direction—the flow of genetic material between species—is fundamental to understanding evolutionary processes, adaptation, and speciation. However, validating methodological approaches in this field is critically hampered by the "ground truth problem": the fundamental lack of a perfectly known, real-world standard against which to benchmark performance. Without a biological gold standard, researchers must rely on comparative performance assessments using simulated datasets, well-established model systems, and internal consistency checks to evaluate the power and accuracy of different analytical techniques. This guide objectively compares the performance of leading methods for detecting introgression direction, providing researchers with a framework for selecting and applying these tools amidst the inherent uncertainties of evolutionary genomics.

Core Methodologies for Detecting Introgression Direction

Methods for detecting introgression direction can be broadly categorized into likelihood-based frameworks and summary-statistic approaches. The table below summarizes their fundamental characteristics and data requirements.

Table 1: Core Methodological Frameworks for Introgression Detection

| Method Category | Key Example(s) | Underlying Principle | Data Requirements | Primary Output |

|---|---|---|---|---|

| Likelihood / Bayesian | MSC-I (Multispecies Coalescent with Introgression) [19] | Computes the probability of the observed sequence data given a model of speciation and gene flow, including direction. | Multi-locus sequence alignments, a pre-specified species tree model. | Estimates of introgression probability (φ), its timing, and direction, with Bayesian posterior probabilities. |

| Summary Statistic | D-statistic (ABBA-BABA) [20] | Compares counts of discordant site patterns (ABBA vs. BABA) to detect gene flow, can be extended to infer direction. | Genotype or sequence data for a 4-taxon set (P1, P2, P3, Outgroup); can use allele frequencies. | A significant D-value indicates gene flow; direction is inferred from the specific taxon sharing derived alleles. |

| Summary Statistic | RNDmin, Gmin [11] | Uses minimum sequence divergence between populations (normalized by an outgroup) to identify recently introgressed haplotypes. | Phased haplotypes from two sister species and an outgroup. | A value significantly lower than the genomic background indicates introgression; direction can be inferred from haplotype pairing. |

The following diagram illustrates the logical workflow for applying and validating these methods in the absence of a perfect biological ground truth.

Quantitative Performance Comparison

The power and accuracy of these methods vary significantly based on evolutionary parameters such as population size, divergence time, and the strength and direction of gene flow. The following performance data is synthesized from simulation studies.

Table 2: Performance Comparison of Introgression Detection Methods Under Different Scenarios

| Method | Key Performance Metric | Scenario of High Power / Key Finding | Scenario of Reduced Power / Limitation |

|---|---|---|---|

| MSC-I (Bayesian) [19] | Accuracy in inferring direction (A→B vs. B→A). | Easier to infer gene flow from a small to a large population (power > 80% under simulated conditions). Easier with longer time between divergence and introgression. | Power is reduced when gene flow is from a large to a small population. Requires correct species tree and model specification. |

| D-Statistic [20] | Significance of D-value (Z-score > 3). | Robust across a wide range of divergence times. Effective at detecting recent and ancient gene flow. | Sensitivity is highly dependent on population size (scaled by generations). Power drops with smaller population sizes. Cannot detect gene flow between sister species without additional modification. |

| RNDmin / Gmin [11] | Proportion of true introgressed loci detected (True Positive Rate). | Offers a modest increase in power over related statistics (e.g., FST, dXY). Robust to variation in mutation rate. | Requires phased haplotypes. Power is contingent on the strength and recency of introgression; older, weaker events are harder to detect. |
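As a concrete illustration of the minimum-distance statistics compared above, the sketch below computes Gmin and RNDmin for one genomic window. It assumes the commonly used definitions (Gmin as the minimum between-population distance divided by the mean between-population distance; RNDmin as the minimum distance divided by the average distance of the two populations to the outgroup), phased 0/1 haplotypes, and hypothetical function names. It does not guard against windows where the denominator is zero.

```python
from typing import List, Tuple

Haplotype = List[int]

def distance(a: Haplotype, b: Haplotype) -> float:
    """Proportion of sites at which two haplotypes differ."""
    return sum(x != y for x, y in zip(a, b)) / len(a)

def mean_distance(pop_a: List[Haplotype], pop_b: List[Haplotype]) -> float:
    """Average distance over all between-population haplotype pairs."""
    values = [distance(a, b) for a in pop_a for b in pop_b]
    return sum(values) / len(values)

def min_distance(pop_a: List[Haplotype], pop_b: List[Haplotype]) -> float:
    """Smallest distance over all between-population haplotype pairs."""
    return min(distance(a, b) for a in pop_a for b in pop_b)

def gmin(pop_x: List[Haplotype], pop_y: List[Haplotype]) -> float:
    """Minimum between-population distance relative to the mean distance."""
    return min_distance(pop_x, pop_y) / mean_distance(pop_x, pop_y)

def rnd_min(pop_x: List[Haplotype], pop_y: List[Haplotype],
            outgroup: List[Haplotype]) -> float:
    """Minimum between-population distance normalized by outgroup divergence."""
    d_out = (mean_distance(pop_x, outgroup) + mean_distance(pop_y, outgroup)) / 2
    return min_distance(pop_x, pop_y) / d_out
```

Values well below the genomic background in a window suggest that at least one haplotype pair shares unusually recent ancestry, which is the signal these statistics use to flag candidate introgressed loci.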

Detailed Experimental Protocols

To ensure reproducibility and critical evaluation, this section outlines the core experimental and analytical protocols for the featured methods.

Protocol for MSC-I Analysis Using BPP

This protocol employs the Bayesian software BPP under the Multispecies Coalescent with Introgression model [19].

- Input Data Preparation: Compile a multi-locus sequence alignment file. Prepare a control file specifying the species tree topology with an introgression branch (e.g., A->B). Define prior distributions for parameters (e.g., divergence times tau, population sizes theta, introgression probability phi).

- MCMC Analysis: Run a Markov Chain Monte Carlo (MCMC) analysis for a sufficient number of generations (e.g., 100,000-1,000,000), discarding the initial samples as burn-in. Monitor convergence using trace plots and effective sample size (ESS) diagnostics.

- Model Comparison: To test for introgression, compare the model with gene flow against a null model without gene flow using Bayes factors (calculated from marginal likelihoods, e.g., with stepping-stone sampling). A significant Bayes factor (e.g., > 10) supports the presence of gene flow.

- Inference of Direction: The direction of introgression is inherent in the specified model. The estimated introgression probability (phi) and its Bayesian credibility interval are directly interpreted for the specified direction (e.g., A->B). The analysis should also be run with the alternative direction model (B->A); the model with the higher marginal likelihood is preferred.
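The model-comparison and direction steps reduce to simple arithmetic on log marginal likelihoods. The sketch below assumes those values have already been estimated elsewhere (for example by stepping-stone sampling), that the threshold of 10 applies to the Bayes factor on its natural scale, and that the model labels are arbitrary strings chosen by the user. It does not call BPP, and the function name is hypothetical.

```python
import math
from typing import Dict, Tuple

def compare_models(log_ml: Dict[str, float], null: str = "no_flow",
                   bf_threshold: float = 10.0) -> Tuple[str, Dict[str, dict]]:
    """Compare each gene-flow model with the null via log Bayes factors."""
    results = {}
    for name, value in log_ml.items():
        if name == null:
            continue
        # ln BF = ln p(data | model) - ln p(data | null)
        ln_bf = value - log_ml[null]
        results[name] = {"ln_bf": ln_bf,
                         "supported": ln_bf > math.log(bf_threshold)}
    # The preferred direction is the model with the highest marginal likelihood.
    best = max(results, key=lambda n: results[n]["ln_bf"])
    return best, results
```

Calling `compare_models({"no_flow": -10250.0, "A->B": -10240.0, "B->A": -10246.5})` with these made-up values would prefer the A->B model, since its log marginal likelihood is the highest of the three.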

Protocol for D-Statistic Analysis

This protocol details the steps for detecting and inferring the direction of gene flow using the D-statistic [20].

- Taxon Selection and Data Preparation: Select four taxa with a known phylogeny: P1, P2 (sister species), P3 (the potential introgressing species), and an Outgroup. The phylogeny must be (((P1,P2),P3),Outgroup). Generate a genome-wide SNP dataset or sequence alignment for these taxa.

- Site Pattern Counting: For each informative site in the genome, classify alleles as ancestral (A) or derived (B) and count the resulting site patterns. A site is ABBA if P1 and Outgroup have the ancestral allele, while P2 and P3 have the derived allele. A site is BABA if P1 and P3 have the derived allele, while P2 and Outgroup have the ancestral allele.

- Calculation and Testing: Calculate the D-statistic as D = (Sum(ABBA) - Sum(BABA)) / (Sum(ABBA) + Sum(BABA)). Perform a statistical test (e.g., block jackknife) to determine if D significantly deviates from zero. A significant positive D suggests gene flow between P3 and P2; a significant negative D suggests gene flow between P3 and P1.
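The counting and testing steps above can be sketched as follows. This is a minimal example under simplifying assumptions: one biallelic genotype per taxon per site, the outgroup allele taken as ancestral, equal-sized blocks of consecutive sites, and an unweighted delete-one jackknife. Function names are hypothetical, and the code does not guard against a block removal that leaves no informative sites.

```python
from typing import List, Optional, Tuple

Site = Tuple[int, int, int, int]  # alleles for (P1, P2, P3, Outgroup)

def site_pattern(p1: int, p2: int, p3: int, out: int) -> Optional[str]:
    """Classify one site as 'ABBA', 'BABA', or None if uninformative."""
    if p1 == out and p2 == p3 and p2 != out:
        return "ABBA"
    if p2 == out and p1 == p3 and p1 != out:
        return "BABA"
    return None

def d_statistic(abba: int, baba: int) -> float:
    """D = (ABBA - BABA) / (ABBA + BABA)."""
    return (abba - baba) / (abba + baba)

def block_counts(sites: List[Site], block_size: int) -> List[Tuple[int, int]]:
    """(ABBA, BABA) counts for consecutive blocks of block_size sites."""
    blocks = []
    for start in range(0, len(sites), block_size):
        patterns = [site_pattern(*s) for s in sites[start:start + block_size]]
        blocks.append((patterns.count("ABBA"), patterns.count("BABA")))
    return blocks

def jackknife(blocks: List[Tuple[int, int]]) -> Tuple[float, float, float]:
    """Return (D, standard error, Z) from a delete-one block jackknife."""
    abba = sum(a for a, _ in blocks)
    baba = sum(b for _, b in blocks)
    d_full = d_statistic(abba, baba)
    n = len(blocks)
    # Recompute D with each block left out in turn.
    leave_one_out = [d_statistic(abba - a, baba - b) for a, b in blocks]
    mean_loo = sum(leave_one_out) / n
    variance = (n - 1) / n * sum((d - mean_loo) ** 2 for d in leave_one_out)
    se = variance ** 0.5
    z = d_full / se if se > 0 else float("inf")
    return d_full, se, z
```

Blocks should be long enough that linkage between blocks is negligible; the appropriate block length depends on the organism and is not something this sketch can choose.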

Visualizing Method Workflows

The computational workflow for a comprehensive analysis, integrating multiple methods to overcome their individual limitations, is depicted below.

The Scientist's Toolkit: Essential Research Reagents & Materials

Successful research in this field relies on a combination of bioinformatic tools, genomic resources, and model systems.

Table 3: Key Research Reagent Solutions for Introgression Studies

| Tool / Resource | Type | Primary Function in Analysis | Relevance to Ground Truth |

|---|---|---|---|

| BPP Software Suite [19] | Bioinformatics Tool | Implements Bayesian MCMC analysis under the MSC and MSC-I models for estimating species trees, divergence times, and introgression parameters. | A primary method for likelihood-based inference of direction, performance of which is tested via simulation. |

| Phased Haplotype Data | Genomic Resource | High-quality reference genomes or population genomic data where the phase of alleles (which chromosome they reside on) is known. | Required for methods like RNDmin. The quality of phasing directly impacts the accuracy of the ground truth signal in empirical data. |

| Heliconius Butterfly Genomes [19] | Model System | A well-studied system with well-documented adaptive introgression, used as an empirical benchmark for method validation. | Serves as a "known-positive" empirical test case where methodological inferences can be compared to established biological knowledge. |

| Coalescent Simulators (e.g., ms, msprime) | Computational Tool | Generates synthetic genomic sequence data under user-specified evolutionary models (divergence times, population sizes, migration). | Creates a controlled "synthetic ground truth" where the history of gene flow is known exactly, enabling rigorous power assessments and false positive rate calculations. |

The precise identification of introgressed genomic regions is a fundamental challenge in evolutionary biology, with significant implications for understanding adaptation, speciation, and disease. As genomic datasets expand across diverse taxa, researchers are presented with an array of methodological approaches for detecting introgression, each with distinct strengths, limitations, and underlying assumptions [4]. This comparison guide provides an objective evaluation of current methods for identifying a core set of introgressed regions, focusing on areas of consensus across different analytical frameworks. The performance assessment is framed within the broader thesis of evaluating statistical power across different methodological approaches, providing researchers with evidence-based guidance for selecting appropriate tools based on their specific study systems and evolutionary questions. With the growing recognition that introgression serves as a crucial evolutionary force promoting adaptation across taxonomic groups [1], the need for robust and reliable detection methods has never been more pressing. This guide synthesizes recent benchmarking studies to illuminate the conditions under which different methods achieve consensus and where their interpretations diverge, thereby empowering researchers to make informed decisions in their introgression detection workflows.

Methodological Approaches to Introgression Detection

Current methods for detecting introgression can be broadly categorized into three major frameworks: summary statistics, probabilistic modeling, and supervised learning approaches [4]. Each category operates on different principles and makes different assumptions about the underlying evolutionary processes.

Summary statistics represent some of the earliest approaches for detecting introgression and continue to evolve with new implementations that broaden their applicability across taxa [4]. These methods typically compute measures of genetic divergence, similarity, or allele frequency differences that are expected to deviate from neutral expectations in introgressed regions. Their relative simplicity and computational efficiency make them particularly valuable for initial exploratory analyses and for studying non-model organisms with less well-characterized demographic histories.

Probabilistic modeling approaches provide a powerful framework that explicitly incorporates evolutionary processes and has yielded fine-scale insights across diverse species [4]. These methods typically use coalescent-based or hidden Markov model frameworks to infer the probability of introgression given the observed genetic data and a specified demographic model. While often computationally intensive, they can provide more detailed insights into the timing, direction, and extent of introgression when appropriate demographic models are available.

Supervised learning represents an emerging approach with great potential, particularly when the detection of introgressed loci is framed as a semantic segmentation task [4]. These machine learning methods can capture complex, multi-dimensional patterns in genetic data that might be difficult to summarize with individual statistics. Their performance, however, is highly dependent on the quality and representativeness of training data, and they may struggle when applied to evolutionary scenarios different from those used in training [6] [21].

Table 1: Major Methodological Categories for Introgression Detection

| Category | Underlying Principle | Key Advantages | Common Tools |

|---|---|---|---|

| Summary Statistics | Measures deviation from expected patterns under neutrality | Fast computation; minimal assumptions; good for exploratory analysis | f-statistics; D-statistics; Q95 |

| Probabilistic Modeling | Explicit models of evolutionary processes incorporating gene flow | Provides detailed parameter estimates; model-based confidence intervals | VolcanoFinder; ∂a∂i |

| Supervised Learning | Pattern recognition trained on simulated or known introgressed regions | Can capture complex, multi-dimensional patterns; high accuracy in trained scenarios | MaLAdapt; Genomatnn |

Figure 1: Workflow for Identifying Consensus Introgressed Regions Across Multiple Methods

Performance Benchmarking Across Evolutionary Scenarios

Recent systematic benchmarking efforts have revealed that method performance varies significantly across different evolutionary scenarios, with no single approach universally outperforming others in all conditions. A comprehensive evaluation of adaptive introgression classification methods tested three prominent tools (VolcanoFinder, Genomatnn, and MaLAdapt) and a standalone summary statistic (Q95) across simulated datasets representing various evolutionary histories inspired by human, wall lizard (Podarcis), and bear (Ursus) lineages [6] [21]. These lineages were specifically chosen to represent different combinations of divergence and migration times, providing a robust test of method performance across diverse evolutionary contexts.

The benchmarking study examined the impact of multiple parameters on method performance, including divergence time, migration rate, population size, selection coefficient, and the presence of recombination hotspots [6]. Performance was evaluated based on both power (the ability to correctly identify truly introgressed regions) and false positive rates (the incorrect identification of non-introgressed regions as introgressed). Importantly, the study also investigated how different types of non-adaptive introgression windows affected performance, including independently simulated neutral introgression windows, windows adjacent to regions under selection, and windows from unlinked chromosomes [6].

Table 2: Performance Comparison of Introgression Detection Methods Across Scenarios

| Method | Approach Type | Human Model Performance | Non-Human Model Performance | Optimal Application Context |

|---|---|---|---|---|

| Q95 | Summary statistic | Moderate to high | High across scenarios | Exploratory studies; non-model organisms |

| VolcanoFinder | Probabilistic modeling | High | Variable depending on divergence | Well-characterized demographic histories |

| MaLAdapt | Supervised learning | High | Lower when training scenario mismatch | Scenarios similar to training data |

| Genomatnn | Supervised learning | High | Lower when training scenario mismatch | Human and primate studies |

One of the most notable findings from these benchmarking efforts was that Q95, a straightforward summary statistic, performed remarkably well across most scenarios and often outperformed more complex machine learning methods, particularly when applied to species or demographic histories different from those used in training data [21]. This surprising result suggests that simple summary statistics remain valuable tools, especially for initial exploratory analyses in non-model systems.
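To show how little machinery such a statistic needs, the sketch below computes a Q95-style value for one window. It assumes the commonly cited definition: the 95th percentile of derived allele frequencies in the recipient population, taken over sites where the derived allele is rarer than w in a non-introgressed reference population and at frequency y or higher in the donor. The default cutoffs, the nearest-rank percentile rule, and the function name are illustrative choices, not values taken from the benchmarking study.

```python
from typing import List, Optional, Tuple

# Derived allele frequencies per site: (reference, recipient, donor)
SiteFreqs = Tuple[float, float, float]

def q95(sites: List[SiteFreqs], w: float = 0.01, y: float = 1.0,
        percent: int = 95) -> Optional[float]:
    """Nearest-rank percentile of recipient frequencies at qualifying sites."""
    qualifying = sorted(recipient for reference, recipient, donor in sites
                        if reference < w and donor >= y)
    if not qualifying:
        return None  # no informative sites in this window
    # Integer ceiling of percent * n / 100 avoids floating-point rank errors.
    rank = (percent * len(qualifying) + 99) // 100
    return qualifying[rank - 1]
```

A high value indicates that donor-specific alleles have reached high frequency in the recipient population within the window, which is the pattern expected under adaptive introgression.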

The performance of machine learning-based methods like MaLAdapt and Genomatnn was generally high when applied to evolutionary scenarios similar to their training data but decreased when applied to different demographic contexts [6] [21]. This highlights the importance of considering evolutionary context when selecting methods and suggests that retraining may be necessary when applying these tools to divergent study systems.

Experimental Protocols for Method Evaluation

Simulation Framework for Power Assessment

Benchmarking studies evaluating introgression detection methods typically employ sophisticated simulation frameworks that generate genomic data under known evolutionary scenarios with and without introgression. The protocol generally follows these key steps:

Scenario Definition: Researchers first define evolutionary parameters based on real biological systems, typically including divergence times, migration times, effective population sizes, selection coefficients, and recombination rates [6]. These parameters are often derived from well-studied systems such as humans, wall lizards (Podarcis), and bears (Ursus) to represent diverse evolutionary histories.

Data Simulation: Genomic data is simulated using coalescent-based approaches that incorporate the defined parameters. Studies often utilize tools such as msprime [6] to generate sequence data under realistic demographic models with specified gene flow events.

Method Application: Each detection method is applied to the simulated datasets using standardized parameters and thresholds. This includes both complex machine learning approaches (MaLAdapt, Genomatnn) and simpler summary statistics (Q95).

Performance Calculation: Power and false positive rates are calculated by comparing method outputs to the known simulated truth. Performance metrics typically include area under the curve (AUC) of receiver operating characteristic (ROC) curves, precision-recall curves, and true/false positive rates at specific thresholds [6] [21].
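The performance-calculation step can be illustrated with a small sketch. It assumes each simulated window has a method score (higher meaning more likely introgressed) and a known 0/1 truth label, and that both classes are present; the function names are hypothetical. The AUC is computed through its rank interpretation, the probability that a randomly chosen true window outscores a randomly chosen null window, rather than by integrating a ROC curve.

```python
from typing import List, Tuple

def rates_at_threshold(scores: List[float], labels: List[int],
                       threshold: float) -> Tuple[float, float]:
    """Return (power, false positive rate) when calling windows with score >= threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    return tp / (tp + fn), fp / (fp + tn)

def roc_auc(scores: List[float], labels: List[int]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))
```

The pairwise AUC is quadratic in the number of windows, which is acceptable for a sketch but would be replaced by a sort-based calculation for large simulation sets.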

Evaluation of Different Neutral Backgrounds

A critical aspect of method evaluation involves testing performance against different types of neutral genomic regions, as the hitchhiking effect of an adaptively introgressed mutation can strongly impact flanking regions and complicate discrimination between adaptive and neutral introgression [6]. The experimental protocol typically includes:

- Independent neutral simulations: Genomic windows simulated without any selection or introgression.

- Adjacent windows: Regions flanking simulated adaptive introgression sites, which may be affected by linked selection.

- Unlinked chromosomal regions: Windows from chromosomes completely unlinked to those under selection.

This comprehensive approach helps researchers understand how different methods perform in distinguishing true adaptive introgression from neutral patterns and linked selection effects [6].

Successful detection and validation of introgressed regions requires careful selection of computational tools, statistical frameworks, and data resources. The following table summarizes key solutions available to researchers in this field.

Table 3: Research Reagent Solutions for Introgression Detection Studies

| Resource Type | Specific Tools/Resources | Function and Application |

|---|---|---|

| Simulation Tools | msprime [6]; SLiM | Generate synthetic genomic data under specified evolutionary scenarios for method testing and validation |

| Summary Statistics | Q95 [6] [21]; f-statistics | Calculate measures of genetic divergence and similarity to detect deviations from neutral expectations |

| Probabilistic Models | VolcanoFinder [6] [21]; ∂a∂i | Implement model-based approaches that explicitly incorporate demographic history and selection |

| Machine Learning Tools | MaLAdapt [6] [21]; Genomatnn [6] [21] | Apply trained classifiers to identify introgressed regions based on multi-dimensional patterns |

| Visualization & Analysis | R; Python; GENESPACE [22] | Visualize and interpret introgression results; analyze synteny and structural variation |

Consensus and Discordance in Detected Regions

The identification of a core set of introgressed regions requires careful consideration of consensus across methods, as different approaches may highlight distinct genomic intervals. Studies indicate that while different methods often show substantial overlap in regions with strong signals of introgression, the agreement decreases for weaker signals or in more complex evolutionary scenarios [6].

Areas of strongest consensus typically include regions with recent, strong selective sweeps and high-frequency introgressed haplotypes [6] [21]. These regions are more readily detected by multiple methodological approaches, providing greater confidence in their identification. Conversely, regions with older introgression events, weaker selection, or complex demographic histories often show greater discordance across methods, reflecting differences in statistical power and underlying assumptions.

The hitchhiking effect presents a particular challenge for establishing consensus, as methods vary in their ability to distinguish the core introgressed site from flanking regions [6]. This has practical implications for determining the precise boundaries of introgressed segments and for identifying the specific adaptive variants responsible for selection.
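One simple way to operationalize consensus is to keep only the genomic intervals called by at least a chosen number of methods. The sketch below does this with a sweep over interval endpoints. It is an illustration under assumptions: calls are half-open (start, end) coordinates on a single chromosome, intervals within one method do not overlap, and the method names and function name are placeholders rather than real tool output formats.

```python
from typing import Dict, List, Tuple

Interval = Tuple[int, int]  # half-open [start, end)

def consensus_regions(calls: Dict[str, List[Interval]],
                      min_methods: int) -> List[Interval]:
    """Return regions covered by calls from at least min_methods methods."""
    events = []
    for intervals in calls.values():
        for start, end in intervals:
            events.append((start, 1))   # a call opens
            events.append((end, -1))    # a call closes
    # At equal positions closings sort first, so touching calls do not overlap.
    events.sort()
    regions, depth, region_start = [], 0, None
    for position, delta in events:
        previous = depth
        depth += delta
        if previous < min_methods <= depth:
            region_start = position
        elif depth < min_methods <= previous:
            if position > region_start:
                regions.append((region_start, position))
            region_start = None
    return regions
```

Raising `min_methods` trades sensitivity for confidence: requiring all methods to agree yields short, high-confidence cores, while a lower threshold retains weaker signals that only some approaches detect.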

Guidelines for Method Selection and Application

Based on recent benchmarking studies, researchers can follow these evidence-based guidelines for selecting and applying introgression detection methods:

For exploratory studies in non-model organisms, begin with summary statistics like Q95, which show robust performance across diverse evolutionary scenarios without requiring extensive training data [21].

When working with well-characterized demographic histories, probabilistic approaches like VolcanoFinder can provide more detailed insights into the timing and strength of selection [6].

For systems similar to human evolutionary history, machine learning methods like Genomatnn and MaLAdapt show high performance but should be retrained or validated when applied to divergent taxa [6] [21].

To establish a high-confidence set of introgressed regions, prioritize regions identified by multiple methods with different underlying assumptions, as consensus across approaches provides stronger evidence [6].

Always consider adjacent genomic windows when interpreting results, as the hitchhiking effect can influence detection probabilities in flanking regions and lead to false positives if not properly accounted for [6].

These guidelines emphasize that method choice should be informed by biological context, and that a combination of approaches often yields the most reliable results [21]. As the field continues to evolve, systematic benchmarking across diverse evolutionary scenarios will remain essential for developing and validating new methods for detecting introgressed regions.

A Practical Guide to Prominent Introgression Detection Algorithms and Tools

The study of introgression, the transfer of genetic material between species or populations through hybridization and backcrossing, has been revolutionized by advances in genome sequencing and computational phylogenetics. The precise identification of introgressed loci is a rapidly evolving area of research, providing valuable insights into evolutionary history, adaptation, and the complex web of interactions between lineages [4]. For researchers and drug development professionals, understanding these genetic exchanges can illuminate pathways of disease resistance, environmental adaptation, and functional genetic diversity.

This guide focuses on three powerful tree-based methods for detecting introgression: the summary statistic-based approaches S* and Sprime, and the model-based method ARGweaver-D. Each offers distinct advantages for characterizing genomic landscapes of introgression across diverse evolutionary scenarios, including adaptive and "ghost" introgression from unsampled populations [4]. We objectively compare their performance, experimental requirements, and applicability to help researchers select the optimal tool for specific introgression detection challenges.

S* is a summary statistic designed to identify archaic introgression without reference panels from putative archaic populations. It draws on a large number of individuals from the recipient population and scans the genome for clusters of derived alleles in strong linkage disequilibrium that are highly diverged from an outgroup, which are characteristic signatures of archaic ancestry [23].

Sprime is an evolution of the S* method that uses a hidden Markov model (HMM) to better delineate the boundaries of introgressed segments. It improves upon S* by more accurately estimating the length of introgressed haplotypes, which is particularly valuable for studying older introgression events where recombination has broken down archaic segments into smaller pieces [23].

ARGweaver-D represents a fundamentally different approach, using a probabilistic framework to sample Ancestral Recombination Graphs (ARGs) conditional on a user-defined demographic model that includes population splits and migration events [23]. As a major extension of the ARGweaver algorithm, ARGweaver-D can infer local genetic relationships and identify migrant lineages along the genome, providing a powerful method for detecting even ancient introgression events [23].

Table: Comparison of S*, Sprime, and ARGweaver-D Methodological Characteristics

| Characteristic | S* | Sprime | ARGweaver-D |
|---|---|---|---|
| Methodological Category | Summary statistic | Summary statistic with HMM | Probabilistic modeling of ARGs |
| Underlying Principle | Excess of derived alleles and high divergence | HMM-refined haplotype identification | Bayesian sampling of genealogies with migration |
| Demographic Model Requirement | No | No | Yes (user-defined) |
| Key Advantage | No need for archaic reference panels | Better resolution of segment boundaries | Can detect older, more complex introgression |
| Computational Intensity | Moderate | Moderate | High |

Performance Comparison and Experimental Data

Each method demonstrates distinct strengths under different evolutionary scenarios. S* and Sprime excel at detecting relatively recent introgression into modern humans, having been optimized for this specific problem [23]. However, they face limitations for older proposed migration events, such as gene flow from ancient humans into Neanderthals (Hum→Nea) or from super-archaic hominins into Denisovans (Sup→Den) [23].

ARGweaver-D shows remarkable power for detecting both recent and ancient introgression. In simulation studies, it successfully identifies regions introgressed from Neanderthals and Denisovans into modern humans, even with limited genomic data [23]. More significantly, it maintains power for older gene-flow events, including Hum→Nea, Sup→Den, and introgression from unknown archaic hominins into Africans (Sup→Afr) [23].

Application of ARGweaver-D to real hominin genomes revealed that approximately 3% of the Neanderthal genome was putatively introgressed from ancient humans, with estimated gene flow occurring 200-300 thousand years ago [23]. The method also predicted that about 1% of the Denisovan genome was introgressed from an unsequenced, highly diverged archaic hominin ancestor, with roughly 15% of these "super-archaic" regions subsequently passing into modern humans [23].

Table: Empirical Performance on Hominin Introgression Detection

| Introgression Event | S*/Sprime Performance | ARGweaver-D Performance | Key Findings |
|---|---|---|---|
| Neanderthal→Modern Humans | Well-powered for detection | Successfully detects even with few samples | Identifies 1-3% of non-African genomes as Neanderthal-derived [23] |
| Denisovan→Modern Humans | Well-powered for detection | Successfully detects with high confidence | Identifies 2-4% of Oceanian genomes as Denisovan-derived [23] |
| Ancient Humans→Neanderthal | Limited power | Confidently detects | Predicts 3% of Neanderthal genome from ancient humans [23] |
| Super-Archaic→Denisovan | Limited power | Confidently detects | Predicts 1% of Denisovan genome from unsequenced archaic [23] |

Experimental Protocols and Workflows

S* and Sprime Implementation

The implementation of S* and Sprime typically follows a standardized workflow. For S*, the analysis begins with genome-wide calculation of the S* statistic, which identifies regions with an excess of derived alleles and high divergence. These candidate regions are then filtered against predefined thresholds to eliminate false positives. Finally, the boundaries of putative introgressed segments are refined, and their lengths are estimated.
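The filtering and boundary-refinement steps of this workflow can be sketched as follows. This is a minimal illustration, not the S* implementation itself: it assumes the per-window scores have already been computed, and the function name `merge_candidate_windows`, the window coordinates, and the threshold value are all hypothetical.

```python
def merge_candidate_windows(starts, ends, scores, threshold):
    """Keep windows scoring at or above `threshold`, then merge
    overlapping or abutting survivors into candidate segments.

    Assumes windows are sorted by start coordinate. The scores stand in
    for a per-window statistic; how they are computed is out of scope.
    """
    segments = []
    for start, end, score in zip(starts, ends, scores):
        if score < threshold:
            continue  # window fails the filter
        if segments and start <= segments[-1][1]:
            # Touches the previous surviving window: extend that segment.
            prev_start, prev_end = segments[-1]
            segments[-1] = (prev_start, max(prev_end, end))
        else:
            segments.append((start, end))
    return segments


# Toy example with overlapping 100 bp windows (hypothetical values).
starts = [0, 50, 100, 200]
ends = [100, 150, 200, 300]
scores = [5, 6, 1, 7]
segments = merge_candidate_windows(starts, ends, scores, threshold=4)
lengths = [end - start for start, end in segments]
```

Merging after filtering means a single low-scoring window splits a candidate region in two, so the choice of threshold directly affects the estimated segment lengths.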

Sprime builds upon this foundation by incorporating a hidden Markov model to improve boundary detection. The workflow involves similar initial identification of candidate regions using the S* statistic, followed by application of an HMM to more precisely delineate segment boundaries. The HMM parameters are trained on the data, and the Viterbi algorithm is typically used to decode the most likely path of introgressed segments. Finally, posterior probabilities are calculated for each putative introgressed segment to assess confidence.
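To make the decoding step concrete, the sketch below runs the Viterbi algorithm on a generic two-state HMM (state 0 = non-introgressed, state 1 = introgressed). It illustrates the general technique only and is not taken from the Sprime software: the observation coding (1 = site carries a candidate archaic-like allele) and all probabilities are assumed values chosen for the example.

```python
import numpy as np

def viterbi(obs, log_start, log_trans, log_emit):
    """Most likely hidden-state path for a discrete HMM (log space).

    log_trans[i, j] is the log probability of moving from state i to j;
    log_emit[s, o] is the log probability of observing symbol o in state s.
    """
    n_obs, n_states = len(obs), log_trans.shape[0]
    score = np.full((n_obs, n_states), -np.inf)
    back = np.zeros((n_obs, n_states), dtype=int)
    score[0] = log_start + log_emit[:, obs[0]]
    for t in range(1, n_obs):
        cand = score[t - 1][:, None] + log_trans  # cand[i, j]: arrive in j from i
        back[t] = cand.argmax(axis=0)
        score[t] = cand.max(axis=0) + log_emit[:, obs[t]]
    # Trace back from the best final state.
    path = np.zeros(n_obs, dtype=int)
    path[-1] = score[-1].argmax()
    for t in range(n_obs - 2, -1, -1):
        path[t] = back[t + 1, path[t + 1]]
    return path


# Assumed toy parameters, not estimates from real data.
log_start = np.log([0.9, 0.1])
log_trans = np.log([[0.9, 0.1], [0.1, 0.9]])
log_emit = np.log([[0.9, 0.1], [0.2, 0.8]])
obs = [0, 0, 1, 1, 1, 0, 0]
path = viterbi(obs, log_start, log_trans, log_emit)
```

With these parameters the decoded path labels the run of three `1` observations as introgressed, showing how the transition probabilities set the minimum evidence needed to open and close a segment.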

ARGweaver-D Implementation

The ARGweaver-D workflow is more complex due to its model-based nature. The initial critical step involves specifying a demographic model that includes population divergence times, effective population sizes, and potential migration events. The algorithm then employs a Markov Chain Monte Carlo (MCMC) approach to sample ancestral recombination graphs (ARGs) conditional on this demographic model. From these sampled ARGs, migrant lineages are identified, representing potential introgression events. Finally, probabilities of introgression are calculated along the genome, providing a fine-scale map of gene flow events.
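The last step, turning sampled ARGs into probabilities along the genome, amounts to averaging over MCMC samples. The sketch below assumes each sample has already been reduced to a 0/1 flag per genomic position marking whether the lineage of interest is a migrant there; the function names, the burn-in handling, and the 0.5 cutoff are illustrative assumptions rather than ARGweaver-D's interface.

```python
import numpy as np

def introgression_posterior(migrant_flags, burn_in=0):
    """Per-position posterior probability of introgression.

    `migrant_flags` has shape (n_samples, n_positions); entry [s, p] is 1
    if MCMC sample s places the lineage in the migrant state at position p.
    The first `burn_in` samples are discarded before averaging.
    """
    kept = np.asarray(migrant_flags, dtype=float)[burn_in:]
    return kept.mean(axis=0)

def call_regions(posterior, cutoff=0.5):
    """Half-open index ranges where the posterior is at or above `cutoff`."""
    regions, start = [], None
    for i, p in enumerate(posterior):
        if p >= cutoff and start is None:
            start = i
        elif p < cutoff and start is not None:
            regions.append((start, i))
            start = None
    if start is not None:
        regions.append((start, len(posterior)))
    return regions


# Four hypothetical MCMC samples over four positions; drop the first as burn-in.
flags = [[0, 1, 1, 0],
         [0, 1, 0, 0],
         [0, 1, 1, 0],
         [1, 1, 1, 0]]
posterior = introgression_posterior(flags, burn_in=1)
regions = call_regions(posterior, cutoff=0.5)
```

Because the posterior is a sample average, its resolution is limited by the number of retained samples, which is one reason the MCMC step dominates runtime.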

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful implementation of these introgression detection methods requires specific computational resources and data inputs. The following table outlines key components of the research toolkit for phylogenetic analysis of introgression.

Table: Essential Research Reagents and Materials for Introgression Analysis

| Tool/Resource | Function/Purpose | Implementation Notes |
|---|---|---|
| High-Coverage Genomes | Primary input data for analysis | Multiple individuals per population enhance power [23] |
| Demographic Model | Population history framework (for ARGweaver-D) | Required for ARGweaver-D; includes divergence times and migration events [23] |
| Outgroup Sequence | Rooting phylogenetic trees and polarizing alleles | Essential for D-statistics and S* calculation; e.g., chimpanzee for hominin studies [23] |
| Reference Panels | Context for allele frequency spectra | Useful for S* but not required; can leverage existing datasets like 1000 Genomes |
| Computational Cluster | High-performance computing resources | Essential for ARGweaver-D MCMC sampling; reduces runtime for all methods |

The comparison of S*, Sprime, and ARGweaver-D reveals a fundamental trade-off between computational efficiency and analytical power in introgression detection. Summary statistic methods like S* and Sprime offer accessible approaches for detecting recent introgression, while ARGweaver-D provides a more powerful, model-based framework capable of uncovering ancient gene flow and complex demographic histories.

For researchers studying recent introgression with limited computational resources, Sprime is a sound choice, balancing sensitivity with reasonable computational demands. For investigations of deeper evolutionary history, complex gene flow scenarios, or ghost introgression from unsampled populations, ARGweaver-D is the better-suited option despite its significant computational requirements.

The continued development and refinement of these methods will further illuminate the complex web of interactions that have shaped the genomes of modern species, including humans, with potential implications for understanding disease susceptibility, adaptive traits, and evolutionary history.

The precise identification of introgressed genomic loci is a rapidly evolving area of research in population genetics [4]. Introgression, the transfer of genetic material between species or populations through hybridization and backcrossing, plays a significant role in evolution, potentially introducing adaptive traits or contributing to genetic load. Accurately detecting these introgressed sequences is crucial for understanding evolutionary history, adaptive processes, and functional consequences of gene flow.

Among the many methods developed, the D-statistic (ABBA-BABA test) and IBDmix represent two distinct conceptual and technical approaches to introgression detection [24] [20]. The D-statistic is a widely adopted, population-based method that relies on reference populations and tests for deviations from a strictly bifurcating tree model. In contrast, IBDmix is a more recent, individual-based method that identifies introgressed sequences by detecting segments identical by descent (IBD) without requiring an unadmixed reference population [24]. This guide compares the two methods, focusing on their performance in detecting introgression directionality across diverse research scenarios.

Theoretical Foundations and Methodological Principles

D-Statistic (ABBA-BABA Test)

The D-statistic is a parsimony-like method designed to detect gene flow between closely related species despite the existence of incomplete lineage sorting (ILS) [20]. It operates on a four-taxon system with an established phylogeny (((H1,H2),H3),O) and uses allele frequency patterns to test for introgression.

- Core Principle: The method compares counts of two discordant site patterns ("ABBA" and "BABA") that are equally likely under ILS but occur at different rates when gene flow has occurred [20].

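- Key Formula: The D-statistic is calculated as D = (ABBA - BABA) / (ABBA + BABA), where ABBA counts sites with the derived allele shared by H2 and H3 and BABA counts sites with the derived allele shared by H1 and H3. Under ILS alone D is expected to be zero; a significantly positive D indicates excess allele sharing, and hence gene flow, between H2 and H3, while a significantly negative D points to H1 and H3.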

- Reference Dependency: Requires an unadmixed reference population (H1) and an outgroup (O) for polarization of ancestral and derived alleles [20].

- Limitations: Provides qualitative evidence of gene flow but quantitative interpretation requires careful consideration of demographic parameters [20].
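The formula above can be evaluated directly from per-site derived-allele frequencies. The sketch below is a minimal illustration under one stated assumption: the outgroup is fixed for the ancestral allele at every site, so alleles are already polarized. The function name `d_statistic` and the toy frequencies are hypothetical.

```python
import numpy as np

def d_statistic(p1, p2, p3):
    """D = (ABBA - BABA) / (ABBA + BABA) from derived-allele frequencies.

    p1, p2, p3 are per-site derived-allele frequencies in H1, H2, H3.
    Assumes the outgroup carries the ancestral allele at every site, so a
    site's ABBA weight is (1 - p1) * p2 * p3 and its BABA weight is
    p1 * (1 - p2) * p3.
    """
    p1, p2, p3 = (np.asarray(p, dtype=float) for p in (p1, p2, p3))
    abba = (1.0 - p1) * p2 * p3
    baba = p1 * (1.0 - p2) * p3
    denom = abba.sum() + baba.sum()
    if denom == 0:
        raise ValueError("no informative ABBA or BABA sites")
    return (abba.sum() - baba.sum()) / denom


# Toy data: two ABBA sites, one BABA site, one uninformative site.
p1 = [0, 0, 1, 0]
p2 = [1, 1, 0, 0]
p3 = [1, 1, 1, 1]
d = d_statistic(p1, p2, p3)  # (2 - 1) / (2 + 1)
```

With single genomes per taxon the frequencies reduce to 0 or 1 and the weights become plain site counts; the value of D alone says nothing about significance, which requires a separate resampling or standard-error estimate.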

IBDmix

IBDmix is a probabilistic method that identifies introgressed sequences by detecting segments identical by descent (IBD) between a test individual and an archaic reference genome, without using a modern human reference population [24].

- Core Principle: Directly identifies genomic regions in modern individuals that share long, identical-by-descent segments with an archaic genome, indicating recent shared ancestry [24].

- Key Innovation: Eliminates the need for an unadmixed modern reference population, enabling detection of introgression in populations where such references are unavailable or inappropriate [24].

- Reference Independence: This approach avoids biases introduced when using potentially admixed populations as references, allowing for more accurate detection of introgression across diverse populations [24].

- Applications: Particularly valuable for detecting archaic introgression in African populations, where previous methods underestimated Neanderthal ancestry due to lack of appropriate reference populations [24].
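One way to picture segment detection of this kind is as a search for runs of sites whose cumulative evidence for IBD with the archaic genome is high. The sketch below is a simplified illustration, not the IBDmix code: it assumes per-site log-odds scores (positive when a site supports IBD, negative otherwise) are already available, and the function name and `min_score` threshold are hypothetical.

```python
def high_scoring_segments(site_scores, min_score):
    """Find runs of sites whose cumulative score peaks at or above `min_score`.

    A run opens at the first positive score, is extended while its running
    sum stays above zero, and is trimmed back to the position where the
    running sum peaked. Returns (start, end, peak_score) with half-open
    site indices.
    """
    segments = []
    start, running, best, best_end = None, 0.0, 0.0, None
    for i, s in enumerate(site_scores):
        if start is None:
            if s <= 0:
                continue  # nothing to open a run with
            start, running, best, best_end = i, 0.0, 0.0, i
        running += s
        if running > best:
            best, best_end = running, i
        if running <= 0:
            # Evidence exhausted: close the run at its peak.
            if best >= min_score:
                segments.append((start, best_end + 1, best))
            start = None
    if start is not None and best >= min_score:
        segments.append((start, best_end + 1, best))
    return segments


# Hypothetical per-site scores for one individual.
scores = [1, 2, -1, 3, -6, 0.5, 4, -1]
segments = high_scoring_segments(scores, min_score=3)
```

Trimming each run to its peak keeps weakly supported flanking sites out of the call, and raising `min_score` trades sensitivity to short segments for fewer spurious calls.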

Table 1: Fundamental Characteristics of D-Statistic and IBDmix

| Feature | D-Statistic | IBDmix |
|---|---|---|
| Methodological Category | Summary statistic/Population-based | Probabilistic modeling/Individual-based |
| Core Principle | Allele frequency patterns (ABBA/BABA sites) | Identity-by-descent (IBD) segment sharing |
| Reference Requirement | Requires unadmixed reference population | No modern reference population needed |
| Data Input | SNP data or sequence alignment | Genome sequences (modern and archaic) |
| Primary Output | Statistical evidence for population-level introgression | Identification of introgressed segments in individuals |
| Introgression Direction | Can infer direction with careful study design | Can directly infer direction from IBD sharing |

Method Workflows

[Figure: Analytical workflows of the D-statistic and IBDmix approaches]

Performance Comparison and Experimental Data

Power and Sensitivity Across Scenarios

Recent evaluations of introgression detection methods reveal critical differences in performance across evolutionary scenarios:

Table 2: Performance Comparison Across Evolutionary Scenarios

| Scenario | D-Statistic Performance | IBDmix Performance | Supporting Evidence |
|---|---|---|---|
| Recent Introgression (Neanderthal-Non-African) | High power with appropriate reference | High power, detects 2-4% of genome | [24] [25] |
| Deep Divergence (>1% sequence distance) | Effective but sensitive to population size | Maintains power with sufficient IBD | [20] |
| African Populations with Archaic Ancestry | Limited due to reference dependency | Superior, detects stronger Neanderthal signal | [24] |
| Multiple Pulse Introgression | Can detect but may conflate signals | Can distinguish multiple pulses via segment length | [25] [26] |
| Ghost Population Introgression | Limited to inferred patterns | Can detect without reference genome | [4] [25] |
| Directionality Inference | Requires careful study design | Direct inference from IBD sharing | [24] [26] |

Key Performance Limitations

D-Statistic Limitations:

- Sensitivity is primarily determined by relative population size (population size scaled by generations since divergence) [20]

- Requires accurate species tree and unadmixed reference population

- Becomes less reliable when reference population is admixed [24]

- Provides population-level inference but limited individual-level detection

IBDmix Limitations:

- Requires high-quality archaic reference genome

- Performance depends on IBD segment length preservation

- May miss highly fragmented archaic segments due to recombination

- Computational complexity higher than summary statistics

Experimental Protocols and Implementation

Standard D-Statistic Implementation

Data Preparation:

- Variant Calling: Generate SNP datasets for all populations (H1, H2, H3, Outgroup)

- Filtering: Apply standard quality filters (mapping quality, base quality, missing data)

- Phylogenetic Framework: Validate the assumed species tree (((H1,H2),H3),O)

Analysis Workflow:

- Site Pattern Identification:

- Parse VCF files to identify ABBA (derived in H3 and H2) and BABA (derived in H3 and H1) sites

- Use outgroup to polarize ancestral/derived states

- Count Calculation:

- Sum ABBA and BABA patterns across all informative sites

- Exclude regions with potential recombination or selection if needed

- Statistical Testing:

- Calculate D = (ABBA - BABA) / (ABBA + BABA)