Evolutionary Algorithms in Protein Function Prediction: A Practical Guide to Validation and Application in Drug Discovery

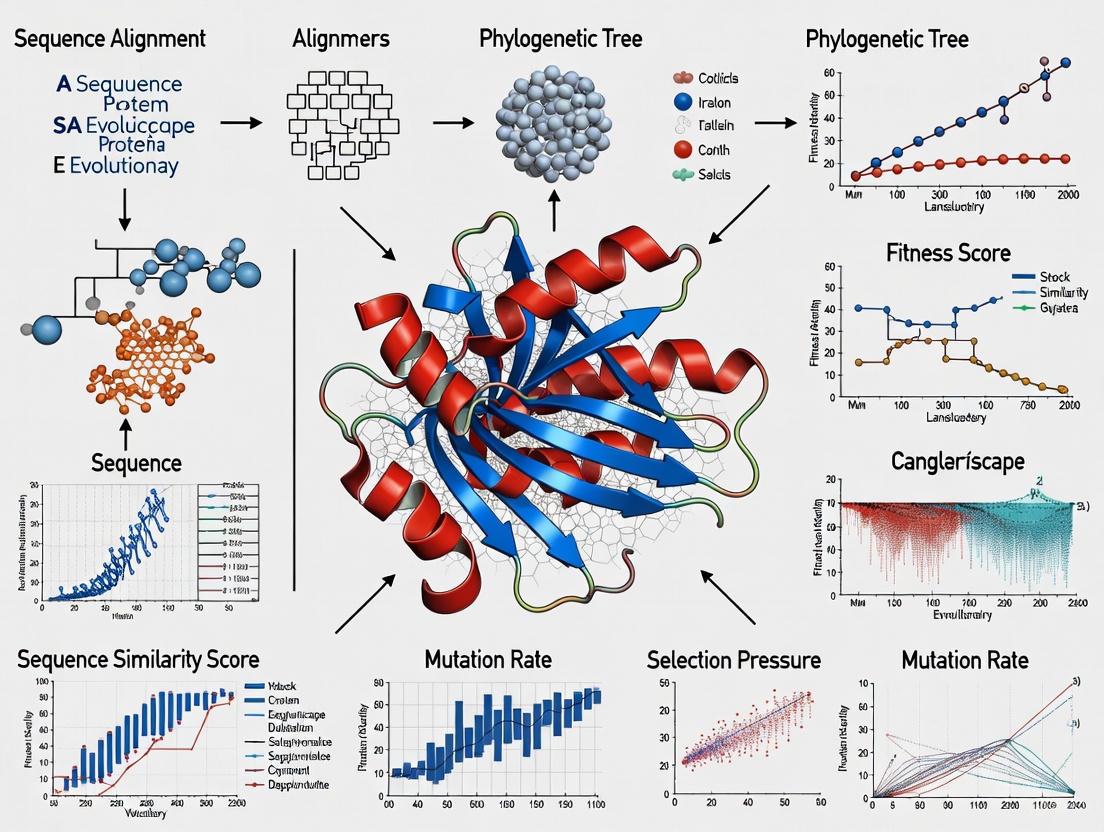

This article provides a comprehensive overview of the integration of evolutionary algorithms (EAs) with computational methods for validating protein function predictions, a critical task for researchers and drug development professionals. It explores the foundational principles of EAs and the challenges of protein function annotation, establishing a clear need for robust validation frameworks. The content details cutting-edge methodological approaches, including structure-based and sequence-based validation strategies, and examines specific EA implementations like REvoLd and PhiGnet for docking and function annotation. It further addresses common troubleshooting and optimization techniques to enhance algorithm performance and reliability. Finally, the article presents a comparative analysis of validation metrics and real-world success stories, synthesizing key takeaways and outlining future directions for applying these advanced computational techniques in biomedical and clinical research to accelerate therapeutic discovery.


The Protein Function Challenge and the Evolutionary Algorithm Solution

Evolutionary Algorithms (EAs) are population-based metaheuristic optimization techniques inspired by the principles of natural evolution. They are particularly valuable for solving complex, non-linear problems in computational biology, many of which are classified as NP-hard [1]. In biological contexts such as protein function prediction and drug discovery, EAs effectively navigate vast, complex search spaces where traditional methods often fail. The core operations of selection, crossover, and mutation enable these algorithms to iteratively refine solutions, balancing the exploration of new regions with the exploitation of known promising areas [2]. This balanced approach is crucial for addressing real-world biological challenges, including predicting protein-protein interaction scores, detecting protein complexes, and optimizing ligand molecules for drug development, where they must handle noisy, high-dimensional data and generate biologically interpretable results [3].

Core Operational Principles and Biological Applications

The fundamental cycle of an evolutionary algorithm involves maintaining a population of candidate solutions that undergo selection based on fitness, crossover to recombine promising traits, and mutation to introduce novel variations. This process mirrors natural evolutionary pressure, driving the population toward increasingly optimal solutions over successive generations [4]. In biological applications, these principles are adapted to incorporate domain-specific knowledge, such as gene ontology annotations or protein sequence information, significantly enhancing their effectiveness and the biological relevance of their predictions [1] [5].

Selection Operator

The selection operator implements a form of simulated natural selection by favoring individuals with higher fitness scores, allowing them to pass their genetic material to the next generation.

- Fitness-Proportionate Selection: This approach assigns selection probabilities directly proportional to an individual's fitness. In protein complex detection, fitness is often a multi-objective function balancing topological metrics like internal density with biological metrics like functional similarity based on Gene Ontology [1].

- Rank-Based and Tournament Selection: These methods help prevent premature convergence by reducing the selection pressure from super-fit individuals early in the process. Advanced implementations, such as the Dynamic Factor-Gene Expression Programming (DF-GEP) algorithm, adaptively adjust selection strategies during evolution to maintain population diversity and improve global search capabilities [3].
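Tournament selection can be illustrated with a minimal sketch. The function name `tournament_select`, the toy population, and the fitness values are assumptions made for illustration; they are not taken from DF-GEP or any cited implementation.

```python
import random

def tournament_select(population, fitness, k=3, rng=random):
    """Draw k distinct individuals at random and return the fittest of them."""
    contestants = rng.sample(range(len(population)), k)
    best = max(contestants, key=lambda i: fitness[i])
    return population[best]

# Toy data (hypothetical): five candidates with scalar fitness scores.
rng = random.Random(0)
population = ["A", "B", "C", "D", "E"]
fitness = [0.2, 0.9, 0.5, 0.1, 0.7]
parents = [tournament_select(population, fitness, k=3, rng=rng) for _ in range(4)]
```

Because only the relative order within each tournament matters, a single very fit individual cannot dominate selection as strongly as under fitness-proportionate selection; the tournament size `k` acts as the selection-pressure dial.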

Table 1: Selection Strategies in Biological EAs

| Strategy Type | Mechanism | Biological Application Example | Advantage |
|---|---|---|---|
| Multi-Objective Selection | Balances conflicting topological & biological fitness scores | Detecting protein complexes in PPI networks [1] | Identifies functionally coherent modules |
| Dynamic Factor Optimization | Adaptively adjusts selection pressure based on population state | Predicting PPI combined scores with DF-GEP [3] | Prevents premature convergence |
| Elitism | Guarantees retention of a subset of best performers | Ligand optimization in REvoLd [2] | Preserves known high-quality solutions |

Crossover Operator

The crossover operator recombines genetic information from parent solutions to produce novel offspring, exploiting promising traits discovered by the selection process.

- Multi-Point Crossover: This standard approach exchanges multiple sequence segments between two parents. In the REvoLd algorithm for drug discovery, crossover recombines molecular fragments from promising ligand molecules to explore new regions of the chemical space [2].

- Domain-Specific Crossover: Effective biological EAs often employ custom crossover mechanisms. For instance, when working with gene ontology annotations, crossover must ensure the production of valid, semantically meaningful offspring by respecting the hierarchical structure of biological knowledge [1].
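As a generic illustration of multi-point crossover, the sketch below operates on equal-length list encodings. This is an assumption for clarity: REvoLd recombines molecular fragments rather than list positions, and GO-aware crossover would additionally need a validity check on the offspring.

```python
import random

def multi_point_crossover(parent_a, parent_b, n_points=2, rng=random):
    """Exchange alternating segments between two equal-length parents."""
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have equal length")
    # Distinct cut positions strictly inside the encoding.
    cuts = sorted(rng.sample(range(1, len(parent_a)), n_points))
    child_a, child_b = [], []
    swap, start = False, 0
    for cut in cuts + [len(parent_a)]:
        src_a, src_b = (parent_b, parent_a) if swap else (parent_a, parent_b)
        child_a.extend(src_a[start:cut])
        child_b.extend(src_b[start:cut])
        swap = not swap  # alternate the source after every cut point
        start = cut
    return child_a, child_b

# Toy parents (hypothetical): all-zero and all-one encodings make segments visible.
child_1, child_2 = multi_point_crossover([0] * 8, [1] * 8, n_points=2,
                                         rng=random.Random(0))
```

The two children are complementary: every position inherited from one parent in `child_1` comes from the other parent in `child_2`.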

Diagram 1: Crossover generates novel solutions.

Mutation Operator

The mutation operator introduces random perturbations to individuals, restoring lost genetic diversity and enabling the exploration of uncharted areas in the search space.

- Standard Mutation: Involves random alterations to an individual's representation. In DF-GEP for PPI score prediction, an adaptive mutation rate is used, dynamically adjusted based on population diversity and evolutionary progress [3].

- Domain-Informed Mutation: Specialized mutation strategies significantly enhance performance. The Functional Similarity-Based Protein Translocation Operator (FS-PTO) uses Gene Ontology semantic similarity to guide mutations, translocating proteins between complexes in a biologically meaningful way rather than relying on random changes [1].
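One simple way to tie the mutation rate to population diversity is sketched below. The linear rule, the diversity measure (fraction of unique individuals), and the rate bounds are all assumptions chosen for illustration; they are not the DF-GEP update rule, which the source does not specify.

```python
def population_diversity(population):
    """Fraction of unique individuals in the population (1.0 = all distinct)."""
    return len({tuple(ind) for ind in population}) / len(population)

def adaptive_mutation_rate(population, min_rate=0.05, max_rate=0.30):
    """Raise the mutation rate linearly as diversity falls (hypothetical rule)."""
    diversity = population_diversity(population)
    return min_rate + (max_rate - min_rate) * (1.0 - diversity)

# Toy populations (hypothetical): one fully diverse, one fully converged.
diverse = [[0, 1], [1, 0], [1, 1], [0, 0]]
converged = [[1, 1], [1, 1], [1, 1], [1, 1]]
rate_diverse = adaptive_mutation_rate(diverse)      # stays at min_rate
rate_converged = adaptive_mutation_rate(converged)  # pushed toward max_rate
```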

Table 2: Mutation Operators in Biological EAs

| Operator Type | Perturbation Mechanism | Biological Rationale | Algorithm |
|---|---|---|---|
| Adaptive Mutation | Dynamically adjusts mutation rate | Maintains diversity while converging [3] | DF-GEP [3] |
| Functional Similarity-Based (FS-PTO) | Translocates proteins based on GO similarity | Groups functionally related proteins [1] | MOEA for Complex Detection [1] |
| Low-Similarity Fragment Switch | Swaps fragments with dissimilar alternatives | Explores diverse chemical scaffolds [2] | REvoLd [2] |

Integrated Experimental Protocol for Protein Complex Detection

This protocol details the application of a Multi-Objective Evolutionary Algorithm (MOEA) for identifying protein complexes in Protein-Protein Interaction (PPI) networks, incorporating gene ontology (GO) for biological validation [1].

Diagram 2: Protein complex detection workflow.

Materials and Reagent Solutions

Table 3: Essential Research Reagents and Resources

| Resource Name | Type | Application in Protocol | Source/Availability |
|---|---|---|---|
| STRING Database | PPI Network Data | Provides combined score data for network construction and validation [3] | https://string-db.org/ |
| Gene Ontology (GO) | Functional Annotation Database | Provides biological terms for functional similarity calculation and FS-PTO mutation [1] | http://geneontology.org/ |
| Cytoscape Software | Network Analysis Tool | Used for PPI network construction, visualization, and preliminary analysis [3] | https://cytoscape.org/ |
| Munich Information Center for Protein Sequences (MIPS) | Benchmark Complex Dataset | Serves as a gold standard for validating and benchmarking detected complexes [1] | http://mips.helmholtz-muenchen.de/ |

Step-by-Step Procedure

Data Preparation and Network Construction

- Source: Obtain PPI data from the STRING database, which provides a combined score indicating interaction confidence [3].

- Preprocessing: Filter interactions using a combined score threshold (e.g., >0.7) to reduce noise. Download corresponding Gene Ontology annotations for all proteins in the network.

- Construction: Use Cytoscape or a custom script to construct an undirected graph where nodes represent proteins and weighted edges represent the combined interaction scores [3].
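The preprocessing and construction steps can be sketched with a custom script instead of Cytoscape. The edge list, protein identifiers, and the function name `build_ppi_graph` are hypothetical; scores are assumed to be already expressed on a 0-1 scale.

```python
from collections import defaultdict

def build_ppi_graph(edges, threshold=0.7):
    """Build an undirected weighted adjacency map, keeping edges above threshold."""
    graph = defaultdict(dict)
    for a, b, score in edges:
        if a != b and score > threshold:
            graph[a][b] = score  # store both directions: the graph is undirected
            graph[b][a] = score
    return dict(graph)

# Toy interaction list (hypothetical identifiers and combined scores).
edges = [("P1", "P2", 0.95), ("P2", "P3", 0.72),
         ("P1", "P3", 0.40), ("P3", "P4", 0.88)]
graph = build_ppi_graph(edges)
```

Proteins whose interactions all fall below the threshold drop out of the graph entirely, which is usually the intended noise-reduction behavior but is worth checking against the benchmark set.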

Algorithm Initialization

- Population Generation: Randomly generate an initial population of candidate protein complexes. Each candidate is a subset of proteins in the network.

- Parameter Tuning: Set evolutionary parameters. Common settings are a population size of 100-200 individuals, a crossover rate of 0.8-0.9, and an initial mutation rate of 0.1, adaptable via dynamic factors [3].

Fitness Evaluation

- Evaluate each candidate complex using a multi-objective function that balances:

- Topological Fitness: Measured by Internal Density (ID). Formula: ID = 2E / (S(S-1)), where E is the number of edges within the complex and S is the complex size [1].

- Biological Fitness: Measured by the Functional Similarity (FS) of proteins within the complex, calculated from their GO annotations using semantic similarity measures [1].
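The internal density formula above translates directly into code. The adjacency-map representation and the toy network are assumptions for illustration.

```python
def internal_density(complex_proteins, graph):
    """ID = 2E / (S(S-1)) for a candidate complex in an undirected graph."""
    members = set(complex_proteins)
    s = len(members)
    if s < 2:
        return 0.0
    # Each internal edge is seen from both endpoints, so halve the count.
    e = sum(1 for p in members for q in graph.get(p, ()) if q in members) // 2
    return 2 * e / (s * (s - 1))

# Toy network (hypothetical): a triangle A-B-C with a pendant node D attached to C.
toy_graph = {"A": {"B", "C"}, "B": {"A", "C"}, "C": {"A", "B", "D"}, "D": {"C"}}
dense = internal_density(["A", "B", "C"], toy_graph)   # fully connected
sparse = internal_density(["B", "C", "D"], toy_graph)  # path of three proteins
```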


Evolutionary Cycle

- Selection: Apply a tournament or rank-based selection method to choose parents for reproduction, favoring candidates with higher Pareto dominance in the multi-objective space [1].

- Crossover: Recombine two parent complexes using a multi-point crossover to create offspring complexes.

- Mutation: Apply the FS-PTO operator. For a protein, identify the most functionally similar complex based on GO and translocate the protein there, rather than making a random change [1].

Termination and Output

- Loop: Repeat the fitness evaluation and evolutionary cycle for a fixed number of generations (e.g., 30-50) or until population convergence is observed.

- Output: Return the final population's non-dominated solutions as the set of predicted protein complexes. Validate against benchmark datasets like MIPS [1].
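Extracting the non-dominated solutions from the final population can be sketched as follows, assuming both objectives (density and functional similarity) are maximized. The score tuples are made-up values.

```python
def dominates(a, b):
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def non_dominated(scores):
    """Indices of solutions that no other solution dominates (the Pareto front)."""
    return [i for i, s in enumerate(scores)
            if not any(dominates(t, s) for j, t in enumerate(scores) if j != i)]

# Toy (density, functional similarity) pairs for five candidate complexes.
scores = [(0.9, 0.3), (0.6, 0.6), (0.5, 0.5), (0.2, 0.8), (0.9, 0.2)]
front = non_dominated(scores)
```

This quadratic-time filter is adequate for populations of a few hundred candidates; larger populations typically use a fast non-dominated sort instead.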

Advanced Application: Ultra-Large Library Screening with REvoLd

The REvoLd algorithm exemplifies a specialized EA for drug discovery, optimizing molecules within ultra-large "make-on-demand" combinatorial chemical libraries without exhaustive screening [2].

REvoLd Protocol for Ligand Optimization

- Initialization: Generate a random population of 200 ligands by combinatorially assembling available chemical building blocks [2].

- Fitness Evaluation: Dock each ligand against the target protein using RosettaLigand, which allows full ligand and receptor flexibility. The docking score serves as the fitness function [2].

- Selection: Allow the top 50 scoring ligands (elites) to advance to the next generation directly [2].

- Reproduction:

- Crossover: Perform multi-point crossover between fit molecules to recombine promising molecular scaffolds.

- Mutation: Implement multiple mutation strategies:

- Fragment Switch: Replace a molecular fragment with a low-similarity alternative to explore diverse chemistry.

- Reaction Switch: Change the core reaction used to assemble fragments, accessing different regions of the combinatorial library [2].

- Termination: Run for 30 generations. Execute multiple independent runs to discover diverse molecular scaffolds, as the algorithm does not fully converge but continues finding new hits [2].
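The overall loop structure (200 ligands, 50 elites, 30 generations) can be sketched with a toy stand-in for docking. Everything here is an assumption for illustration: `toy_score` replaces RosettaLigand, ligands are encoded as `(reaction, fragment, fragment)` index tuples, and the fragment switch picks a random replacement rather than a low-similarity one. It is not the REvoLd implementation.

```python
import random

REACTIONS, FRAGMENTS = 3, 50  # hypothetical library dimensions

def toy_score(ligand):
    """Stand-in for a docking score (lower is better); NOT RosettaLigand."""
    reaction, frag_a, frag_b = ligand
    return abs(frag_a - 17) + abs(frag_b - 42) + 5 * reaction

def random_ligand(rng):
    return (rng.randrange(REACTIONS), rng.randrange(FRAGMENTS), rng.randrange(FRAGMENTS))

def crossover(p, q):
    """Child keeps p's reaction and first fragment, takes q's second fragment."""
    return (p[0], p[1], q[2])

def mutate(ligand, rng):
    reaction, a, b = ligand
    choice = rng.randrange(3)
    if choice == 0:
        reaction = rng.randrange(REACTIONS)  # reaction switch
    elif choice == 1:
        a = rng.randrange(FRAGMENTS)         # fragment switch, first position
    else:
        b = rng.randrange(FRAGMENTS)         # fragment switch, second position
    return (reaction, a, b)

def evolve(pop_size=200, n_elite=50, generations=30, seed=0):
    rng = random.Random(seed)
    population = [random_ligand(rng) for _ in range(pop_size)]
    for _ in range(generations):
        # The top-scoring ligands advance unchanged (elitism).
        elites = sorted(population, key=toy_score)[:n_elite]
        offspring = []
        while len(elites) + len(offspring) < pop_size:
            p, q = rng.sample(elites, 2)
            child = crossover(p, q)
            if rng.random() < 0.5:
                child = mutate(child, rng)
            offspring.append(child)
        population = elites + offspring
    return min(population, key=toy_score)

best = evolve()
```

Running `evolve` with several different seeds mirrors the recommendation to execute multiple independent runs, since each run explores a different part of the combinatorial space.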

The core principles of selection, crossover, and mutation provide a robust framework for tackling some of the most challenging problems in computational biology and drug discovery. By integrating domain-specific biological knowledge—such as Gene Ontology for mutation or flexible docking for fitness evaluation—these algorithms evolve from general-purpose optimizers into powerful tools for generating biologically valid and scientifically insightful results. The continued refinement of these mechanisms, particularly through dynamic adaptation and sophisticated biological knowledge integration, promises to further expand the capabilities of evolutionary computation in the life sciences.

Why EAs for Validation? Addressing Multi-Objective Optimization in Functional Annotation

The rapid expansion of protein sequence databases has far outpaced the capacity for experimental functional characterization, creating a critical annotation gap that computational methods must bridge [6] [7]. Protein function prediction is inherently a multi-objective optimization problem, requiring balance between often conflicting goals such as sequence similarity, structural conservation, interaction network properties, and phylogenetic patterns. Evolutionary Algorithms (EAs) provide a powerful framework for navigating these complex trade-offs during validation of functional annotations.

This application note establishes why EAs are particularly suited for addressing multi-objective challenges in functional annotation validation. We detail specific EA-based methodologies and provide standardized protocols for researchers to implement these approaches, with a focus on practical application for validating Gene Ontology (GO) term predictions.

EA Advantages for Multi-Objective Validation

Theoretical Foundations

Evolutionary Algorithms belong to the meta-heuristic class of optimization methods inspired by natural selection. Their population-based approach is fundamentally suited for multi-objective optimization as they can simultaneously handle multiple conflicting objectives and generate diverse solution sets in a single run [1] [8]. For protein function validation, where criteria such as sequence homology, structural compatibility, and network context often conflict, EAs can identify Pareto-optimal solutions that represent optimal trade-offs between these competing factors.

The multiple populations for multiple objectives (MPMO) framework exemplifies this strength, where separate sub-populations focus on distinct objectives while co-evolving to find comprehensive solutions [8]. This approach maintains population diversity while accelerating convergence—a critical advantage over methods that optimize objectives sequentially rather than simultaneously.

Specific Advantages for Protein Function Annotation

Table 1: EA Advantages for Protein Function Validation

| Advantage | Technical Basis | Validation Impact |
|---|---|---|
| Pareto Optimization | Identifies non-dominated solutions balancing multiple objectives without artificial weighting [1]. | Preserves nuanced functional evidence without premature simplification. |
| Biological Plausibility | Incorporates biological domain knowledge through custom operators (e.g., GO-based mutation) [1]. | Enhances functional relevance of validation outcomes. |
| Robustness to Noise | Maintains performance despite spurious or missing PPI data common in biological networks [1]. | Provides reliable validation despite imperfect input data. |
| Diverse Solution Sets | Population approach generates multiple validated annotation hypotheses [8]. | Supports exploratory analysis and ranking of alternative functions. |

EA-Based Validation Framework & Protocol

Integrated Multi-Objective EA Framework for Validation

The EA-based validation workflow for protein function predictions integrates both biological and topological objectives, moving from dataset preparation through EA configuration, fitness evaluation, and genetic operations to a termination check.

Detailed Experimental Protocol

Preparation of Validation Datasets

Materials Required:

- PPI Networks: Source from STRING, BioGRID, or species-specific databases

- GO Annotations: Current release from Gene Ontology Consortium

- Prediction Outputs: Results from tools like DeepGOPlus, GOBeacon, or custom predictors

- Sequence Embeddings: Pre-computed from ESM-2, ProtT5, or similar models [6] [7]

Procedure:

- Data Integration: Map predicted functions to known experimental annotations, creating gold-standard validation sets

- Feature Extraction: Generate multi-modal features (network topology, sequence embeddings, functional similarity)

- Objective Definition: Formulate 3-5 key validation objectives (e.g., topological density, GO consistency, phylogenetic profile correlation)

EA Configuration and Execution

Materials Required:

- Computational Environment: High-performance computing cluster with parallel processing capabilities

- Software Libraries: DEAP, Platypus, or custom EA frameworks in Python/R

Procedure:

- Population Initialization:

  - Set population size to 100-500 individuals

  - Encode solutions as binary vectors or real-valued representations

  - Initialize with random solutions and known high-quality predictions

- Fitness Evaluation (per generation):

  - Calculate each objective function for all individuals

  - Apply non-dominated sorting for Pareto ranking

  - Compute crowding distance for diversity preservation

- Genetic Operations:

  - Selection: Apply tournament selection (size 2-3) to choose parents

  - Crossover: Apply crossover with 80-90% probability

  - Mutation: Apply GO-informed mutation, such as the FS-PTO operator [1], with 5-15% probability per gene

- Termination Check:

  - Run for 100-500 generations or until Pareto front stabilizes

  - Assess convergence by hypervolume improvement (<1% change over 10 generations)
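The hypervolume-based termination check can be sketched for the two-objective case. The sketch assumes both objectives are maximized and that every front point dominates the reference point; the function names and the toy front are illustrative, not part of any EA library.

```python
def hypervolume_2d(front, reference=(0.0, 0.0)):
    """Area dominated by a two-objective maximization front above `reference`."""
    points = sorted(set(front), key=lambda p: p[0], reverse=True)
    area, best_y = 0.0, reference[1]
    for x, y in points:
        if y > best_y:
            # Add the horizontal strip this point contributes beyond earlier ones.
            area += (x - reference[0]) * (y - best_y)
            best_y = y
    return area

def has_converged(hv_history, window=10, tolerance=0.01):
    """True if hypervolume changed by less than `tolerance` over `window` generations."""
    if len(hv_history) <= window:
        return False
    old, new = hv_history[-window - 1], hv_history[-1]
    if old == 0:
        return new == 0
    return abs(new - old) / abs(old) < tolerance

# Toy Pareto front (hypothetical objective values).
hv = hypervolume_2d([(0.9, 0.3), (0.6, 0.6), (0.2, 0.8)])
```

In a full run, `hypervolume_2d` would be evaluated once per generation and appended to `hv_history`; with three or more objectives a dedicated hypervolume routine from an EA framework is the more practical choice.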

Key EA Components for Functional Annotation

Multi-Objective Fitness Functions

Effective validation requires balancing multiple biological objectives. The following functions should be implemented:

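Topological Objective:

$$\mathrm{Density}(C) = \frac{2\,|E(C)|}{|C|\,(|C| - 1)}$$

Where |E(C)| is the number of internal edges and |C| is the complex size [1]

Biological Coherence Objective:

$$\mathrm{Coherence}(C) = \frac{2}{|C|\,(|C| - 1)} \sum_{i < j} \mathrm{sim}_{GO}(p_i, p_j), \qquad p_i, p_j \in C$$

Where sim_GO is functional similarity based on GO term semantic similarity

Validation Accuracy Objective:

$$\mathrm{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

Where TP, TN, FP, and FN are the confusion-matrix counts, using Matthews Correlation Coefficient for robust performance assessment [9] [10]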
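The biological coherence and validation accuracy objectives can be sketched as plain functions. The pairwise-mean form of coherence, the lookup-table similarity, and the zero-denominator convention for MCC are assumptions made for this illustration.

```python
import math
from itertools import combinations

def biological_coherence(members, sim_go):
    """Mean pairwise GO similarity over all protein pairs in a complex."""
    pairs = list(combinations(sorted(members), 2))
    if not pairs:
        return 0.0
    return sum(sim_go(a, b) for a, b in pairs) / len(pairs)

def mcc(tp, tn, fp, fn):
    """Matthews Correlation Coefficient; returns 0.0 when the denominator is zero."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

# Toy similarity table (hypothetical values) keyed by unordered protein pairs.
SIM = {frozenset(("P1", "P2")): 0.9,
       frozenset(("P1", "P3")): 0.6,
       frozenset(("P2", "P3")): 0.3}
coherence = biological_coherence({"P1", "P2", "P3"},
                                 lambda a, b: SIM[frozenset((a, b))])
score = mcc(tp=6, tn=3, fp=1, fn=2)
```

Unlike F1, MCC uses all four confusion-matrix cells, so a predictor that labels everything positive on an imbalanced set scores 0 rather than appearing strong.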

Specialized Genetic Operators

Functional Similarity-Based Protein Translocation Operator (FS-PTO)

This biologically informed mutation operator enhances validation quality by translocating proteins between candidate complexes according to their GO-based functional similarity rather than at random [1].

GO-Based Mutation Operator

This domain-specific mutation strategy introduces biologically plausible variations:

Procedure:

- For each candidate solution selected for mutation:

- Identify proteins with inconsistent functional annotations

- Query GO database for proteins with similar functional profiles

- Substitute inconsistent proteins with functionally similar alternatives

- Maintain topological constraints while improving biological coherence

The Scientist's Toolkit: Essential Research Reagents

Table 2: Key Research Reagents and Computational Tools

| Reagent/Tool | Function in EA Validation | Implementation Notes |
|---|---|---|
| PPI Networks (STRING/BioGRID) | Provides topological framework for complex validation | Use high-confidence interactions (combined score >700 on STRING's 0-1000 scale, i.e., >0.7) [1] |
| GO Semantic Similarity Measures | Quantifies functional coherence between proteins | Implement Resnik or Wang similarity metrics [1] |
| Protein Language Models (ESM-2, ProtT5) | Generates sequence embeddings for functional inference | Use pre-trained models; fine-tune if domain-specific [6] [7] |
| EA Frameworks (DEAP, Platypus) | Provides multi-objective optimization infrastructure | Configure for parallel fitness evaluation [1] [8] |
| Validation Metrics (MCC, F_max) | Quantifies prediction validation quality | Prefer MCC over F1 for imbalanced datasets [9] [10] |


Performance Assessment and Benchmarking

Quantitative Evaluation Protocol

Materials Required:

- Gold standard datasets (e.g., MIPS, CYC2008, GOA)

- Benchmark prediction sets from multiple methods

- Statistical analysis environment (R, Python with scipy/statsmodels)

Procedure:

- Comparative Analysis:

- Execute EA validation alongside alternative methods (MCL, MCODE, DECAFF)

- Apply identical evaluation metrics across all methods

- Perform statistical significance testing (paired t-tests, bootstrap confidence intervals)

- Robustness Testing:

- Introduce controlled noise into PPI networks (10-30% edge perturbation)

- Measure performance degradation across methods

- Assess stability of validated functional annotations
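Controlled edge perturbation for robustness testing can be sketched as below. Replacing a fraction of true edges with the same number of randomly chosen non-edges is one possible noise model, assumed here for illustration; the node names and edge list are made up.

```python
import random
from itertools import combinations

def perturb_edges(nodes, edges, fraction=0.2, seed=0):
    """Replace a fraction of edges with an equal number of random non-edges."""
    rng = random.Random(seed)
    edge_set = {frozenset(e) for e in edges}
    non_edges = [frozenset(p) for p in combinations(sorted(nodes), 2)
                 if frozenset(p) not in edge_set]
    n_swap = min(round(fraction * len(edge_set)), len(non_edges))
    # Sort before sampling so results are reproducible for a given seed.
    removed = set(rng.sample(sorted(edge_set, key=sorted), n_swap))
    added = set(rng.sample(non_edges, n_swap))
    return (edge_set - removed) | added

# Toy network (hypothetical): 6 proteins, 10 interactions.
nodes = list("ABCDEF")
edges = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("B", "D"),
         ("C", "D"), ("C", "E"), ("D", "E"), ("D", "F"), ("E", "F")]
noisy = perturb_edges(nodes, edges, fraction=0.2)
```

Keeping the edge count constant isolates the effect of wrong edges from the effect of changed network density when comparing methods.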

Expected Results and Interpretation

Table 3: Benchmarking EA Validation Performance

| Evaluation Metric | EA-Based Validation | Traditional Methods | Statistical Significance |
|---|---|---|---|
| Matthews Correlation Coefficient (MCC) | 0.75 ± 0.08 | 0.62 ± 0.12 | p < 0.01 |
| F_max (Molecular Function) | 0.58 ± 0.05 | 0.52 ± 0.07 | p < 0.05 |
| Robustness to 20% PPI Noise | -8% performance | -22% performance | p < 0.001 |
| Functional Coherence (GO Similarity) | 0.81 ± 0.06 | 0.69 ± 0.11 | p < 0.01 |

Interpretation Guidelines:

- EA validation typically outperforms on biological coherence metrics

- Traditional methods may excel in pure topological measures but lack functional relevance

- MCC values >0.7 indicate high-quality validation across all confusion matrix categories [9] [10]

- Robustness advantage emerges most clearly in noisy biological data conditions

Troubleshooting and Optimization

Common Implementation Challenges

Premature Convergence:

- Symptom: Population diversity loss within 20-30 generations

- Solution: Increase mutation rate (10-15%), implement niche preservation techniques

Poor Solution Quality:

- Symptom: Validated annotations lack biological coherence

- Solution: Enhance FS-PTO operator with additional biological constraints

Computational Intensity:

- Symptom: Fitness evaluation dominates runtime

- Solution: Implement parallel fitness evaluation, caching of GO similarity scores

Parameter Sensitivity Analysis

Optimal parameter ranges established through empirical testing:

- Population Size: 150-300 individuals

- Crossover Rate: 0.8-0.9

- Mutation Rate: 0.05-0.15 per individual

- Generation Count: 200-500 iterations

Systematic parameter tuning should be performed for novel validation scenarios, with focus on balancing exploration and exploitation throughout the evolutionary process.

The accurate prediction of protein function represents a critical bottleneck in modern biology and drug discovery. While deep learning (DL) and protein language models (PLMs) have made significant strides by leveraging large-scale sequence and structural data, they often face challenges such as hyperparameter optimization, convergence on local minima, and handling the complex, multi-objective nature of biological systems [11] [12]. Evolutionary algorithms (EAs) offer a powerful, biologically-inspired approach to address these limitations. This application note delineates protocols for integrating EAs with DL and PLMs to enhance the accuracy, robustness, and biological interpretability of protein function predictions, providing a practical framework for researchers and drug development professionals.

Quantitative Performance Comparison of Integrated Approaches

The integration of evolutionary algorithms with deep learning models has demonstrated measurable improvements in key performance metrics for computational biology tasks, from image classification to hyperparameter optimization.

Table 1: Performance Metrics of EA-Hybrid Models in Biological Applications

| Model/Algorithm | Application Domain | Key Performance Metrics | Comparative Improvement |
|---|---|---|---|
| HGAO-Optimized DenseNet-121 [12] | Multi-domain Image Classification | Accuracy: Up to +0.5% on test set; Loss: Reduced by 54 points | Outperformed HLOA, ESOA, PSO, and WOA |
| GOBeacon [7] | Protein Function Prediction (Fmax) | BP: 0.561, MF: 0.583, CC: 0.651 | Surpassed DeepGOPlus, Domain-PFP, and DeepFRI on CAFA3 |
| PerturbSynX [13] | Drug Combination Synergy Prediction | RMSE: 5.483, PCC: 0.880, R²: 0.757 | Outperformed baseline models across multiple regression metrics |

Integrated Methodological Protocols

Protocol 1: Multi-Objective EA for Protein Complex Detection in PPI Networks

This protocol details the use of a multi-objective evolutionary algorithm for identifying protein complexes within protein-protein interaction (PPI) networks, integrating Gene Ontology (GO) to enhance biological relevance [1].

Step 1: Problem Formulation as Multi-Objective Optimization

- Input: A PPI network represented as a graph G(V, E), where V is the set of proteins and E is the set of interactions.

- Objective Functions: Formulate the detection of protein complexes C as a multi-objective problem aiming to simultaneously maximize:

- Topological Density (D): $D(C) = \frac{2\,|E_C|}{|C|\,(|C| - 1)}$, where $E_C$ is the set of interactions within complex C.

- Biological Coherence (B): $B(C) = \mathrm{Avg}\big(\mathrm{FunctionalSimilarity}_{GO}(v_i, v_j)\big)$ over all pairs of proteins $v_i, v_j$ in C, calculated using GO semantic similarity measures.

Step 2: Algorithm Initialization and GO-Informed Mutation

- Population Initialization: Generate an initial population of candidate solutions (potential protein complexes) using a seed-and-grow method from highly connected nodes.

- Functional Similarity-Based Protein Translocation Operator (FS-PTO):

- For a selected candidate complex C, identify the protein $v_{min}$ with the lowest average functional similarity to other members of C.

- From the network neighbors of C, identify a protein $v_{external}$ that has high GO-based functional similarity to the members of C.

- With a defined probability, translocate $v_{min}$ out of C and incorporate $v_{external}$ into C.
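The three FS-PTO steps above can be sketched as follows. This is an illustrative reading of the description, not the reference implementation from [1]: the Jaccard similarity, the annotation labels, the choice of the single most similar neighbor, and all names are assumptions.

```python
import random

def mean_similarity(protein, others, sim):
    """Average similarity of `protein` to every other protein in `others`."""
    others = [o for o in others if o != protein]
    return sum(sim(protein, o) for o in others) / len(others) if others else 0.0

def fs_pto(complex_members, graph, sim, probability=1.0, rng=random):
    """Swap the least coherent member for the most similar neighboring protein."""
    members = set(complex_members)
    if len(members) < 2 or rng.random() >= probability:
        return members
    # Step 1: member with the lowest average similarity to the rest of the complex.
    v_min = min(sorted(members), key=lambda p: mean_similarity(p, members, sim))
    # Step 2: network neighbors of the complex that are not already members.
    neighbours = {q for p in members for q in graph.get(p, ())} - members
    if not neighbours:
        return members
    remaining = members - {v_min}
    v_external = max(sorted(neighbours),
                     key=lambda q: mean_similarity(q, remaining, sim))
    # Step 3: translocate v_min out and v_external in.
    return remaining | {v_external}

# Toy annotations and network (hypothetical labels).
ANNOTATIONS = {"A": {"translation"}, "B": {"translation"}, "C": {"translation"},
               "D": {"translation"}, "X": {"transport"}, "Y": {"transport"}}

def jaccard(a, b):
    return len(ANNOTATIONS[a] & ANNOTATIONS[b]) / len(ANNOTATIONS[a] | ANNOTATIONS[b])

toy_graph = {"A": {"B"}, "B": {"A", "C"}, "C": {"B", "X", "D"},
             "X": {"C", "Y"}, "D": {"C"}, "Y": {"X"}}
mutated = fs_pto({"A", "B", "C", "X"}, toy_graph, jaccard)
```

In the toy case the functionally unrelated protein X is moved out and the neighbor D, which shares the complex's annotation, is brought in.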

Step 3: Evolutionary Optimization and Complex Selection

- Fitness Evaluation: Calculate the non-dominated Pareto front for the two objective functions (Density and Biological Coherence) across the population.

- Selection and Variation: Apply tournament selection based on Pareto dominance. Use standard crossover and the custom FS-PTO mutation operator to create offspring populations.

- Termination and Output: Iterate for a predefined number of generations (e.g., 1000) or until convergence. Output the final set of non-dominated candidate complexes from the Pareto front.

Protocol 2: EA-Driven Hyperparameter Optimization for Deep Learning Models

This protocol describes using a hybrid evolutionary algorithm (HGAO) to optimize hyperparameters of deep learning models like DenseNet-121, improving their performance in biological image classification and other pattern recognition tasks [12].

Step 1: Search Space and Algorithm Configuration

- Hyperparameter Search Space: Define the critical parameters to optimize. For DenseNet-121, this typically includes:

- Learning Rate: Log-uniform distribution between 1e-5 and 1e-2.

- Dropout Rate: Uniform distribution between 0.1 and 0.7.

- HGAO Algorithm Setup: Configure the hybrid algorithm, which combines:

- Quadratic Interpolation-based Horned Lizard Optimization Algorithm (QIHLOA), simulating crypsis and blood-squirting behaviors for exploration.

- Newton Interpolation-based Giant Armadillo Optimization Algorithm (NIGAO), simulating foraging behaviors for exploitation.
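Sampling from the search space defined above can be sketched with the standard library. Only the two parameter ranges come from the protocol; the function name and dictionary keys are assumptions, and the sketch covers initialization only, not the HGAO update rules.

```python
import math
import random

def sample_hyperparameters(rng):
    """Draw one candidate: log-uniform learning rate, uniform dropout rate."""
    # Sampling the exponent uniformly gives a log-uniform learning rate.
    log_lr = rng.uniform(math.log10(1e-5), math.log10(1e-2))
    return {"learning_rate": 10 ** log_lr, "dropout": rng.uniform(0.1, 0.7)}

rng = random.Random(0)
initial_population = [sample_hyperparameters(rng) for _ in range(1000)]
```

Log-uniform sampling spends equal effort on each order of magnitude, so small learning rates near 1e-5 are explored as thoroughly as large ones near 1e-2.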


Step 2: Fitness Evaluation and Evolutionary Cycle

- Fitness Function: The core of the EA is the fitness function. For a given hyperparameter set θ, it is evaluated as follows:

- Train the target DL model (e.g., DenseNet-121) on the training dataset using θ.

- Evaluate the trained model on a held-out validation set.

- The fitness score is the primary metric of interest, e.g., Fitness(θ) = Validation Accuracy.

- Hybrid Optimization: The HGAO algorithm evolves a population of hyperparameter sets over generations. It uses QIHLOA for global search to escape local optima and NIGAO for local refinement around promising solutions.


Step 3: Model Deployment and Validation

- Final Model Training: Once the HGAO algorithm converges, select the hyperparameter set with the highest fitness score. Train the final model on the combined training and validation dataset using these optimized parameters.

- Performance Reporting: Evaluate the final model on a completely unseen test set, reporting standard metrics (e.g., Accuracy, Precision, Recall, F1-score) to confirm the improvement gained from optimization.

Workflow Visualization

Integrated EA-DL Framework for Functional Prediction

GO-Informed Mutation Operator (FS-PTO) Logic

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools and Datasets for EA-DL Integration

| Resource Name | Type | Primary Function in Workflow | Source/Availability |
|---|---|---|---|
| STRING Database [14] [7] | PPI Network Data | Provides protein-protein interaction networks for constructing biological graphs for models like GOBeacon and MultiSyn. | https://string-db.org/ |
| Gene Ontology (GO) [1] [15] | Knowledge Base | Provides standardized functional terms for evaluating biological coherence in EAs and training DL models. | http://geneontology.org/ |
| ESM-2 & ProstT5 [7] | Protein Language Model | Generates sequence-based (ESM-2) and structure-aware (ProstT5) embeddings for protein representations. | GitHub / Hugging Face |
| InterProScan [15] | Domain Detection Tool | Scans protein sequences to identify functional domains, used for guidance in models like DPFunc. | https://www.ebi.ac.uk/interpro/ |
| FS-PTO Operator [1] | Evolutionary Mutation Operator | Enhances complex detection in PPI networks by translocating proteins based on GO functional similarity. | Custom Implementation |
| HGAO Optimizer [12] | Hybrid Evolutionary Algorithm | Optimizes hyperparameters (e.g., learning rate) of DL models like DenseNet-121 for improved performance. | Custom Implementation |


Implementing Evolutionary Algorithms for Robust Function Validation

The advent of ultra-large, make-on-demand chemical libraries, containing billions of readily available compounds, presents a transformative opportunity for in-silico drug discovery [2]. However, this opportunity is coupled with a significant challenge: the computational intractability of exhaustively screening these vast libraries using flexible docking methods that account for essential ligand and receptor flexibility [2] [16]. Evolutionary Algorithms (EAs) offer a powerful solution to this problem by efficiently navigating combinatorial chemical spaces without the need for full enumeration [17] [2]. RosettaEvolutionaryLigand (REvoLd) is an EA implementation within the Rosetta software suite specifically designed for this task [17]. It leverages the full flexible docking capabilities of RosettaLigand to optimize ligands from combinatorial libraries, such as Enamine REAL, achieving remarkable enrichments in hit rates compared to random screening [2]. This protocol details the application of REvoLd for structure-based validation of protein function predictions, enabling researchers to rapidly identify promising small-molecule binders for therapeutic targets or functional probes.

The REvoLd algorithm is an evolutionary process that optimizes a population of ligand individuals over multiple generations. Its core components are visualized in the workflow below.

Diagram 1: The REvoLd evolutionary docking workflow. The process begins with a random population of ligands, which are iteratively improved through cycles of docking, scoring, selection, and genetic operations.

Algorithm Description

REvoLd begins by initializing a population of ligands (default size: 200) randomly sampled from a combinatorial library definition [17] [2]. Each ligand in the population is then independently docked into the specified binding site of the target protein using the RosettaLigand protocol. The docking process incorporates full ligand flexibility and limited receptor flexibility, primarily through side-chain repacking and, optionally, backbone movements [16]. Each protein-ligand complex undergoes multiple independent docking runs (default: 150), and the resulting poses are scored.

The key innovation of REvoLd lies in its fitness function, which is based on Rosetta's full-atom energy function but is normalized for ligand size to favor efficient binders [17]. The primary fitness scores are:

- ligand_interface_delta (lid): The difference in energy between the bound and unbound states.

- lid_root2: The lid score divided by the square root of the number of non-hydrogen atoms in the ligand. This is the default main term used for selection.
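The size normalization can be illustrated with a minimal sketch. The function name and the example scores below are hypothetical and chosen only to show the arithmetic; this is not code from Rosetta.

```python
import math


def lid_root2(ligand_interface_delta: float, n_heavy_atoms: int) -> float:
    """Size-normalized interface score: lid / sqrt(number of non-hydrogen atoms)."""
    if n_heavy_atoms <= 0:
        raise ValueError("ligand must contain at least one heavy atom")
    return ligand_interface_delta / math.sqrt(n_heavy_atoms)


# Hypothetical scores (more negative = better binding).
small = lid_root2(-20.0, 16)  # 16 heavy atoms
large = lid_root2(-24.0, 36)  # 36 heavy atoms
print(small, large)
```

In this made-up example the larger ligand has the better raw lid (-24 vs. -20) but the worse normalized score, which is the behaviour the normalization is meant to produce.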

After scoring, the population undergoes selection pressure. The fittest individuals (default: 50 ligands) are selected to propagate to the next generation using a tournament selection process [17] [2]. This selective pressure drives the population towards better binders over time.

To explore the chemical space, REvoLd applies evolutionary operators to create new offspring:

- Crossover: Combines fragments from two parent ligands to create a novel child ligand.

- Mutation: Switches a single fragment in a ligand with an alternative from the library, or changes the reaction scheme used to link fragments.

This cycle of docking, scoring, selection, and reproduction is repeated for a fixed number of generations (default: 30). The algorithm is designed to be run multiple times (10-20 independent runs recommended) from different random seeds to broadly sample diverse chemical scaffolds [17].
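The overall cycle can be sketched in a few lines of Python. This is a toy illustration, not the REvoLd implementation: the library, the `toy_score` stand-in for docking, the tournament size, and the 50/50 split between mutation and crossover are all assumptions. Only the population size (200), survivor count (50), and generation count (30) come from the defaults quoted above.

```python
import random

# Hypothetical toy library: reaction id -> fragment ids allowed at each position.
LIBRARY = {
    "rxn_a": ([f"a0_{i}" for i in range(30)], [f"a1_{i}" for i in range(30)]),
    "rxn_b": ([f"b0_{i}" for i in range(30)], [f"b1_{i}" for i in range(30)]),
}


def random_ligand(rng):
    rxn = rng.choice(sorted(LIBRARY))
    return (rxn, *(rng.choice(frags) for frags in LIBRARY[rxn]))


def toy_score(ligand):
    """Stand-in for a docking score (lower is better); NOT a real energy."""
    _, f0, f1 = ligand
    return -(int(f0.split("_")[1]) + int(f1.split("_")[1])) / 10.0


def mutate(ligand, rng):
    """Replace one fragment with another that is valid for the same reaction."""
    rxn, *frags = ligand
    pos = rng.randrange(len(frags))
    frags[pos] = rng.choice(LIBRARY[rxn][pos])
    return (rxn, *frags)


def crossover(p1, p2, rng):
    """Combine fragments of two parents; fall back to mutation if reactions differ."""
    if p1[0] != p2[0]:
        return mutate(p1, rng)
    return (p1[0], p1[1], p2[2])


def tournament(pool, scores, k, rng):
    """Return the best-scoring member of k randomly drawn contenders."""
    contenders = rng.sample(pool, min(k, len(pool)))
    return min(contenders, key=scores.__getitem__)


def evolve(pop_size=200, n_survivors=50, generations=30, seed=0):
    rng = random.Random(seed)
    population = [random_ligand(rng) for _ in range(pop_size)]
    scores, best_per_generation = {}, []
    for _ in range(generations):
        for lig in population:  # "docking": score each ligand only once
            scores.setdefault(lig, toy_score(lig))
        best_per_generation.append(min(scores[lig] for lig in population))
        pool = sorted(set(population))
        survivors = [tournament(pool, scores, 4, rng) for _ in range(n_survivors)]
        offspring = []
        while len(survivors) + len(offspring) < pop_size:
            if rng.random() < 0.5:
                offspring.append(mutate(rng.choice(survivors), rng))
            else:
                offspring.append(crossover(*rng.sample(survivors, 2), rng))
        population = survivors + offspring
    return best_per_generation, scores


best_per_generation, scores = evolve()
print(best_per_generation[0], min(scores.values()))
```

Because every offspring is assembled from fragments listed for its reaction, each candidate stays inside the library definition, mirroring the synthesizability guarantee of a combinatorial library.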

Key Research Reagents and Computational Tools

Successful execution of a REvoLd screen requires the assembly of specific input files and computational resources. The following table summarizes the essential components of the "scientist's toolkit" for these experiments.

Table 1: Essential Research Reagents and Computational Tools for REvoLd

| Item | Description | Function in the Protocol |

|---|---|---|

| Target Protein Structure | A prepared protein structure file (PDB format). The structure should be pre-processed (e.g., adding hydrogens, optimizing side-chains) using Rosetta utilities. | Serves as the static receptor for docking simulations. The binding site must be defined. |

| Combinatorial Library Definition | Two white-space separated files: 1. Reactions file: Defines the chemical reactions (via SMARTS strings) used to link fragments. 2. Reagents file: Lists the available chemical building blocks (fragments/synthons) with their SMILES, unique IDs, and compatible reactions. | Defines the vast chemical space from which REvoLd can assemble and sample novel ligands. |

| RosettaScripts XML File | An XML configuration file that defines the flexible docking protocol, including scoring functions and sampling parameters. | Controls the RosettaLigand docking process for each candidate ligand, ensuring consistent and accurate pose generation and scoring. |

| High-Performance Computing (HPC) Cluster | A computing environment with MPI support. Recommended: 50-60 CPUs per run and 200-300 GB of total RAM. | Provides the necessary computational power to execute the thousands of docking calculations required within a feasible timeframe (e.g., 24 hours/run). |

Benchmarking Performance and Experimental Data

REvoLd has been rigorously benchmarked on multiple drug targets, demonstrating its capability to achieve exceptional enrichment of hit-like molecules compared to random selection from ultra-large libraries [2].

Table 2: Quantitative Benchmarking of REvoLd on Diverse Drug Targets

| Drug Target | Library Size Searched | Total Unique Ligands Docked by REvoLd | Hit Rate Enrichment Factor (vs. Random) |

|---|---|---|---|

| Target 1 | >20 billion | ~49,000 - 76,000 | 869x |

| Target 2 | >20 billion | ~49,000 - 76,000 | 1,622x |

| Target 3 | >20 billion | ~49,000 - 76,000 | 1,201x |

| Target 4 | >20 billion | ~49,000 - 76,000 | 1,015x |

| Target 5 | >20 billion | ~49,000 - 76,000 | 1,450x |

Note: The number of docked ligands varies per target due to the stochastic nature of the algorithm. The enrichment factors highlight that REvoLd identifies potent binders by docking only a tiny fraction (e.g., 0.0003%) of the total library [2].
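The fraction quoted in the note follows directly from the table. The short sketch below reproduces that arithmetic and shows one common way to express an enrichment factor, as a ratio of hit rates. The hit counts in the second example are hypothetical and are not taken from the benchmark [2]; the exact definition used there is assumed, not confirmed.

```python
import math


def fraction_docked_percent(n_docked: float, library_size: float) -> float:
    """Share of the library that was actually docked, in percent."""
    return 100.0 * n_docked / library_size


def enrichment_factor(hits_ea, n_docked_ea, hits_random, n_docked_random):
    """Ratio of hit rates: (EA hits / EA docked) / (random hits / random docked)."""
    return (hits_ea / n_docked_ea) / (hits_random / n_docked_random)


# Range from Table 2: 49,000-76,000 ligands docked out of >20 billion.
low = fraction_docked_percent(49_000, 20e9)
high = fraction_docked_percent(76_000, 20e9)
print(f"{low:.5f}% to {high:.5f}%")

# Hypothetical counts, for illustration only.
example_ef = enrichment_factor(500, 50_000, 1, 100_000)
print(example_ef)
```

The computed range brackets the roughly 0.0003% figure cited in the note.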

Fitness Score Convergence and Pose Accuracy

The convergence of a REvoLd run can be monitored by tracking the best fitness score (default: lid_root2) in each generation. Successful runs typically show a rapid improvement in scores within the first 15 generations, followed by a plateau as the population refines the best candidates [2]. Furthermore, the top-scoring poses output by REvoLd have been validated for accuracy. In cross-docking benchmarks, the enhanced RosettaLigand protocol consistently places the top-scoring ligand pose within 2.0 Å RMSD of the native crystal structure for a majority of cases, demonstrating its reliability in predicting correct binding modes [16].
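One simple way to monitor convergence is to look for the first generation after which the running best score stops improving. The sketch below is a hypothetical helper, not part of REvoLd; the window length, tolerance, and example trace are assumptions.

```python
def plateau_generation(best_scores, window=5, tol=0.05):
    """Return the first generation g whose running best score improves by less
    than `tol` over the following `window` generations, or None if no plateau."""
    running_best, best = [], float("inf")
    for score in best_scores:  # lower scores are better
        best = min(best, score)
        running_best.append(best)
    for g in range(len(running_best) - window):
        if running_best[g] - running_best[g + window] < tol:
            return g
    return None


# Hypothetical best-per-generation trace.
trace = [-3.0, -3.5, -4.0, -4.4, -4.6, -4.7, -4.7, -4.7, -4.7, -4.7, -4.7]
print(plateau_generation(trace, window=3))
```

A run that never plateaus within its generation budget may benefit from more generations or additional independent runs.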

Detailed Experimental Protocol

Input Preparation

Protein Structure Preparation:

- Obtain a high-resolution structure of your target protein (e.g., from PDB or via homology modeling with AlphaFold2).

- Prepare the structure using Rosetta's fixbb application or a similar tool to repack side chains with the same scoring function planned for docking. This ensures the unbound state is optimized, so that score differences reflect changes in binding affinity.

- Remove any native ligands and crystallographic water molecules unless they are deemed critical.

Combinatorial Library Acquisition:

- The Enamine REAL space is the primary library used with REvoLd. Licensing for academic use can be obtained by contacting BioSolveIT or Enamine directly [17].

- The library is provided as two files, reactions.txt and reagents.txt, which define the combinatorial chemistry rules.

RosettaScript Configuration:

- A default XML script for docking is provided in the REvoLd documentation. Key parameters to customize include:

  - box_size in the Transform mover: Defines the search space for initial ligand placement.

  - width in the ScoringGrid mover: Sets the size of the scoring grid around the binding site.

Execution Command

A typical REvoLd run is executed using MPI for parallelization. The following command example outlines the required and key optional parameters.

Diagram 2: Structure of a REvoLd execution command. The command is built from a series of required and optional command-line flags that control input, parameters, and output.

Critical Note: Always launch independent REvoLd runs from separate working directories to prevent result files from being overwritten [17].

Output Analysis

Upon completion, REvoLd generates several key output files in the run directory:

- ligands.tsv: The primary result file. It contains the scores and identifiers for every ligand docked during the optimization, sorted by the main fitness score. The numerical ID in this file corresponds to the PDB file name for the best pose of that ligand.

- *.pdb files: The best-scoring protein-ligand complex for thousands of the top ligands.

- population.tsv: A file for developer-level analysis of population dynamics, which can generally be ignored for standard applications.

REvoLd represents a significant advancement in structure-based virtual screening, directly addressing the scale of modern make-on-demand chemical libraries. By integrating an evolutionary algorithm with the rigorous, flexible docking framework of RosettaLigand, it enables the efficient discovery of high-affinity, synthetically accessible small molecules. The protocol outlined herein provides researchers with a detailed roadmap for deploying REvoLd to validate protein function predictions and accelerate early-stage drug discovery, turning the challenge of ultra-large library screening into a tractable and powerful opportunity.

The rational design of therapeutic molecules, whether proteins or small molecules, inherently involves balancing multiple, often competing, biological and chemical properties. A candidate with exceptional binding affinity may prove useless due to high toxicity or poor synthesizability. Evolutionary algorithms (EAs) have emerged as powerful tools for navigating this complex multi-objective optimization landscape, capable of efficiently exploring vast molecular search spaces to identify Pareto-optimal solutions—those where no single objective can be improved without sacrificing another [18] [19]. Framing this challenge within a rigorous multi-objective optimization (MOO) or many-objective optimization (MaOO) context is crucial for accelerating the discovery of viable drug candidates. This Application Note details the integration of multi-objective fitness functions within evolutionary algorithms, providing validated protocols for simultaneously optimizing binding affinity, synthesizability, and toxicity, directly supporting the broader thesis of validating protein function predictions with evolutionary algorithm research.

Computational Frameworks for Multi-Objective Molecular Optimization

Several advanced computational frameworks have been developed to address the challenges of constrained multi-objective optimization in molecular science. These frameworks typically combine latent space representation learning with sophisticated evolutionary search strategies.

Table 1: Key Multi-Objective Optimization Frameworks in Drug Discovery

| Framework Name | Core Methodology | Handled Objectives (Examples) | Constraint Handling |

|---|---|---|---|

| PepZOO [20] | Multi-objective zeroth-order optimization in a continuous latent space (VAE). | Antimicrobial function, activity, toxicity, binding affinity. | Implicitly handled via multi-objective formulation. |

| CMOMO [21] | Deep multi-objective EA with a two-stage dynamic constraint handling strategy. | Bioactivity, drug-likeness, synthetic accessibility. | Explicitly handles strict drug-like criteria as constraints. |

| MosPro [22] | Discrete sampling with Pareto-optimal gradient composition. | Binding affinity, stability, naturalness. | Pareto-optimality for balancing conflicting objectives. |

| MoGA-TA [18] | Improved genetic algorithm using Tanimoto crowding distance. | Target similarity, QED, logP, TPSA, rotatable bonds. | Maintains diversity to prevent premature convergence. |

| Transformer + MaOO [19] | Integrates latent Transformer models with many-objective metaheuristics. | Binding affinity, QED, logP, SAS, multiple ADMET properties. | Pareto-based approach for >3 objectives. |

The CMOMO framework is particularly notable for its explicit and dynamic handling of constraints, which is a critical advancement for practical drug discovery. It treats stringent drug-like criteria (e.g., forbidden substructures, ring size limits) as constraints rather than optimization objectives [21]. Its two-stage optimization process first identifies molecules with superior properties in an unconstrained scenario before refining the search to ensure strict adherence to all constraints, effectively balancing performance and practicality [21].

For problems involving more than three objectives, the shift to a many-objective optimization perspective is crucial. A framework integrating Transformer-based molecular generators with many-objective metaheuristics has demonstrated success in simultaneously optimizing up to eight objectives, including binding affinity and a suite of ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) properties [19]. Among many-objective algorithms, the Multi-objective Evolutionary Algorithm based on Dominance and Decomposition (MOEA/D) has been shown to be particularly effective in this domain [19].
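Pareto dominance, the notion underlying all of these frameworks, is compact enough to sketch directly. The candidate names and objective values below are hypothetical; all three objectives (docking score, predicted toxicity, synthetic accessibility score) are assumed to be minimized.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly better in
    at least one (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(candidates):
    """Return the names of candidates that no other candidate dominates."""
    return [
        name
        for name, objs in candidates.items()
        if not any(dominates(other, objs)
                   for other_name, other in candidates.items()
                   if other_name != name)
    ]


# Hypothetical candidates: (docking score, toxicity, SA score), lower is better.
candidates = {
    "A": (-9.0, 0.8, 3.0),
    "B": (-7.5, 0.2, 2.5),
    "C": (-7.0, 0.3, 2.8),  # worse than B on all three objectives
    "D": (-8.0, 0.5, 4.5),
}
front = pareto_front(candidates)
print(front)
```

Candidate C drops out because B beats it on every objective, while A, B, and D each represent a different trade-off. With many objectives, most candidates become mutually non-dominated, which is one reason dedicated many-objective methods are used.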

Experimental Protocols

Protocol 1: Implementing a Multi-Objective EA for Protein Optimization (PepZOO)

This protocol describes the directed evolution of a protein sequence using a latent space and zeroth-order optimization, adapted from the PepZOO methodology [20].

Research Reagent Solutions

- Encoder-Decoder Model (Variational Autoencoder): A pre-trained model to project discrete amino acid sequences into a continuous latent space and reconstruct sequences from latent vectors [20].

- Property Predictors: Independently trained supervised models for each property of interest (e.g., toxicity predictor, stability predictor). These do not need to be differentiable [20].

- Initial Population (Prototype AMPs): A set of known protein sequences (e.g., natural antimicrobial peptides) to serve as starting points for evolution [20].

Procedure

1. Sequence Encoding: Encode each prototype amino acid sequence in the initial population into a low-dimensional, continuous latent vector, z, using the encoder module [20].

2. Property Evaluation: Decode the latent vector back to a sequence and use the property predictors to evaluate the multiple objectives (e.g., F_toxicity, F_affinity, F_synthesizability).

3. Gradient Estimation via Zeroth-Order Optimization:

   - For the current latent vector z, generate a population of M random directional vectors {u_m}.

   - Create perturbed latent vectors z' = z + σ * u_m, where σ is a small step size.

   - Decode and evaluate the properties for each perturbed vector.

   - Estimate the gradient for each objective i as: ĝ_i = (1/(Mσ)) * Σ_{m=1}^{M} [F_i(z + σu_m) - F_i(z)] * u_m.

4. Determine Evolutionary Direction: Compose the individual gradients {ĝ_i} into a single update direction, Δz, that improves all objectives. This can be achieved by a weighted sum or a Pareto-optimal composition scheme [20] [22].

5. Iterative Update: Update the latent representation: z_{new} = z + η * Δz, where η is the learning rate. Decode z_{new} to obtain the new candidate sequence.

6. Termination Check: Repeat steps 2-5 until the generated sequences meet all target property thresholds or a maximum number of iterations is reached.
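The zeroth-order gradient estimate and latent update described in the procedure above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the PepZOO code: the two quadratic objectives stand in for "decode, then run a property predictor", both are minimized, perturbation directions are Gaussian, and the update direction is a negated weighted sum of the estimated gradients.

```python
import random


def zo_gradient(f, z, m=64, sigma=0.05, rng=random):
    """Estimate the gradient of a black-box f at z from m random perturbations:
    g = (1 / (m * sigma)) * sum_m [f(z + sigma * u_m) - f(z)] * u_m."""
    base = f(z)
    grad = [0.0] * len(z)
    for _ in range(m):
        u = [rng.gauss(0.0, 1.0) for _ in z]
        delta = f([zi + sigma * ui for zi, ui in zip(z, u)]) - base
        for j, uj in enumerate(u):
            grad[j] += delta * uj / (m * sigma)
    return grad


def step(z, objectives, weights, eta=0.1, **kwargs):
    """One update z_new = z + eta * dz, with dz the negated weighted sum of the
    estimated gradients (assumption: every objective is minimized)."""
    grads = [zo_gradient(f, z, **kwargs) for f in objectives]
    dz = [-sum(w * g[j] for w, g in zip(weights, grads)) for j in range(len(z))]
    return [zj + eta * dj for zj, dj in zip(z, dz)]


# Hypothetical, conflicting stand-in objectives defined directly on the latent vector.
def f_toxicity(z):
    return sum((zi - 1.0) ** 2 for zi in z)


def f_affinity(z):
    return sum((zi + 0.5) ** 2 for zi in z)


def combined(z):
    return 0.5 * f_toxicity(z) + 0.5 * f_affinity(z)


rng = random.Random(0)
z0 = [2.0, -2.0, 0.5]
z = z0
for _ in range(100):
    z = step(z, [f_toxicity, f_affinity], [0.5, 0.5], eta=0.1, rng=rng)
print(combined(z0), combined(z))
```

Because only function evaluations are used, the property predictors do not need to be differentiable, which matches the requirement stated for the predictors above.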

Figure 1: Workflow for multi-objective protein optimization using latent space and zeroth-order gradients, as implemented in PepZOO [20].

Protocol 2: Constrained Multi-Objective Optimization for Small Molecules (CMOMO)

This protocol is designed for optimizing small drug-like molecules under strict chemical constraints, based on the CMOMO framework [21].

Research Reagent Solutions

- Lead Molecule: The initial molecule to be optimized.

- Chemical Database (e.g., ChEMBL): A source of known bioactive molecules to build a "Bank library" for initialization.

- Pre-trained Chemical Encoder-Decoder: A model (e.g., based on SMILES or SELFIES) to map molecules to and from a continuous latent space.

- Property Predictors: Models for QED, synthesizability (SA), logP, etc.

- Constraint Validator: A function (e.g., using RDKit) to check molecular validity and drug-like constraints (e.g., ring size, forbidden substructures).

Procedure

1. Population Initialization:

   - Encode the lead molecule and top-K similar molecules from the Bank library into latent vectors.

   - Generate an initial population of N latent vectors by performing linear crossover between the lead molecule's vector and those from the library [21].

2. Unconstrained Optimization Stage:

   - Reproduction: Use a latent Vector Fragmentation-based Evolutionary Reproduction (VFER) strategy to generate offspring latent vectors, promoting diversity [21].

   - Evaluation: Decode all parent and offspring vectors into molecules. Filter invalid molecules using RDKit. Evaluate the multiple objective properties (e.g., bioactivity, QED) for each valid molecule.

   - Selection: Apply a multi-objective selection algorithm (e.g., non-dominated sorting) to select the best N molecules based solely on their property scores, ignoring constraints for now.

3. Constrained Optimization Stage:

   - Feasibility Evaluation: Calculate the Constraint Violation (CV) for each molecule in the population using a function that aggregates violations of all predefined constraints [21].

   - Constrained Selection: Switch to a selection strategy that prioritizes feasibility. Molecules with CV=0 (feasible) are preferred. Among feasible molecules, selection is based on non-dominated sorting of the property objectives.

4. Termination: Repeat steps 2 and 3 until a population of molecules is found that is both feasible (CV=0) and Pareto-optimal with respect to the multiple property objectives.
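The difference between the two selection stages can be sketched as follows. This is a simplified illustration, not the CMOMO implementation: molecules are plain dictionaries with precomputed, hypothetical objective values (negated bioactivity and negated QED, so that lower is better) and a precomputed constraint violation `cv`.

```python
def dominates(a, b):
    """Pareto dominance for minimized objectives."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated_ranks(objs):
    """Rank 0 is the Pareto front; rank k is the front once lower ranks are removed."""
    remaining, ranks, rank = set(range(len(objs))), {}, 0
    while remaining:
        front = {i for i in remaining
                 if not any(dominates(objs[j], objs[i]) for j in remaining if j != i)}
        for i in front:
            ranks[i] = rank
        remaining -= front
        rank += 1
    return [ranks[i] for i in range(len(objs))]


def select(population, n, stage):
    """Stage 'unconstrained': sort by Pareto rank only.
    Stage 'constrained': sort by constraint violation first, then Pareto rank."""
    ranks = nondominated_ranks([m["objectives"] for m in population])
    if stage == "unconstrained":
        key = lambda i: ranks[i]
    else:
        key = lambda i: (population[i]["cv"], ranks[i])
    order = sorted(range(len(population)), key=key)
    return [population[i]["name"] for i in order[:n]]


# Hypothetical population: objectives = (-bioactivity, -QED).
population = [
    {"name": "m1", "objectives": (-0.9, -0.4), "cv": 2.0},  # best activity, infeasible
    {"name": "m2", "objectives": (-0.7, -0.6), "cv": 0.0},
    {"name": "m3", "objectives": (-0.6, -0.5), "cv": 0.0},  # dominated by m2
    {"name": "m4", "objectives": (-0.5, -0.8), "cv": 0.0},
]
stage_one = select(population, 2, "unconstrained")
stage_two = select(population, 2, "constrained")
print(stage_one, stage_two)
```

In the unconstrained stage the infeasible but highly active `m1` survives and can still contribute useful latent-space material; in the constrained stage it is displaced by feasible, non-dominated molecules.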

Table 2: Example Quantitative Results from Multi-Objective Optimization Studies

| Study / Framework | Optimization Task | Key Results | Success Rate & Metrics |

|---|---|---|---|

| PepZOO [20] | Optimize antimicrobial function & activity. | Outperformed state-of-the-art methods (CVAE, HydrAMP). | Improved multi-properties (function, activity, toxicity). |

| CMOMO [21] | Inhibitor optimization for Glycogen Synthase Kinase-3 (GSK3). | Identified molecules with favorable bioactivity, drug-likeness, and synthetic accessibility. | Two-fold improvement in success rate compared to baselines. |

| DeepDE [23] | GFP activity enhancement. | 74.3-fold increase in activity over 4 rounds of evolution. | Surpassed benchmark superfolder GFP. |

| MoGA-TA [18] | Six multi-objective benchmark tasks (e.g., Fexofenadine, Osimertinib). | Better performance in success rate and hypervolume vs. NSGA-II and GB-EPI. | Reliably generated molecules meeting all target conditions. |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools and Reagents for Multi-Objective Evolutionary Experiments

| Item | Function / Explanation | Example Use Case |

|---|---|---|

| Variational Autoencoder (VAE) | Projects discrete molecular sequences into a continuous latent space, enabling smooth optimization [20] [21]. | Creating a continuous search space for gradient-based evolutionary operators in PepZOO and CMOMO. |

| Transformer-based Autoencoder | Advanced sequence model for molecular generation; provides a structured latent space for optimization [19]. | Used in ReLSO model for generating novel molecules optimized for multiple properties. |

| RDKit Software Package | Open-source cheminformatics toolkit; used for fingerprint generation, similarity calculation, and molecular validity checks [18]. | Calculating Tanimoto similarity and physicochemical properties (logP, TPSA) in MoGA-TA. |

| Property Prediction Models | Supervised ML models that act as surrogates for expensive experimental assays during in silico optimization. | Predicting toxicity, binding affinity (docking), and ADMET properties to guide evolution [20] [19]. |

| Gene Ontology (GO) Annotations | Provides biological functional insights; can be integrated into mutation operators or fitness functions. | Used in FS-PTO mutation operator to improve detection of biologically relevant protein complexes [1]. |

| Non-dominated Sorting (NSGA-II) | A core selection algorithm in MOEAs that ranks solutions by Pareto dominance and maintains population diversity [18]. | Selecting the best candidate molecules for the next generation in MoGA-TA and other frameworks. |

Figure 2: Logical relationship between core components in a deep learning-guided multi-objective evolutionary algorithm.

The ability to predict protein function has opened new frontiers in identifying therapeutic targets. Validating these predictions, however, requires discovering ligands that modulate these functions. Ultra-large chemical libraries, containing billions of "make-on-demand" compounds, represent a golden opportunity for this task, but their vast size makes exhaustive computational screening prohibitively expensive. This application note details how the evolutionary algorithm REvoLd (RosettaEvolutionaryLigand) enables efficient hit identification within these massive chemical spaces, providing a critical tool for experimentally validating protein function predictions [2] [24].

REvoLd addresses the fundamental challenge of ultra-large library screening (ULLS): the computational intractability of flexibly docking billions of compounds. By exploiting the combinatorial nature of make-on-demand libraries, it navigates the search space intelligently rather than exhaustively, identifying promising hit molecules with several orders of magnitude fewer docking calculations than traditional virtual high-throughput screening (vHTS) [2] [25]. This case study outlines REvoLd's principles and presents a proven experimental protocol for its application, demonstrated through a successful real-world benchmark against the Parkinson's disease-associated target LRRK2.

REvoLd Algorithm and Key Concepts

Core Evolutionary Principles

REvoLd operates on Darwinian principles of evolution, applied to a population of candidate molecules. The algorithm requires a defined binding site and a protein structure, which can be experimentally determined or computationally predicted [17].

The optimization process mimics natural selection:

- Fitness Function: The docking score (typically ligand_interface_delta or its normalized form lid_root2) calculated by RosettaLigand, which models full ligand flexibility and limited receptor flexibility [2] [17].

- Selective Pressure: Lower-scoring (better-binding) individuals are preferentially selected for "reproduction" to create subsequent generations.

- Genetic Operators: Mutation and crossover operations generate new molecular variants, exploring the chemical space around promising candidates [24] [26].

Exploiting Combinatorial Chemistry

A key innovation of REvoLd is its direct operation on the building-block definition of make-on-demand libraries, such as the Enamine REAL space. Instead of docking pre-enumerated molecules, REvoLd represents each molecule as a reaction rule and a set of constituent fragments (synthons) [24]. This allows the algorithm to efficiently traverse a chemical space of billions of molecules defined by merely thousands of reactions and fragments. All reproduction operations—mutations and crossovers—are designed to swap these fragments according to library definitions, ensuring that every proposed molecule is synthetically accessible [2] [24].

Experimental Protocol and Workflow

The following workflow diagram illustrates the complete REvoLd screening process, from target preparation to hit selection.

Stage 1: System Preparation

Target Structure Preparation

Objective: Obtain a refined protein structure with a defined binding site.

- Input: A protein structure file (PDB format). This can be an experimental crystal structure or an AlphaFold2 prediction.

- Refinement (Recommended): Run a short molecular dynamics (MD) simulation (e.g., 1.5 µs replicates) to sample near-native conformational states. Cluster the resulting trajectories (e.g., using DBSCAN) to select 5-11 representative receptor conformations for docking. This accounts for side-chain and backbone flexibility, improving the robustness of hit identification [25].

- Binding Site Definition: Identify the binding site centroid coordinates (X, Y, Z). This can be done via blind docking on a single structure or based on known functional sites [25].

Combinatorial Library Configuration

Objective: Provide REvoLd with the definitions of the make-on-demand chemical space.

- Source: Obtain the library definition files (reactions and reagents) from a vendor like Enamine Ltd. (licensed via BioSolveIT) or create custom ones [17].

- Reactions File: A white-space-separated file containing reaction_id, components (number of fragments), and Reaction (SMARTS string defining the coupling rule).

- Reagents File: A white-space-separated file containing SMILES, synton_id (unique identifier), synton# (fragment position), and reaction_id (linking to the reactions file) [17].
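To see how a small definition spans a large chemical space, the sketch below parses two tiny in-memory tables using the column names listed above and counts the molecules they encode. The file contents are made up for illustration (the SMARTS, SMILES, identifiers, and the 1-based fragment positions are assumptions); real vendor files should be checked against the REvoLd documentation.

```python
import math
from collections import defaultdict

# Hypothetical miniature library definition (white-space separated, with header).
REACTIONS_TXT = """
reaction_id components Reaction
1 2 [C:1](=O)[OH].[N:2]>>[C:1](=O)[N:2]
2 2 [C:1](=O)[OH].[N:2]>>[C:1](=O)[N:2]
"""

REAGENTS_TXT = """
SMILES synton_id synton# reaction_id
CC(=O)O s1 1 1
OC(=O)c1ccccc1 s2 1 1
NC s3 2 1
NCC s4 2 1
NC1CC1 s5 2 1
OC(=O)CC s6 1 2
NCCO s7 2 2
NCCC s8 2 2
"""


def read_table(text):
    """Parse a white-space separated table with a header row into dictionaries."""
    lines = [line.split() for line in text.strip().splitlines()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


def library_size(reactions, reagents):
    """Sum over reactions of the product of fragment counts per position."""
    counts = defaultdict(lambda: defaultdict(int))
    for reagent in reagents:
        counts[reagent["reaction_id"]][reagent["synton#"]] += 1
    total = 0
    for reaction in reactions:
        per_position = counts[reaction["reaction_id"]]
        if len(per_position) == int(reaction["components"]):
            total += math.prod(per_position.values())
    return total


reactions = read_table(REACTIONS_TXT)
reagents = read_table(REAGENTS_TXT)
size = library_size(reactions, reagents)
print(size)
```

The same multiplication explains the scale of real libraries: a single three-component reaction with 1,000 fragments per position would already define 10^9 products from only 3,000 building blocks.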

REvoLd Configuration

Objective: Set up the Rosetta environment and parameters.

- Compilation: Compile REvoLd from the Rosetta source code with MPI support [17].

- RosettaScript: Prepare an XML configuration file for the RosettaLigand flexible docking protocol. Key parameters to adjust include box_size (Transform tag) and width (ScoringGrid tag) to define the docking search space around the binding site centroid [17].

- Command Line: A typical execution command is structured as follows [17]:

```bash
mpirun -np 20 bin/revold.mpi.linuxgccrelease \
  -in:file:s target_protein.pdb \
  -parser:protocol docking_script.xml \
  -ligand_evolution:xyz -46.972 -19.708 70.869 \
  -ligand_evolution:main_scfx hard_rep \
  -ligand_evolution:reagent_file reagents.txt \
  -ligand_evolution:reaction_file reactions.txt
```

Stage 2: Evolutionary Optimization

The core algorithm is detailed in the workflow below, showing the iterative cycle of docking, selection, and reproduction.

Initialization

- Generation 0: REvoLd generates an initial population of 200 molecules by randomly selecting compatible reactions and fragments from the library [2] [17].

Fitness Evaluation

- Each molecule in the population is docked against the target protein using the RosettaLigand protocol, which models full ligand flexibility and limited receptor flexibility. By default, 150 independent docking runs are performed per molecule to sample different conformational poses [17].

- The resulting protein-ligand complexes are scored. The most common fitness metric is lid_root2 (ligand interface delta divided by the square root of the heavy atom count), which balances binding energy with ligand size efficiency [17]. The best score across the docking runs is assigned as the molecule's fitness.

Selection and Reproduction

- The population is reduced to a core set of 50 individuals using a selection operator. The default TournamentSelector promotes high-fitness individuals while maintaining some diversity to escape local minima [2] [24].

- Mutation: A MutatorFactory replaces a single fragment in a parent molecule with a different, randomly selected fragment from the library [24] [26].

- Crossover: A CrossoverFactory recombines fragments from two parent molecules to create novel offspring [24] [26].

- The new generation is formed by the selected individuals and their offspring. This cycle repeats for a default of 30 generations, after which the optimization is stopped to balance convergence and exploration [2].

Stage 3: Hit Analysis and Validation

Objective: Identify and prioritize top-ranking molecules for experimental testing.

- Output: The primary result file is ligands.tsv, which lists all docked molecules sorted by the main score term. For each high-ranking molecule, a PDB file of the best-scoring protein-ligand complex is generated [17].

- Diversity Selection: It is recommended to run REvoLd multiple times (10-20 independent runs) with different random seeds. Each run can discover distinct chemical scaffolds due to the stochastic nature of the algorithm. Manually cluster the top 1,000-2,000 unique hits from all runs by chemical similarity and select diverse representatives for purchase and testing [2] [25].

- Experimental Validation: Order the selected compounds from the library vendor (e.g., Enamine) and validate binding using biophysical techniques such as Surface Plasmon Resonance (SPR) or Isothermal Titration Calorimetry (ITC) to measure dissociation constants (K_D) [25].
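The diversity selection step is described as manual, but a first pass can be automated. The sketch below is one possible approach, not part of REvoLd: a greedy pick that walks the hits from best to worst score and keeps a hit only if its Tanimoto similarity to every already-picked hit is below a threshold. The feature sets stand in for real fingerprints (for example, on-bit indices computed with a cheminformatics toolkit), and all identifiers, scores, and the threshold are hypothetical.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two feature sets."""
    a, b = set(a), set(b)
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def pick_diverse(hits, threshold=0.6, max_picks=10):
    """hits: iterable of (ligand_id, score, feature_set); lower score is better.
    Keep a hit only if it is dissimilar to everything picked so far."""
    picked = []
    for hit in sorted(hits, key=lambda h: h[1]):
        if all(tanimoto(hit[2], other[2]) < threshold for other in picked):
            picked.append(hit)
        if len(picked) == max_picks:
            break
    return picked


# Hypothetical pooled hits from several independent runs.
hits = [
    ("lig_1", -5.2, {1, 2, 3, 4}),
    ("lig_2", -5.1, {1, 2, 3, 5}),   # similarity 0.6 to lig_1, so skipped
    ("lig_3", -4.9, {7, 8, 9}),
    ("lig_4", -4.8, {7, 8, 9, 10}),  # similarity 0.75 to lig_3, so skipped
]
selected_ids = [ligand_id for ligand_id, _, _ in pick_diverse(hits)]
print(selected_ids)
```

A shortlist produced this way is still worth inspecting by eye before ordering compounds, as the protocol recommends.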

Case Study: Identifying Binders for LRRK2 in the CACHE Challenge

The following table summarizes the quantitative outcomes of applying the REvoLd protocol to a real-world target.

Table 1: Performance Results of REvoLd in Benchmark Studies

| Study / Metric | Target | Library Size | Molecules Docked | Hit Rate Enrichment | Experimental Validation |

|---|---|---|---|---|---|

| General Benchmark [2] | 5 diverse drug targets | >20 billion | 49,000 - 76,000 per target | 869x to 1,622x vs. random | N/A |

| CACHE Challenge #1 (LRRK2 WDR40) [25] | LRRK2 (Parkinson's disease) | ~30 billion | Not specified | Identified novel binders | 3 molecules with K_D < 150 µM |

Application and Outcome

The CACHE challenge #1 was a blind benchmark for finding binders to the WDR40 domain of LRRK2, a protein implicated in Parkinson's disease. The REvoLd protocol was applied as follows [25]:

- Preparation: The crystal structure (PDB: 7LHT) was refined using MD simulations to generate an ensemble of 11 receptor conformations. The binding site was defined near the kinase domain.

- Screening: REvoLd was used to screen the Enamine REAL space (over 30 billion compounds). The top-scoring molecules were manually inspected and selected for ordering.

- Hit Expansion: An initial hit compound was used to seed a second round of REvoLd optimization, exploring analogous regions of the chemical space to find improved derivatives.

The campaign successfully identified a total of five promising molecules. Subsequent experimental validation confirmed that three of these molecules bound to the LRRK2 WDR40 domain with measurable dissociation constants better than 150 µM, representing the first prospective validation of REvoLd [25].

The Scientist's Toolkit: Essential Research Reagents

Table 2: Key Research Reagents and Resources for REvoLd Screening

| Item / Resource | Function / Purpose | Example Source / Details |

|---|---|---|

| Protein Structure | The target for docking; can be experimental or predicted. | PDB Database, AlphaFold2 Prediction |

| Combinatorial Library Definition | Defines the chemical space of make-on-demand molecules for REvoLd to explore. | Enamine REAL Space, Otava CHEMriya |

| Reactions File | Specifies the chemical rules (SMARTS) for combining fragments. | Provided by library vendor; contains reaction_id, components, Reaction SMARTS. |

| Reagents File | Contains the list of purchasable building blocks (fragments). | Provided by library vendor; contains SMILES, synton_id, synton#, reaction_id. |

| REvoLd Application | The evolutionary algorithm executable, integrated into Rosetta. | Rosetta Software Suite (GitHub) |

| High-Performance Computing (HPC) Cluster | Provides the necessary computational power for parallel docking runs. | Recommended: 50-60 CPUs per run, 200-300 GB RAM total [17]. |

REvoLd has established itself as a powerful and efficient algorithm for ultra-large library screening. Its evolutionary approach directly addresses the computational bottleneck of traditional vHTS, achieving enrichment factors of over 1,600-fold in benchmarks and successfully identifying novel binders for challenging targets like LRRK2 in real-world blind trials [2] [25]. Its tight integration with combinatorial library definitions guarantees that proposed hits are synthetically accessible, bridging the gap between in-silico prediction and in-vitro testing.

A noted consideration is the potential for scoring function bias, such as a preference for nitrogen-rich rings observed in the LRRK2 study [25]. Future developments in scoring functions and integration with machine learning models promise to further enhance REvoLd's accuracy and scope.

For researchers validating predicted protein functions, REvoLd offers a practical and powerful pipeline. It efficiently narrows the vastness of ultra-large chemical spaces to a manageable set of high-priority, experimentally testable compounds, accelerating the critical step of moving from a computational prediction to a functional ligand.

Understanding protein function is pivotal for comprehending biological mechanisms, with far-reaching implications for medicine, biotechnology, and drug development [27]. However, an overwhelming annotation gap exists; more than 200 million proteins in databases like UniProt remain functionally uncharacterized, and over 60% of enzymes with assigned functions lack residue-level site annotations [27] [28]. Computational methods that bridge this gap by providing residue-level functional insights are therefore critically needed.

PhiGnet (Statistics-Informed Graph Networks) represents a significant methodological advancement by predicting protein functions solely from sequence data while simultaneously identifying the specific residues responsible for these functions [27]. This case study details the application of PhiGnet, framing it within a broader research thesis focused on validating protein function predictions. We provide a comprehensive examination of its architecture, a validated experimental protocol, performance benchmarks, and practical guidance for implementation, enabling researchers to apply this tool for in-depth protein functional analysis.

PhiGnet Architecture and Core Principles

PhiGnet is predicated on the hypothesis that information encapsulated in evolutionarily coupled residues can be leveraged to annotate functions at the residue level [27]. Its design integrates evolutionary data with a deep learning architecture to map sequence to function.

Key Conceptual Foundations

- Evolutionary Couplings (EVCs): These are pairs of residues that co-vary during evolution. Such co-variation often reflects functional constraints and is critical for maintaining protein structure and activity [27].

- Residue Communities (RCs): These are hierarchical interactions among networks of residues, representing functional units within the protein [27].

- Sequence-Function Relationship: The primary sequence of a protein contains all essential information required to fold into a three-dimensional shape, thereby determining its biological activities [27].
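To make the idea of co-varying residues concrete, the sketch below scores two alignment columns by their mutual information. This is a deliberately simple proxy and an assumption of this example: dedicated coupling methods typically use more elaborate statistical models that separate direct from indirect correlations, and nothing here reproduces PhiGnet's own EVC computation. The toy alignment is hypothetical.

```python
from collections import Counter
from math import log2


def column_mutual_information(msa, i, j):
    """Mutual information (bits) between columns i and j of an aligned MSA."""
    n = len(msa)
    col_i = Counter(seq[i] for seq in msa)
    col_j = Counter(seq[j] for seq in msa)
    pairs = Counter((seq[i], seq[j]) for seq in msa)
    mi = 0.0
    for (a, b), count in pairs.items():
        p_ab = count / n
        mi += p_ab * log2(p_ab / ((col_i[a] / n) * (col_j[b] / n)))
    return mi


# Toy alignment (hypothetical): columns 0 and 2 co-vary, column 1 is conserved.
toy_msa = ["AKD", "AKD", "GKE", "GKE"]
mi_02 = column_mutual_information(toy_msa, 0, 2)
mi_01 = column_mutual_information(toy_msa, 0, 1)
print(mi_02, mi_01)
```

The co-varying pair carries one full bit of shared information, while the pair involving the fully conserved column carries none, which is why conservation alone does not produce a coupling signal.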

Network Architecture

PhiGnet employs a dual-channel architecture, adopting stacked graph convolutional networks (GCNs) to assimilate knowledge from EVCs and RCs [27]. The workflow is as follows:

- Input Representation: A protein sequence is represented using embeddings from the pre-trained ESM-1b model, which captures evolutionary information [27] [29].

- Graph Construction: The ESM-1b embeddings form the nodes of a graph. The edges are defined by the evolutionary couplings (EVCs) and residue communities (RCs) [27].

- Dual-Channel Processing: The graph is processed through six graph convolutional layers across two stacked GCNs. This allows the model to integrate information from both pairwise residue couplings and community-level interactions [27].

- Function Prediction: The processed information is fed into a block of two fully connected layers, which generates a tensor of probabilities for assigning functional annotations, such as Enzyme Commission (EC) numbers and Gene Ontology (GO) terms [27].

- Residue-Level Annotation: An activation score for each residue is derived using Gradient-weighted Class Activation Mapping (Grad-CAM). This score quantitatively estimates the significance of individual amino acids for a specific protein function, thereby pinpointing functional sites [27].
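The graph convolution step can be illustrated with a single layer written in plain Python. This is a minimal sketch that assumes the widely used symmetric normalization with self-loops; the source does not specify PhiGnet's exact layer definition, and the tiny graph, features, and weights are hypothetical.

```python
def gcn_layer(adjacency, features, weights):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 . H . W)."""
    n = len(adjacency)
    # Add self-loops so each residue keeps its own features.
    a_hat = [[adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    degree = [sum(row) for row in a_hat]
    norm = [[a_hat[i][j] / (degree[i] ** 0.5 * degree[j] ** 0.5)
             for j in range(n)] for i in range(n)]
    # Aggregate neighbour features, then apply the linear map and ReLU.
    aggregated = [[sum(norm[i][k] * features[k][f] for k in range(n))
                   for f in range(len(features[0]))] for i in range(n)]
    return [[max(0.0, sum(aggregated[i][f] * weights[f][o]
                          for f in range(len(weights))))
             for o in range(len(weights[0]))] for i in range(n)]


# Hypothetical three-residue chain (edges 0-1 and 1-2) with 2-d node features.
adjacency = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
hidden = gcn_layer(adjacency, features, identity)
print(hidden)
```

Stacking several such layers lets information travel along longer paths in the residue graph, which is the motivation for using multiple convolutional layers per channel.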

The following diagram illustrates the core workflow of the PhiGnet architecture:

Application Protocol: Residue-Level Function Annotation

This protocol provides a step-by-step guide for using PhiGnet to annotate protein function and identify functional residues, using the serine-aspartate repeat-containing protein D (SdrD) and the mutual gliding-motility protein (MglA) as characterized examples [27].

Research Reagent Solutions

Table 1: Essential research reagents and computational tools for implementing PhiGnet.

| Item Name | Function/Description | Specifications/Alternatives |
|---|---|---|
| Protein Sequence (FASTA) | Primary input for the model. | Sequence of the protein of interest (e.g., UniProt accession). |
| PhiGnet Software | Core model for function prediction and residue scoring. | Available from original publication; requires Python/PyTorch environment. |
| ESM-1b Model | Generates evolutionary-aware residue embeddings from sequence. | Pre-trained model, integrated within the PhiGnet framework. |
| Evolutionary Coupling Database | Provides EVC data for graph edge construction. | Generated from multiple sequence alignments (MSAs). |
| Grad-CAM Module | Calculates activation scores to identify significant residues. | Integrated within PhiGnet. |
| Reference Database (e.g., BioLip) | For validating predicted functional sites against known annotations. | BioLip contains semi-manually curated ligand-binding sites [27]. |

Step-by-Step Procedure

Input Preparation and Data Retrieval

- Obtain the amino acid sequence of the target protein in FASTA format.

- Example: For SdrD, the sequence is retrieved from UniProtKB. SdrD is a protein that promotes bacterial survival in human blood [27].
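Input preparation can be sketched with a small FASTA reader and a sanity check on the residue alphabet. The record below is a hypothetical placeholder, not the SdrD sequence, and the helper names are assumptions of this example rather than part of PhiGnet.

```python
def read_fasta(text):
    """Parse FASTA-formatted text into a {header: sequence} dictionary."""
    records = {}
    header = None
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            header = line[1:]
            records[header] = []
        elif header is not None:
            records[header].append(line)
    return {name: "".join(parts) for name, parts in records.items()}


VALID_RESIDUES = set("ACDEFGHIKLMNPQRSTVWY")

# Hypothetical record; a real run would use the sequence downloaded from UniProtKB.
example = ">example_protein\nMKTAYIAKQR\nQISFVKSHFS\n"
sequences = read_fasta(example)
sequence = sequences["example_protein"]
unexpected = set(sequence) - VALID_RESIDUES
print(len(sequence), unexpected)
```

Checking for unexpected characters before embedding catches ambiguous residue codes and stray formatting early, when they are cheapest to fix.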

Sequence Embedding and Graph Construction

- Process the input sequence through the pre-trained ESM-1b model to generate a sequence of residue-level embedding vectors. These embeddings serve as the nodes in the graph [27].

- Compute Evolutionary Couplings (EVCs) and Residue Communities (RCs) for the protein. These define the edges between the nodes in the graph, representing evolutionary and functional relationships [27].

- Example in SdrD: Two primary RCs are identified and mapped onto its β-sheet fold. Residues within Community I coordinate three Ca²⁺ ions, stabilizing the SdrD fold [27].
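The graph construction step can be sketched as thresholding pairwise coupling scores into edges and then grouping linked residues. Treating connected components as communities is a crude stand-in chosen for this illustration; the source does not describe PhiGnet's community detection in enough detail to reproduce it, and the scores and threshold below are hypothetical.

```python
def build_edges(coupling_scores, threshold):
    """Keep residue pairs whose coupling score reaches the threshold."""
    return sorted(pair for pair, score in coupling_scores.items()
                  if score >= threshold)


def connected_groups(n_residues, edges):
    """Group residues linked by retained edges (a crude stand-in for communities)."""
    neighbours = {i: set() for i in range(n_residues)}
    for i, j in edges:
        neighbours[i].add(j)
        neighbours[j].add(i)
    seen, groups = set(), []
    for start in range(n_residues):
        # Skip residues already grouped and residues with no retained edge.
        if start in seen or not neighbours[start]:
            continue
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node in group:
                continue
            group.add(node)
            stack.extend(neighbours[node] - group)
        seen |= group
        groups.append(sorted(group))
    return groups


# Hypothetical coupling scores for a six-residue toy protein (0-based indices).
scores = {(0, 3): 0.9, (3, 5): 0.8, (1, 4): 0.7, (0, 1): 0.1, (2, 4): 0.2}
edges = build_edges(scores, threshold=0.5)
groups = connected_groups(6, edges)
print(edges, groups)
```

Residues far apart in sequence (such as 0, 3, and 5 here) can end up in the same group, which mirrors how coupled residues often cluster around a shared site in the folded structure.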

Model Inference and Function Prediction

- Feed the constructed graph into the trained PhiGnet model.

- The dual-channel GCNs process the graph, and the subsequent fully connected layers output probability scores for relevant functional annotations (e.g., EC numbers or GO terms) [27].

Residue-Level Activation Scoring

- Simultaneously, use the integrated Grad-CAM method to compute an activation score for each residue in the sequence. This score quantifies the residue's contribution to the predicted function [27].

- Example in MglA: Residues with high activation scores (≥ 0.5) are identified and correspond to a pocket that binds guanosine diphosphate (GDP), playing a role in nucleotide exchange. These high-scoring residues show strong agreement with semi-manually curated data in the BioLip database and are located at evolutionarily conserved positions [27].
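The scoring step can be sketched by applying the standard Grad-CAM recipe to per-residue feature maps: average the gradients per channel, form the weighted sum of channels for each residue, and apply a ReLU. Scaling by the maximum so that a 0.5 cutoff is meaningful is an assumption of this example, since the source does not state how PhiGnet normalizes its scores; the activations and gradients are hypothetical numbers.

```python
def grad_cam_scores(activations, gradients):
    """Grad-CAM style residue scores from per-residue feature maps and gradients.

    activations[i][k]: feature k of residue i at the chosen layer.
    gradients[i][k]:   d(class score) / d(activations[i][k]).
    """
    n, k_dim = len(activations), len(activations[0])
    # Channel weights: gradient averaged over all residues.
    alpha = [sum(gradients[i][k] for i in range(n)) / n for k in range(k_dim)]
    # Weighted sum over channels, then ReLU to keep positive evidence only.
    raw = [max(0.0, sum(alpha[k] * activations[i][k] for k in range(k_dim)))
           for i in range(n)]
    top = max(raw)
    # Scale to [0, 1] (assumed normalization) so a fixed cutoff can be applied.
    return [r / top if top > 0 else 0.0 for r in raw]


# Hypothetical values for four residues and two feature channels.
activations = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [0.2, 0.1]]
gradients = [[0.4, -0.2], [0.6, -0.2], [0.5, -0.1], [0.5, -0.3]]
scores = grad_cam_scores(activations, gradients)
high_scoring = [i for i, s in enumerate(scores) if s >= 0.5]
print(scores, high_scoring)
```

In a real pipeline the gradients would come from backpropagating the predicted class score through the trained network rather than being supplied by hand.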

Validation and Analysis

- Mapping: Project the activation scores onto a 3D protein structure (experimental or predicted) to visualize putative functional sites, such as binding pockets or catalytic clefts.

- Benchmarking: Compare the predictions against experimentally determined sites from databases like the Catalytic Site Atlas (CSA) or BioLip, or against sites identified through site-directed mutagenesis studies [27] [28].

- Validation Example: In a quantitative assessment on nine diverse proteins (including cPLA2α, Ribokinase, and TmpK), PhiGnet predicted functionally significant residues with an average accuracy of at least 75%. The activation scores, when mapped to 3D structures, showed significant enrichment at known binding interfaces for ligands, ions, and DNA [27].
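The benchmarking step amounts to comparing two sets of residue positions. The sketch below reports precision, recall, and Jaccard overlap as one reasonable way to quantify agreement; the source does not define how its accuracy figure is computed, so these metrics are an assumption, and the residue numbers are hypothetical.

```python
def site_agreement(predicted, reference):
    """Precision, recall and Jaccard overlap between two residue-index sets."""
    predicted, reference = set(predicted), set(reference)
    overlap = predicted & reference
    precision = len(overlap) / len(predicted) if predicted else 0.0
    recall = len(overlap) / len(reference) if reference else 0.0
    union = predicted | reference
    jaccard = len(overlap) / len(union) if union else 0.0
    return precision, recall, jaccard


# Hypothetical residue numbers, not taken from any of the proteins above.
predicted_site = [12, 13, 17, 40, 58]
reference_site = [12, 13, 17, 18]
precision, recall, jaccard = site_agreement(predicted_site, reference_site)
print(precision, recall, jaccard)
```

Reporting precision and recall separately helps distinguish a model that over-predicts sites from one that misses known residues, which a single accuracy number can hide.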

The following diagram summarizes this experimental workflow from input to validated output:

Performance and Validation

PhiGnet's residue-level annotations have been evaluated quantitatively against experimental data. The table below summarizes the key findings for individual proteins.

Table 2: Quantitative performance of PhiGnet in residue-level function annotation.

| Protein Target | Protein Function | PhiGnet Performance / Key Findings |
|---|---|---|
| SdrD Protein | Bacterial virulence; binds Ca²⁺ ions. | Identified Residue Community I, whose residues coordinate three Ca²⁺ ions crucial for fold stabilization [27]. |
| MglA Protein (EC 3.6.5.2) | Nucleotide exchange (GDP binding). | Residues with high activation scores (≥0.5) formed the GDP-binding pocket and agreed with BioLip annotations [27]. |
| cPLA2α, Ribokinase, αLA, TmpK, Ecl18kI | Diverse functions (ligand, ion, DNA binding). | Predicted functional sites agreed closely with experimental data (≥75% average accuracy) [27]. |
| cPLA2α | Binds multiple Ca²⁺ ions. | Accurately identified specific residues (Asp40, Asp43, Asp93, etc.) binding to 1Ca²⁺ and 4Ca²⁺ [27]. |

Discussion and Research Context

PhiGnet directly addresses a core challenge in the thesis of validating protein function predictions: the need for interpretable, residue-level evidence. By quantifying the significance of individual residues through activation scores, it moves beyond "black box" predictions and provides testable hypotheses for experimental validation, such as through site-directed mutagenesis [27] [30].

Its sole reliance on sequence data is a significant advantage, given the scarcity of experimentally determined structures compared to the abundance of available sequences [27]. However, when high-confidence predicted or experimental structures are available, integrating residue-level annotations from SIFTS can further enhance the analysis. SIFTS provides standardized, up-to-date residue-level mappings between UniProtKB sequences and PDB structures, incorporating annotations from resources like Pfam, CATH, and SCOP2 [31].

While other methods like PARSE (which uses local structural environments) and ProtDETR (which frames function prediction as a residue detection problem) also provide residue-level insights, PhiGnet's integration of evolutionary couplings and communities within a graph network offers a unique and powerful approach [28] [29]. The field is evolving towards models that are not only accurate but also inherently explainable, and PhiGnet represents a strong step in that direction, enabling more reliable function annotation and accelerating research in biomedicine and drug development [32] [29].

Optimizing EA Performance and Overcoming Common Pitfalls

Premature convergence is a prevalent and significant challenge in evolutionary algorithms (EAs), where a population of candidate solutions loses genetic diversity too rapidly, causing the search to become trapped in a local optimum rather than progressing toward the global best solution [33]. Within the specific context of validating protein function predictions, premature convergence can lead to incomplete or inaccurate functional annotations, as the algorithm may fail to explore the full landscape of possible protein structures and interactions. This directly compromises the reliability of computational predictions intended to guide experimental research in drug development [32] [34].

The fundamental cause of premature convergence is the maturation effect, where the genetic information of a slightly superior individual spreads too quickly through the population. This leads to a loss of alleles and a decrease in the population's diversity, which in turn reduces the algorithm's search capability [35]. Quantitative analyses have shown that the tendency for premature convergence is inversely proportional to the population size and directly proportional to the variance of the fitness ratio of alleles in the current population [35]. Maintaining population diversity is therefore not merely beneficial but essential for the effective application of EAs to complex biological problems like protein function prediction.
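Loss of diversity can be tracked directly during a run. The sketch below uses the mean pairwise Hamming distance between genomes as a diversity measure, which is one common choice and an assumption of this example; the bit-string populations are hypothetical, and any threshold for flagging convergence would need to be tuned per problem.

```python
from itertools import combinations


def mean_pairwise_hamming(population):
    """Average normalized Hamming distance over all pairs of equal-length genomes."""
    pairs = list(combinations(population, 2))
    if not pairs:
        return 0.0
    length = len(population[0])
    total = sum(sum(a != b for a, b in zip(x, y)) / length for x, y in pairs)
    return total / len(pairs)


# Hypothetical bit-string populations at an early and a late generation.
early = ["0110", "1001", "1100", "0011"]
late = ["1100", "1100", "1100", "1101"]
early_diversity = mean_pairwise_hamming(early)
late_diversity = mean_pairwise_hamming(late)
print(early_diversity, late_diversity)
```

A sharp drop in this value while the best fitness has stopped improving is a typical sign that one individual's alleles have taken over the population.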

Quantitative Analysis of Premature Convergence

Effectively identifying and measuring premature convergence is a critical step in mitigating its effects. Key metrics allow researchers to monitor the algorithm's health and take corrective action when necessary.

Table 1: Key Metrics for Identifying Premature Convergence