Metagenome Assembly Mastery: A Comparative Guide to SPAdes, metaSPAdes, and MEGAHIT Workflows

Abstract

This comprehensive guide provides researchers, scientists, and drug development professionals with an in-depth analysis of leading metagenomic assembly workflows. It explores the foundational principles of SPAdes, its specialized variant metaSPAdes, and the resource-efficient MEGAHIT. The article details methodological pipelines, offers troubleshooting and optimization strategies, and presents a comparative framework for validation and tool selection. By synthesizing current best practices, this resource aims to empower professionals to generate high-quality metagenome-assembled genomes (MAGs) for applications in biomarker discovery, pathogen detection, and therapeutic development.

Deconstructing Metagenomic Assemblers: Core Algorithms of SPAdes, metaSPAdes, and MEGAHIT

Metagenomic assembly is the computational process of reconstructing longer contiguous sequences (contigs) from short, overlapping sequencing reads derived directly from environmental samples. The main challenges are community complexity, uneven species abundances, and closely related strain variants. When comparing the SPAdes/metaSPAdes and MEGAHIT workflows, these challenges lead to a few key considerations. metaSPAdes performs well in complex, high-diversity communities because of its multi-sized de Bruijn graph approach and its handling of uneven coverage, which makes it well suited to recovering high-quality metagenome-assembled genomes (MAGs). MEGAHIT prioritizes speed and low memory usage, often making it possible to assemble larger datasets on limited hardware, which is valuable for large-scale biodiversity surveys. After assembly, binning groups contigs into putative genomes (bins) based on sequence composition and coverage across samples, using tools such as MetaBAT2, MaxBin2, and CONCOCT.

Table 1: Comparative Overview of metaSPAdes and MEGAHIT

| Feature | metaSPAdes | MEGAHIT |
|---|---|---|
| Core Algorithm | Multi-sized de Bruijn graph | Succinct de Bruijn graph |
| Primary Strength | Accuracy, handling strain diversity | Speed & memory efficiency |
| Optimal Use Case | High-quality MAG recovery, complex communities | Large-scale datasets, limited compute resources |
| Typical Memory Usage | Higher (e.g., ~500 GB for 1 Tb reads) | Lower (e.g., ~200 GB for 1 Tb reads) |
| Typical Runtime | Slower | Faster |
| Key Reference | Nurk et al., Genome Res, 2017 | Li et al., Bioinformatics, 2015 |

Detailed Experimental Protocols

Protocol 2.1: Combined Assembly Workflow Using metaSPAdes and MEGAHIT

This protocol describes a hybrid strategy for leveraging both assemblers to maximize contig recovery.

Quality Control & Read Preparation:

- Input: Paired-end FASTQ files from Illumina sequencing.

- Use FastQC (v0.12.1) for initial quality assessment.

- Trim adapters and low-quality bases using Trimmomatic (v0.39) or fastp (v0.23.4).

- Parameters for Trimmomatic:

ILLUMINACLIP:TruSeq3-PE.fa:2:30:10 LEADING:3 TRAILING:3 SLIDINGWINDOW:4:20 MINLEN:50
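The SLIDINGWINDOW:4:20 and MINLEN:50 steps can be illustrated with a simplified Python sketch. It assumes Phred+33 quality strings and cuts a read at the start of the first 4-base window whose mean quality drops below 20; Trimmomatic's own clipping rule differs in detail, so treat this as an illustration of the idea rather than a reimplementation.

```python
def phred33(qual_string):
    """Convert a Phred+33 FASTQ quality string to integer scores."""
    return [ord(ch) - 33 for ch in qual_string]


def sliding_window_trim(quals, window=4, min_avg=20, min_len=50):
    """Return how many 5' bases to keep, or 0 if the read should be dropped.

    Simplified SLIDINGWINDOW:<window>:<min_avg> followed by MINLEN:<min_len>.
    """
    keep = len(quals)
    # Scan windows from the 5' end and stop at the first low-quality window.
    for start in range(len(quals) - window + 1):
        if sum(quals[start:start + window]) / window < min_avg:
            keep = start  # drop the failing window and everything after it
            break
    # MINLEN: discard reads that became too short.
    return keep if keep >= min_len else 0


# A read with 60 good bases followed by a low-quality 3' tail.
print(sliding_window_trim([30] * 60 + [10] * 10))  # 59
```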

Co-assembly with MEGAHIT (Broad Recovery):

- Run MEGAHIT on all quality-filtered reads to generate a primary, computationally efficient assembly.

- Command:

megahit -1 sample1_R1.fq.gz,sample2_R1.fq.gz -2 sample1_R2.fq.gz,sample2_R2.fq.gz -o megahit_assembly --min-contig-len 1000

Targeted Assembly with metaSPAdes (Deep Dive):

- Map reads from selected samples of interest back to the MEGAHIT contigs using Bowtie2 (v2.5.1).

- Extract unmapped or poorly mapped read pairs. These represent sequences potentially missed by MEGAHIT.

- Assemble this subset of reads using metaSPAdes.

- Command:

metaspades.py -1 unmapped_R1.fq -2 unmapped_R2.fq -o metaspades_assembly --only-assembler
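Extracting read pairs in which neither mate aligned relies on the SAM FLAG field. In practice this is done with samtools (for example `samtools view -f 12` followed by `samtools fastq`); the Python sketch below only shows the bit logic. It covers fully unmapped pairs; defining "poorly mapped" pairs (for example by MAPQ or alignment identity) needs an additional, project-specific threshold that is not shown.

```python
# SAM FLAG bits, as defined in the SAM format specification.
PAIRED = 0x1
READ_UNMAPPED = 0x4
MATE_UNMAPPED = 0x8
BOTH_UNMAPPED = READ_UNMAPPED | MATE_UNMAPPED  # 12


def both_mates_unmapped(flag):
    """Same test as `samtools view -f 12`: bits 0x4 and 0x8 are both set."""
    return (flag & BOTH_UNMAPPED) == BOTH_UNMAPPED


def both_mates_mapped(flag):
    """Same test as `samtools view -F 12`: neither bit is set."""
    return (flag & BOTH_UNMAPPED) == 0


def unmapped_pair_names(sam_lines):
    """Collect names of paired reads whose mates are both unmapped."""
    names = set()
    for line in sam_lines:
        if line.startswith("@"):
            continue  # header line
        fields = line.rstrip("\n").split("\t")
        qname, flag = fields[0], int(fields[1])
        if flag & PAIRED and both_mates_unmapped(flag):
            names.add(qname)
    return names
```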

Assembly Merging and Dereplication:

- Concatenate contigs from both assemblies.

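- Use CD-HIT-EST (from CD-HIT v4.8.1) to cluster and dereplicate highly similar contigs (e.g., at 95% identity and 90% coverage of the shorter sequence, -c 0.95 -aS 0.9). dRep (v3.4.2) dereplicates whole genomes rather than individual contigs, so it is better applied to bins after Protocol 2.2.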

Protocol 2.2: Binning and MAG Refinement

Coverage Profile Generation:

- Map all reads from each sample to the final contig set and calculate per-contig coverage with coverM (v0.6.1). coverM can perform the mapping itself, as in the command below, or take existing Bowtie2 alignments via --bam-files.

- Command (per sample):

coverm contig --coupled sample1_R1.fq.gz sample1_R2.fq.gz --reference contigs.fasta -o coverm_results -t 20 --min-read-percent-identity 95

Compositional Binning:

- Run multiple binning tools on the contigs (using coverage profiles and tetranucleotide frequency).

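- MetaBAT2 (takes the contigs plus one sorted BAM file per sample):

runMetaBat.sh -m 1500 contigs.fasta sample*.sorted.bam

- MaxBin2 (takes a text file listing one per-sample abundance file per line):

run_MaxBin.pl -contig contigs.fasta -abund_list abundance_files.txt -out maxbin2_out

- CONCOCT: Requires cutting long contigs into shorter fragments first. Follow the tool's specific workflow.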
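Binners such as MetaBAT2 combine coverage with tetranucleotide frequency (TNF), usually counting each 4-mer together with its reverse complement because a contig may come from either strand. A minimal sketch of that composition vector follows; how each tool weights and compares these vectors differs and is not reproduced here.

```python
from itertools import product

_COMPLEMENT = str.maketrans("ACGT", "TGCA")
_VALID = set("ACGT")


def revcomp(seq):
    return seq.translate(_COMPLEMENT)[::-1]


# Collapse each tetranucleotide with its reverse complement: 136 canonical 4-mers.
CANONICAL = sorted({min(k, revcomp(k)) for k in ("".join(p) for p in product("ACGT", repeat=4))})
INDEX = {kmer: i for i, kmer in enumerate(CANONICAL)}


def tnf(seq):
    """Normalized tetranucleotide frequency vector of a contig."""
    seq = seq.upper()
    counts = [0] * len(CANONICAL)
    for i in range(len(seq) - 3):
        kmer = seq[i:i + 4]
        if set(kmer) <= _VALID:  # skip windows containing N or other symbols
            counts[INDEX[min(kmer, revcomp(kmer))]] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else counts


print(len(CANONICAL))  # 136
```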

Consensus Binning with DAS Tool:

- Use DAS Tool (v1.1.6) to integrate bins from multiple tools and produce a refined, non-redundant set of bins.

- Command:

DAS_Tool -i metabat2_bins.txt,maxbin2_bins.txt -l MetaBAT,MaxBin -c contigs.fasta -o das_tool_results --score_threshold 0.5

MAG Quality Assessment:

- Assess completion and contamination of bins using CheckM2 (v1.0.1) or CheckM lineage workflow.

- Command (CheckM2):

checkm2 predict --threads 20 --input das_tool_results_DASTool_bins/ --output-directory checkm2_results
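CheckM2 estimates can be mapped onto the MIMAG draft-genome tiers. The sketch below applies only the completeness/contamination thresholds (high: >90% and <5%; medium: ≥50% and <10%; low: <50% and <10%) and omits the rRNA and tRNA requirements of the high-quality tier. It assumes a tab-separated report with columns named Name, Completeness and Contamination, as in CheckM2's quality_report.tsv; check the header of your own output.

```python
import csv


def mimag_tier(completeness, contamination):
    """MIMAG tier from completeness/contamination (%) only.

    The MIMAG high-quality tier also requires 23S, 16S and 5S rRNA genes and
    at least 18 tRNAs; those checks are not performed here.
    """
    if contamination >= 10:
        return "below MIMAG thresholds"
    if completeness > 90 and contamination < 5:
        return "high"
    if completeness >= 50:
        return "medium"
    return "low"


def tiers_from_report(lines):
    """Read a CheckM2-style TSV (assumed columns: Name, Completeness, Contamination)."""
    reader = csv.DictReader(lines, delimiter="\t")
    return {
        row["Name"]: mimag_tier(float(row["Completeness"]), float(row["Contamination"]))
        for row in reader
    }
```

Usage might look like `with open("checkm2_results/quality_report.tsv") as fh: tiers_from_report(fh)`, assuming that output file name.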

Visualization of Workflows

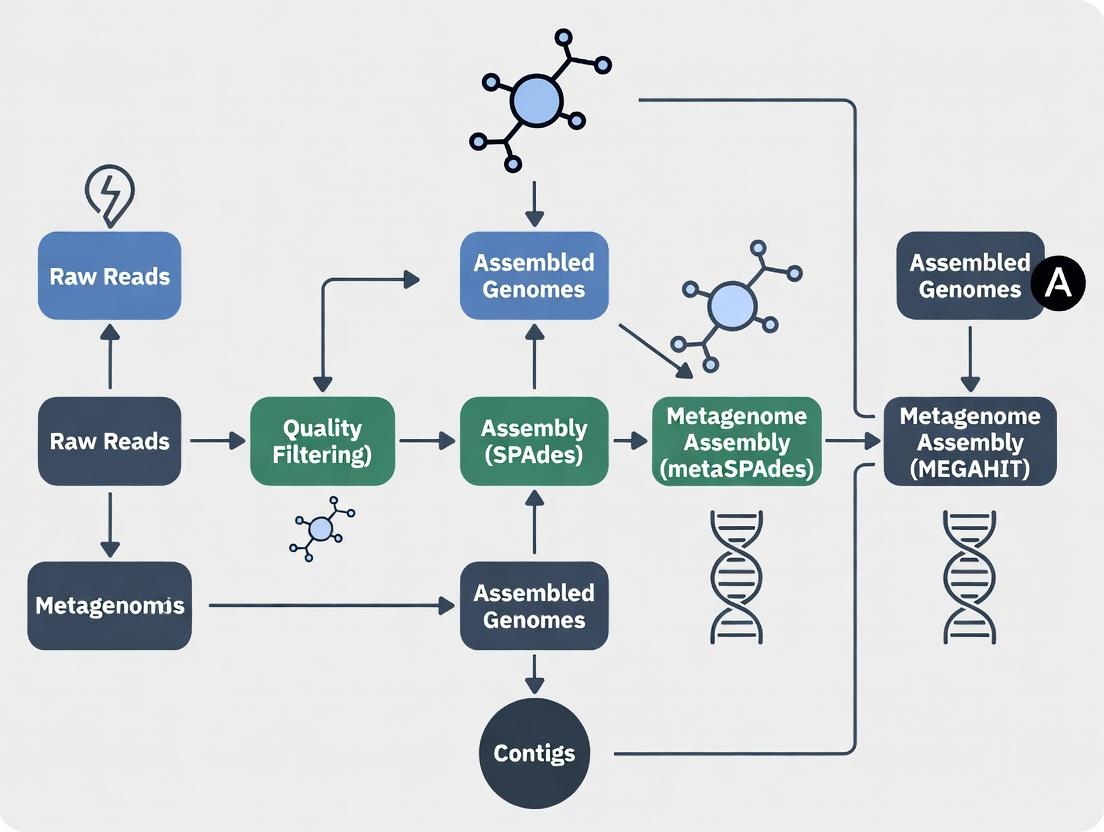

Title: MetaSPAdes and MEGAHIT Hybrid Assembly & Binning Workflow

Title: Core Steps in de Bruijn Graph Assembly

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools & Resources for Metagenomic Assembly

| Item/Category | Example(s) | Primary Function |

|---|---|---|

| Quality Control | fastp, Trimmomatic, FastQC | Remove adapter sequences, trim low-quality bases, and generate quality reports. |

| Read Mapper | Bowtie2, BWA, minimap2 | Align sequencing reads to a reference (e.g., contigs) for coverage analysis. |

| Assembly Engine | metaSPAdes, MEGAHIT, IDBA-UD | Core algorithm to build graphs and output contigs from reads. |

| Binning Tool | MetaBAT2, MaxBin2, CONCOCT | Cluster contigs into bins/MAGs using coverage and composition. |

| Binning Refiner | DAS Tool, Binning_refiner | Integrate results from multiple binners to produce a superior consensus set. |

| Quality Assessor | CheckM/CheckM2, BUSCO, QUAST | Evaluate completeness, contamination, and strain heterogeneity of MAGs. |

| Taxonomic Classifier | GTDB-Tk, CAT/BAT, Kaiju | Assign taxonomic labels to contigs or MAGs. |

| Functional Annotator | PROKKA, eggNOG-mapper, DRAM | Predict genes and annotate functional potential (e.g., KEGG, COG, Pfam). |

| Essential Databases | GTDB, NCBI RefSeq, KEGG, Pfam, eggNOG | Reference data for taxonomy, genome comparison, and functional annotation. |

| Workflow Management | Snakemake, Nextflow | Automate and reproducibly execute multi-step pipelines. |

| Compute Environment | High-memory servers (≥256 GB RAM), HPC clusters, Cloud (AWS/GCP) | Provides the necessary computational power for large metagenome assemblies. |

Within the thesis framework "Development of a SPAdes-metaSPAdes-MEGAHIT Assembly Workflow for Metagenomics Research," understanding the foundational SPAdes assembler is critical. While metaSPAdes and MEGAHIT are optimized for complex metagenomic data, the original SPAdes algorithm was designed for isolate genomes, particularly from single-cell and standard multicell sequencing. Its core innovations—the multi-sized de Bruijn graph and careful error correction—remain pivotal for generating high-quality isolate scaffolds, which serve as essential benchmarks in metagenomic analysis. This note details the principles and protocols for applying SPAdes to isolate genomes.

Core Algorithm: Multi-sized de Bruijn Graph

SPAdes builds a multi-sized de Bruijn graph (dBG) rather than relying on a single k-mer size. It iterates over a range of k-mer lengths (e.g., 21, 33, 55, 77 for Illumina data) from smallest to largest, and at each iteration uses the contigs from the previous k together with the reads to build the next graph. A k-mer is a substring of length k from a read. A de Bruijn graph represents k-mers as nodes, with edges connecting k-mers that overlap by k-1 bases. Short k-mers help resolve low-coverage regions, while long k-mers span repeats and reduce graph complexity. By carrying information forward across iterations, the final assembly graph combines the strengths of each k-mer size.
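To make the k-mer trade-off concrete, here is a toy node-centric de Bruijn graph built exactly as defined above (k-mers as nodes, edges for k-1 overlaps). It is a teaching sketch, not SPAdes' data structure, which also merges reverse complements, stores coverage, and condenses non-branching paths. On a read containing a short repeat, a small k creates a cycle that a larger k resolves.

```python
from collections import defaultdict


def kmers(seq, k):
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def de_bruijn_graph(reads, k):
    """Map each k-mer to the k-mers whose first k-1 bases equal its last k-1 bases.

    Reverse complements, coverage and error handling are ignored for brevity.
    """
    nodes = {kmer for read in reads for kmer in kmers(read, k)}
    by_prefix = defaultdict(set)
    for kmer in nodes:
        by_prefix[kmer[:-1]].add(kmer)
    return {kmer: set(by_prefix.get(kmer[1:], ())) for kmer in nodes}


reads = ["ACGTAC"]
g3 = de_bruijn_graph(reads, 3)
g5 = de_bruijn_graph(reads, 5)
print(g3["TAC"])    # {'ACG'}: the repeated "AC" closes a cycle at k=3
print(g5["CGTAC"])  # set(): at k=5 the cycle disappears
```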

Table 1: Standard k-mer Values and Their Impact in SPAdes (Illumina Data)

| k-mer Size | Primary Function | Typical Use Case | Trade-off |
|---|---|---|---|
| 21, 33 | Error correction, resolve low-coverage regions | Initial graph construction, sensitive to errors | Higher graph complexity, more branches |
| 55, 77 | Simplify graph, span short repeats | Main assembly phase, produce longer contigs | May break low-coverage regions |
| 99, 127 | Resolve complex repeats | Used with long-read or high-coverage data | Requires higher coverage |
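Because a mistyped k-list only fails once SPAdes starts, it can help to check it up front. The small helper below encodes the constraints we understand the SPAdes manual to impose on -k (odd values, each less than 128, listed in ascending order; duplicates are rejected here as well); verify them against the documentation of your installed version.

```python
def spades_k_arg(ks):
    """Validate a SPAdes -k list and return it as a comma-separated string."""
    if not ks:
        raise ValueError("k-mer list is empty")
    for k in ks:
        if k % 2 == 0 or k >= 128:
            raise ValueError(f"invalid k={k}: must be odd and less than 128")
    if any(later <= earlier for earlier, later in zip(ks, ks[1:])):
        raise ValueError("k values must be strictly ascending")
    return ",".join(str(k) for k in ks)


print(spades_k_arg([21, 33, 55, 77]))  # 21,33,55,77
```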

Experimental Protocol: Genome Assembly of a Bacterial Isolate Using SPAdes

Objective: Assemble a high-quality draft genome from Illumina paired-end reads of a bacterial isolate.

Materials & Computational Requirements:

- Input Data: Illumina paired-end FASTQ files (R1 and R2).

- Computer: Minimum 16 GB RAM for bacterial genomes; 32+ GB recommended.

- Software: SPAdes v3.15.5 or later installed.

Procedure:

- Quality Control:

- Use FastQC v0.11.9 to assess read quality.

- Trim adapters and low-quality bases using Trimmomatic v0.39:

java -jar trimmomatic-0.39.jar PE -phred33 input_R1.fq input_R2.fq output_R1_paired.fq output_R1_unpaired.fq output_R2_paired.fq output_R2_unpaired.fq ILLUMINACLIP:TruSeq3-PE.fa:2:30:10 LEADING:3 TRAILING:3 SLIDINGWINDOW:4:15 MINLEN:36

SPAdes Assembly:

- Run SPAdes in isolate mode:

spades.py -1 output_R1_paired.fq -2 output_R2_paired.fq -o spades_output --isolate -k 21,33,55,77

- The --isolate flag is recommended for high-coverage isolate data and improves both assembly quality and running time.

- The --careful flag runs MismatchCorrector to reduce mismatches and short indels, but it cannot be combined with --isolate. Use one or the other; --careful is recommended only for small genomes.

Output Analysis:

- Primary assembly outputs are in spades_output/contigs.fasta and spades_output/scaffolds.fasta.

- Assess assembly quality using QUAST v5.0.2:

quast.py spades_output/contigs.fasta -o quast_report
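The headline contiguity metric in the QUAST report, N50, is easy to reproduce from contig lengths, which helps when sanity-checking reports. A minimal Python sketch follows; QUAST by default only considers contigs of at least 500 bp, which the optional min_len argument can mimic.

```python
def fasta_lengths(lines):
    """Sequence lengths from FASTA text lines (multi-line records allowed)."""
    lengths = []
    for line in lines:
        line = line.strip()
        if line.startswith(">"):
            lengths.append(0)
        elif line and lengths:
            lengths[-1] += len(line)
    return lengths


def n50(lengths, min_len=0):
    """Length L such that contigs >= L cover at least half of the total length."""
    kept = sorted((n for n in lengths if n >= min_len), reverse=True)
    total = sum(kept)
    running = 0
    for n in kept:
        running += n
        if 2 * running >= total:
            return n
    return 0


print(n50([100, 80, 60, 40, 20]))  # 80
```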

Visualization: SPAdes Workflow for Isolates

SPAdes Multi-k de Bruijn Graph Assembly Flow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials and Tools for SPAdes Isolate Assembly

| Item | Function/Description |
|---|---|
| Illumina DNA Prep Kit | Library preparation for Illumina sequencing. |
| Qubit dsDNA HS Assay Kit | Accurate quantification of genomic DNA pre-library prep. |
| SPAdes Software (v3.15.5+) | Core assembly algorithm with isolate mode. |
| Trimmomatic | Removes adapters and low-quality sequences; critical for input cleanliness. |
| QUAST | Evaluates assembly quality (N50, contig count, misassemblies). |
| CheckM | Assesses genome completeness and contamination for isolates. |
| Bandage | Visualizes assembly graphs for manual inspection. |

Integration into the Metagenomics Thesis Workflow

In the broader thesis, the SPAdes isolate protocol establishes a baseline. The high-quality isolate assemblies generated here can be used as reference genomes for evaluating metaSPAdes (designed for metagenomes) and MEGAHIT (for large-scale metagenomic datasets) performance on known constituents within a synthetic or controlled community. Comparing contiguity, completeness, and error rates across these tools on isolate data informs selection criteria for the final hybrid metagenomic workflow.

This document details the application and protocols for metaSPAdes, a core component within a comprehensive metagenomic assembly workflow. The broader thesis framework posits that a strategic, multi-assembler approach—specifically leveraging SPAdes, metaSPAdes, and MEGAHIT—optimizes the recovery of high-quality microbial genomes from complex environmental and clinical samples. metaSPAdes is engineered as an extension of the SPAdes genome assembler, introducing key algorithmic adaptations to address the challenges intrinsic to metagenomic data: uneven sequencing depth, high strain diversity, and the presence of multiple, unknown genomes.

Algorithmic Adaptations of metaSPAdes

The core adaptations of metaSPAdes address limitations of single-genome assemblers in complex communities.

- Multi-Coverage Assembly Graphs: Unlike SPAdes, which assumes uniform coverage, metaSPAdes constructs and analyzes de Bruijn graphs that account for varying coverage depths across different genomes and genomic regions. This prevents the erroneous merging of sequences from abundant and rare organisms.

- Strain-Aware Graph Simplification: Specialized algorithms differentiate between sequencing errors, true genomic variation, and polymorphisms from co-existing strains of the same species. This preserves strain diversity within the assembly graph instead of collapsing it.

- Read Error Correction: Reads are error-corrected before graph construction, with the procedure tuned for the variable k-mer coverage profiles found in metagenomes. SPAdes' post-assembly MismatchCorrector (the --careful option) is not supported in metaSPAdes.
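Why coverage variation matters for graph simplification can be shown with a deliberately simplified toy. It compares a single global coverage cutoff with a cutoff relative to a competing neighbouring edge; the edge names, coverage values and the 0.1 ratio are invented for illustration, and this is not the actual metaSPAdes simplification procedure.

```python
def keep_edges_global(edges, min_cov=5.0):
    """Keep edges whose coverage reaches a single global cutoff."""
    return {name for name, (cov, _) in edges.items() if cov >= min_cov}


def keep_edges_relative(edges, ratio=0.1):
    """Keep an edge unless it is weak relative to the competing edge next to it."""
    return {name for name, (cov, rival_cov) in edges.items() if cov >= ratio * rival_cov}


# edge name -> (edge coverage, coverage of the competing alternative edge)
edges = {
    "error_branch": (3.0, 100.0),   # likely a sequencing error on an abundant genome
    "rare_genome": (3.0, 4.0),      # low coverage, but so is everything around it
    "abundant_genome": (100.0, 3.0),
}
print(sorted(keep_edges_global(edges)))    # ['abundant_genome']
print(sorted(keep_edges_relative(edges)))  # ['abundant_genome', 'rare_genome']
```

The global cutoff discards the rare organism together with the error, while the relative rule removes only the branch that is weak compared with its abundant neighbour.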

Table 1: Key Algorithmic Adaptations in metaSPAdes vs. SPAdes

| Feature | SPAdes (Single Genome) | metaSPAdes (Metagenome) | Purpose in Metagenomics |
|---|---|---|---|
| Coverage Assumption | Uniform | Multi-component, varying | Prevents chimeras between organisms of different abundance |
| Graph Construction | Single genome-focused | Multi-genome, strain-aware | Manages high diversity and strain heterogeneity |
| Error Correction | Standard iterative | Metagenome-optimized iterative | Handles variable k-mer coverage across community |
| Read Support | Standard | Enhanced for low-coverage genomes | Improves assembly of rare community members |

Detailed Experimental Protocol: metaSPAdes Assembly

This protocol assumes quality-controlled (trimmed, adapter-removed) paired-end Illumina reads.

Materials & Input Data

- Compute Resources: High-memory server (Recommended: 250+ GB RAM for complex communities).

- Software: metaSPAdes v3.15.5 (or latest).

- Input Files: sample_R1.fastq.gz and sample_R2.fastq.gz. metaSPAdes accepts a single paired-end short-read library; additional mate-pair libraries are not supported.

Step-by-Step Procedure

Basic Assembly Command:

metaspades.py -1 sample_R1.fastq.gz -2 sample_R2.fastq.gz -o metaspades_output -t 32 -m 250

Key Options:

- -1, -2: The paired-end library (metaSPAdes supports only one short-read library, which must be paired-end).

- -t: Number of computational threads (e.g., 32).

- -m: Memory limit in GB (e.g., 250).

- --meta: When passed to spades.py, runs the same metagenomic pipeline as metaspades.py; it does not add a gene-prediction step.

Output Interpretation:

- contigs.fasta: Final contigs for downstream analysis (binning, annotation).

- scaffolds.fasta: Scaffolds produced by joining contigs using paired-end read information.

- assembly_graph.fastg: Final assembly graph (visualizable with Bandage).

- spades.log: Detailed log of the assembly process.
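The contig headers in contigs.fasta encode each contig's length and coverage, which is handy for quick filtering before binning. A minimal Python sketch, assuming the NODE_<n>_length_<len>_cov_<cov> header layout written by SPAdes 3.x; the cov value is k-mer coverage, which is lower than per-base read coverage. The 1500 bp default simply mirrors the MetaBAT2 -m value used earlier.

```python
import re

# SPAdes contig/scaffold headers look like: >NODE_12_length_2045_cov_17.352113
_SPADES_HEADER = re.compile(r"^>?NODE_(\d+)_length_(\d+)_cov_(\d+(?:\.\d+)?)")


def parse_spades_header(header):
    """Return (node number, length in bp, k-mer coverage) from a SPAdes header."""
    match = _SPADES_HEADER.match(header)
    if match is None:
        raise ValueError(f"not a SPAdes header: {header!r}")
    node, length, cov = match.groups()
    return int(node), int(length), float(cov)


def headers_at_least(headers, min_len=1500):
    """Keep headers of contigs at or above a length cutoff."""
    return [h for h in headers if parse_spades_header(h)[1] >= min_len]


print(parse_spades_header(">NODE_12_length_2045_cov_17.352113"))
```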

Workflow Diagrams

Title: SPAdes-metaSPAdes-MEGAHIT Metagenomics Assembly Workflow

Title: metaSPAdes Internal Algorithmic Process

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials & Tools for metaSPAdes Metagenomic Workflow

| Item | Function & Relevance | Example/Note |
|---|---|---|
| High-Quality DNA Extraction Kit | Inhibitor-free DNA extraction from complex matrices (soil, stool). Critical for representative library prep. | DNeasy PowerSoil Pro Kit (QIAGEN) |
| Illumina-Compatible Library Prep Kit | Prepares metagenomic DNA for sequencing with unique dual indices to pool samples. | Nextera DNA Flex Library Kit |
| metaSPAdes Software | Core metagenome assembler with algorithmic adaptations for complex communities. | v3.15.5+; run via Conda (conda install -c bioconda spades) |
| Computational Server | High RAM (≥250GB) and multi-core CPUs required for assembling complex communities. | Cloud (AWS, GCP) or local cluster |
| Quality Control Tools | Pre-assembly read trimming and adapter removal. | fastp, Trimmomatic |
| Assembly Graph Viewer | Visual inspection of the assembly_graph.fastg to assess complexity and potential issues. | Bandage |
| Contig Evaluation Tool | Assess assembly quality (N50, length stats) post-assembly. | QUAST (MetaQUAST module) |
| Metagenomic Binning Software | Groups assembled contigs into putative genome bins after metaSPAdes assembly. | MetaBAT2, MaxBin2 |
| CheckM / BUSCO | Assess completeness and contamination of genome bins produced from metaSPAdes contigs. | Critical for downstream analysis validity |

Application Notes

MEGAHIT is a specialized, memory-efficient NGS assembler for large and complex metagenomics datasets. It constructs a succinct de Bruijn graph (SdBG) to assemble genomes from deeply sequenced microbial communities. Its primary advantage lies in its ability to assemble large datasets (e.g., >100 billion base pairs) on a single server with limited memory, making it a critical tool in the SPAdes/metaSPAdes/MEGAHIT workflow paradigm for metagenomics.

Quantitative Performance Comparison of Assemblers

Recent benchmarking studies (circa 2023-2024) highlight the trade-offs between leading assemblers.

Table 1: Comparative Performance of Metagenome Assemblers on Benchmark Datasets

| Assembler | Optimal Use Case | Average Contig N50* (kbp) | Memory Efficiency (GB per 10 Gbp data) | Speed (CPU hours per 10 Gbp) | Key Strength |
|---|---|---|---|---|---|
| MEGAHIT | Large-scale, complex metagenomes | 8 - 15 | 2 - 5 | 10 - 20 | Exceptional memory efficiency & speed |
| metaSPAdes | High-quality, isolate-like genomes from metagenomes | 12 - 25 | 50 - 100 | 50 - 100 | Superior contig continuity & accuracy |
| SPAdes | Isolate genomes, low-complexity communities | 15 - 30+ | 30 - 60 | 20 - 40 | Optimized for single genomes |
| IDBA-UD | Small to medium-sized metagenomes | 7 - 12 | 20 - 40 | 30 - 60 | Iterative k-mer strategy |

*N50 values are highly dataset-dependent; ranges reflect typical outcomes on complex mock communities.

Table 2: MEGAHIT Performance on Real Large-Scale Datasets

| Dataset Description | Input Size (Gbp) | Memory Peak (GB) | Runtime (CPU hrs) | # Contigs (>500 bp) | Largest Contig (kbp) |
|---|---|---|---|---|---|
| Human Gut Metagenome | 150 | 45 | 180 | 1,200,000 | 145 |
| Ocean Microbial Community | 450 | 120 | 520 | 3,500,000 | 89 |
| Soil Metagenome (Complex) | 80 | 25 | 95 | 900,000 | 72 |

The SPAdes/metaSPAdes/MEGAHIT Workflow Context

Within the broader thesis on metagenomic assembly workflows, MEGAHIT occupies a specific niche. The choice between metaSPAdes and MEGAHIT is not one of superiority but of strategic application based on project goals and resources. A hybrid assembly approach is often employed: MEGAHIT is used for an initial, resource-efficient assembly of all data, and its output can be used to subset reads for targeted, deeper assembly of specific taxa of interest using metaSPAdes for superior continuity.

Experimental Protocols

Protocol: Standard MEGAHIT Assembly for Metagenomic Paired-End Reads

Objective: To assemble raw metagenomic Illumina paired-end reads into contigs using MEGAHIT.

Materials:

- Raw FASTQ files (R1 and R2).

- A Linux server with MEGAHIT installed (v1.2.9 or later).

- Adequate disk space for intermediate files.

Procedure:

- Quality Control & Adapter Trimming: Use Trimmomatic or fastp.

- MEGAHIT Assembly: Run megahit on the cleaned reads. MEGAHIT uses an iterative k-mer strategy by default, and --k-list specifies the range of k-mer sizes to iterate over. Key options: -1 and -2 (input cleaned paired-end reads); -o (output directory); --k-list (progressive, odd-numbered k-mers from 27 to 87 are recommended for diverse communities); --min-contig-len (minimum contig length, default 200); --num-cpu-threads (number of CPU threads to use).

- Output: The final contigs are in megahit_assembly_output/final.contigs.fa.
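Contig headers in final.contigs.fa carry the contig length and an average k-mer multiplicity. The sketch below assumes the `>k141_0 flag=1 multi=5.0000 len=345` layout written by MEGAHIT v1.2.x; check a few headers in your own output before relying on it. The 1000 bp default is only an example cutoff.

```python
def parse_megahit_header(header):
    """Return (contig id, length, multiplicity) from a MEGAHIT header line."""
    contig_id, *fields = header.lstrip(">").split()
    attrs = dict(field.split("=", 1) for field in fields)
    return contig_id, int(attrs["len"]), float(attrs["multi"])


def ids_at_least(headers, min_len=1000):
    """IDs of contigs at or above a length cutoff (mirrors --min-contig-len)."""
    kept = []
    for header in headers:
        contig_id, length, _ = parse_megahit_header(header)
        if length >= min_len:
            kept.append(contig_id)
    return kept


print(parse_megahit_header(">k141_0 flag=1 multi=5.0000 len=345"))
```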

Protocol: Hybrid MEGAHIT-metaSPAdes Assembly Workflow

Objective: Leverage MEGAHIT's efficiency for a primary assembly and use its output to guide a targeted, high-quality metaSPAdes assembly.

Procedure:

- Perform the standard MEGAHIT assembly described in the previous protocol.

- Identify Target Contigs: Use taxonomic classifiers (e.g., Kaiju, Kraken2) on final.contigs.fa to identify contigs belonging to a taxon of interest (e.g., a specific bacterial genus).

- Map Reads to Target Contigs: Use Bowtie2 to map reads to the target contigs, then extract the read pairs that map to them with samtools. Note: samtools view -f 12 keeps pairs in which both mates are unmapped; to keep pairs in which both mates mapped to the target contigs, use -F 12 instead.

- Targeted metaSPAdes Assembly: Assemble the extracted reads (those mapped to the target) using metaSPAdes for improved genome reconstruction.
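Selecting target contigs from a Kraken2 run amounts to filtering its per-sequence output by taxonomy ID. A minimal sketch follows, assuming Kraken2 was run on final.contigs.fa with its default tab-separated output (no --use-names). It matches one taxID exactly; selecting a whole genus would additionally require expanding the target to its descendant taxIDs, which is not shown.

```python
def contigs_for_taxid(kraken_lines, taxid):
    """IDs of contigs that Kraken2 classified to exactly `taxid`.

    Assumed columns: status (C/U), sequence ID, taxonomy ID, length, LCA mappings.
    Descendant taxa (e.g. species below a target genus) are NOT included.
    """
    selected = []
    for line in kraken_lines:
        status, seq_id, tax = line.rstrip("\n").split("\t")[:3]
        if status == "C" and tax == str(taxid):
            selected.append(seq_id)
    return selected
```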

Visualizations

Title: MEGAHIT Standard Metagenomic Assembly Workflow

Title: Hybrid MEGAHIT and metaSPAdes Assembly Strategy

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools & Materials for Metagenomic Assembly Workflow

| Item | Function in Workflow | Example/Version | Notes |
|---|---|---|---|
| High-Throughput Sequencer | Generates raw metagenomic sequence data. | Illumina NovaSeq X, HiSeq; PacBio Revio. | Illumina dominant for MEGAHIT; long-reads used for hybrid polishing. |
| Computational Server | Executes memory-intensive assembly algorithms. | 64+ GB RAM, 16+ CPU cores, large SSD storage. | MEGAHIT reduces demand, enabling larger assemblies on modest hardware. |
| Quality Control Tool | Removes adapters, low-quality bases, and artifacts. | fastp, Trimmomatic, BBDuk. | Critical pre-processing step for all assemblers. |
| Metagenome Assembler (MEGAHIT) | Core tool for succinct de Bruijn graph construction. | MEGAHIT v1.2.9+. | Chosen for large-scale, complex datasets under memory constraints. |
| Metagenome Assembler (metaSPAdes) | Alternative assembler for high-quality contigs. | metaSPAdes v3.15.5+. | Used for targeted assembly or when maximum contiguity is priority. |
| Read Mapping Tool | Maps reads to contigs for binning or read extraction. | Bowtie2, BWA, minimap2. | Essential for hybrid workflow and validation. |
| Taxonomic Classifier | Assigns taxonomy to contigs/reads to guide analysis. | Kaiju, Kraken2, GTDB-Tk. | Identifies taxa of interest for targeted assembly (Hybrid Protocol). |
| Metagenomic Binning Tool | Groups contigs into putative genome bins. | MetaBAT2, MaxBin2, VAMB. | Standard post-assembly step for genome reconstruction. |
| Genome Quality Tool | Assesses completeness and contamination of bins. | CheckM2, BUSCO. | Provides metrics for downstream interpretation and publication. |

Within the metagenomic assembly workflow, the choice between assemblers like SPAdes, metaSPAdes, and MEGAHIT represents a critical trade-off between assembly accuracy, computational efficiency, and memory footprint. This document provides detailed application notes and protocols for researchers to evaluate and select the appropriate tool based on their project's constraints and objectives, framed within a broader thesis on optimizing metagenomic assembly pipelines for downstream analysis in drug discovery and functional characterization.

Comparative Quantitative Analysis

Recent benchmarking studies (2023-2024) using standardized datasets like CAMI2 and simulated complex communities provide the following performance metrics.

Table 1: Performance Metrics on Complex Metagenomes (≥50 Gb data, high diversity)

| Assembler | Estimated Accuracy (QV) | Computational Time (Hours) | Peak Memory (GB) | N50 (kbp) |
|---|---|---|---|---|
| SPAdes | 35-40 | 48-72 | 500-700 | 10-15 |
| metaSPAdes | 38-42 | 36-60 | 300-500 | 12-20 |
| MEGAHIT | 30-35 | 8-15 | 100-200 | 8-12 |

Table 2: Suitability Guidance by Project Goal

| Project Priority | Recommended Tool | Key Rationale |
|---|---|---|
| Maximum Contiguity & Accuracy | metaSPAdes | Optimized de Bruijn graph construction for metagenomes; best QV and N50. |
| Large-Scale Survey / Limited Resources | MEGAHIT | Superior time and memory efficiency; suitable for first-pass assembly. |
| Isolate or Low-Complexity Community | SPAdes (--isolate for isolates; --meta for low-complexity communities) | High accuracy for less complex samples; more configurable for specific genomes. |

Experimental Protocols

Protocol 1: Benchmarking Assembly Performance

Objective: Quantify accuracy, efficiency, and memory footprint of assemblers on a controlled dataset.

Materials:

- Compute node: Minimum 32 cores, 512 GB RAM, 1 TB SSD scratch space.

- Reference dataset: CAMI2 Toy Human Dataset (or similar).

- Software: SPAdes v3.15.5, metaSPAdes v3.15.5, MEGAHIT v1.2.9, QUAST v5.2.0, /usr/bin/time.

Methodology:

- Data Preparation: Download dataset. Perform quality trimming (e.g., with Trimmomatic or fastp).

- Resource Monitoring: Prepend all assembly commands with /usr/bin/time -v to record peak memory and CPU time.

- Assembly Execution:

- MEGAHIT:

megahit -1 R1.fq.gz -2 R2.fq.gz -o megahit_out --presets meta-large

- metaSPAdes:

metaspades.py -1 R1.fq.gz -2 R2.fq.gz -o metaspades_out -t 32 -m 500

- SPAdes (default multicell mode, as a non-metagenomic baseline; spades.py --meta would simply rerun metaSPAdes):

spades.py -1 R1.fq.gz -2 R2.fq.gz -o spades_out -t 32 -m 500

- Evaluation: Run QUAST on all assemblies, passing the reference with -r:

quast.py -o quast_results --min-contig 1000 -r reference.fasta assembly*.fasta

- Data Collection: Record N50 and misassemblies from the QUAST reports. QUAST does not report QV directly, so derive an approximate QV from its mismatch and indel rates per 100 kbp (QV = -10 log10 of the errors per base). Record "Maximum resident set size" and "Elapsed (wall clock) time" from the /usr/bin/time output.
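Since QUAST reports mismatch and indel rates per 100 kbp rather than QV, an approximate QV can be derived from them. The Python sketch below is one simple way to do so; it is a rough proxy based only on errors in aligned regions, not the k-mer-based QV reported by tools such as Merqury.

```python
import math


def approx_qv(mismatches_per_100kbp, indels_per_100kbp):
    """Phred-scaled consensus quality from QUAST per-100-kbp error rates.

    Only counts mismatches and indels in aligned regions; misassemblies and
    unaligned sequence are ignored.
    """
    errors_per_base = (mismatches_per_100kbp + indels_per_100kbp) / 100_000
    if errors_per_base == 0:
        return math.inf  # no observed errors
    return -10 * math.log10(errors_per_base)


print(round(approx_qv(6.0, 4.0), 2))  # 40.0
```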

Protocol 2: Hybrid Assembly Strategy for Critical Targets

Objective: Leverage MEGAHIT's efficiency for initial assembly, followed by metaSPAdes for subset refinement.

Methodology:

- Rapid Co-assembly: Run MEGAHIT on all samples from a cohort.

- Target Gene Identification: Use Prodigal to predict ORFs from the MEGAHIT assembly, then HMMER to identify contigs containing marker genes of interest (e.g., antibiotic resistance genes, biosynthetic gene clusters).

- Read Mapping & Extraction: Map raw reads back to target contigs using Bowtie2. Extract reads mapping to these regions with SAMtools.

- Refined Assembly: Assemble the extracted, enriched read set using metaSPAdes with an extended k-mer range (e.g., -k 21,33,55,77).

- Validation: Compare contig length and completeness of the target region between the hybrid approach and a single metaSPAdes assembly of the entire dataset.
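Going from HMMER hits back to contigs only requires stripping Prodigal's gene suffix. A minimal sketch, assuming hmmsearch was run with --tblout on Prodigal's protein output, whose IDs take the form `<contig id>_<gene number>`. The 1e-5 E-value cutoff is an arbitrary example and should be replaced by model-specific thresholds (e.g., Pfam gathering cutoffs) where available.

```python
def contigs_with_hits(tblout_lines, max_evalue=1e-5):
    """Contig IDs carrying at least one significant HMMER hit.

    In --tblout format, column 1 is the target (protein) name and column 5 is
    the full-sequence E-value.
    """
    contigs = set()
    for line in tblout_lines:
        if not line.strip() or line.startswith("#"):
            continue  # skip comments and blank lines
        fields = line.split()
        protein_id, evalue = fields[0], float(fields[4])
        if evalue <= max_evalue:
            contigs.add(protein_id.rsplit("_", 1)[0])  # drop the gene number
    return contigs
```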

Visual Workflows

Assembly Selection Decision Workflow

Hybrid Targeted Assembly Protocol

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Reagents and Computational Tools

| Item Name | Category | Primary Function in Workflow |
|---|---|---|
| Illumina NovaSeq Reagents | Wet-Lab Chemistry | Generate high-throughput paired-end (e.g., 2x150 bp) sequencing data; input for all assemblies. |
| ZymoBIOMICS Mock Community | Validation Standard | Provides a defined microbial mixture for benchmarking assembly accuracy and completeness. |
| SPAdes/metaSPAdes Toolkit | Software | Implements advanced de Bruijn graph algorithms for accurate, contiguous assembly. |
| MEGAHIT Software | Software | Employs succinct data structures for highly memory- and time-efficient assembly. |
| QUAST (MetaQUAST) | Evaluation Software | Evaluates assembly quality metrics (N50, mismatch and indel rates, misassemblies) against references or intrinsically. |
| Bowtie2 / BWA | Software | Maps raw reads back to contigs for quantification, binning, or read extraction in hybrid protocols. |
| Prodigal | Software | Predicts protein-coding regions (ORFs) on assembled contigs for functional annotation. |
| HMMER Suite | Software | Scans predicted ORFs against Pfam/other HMM databases to identify genes of interest. |

Within the broader thesis investigating the SPAdes/metaSPAdes and MEGAHIT assembly workflows for metagenomics, this document establishes the critical foundational phase. The choice and success of any subsequent computational assembly and analysis are wholly dependent on rigorously defining project goals, understanding sample complexity, and accurately provisioning compute resources a priori. These preliminary considerations form the strategic blueprint for the entire research endeavor.

Defining Project Goals: A Strategic Framework

Clarity in project objectives directly dictates experimental design, sequencing strategy, and downstream analytical pipeline selection.

Table 1: Project Goal Specifications and Their Downstream Implications

| Project Goal | Recommended Sequencing Approach | Key Quality Metric | Primary Assembly Workflow Consideration | Downstream Analysis Focus |
|---|---|---|---|---|
| Taxonomic Profiling | 16S rRNA amplicon (V3-V4) or shallow shotgun (~5-10 M reads) | Alpha/Beta diversity indices | Often not required; direct read classification | Community composition, differential abundance |
| Functional Potential | Deep shotgun metagenomics (>20-50 M reads) | Number of predicted ORFs/KEGG modules | High-contiguity genes for annotation | Pathway analysis, CAZyme profiling, resistance gene screening |
| Genome-Resolved Metagenomics (MAGs) | Very deep shotgun (>60-100 M reads), long-read integration | MAG completeness/contamination (CheckM) | Assembler's ability to handle strain heterogeneity | Single-variant analysis, metabolic reconstruction |
| Viral/Eukaryotic Community | Size fractionation, deep sequencing, enrichment | Proportion of host reads | Sensitivity to low-abundance, high-diversity sequences | Specialized classifiers (VirSorter, EukCC) |

Protocol 2.1: Goal Definition and Feasibility Assessment

- Stakeholder Alignment: Formally document primary and secondary research questions.

- Literature Benchmarking: Search for recent (last 2-3 years) studies with analogous goals in similar sample types (e.g., soil, human gut, wastewater). Use PubMed and Google Scholar with keywords: "metagenome assembly benchmark [sample type] [year]".

- Output Specification: Define the required deliverables (e.g., list of species, catalog of genes, collection of MAGs).

- Feasibility Gate: Based on benchmarks, determine if goals are achievable within typical sample, sequencing, and compute constraints for the field.

Assessing Sample Complexity and Sequencing Depth

Sample complexity is the primary driver of required sequencing effort and computational challenge.

Table 2: Sample Complexity Estimators and Their Interpretation

| Complexity Factor | Low Complexity (e.g., Bioreactor) | Medium Complexity (e.g., Human Gut) | High Complexity (e.g., Forest Soil) |
|---|---|---|---|
| Estimated Species Richness | 10s - 100s | 100s - 1,000s | 10,000s - 1,000,000s |
| Evenness | High (few dominant species) | Moderate | Very Low (long tail of rare species) |
| Read Saturation Curve | Plateaus quickly | Plateaus gradually | Does not plateau at typical depths |
| Recommended Min. Sequencing Depth (Shotgun) | 10-20 Million reads | 40-60 Million reads | 100+ Million reads (often impractical) |
| Dominant Assembly Challenge | Separating closely related strains | General mixture complexity | Overwhelming diversity, high fragmentation |

Protocol 3.1: In Silico Pre-Sequencing Complexity Estimation

- Pilot Sequencing: If resources allow, sequence 1-2 representative samples at moderate depth (e.g., 20 M reads).

- Read-Based Analysis: Perform assembly-free, read-based profiling using Kraken2/Bracken or MetaPhlAn on the pilot data.

- Rarefaction Analysis: Use the pilot data to generate rarefaction curves for species or genes (e.g., with Nonpareil or by subsampling reads).

- Depth Projection: Extrapolate the curve to estimate the depth required to observe 80%, 90%, or 95% of the detectable diversity. This informs final sequencing decisions.

Title: Preliminary Sample Complexity Assessment Workflow

Compute Resource Provisioning for Assembly Workflows

The computational demand of metagenomic assembly is substantial and non-linear with data size.

Table 3: Compute Resource Estimates for Common Assembly Scenarios (Current Benchmarks)

| Scenario (Illumina Data) | Approx. Input Data | metaSPAdes (Typical Requirements) | MEGAHIT (Typical Requirements) | Recommended System Profile |
|---|---|---|---|---|
| Low complexity, ~20M PE reads (10 Gb) | 20 GB FASTQ | RAM: 150-200 GB; Time: 4-8 CPU-hours; Disk: 80-100 GB | RAM: 50-80 GB; Time: 2-4 CPU-hours; Disk: 40-60 GB | High-memory server (256 GB RAM) or small cloud instance. |
| Medium complexity, ~60M PE reads (30 Gb) | 60 GB FASTQ | RAM: 350-500 GB; Time: 20-30 CPU-hours; Disk: 200-300 GB | RAM: 120-180 GB; Time: 10-15 CPU-hours; Disk: 100-150 GB | Large-memory cloud instance or HPC node (512 GB-1 TB RAM). |
| High complexity, ~100M+ PE reads (50 Gb+) | 100+ GB FASTQ | RAM: 750 GB+; Time: 50+ CPU-hours; Disk: 500 GB+ | RAM: 250-350 GB; Time: 20-30 CPU-hours; Disk: 200-300 GB | Very large cloud instance or dedicated HPC node (1 TB+ RAM); essential for metaSPAdes. |

Protocol 4.1: Iterative Compute Benchmarking for Large Projects

- Subsampling Test: Take a random subset (e.g., 10%, 25%, 50%) of reads from one sample using seqtk sample, with the same random seed (-s) for the R1 and R2 files so that read pairs stay matched.

- Benchmark Run: Run both metaSPAdes and MEGAHIT on each subset, monitoring peak RAM usage (/usr/bin/time -v), wall-clock time, and disk I/O.

- Resource Projection: Plot resource usage against subset size. Fit a model (often linear or slightly polynomial) to extrapolate to 100% of the data.

- Scaling Decision: Based on projections and available infrastructure, decide to: a) Use MEGAHIT for lower memory footprint, b) Secure larger resources for metaSPAdes, or c) Employ a hybrid/multi-kmer strategy.
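The measurement and projection steps of this protocol can be sketched in a few lines of Python. This example assumes each benchmark run was wrapped in /usr/bin/time -v, so its log contains GNU time's "Maximum resident set size (kbytes)" line. The function names are hypothetical, and a straight-line fit is only appropriate if the plotted points actually look roughly linear.

```python
import re

# GNU time -v prints e.g. "\tMaximum resident set size (kbytes): 1048576"
RSS_PATTERN = re.compile(r"Maximum resident set size \(kbytes\):\s*(\d+)")


def peak_rss_gib(time_log):
    """Peak resident memory in GiB, parsed from a /usr/bin/time -v log."""
    match = RSS_PATTERN.search(time_log)
    if match is None:
        raise ValueError("no 'Maximum resident set size' line in log")
    return int(match.group(1)) / 1024 ** 2  # kbytes -> GiB


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def project_to_full(fractions, usage, full=1.0):
    """Extrapolate a resource (RAM, hours, disk) measured on subsets to the full dataset."""
    slope, intercept = fit_line(fractions, usage)
    return slope * full + intercept
```

For example, with hypothetical peak RAM values of 50, 95, and 170 GiB on the 10%, 25%, and 50% subsets, project_to_full([0.10, 0.25, 0.50], [50, 95, 170]) extrapolates to 320 GiB for the full sample. That result would point toward option (a) or (b) in the scaling decision, depending on available nodes.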

Title: Iterative Compute Benchmarking Protocol

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 4: Key Reagents, Materials, and Software for Preliminary Phase

| Item Name | Category | Function/Benefit | Example/Note |
|---|---|---|---|
| ZymoBIOMICS DNA/RNA Miniprep Kit | Wet-Lab Reagent | Reliable co-extraction of DNA and RNA from diverse, complex samples; includes inhibitor removal. | Standard for human gut, soil, and water metagenomes. |
| PBS or TE Buffer | Wet-Lab Reagent | Optimal media for sample storage and homogenization to prevent degradation. | Use nuclease-free, pH-stable buffers. |
| FastQC / MultiQC | Software Tool | Initial quality assessment of raw sequencing reads; identifies adapter contamination and low-quality bases. | Critical before any computational planning. |
| KneadData (Trimmomatic/Bowtie2) | Software Tool | Performs quality trimming and decontamination (e.g., host read removal). | Reduces dataset size and improves assembly specificity. |
| Nonpareil | Software Tool | Estimates required sequencing depth and project coverage from a subsample. | Core tool for Protocol 3.1. |
| MetaPhlAn4 / Kraken2 | Software Tool | Provides a rapid, read-based taxonomic profile to gauge complexity pre-assembly. | Informs decisions about assembly necessity and strategy. |
| Google Cloud Platform / AWS EC2 | Compute Resource | On-demand, scalable virtual machines. Essential for running memory-intensive metaSPAdes. | Use memory-optimized instances (e.g., n2d-highmem). |
| Slurm / SGE | Compute Resource | Job scheduler for High-Performance Computing (HPC) clusters. Manages large batch jobs. | Standard for academic research computing centers. |
| Seqtk | Software Tool | Lightweight toolkit for FASTA/Q file manipulation; used for subsampling in benchmarking. | Enables Protocol 4.1. |
| GNU Time (/usr/bin/time -v) | Software Tool | Precisely measures peak memory and CPU usage of any command-line process. | Essential for accurate resource profiling. |

Step-by-Step Assembly Pipelines: From Raw Reads to Metagenome-Assembled Genomes (MAGs)

In a comprehensive thesis focused on metagenomic assembly workflows employing SPAdes, metaSPAdes, and MEGAHIT, the pre-assembly phase is critical. The quality and uniformity of input sequencing reads directly dictate assembly continuity, accuracy, and the biological relevance of reconstructed genomes and community profiles. This document details the essential application notes and protocols for read Quality Control (QC) and Normalization, which are mandatory precursors to optimal assembly performance with the aforementioned tools.

Quality Control: Application Notes & Protocols

Raw metagenomic sequencing data (typically from Illumina platforms) contains artifacts that hinder assembly: adapter sequences, low-quality bases, and short fragments. Uncorrected, these lead to fragmented assemblies, misassemblies, and wasted computational resources.

FastQC: Quality Assessment Protocol

Objective: Generate a comprehensive visual report on read quality metrics to inform trimming parameters. Protocol:

- Input: Uncompressed or gzipped FASTQ files (sample_R1.fastq.gz, sample_R2.fastq.gz).

- Command:

- Output Interpretation: Examine the HTML report. Key modules:

- Per Base Sequence Quality: Identify positions where the median quality drops below Q20 (the boundary between the orange and red background zones of the plot).

- Adapter Content: Quantify adapter contamination.

- Sequence Length Distribution: Confirm uniform read length.

- Decision Point: Use this report to set the Trimmomatic parameters (e.g., LEADING, TRAILING, SLIDINGWINDOW, MINLEN).
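The per-base quality check can also be reproduced in a script, which is handy when screening many samples. This minimal Python sketch assumes Phred+33 encoding (standard for current Illumina data) and plain four-line FASTQ records. It computes the median quality at each read position and reports the first position below Q20. The function names are hypothetical. Because the sketch keeps every quality value in memory, run it on a subsample of reads rather than a full file.

```python
import gzip
from statistics import median


def read_qualities(path):
    """Yield the quality line of each 4-line FASTQ record (gzip-aware)."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rt") as handle:
        for line_number, line in enumerate(handle):
            if line_number % 4 == 3:  # the 4th line of each record holds the qualities
                yield line.rstrip("\n")


def per_position_median_quality(quality_strings, offset=33):
    """Median Phred score at each read position (index 0 = first base)."""
    columns = []
    for qual in quality_strings:
        for pos, char in enumerate(qual):
            if pos == len(columns):
                columns.append([])
            columns[pos].append(ord(char) - offset)
    return [median(scores) for scores in columns]


def first_position_below(medians, threshold=20):
    """1-based position of the first median below the threshold, or None."""
    for pos, score in enumerate(medians, start=1):
        if score < threshold:
            return pos
    return None
```

Usage: `first_position_below(per_position_median_quality(read_qualities("sample_R1.fastq.gz")))`. A position near the 3' end suggests a moderate TRAILING/SLIDINGWINDOW setting, while an early drop argues for stricter trimming.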

Trimmomatic: Read Trimming & Filtering Protocol

Objective: Programmatically remove adapters, low-quality bases, and short reads. Protocol:

- Input: Paired-end FASTQ files.

- Command for Paired-End Data:

- Parameter Explanation:

- ILLUMINACLIP: Remove adapters (<fastaWithAdapters>:<seed mismatches>:<palindrome clip threshold>:<simple clip threshold>:<minAdapterLength>:<keepBothReads>).

- LEADING/TRAILING: Remove low-quality bases from the start/end of the read.

- SLIDINGWINDOW: Scan the read with a 4-base window and cut once the average quality within the window falls below 20.

- MINLEN: Discard reads shorter than 50 bp.

- Output: Four files: two *_paired files (clean pairs for assembly) and two *_unpaired files (reads whose mate was discarded).
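The LEADING, TRAILING, SLIDINGWINDOW, and MINLEN steps can be illustrated on a single read's Phred scores. This is a simplified Python sketch, not Trimmomatic's implementation: adapter clipping is omitted, and Trimmomatic's exact cut point inside a failing window may differ. The function name and default values simply mirror the parameters discussed above.

```python
def trim_read(quals, leading=3, trailing=3, window=4, required=20, minlen=50):
    """Return the (start, end) slice of retained bases, or None if the read is dropped.

    quals: list of Phred scores for one read.
    """
    start, end = 0, len(quals)
    # LEADING: drop bases from the 5' end while quality < leading.
    while start < end and quals[start] < leading:
        start += 1
    # TRAILING: drop bases from the 3' end while quality < trailing.
    while end > start and quals[end - 1] < trailing:
        end -= 1
    # SLIDINGWINDOW: scan 5' -> 3' and cut at the first window whose mean < required.
    for i in range(start, end - window + 1):
        if sum(quals[i:i + window]) / window < required:
            end = i
            break
    # MINLEN: discard the read if too little sequence remains.
    if end - start < minlen:
        return None
    return start, end


# 60 good bases followed by a low-quality 3' tail.
print(trim_read([30] * 60 + [10] * 10))  # prints (0, 59)
```

Cutting at the start of the first failing window is the conservative choice here. It can remove one or two good bases that Trimmomatic might keep, which is acceptable for an illustration.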

Table 1: Recommended Trimmomatic Parameters for Metagenomic Assembly

| Parameter | Typical Setting | Rationale for Metagenomics |
|---|---|---|
| LEADING | 3-20 | Remove initial low-quality bases; stricter (20) for complex communities. |
| TRAILING | 3-20 | Remove terminal low-quality bases. |
| SLIDINGWINDOW | 4:15-4:20 | Balance between quality retention and filtering; 4:20 is stringent. |
| MINLEN | 50-100 | Removes fragments too short for assembly k-mers. Crucial for MEGAHIT. |
| AVGQUAL | 15-20 | (Optional) Discard the entire read if its average quality is below the threshold. |

Read Normalization: Application Notes & Protocols

Normalization reduces read redundancy by down-sampling high-coverage regions to a defined limit. This decreases dataset size, computational memory/time for assembly, and mitigates bias from dominant taxa without significant loss of assembly completeness.

BBNorm (part of BBTools) Normalization Protocol

Objective: Uniformly normalize read coverage to improve assembly efficiency of SPAdes/metaSPAdes/MEGAHIT. Protocol:

- Input: Quality-trimmed, paired-end FASTQ files.

- Command for In-Silico Normalization:

- Parameter Explanation:

- target=100: Aim for ~100x coverage after normalization.

- min=5: Discard reads from regions with original coverage <5x (likely errors).

- Output: Normalized paired-end files ready for assembly. If the hist= option is set, a histogram file summarizes the input k-mer depth distribution.
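The idea behind coverage normalization can be shown with a toy, two-pass digital-normalization sketch in Python. It is a simplified stand-in, not BBNorm's implementation. It counts exact (non-canonical) k-mers in memory and uses each read's median k-mer count as its depth. Reads below min_depth are dropped, and reads above target are kept at random with probability target/depth. Read pairs are not handled here; a real pipeline must keep or drop both mates together.

```python
import random
from collections import Counter
from statistics import median


def kmers(seq, k):
    """All overlapping k-mers of seq (empty if the read is shorter than k)."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def normalize(reads, k=21, target=100, min_depth=5, seed=0):
    """Toy median-k-mer normalization; returns the reads that are kept."""
    # Pass 1: count every k-mer across the whole dataset.
    counts = Counter(km for read in reads for km in kmers(read, k))
    rng = random.Random(seed)  # seeded so runs are reproducible
    kept = []
    # Pass 2: estimate each read's depth and decide whether to keep it.
    for read in reads:
        read_kmers = kmers(read, k)
        if not read_kmers:
            continue  # too short to estimate depth
        depth = median([counts[km] for km in read_kmers])
        if depth < min_depth:
            continue  # likely error-derived or too rare (cf. min=5)
        if depth <= target or rng.random() < target / depth:
            kept.append(read)  # only high-depth reads are down-sampled (cf. target=100)
    return kept
```

In this sketch a read from a 300x region survives with probability 1/3. A read from a 10x region is always kept, and one from a 2x region is always discarded. This mirrors how normalization shrinks the data from dominant taxa while sparing mid-abundance ones.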

Table 2: Impact of Normalization on Assembly Workflow Performance

| Metric | Without Normalization | With Normalization (target=100) | Benefit |
|---|---|---|---|
| Input Data Volume | 100% (e.g., 50 GB) | 10-30% of original | Faster I/O, lower RAM. |
| SPAdes/metaSPAdes RAM Usage | Very high | Reduced by ~30-50% | Enables larger assemblies. |
| MEGAHIT Runtime | Baseline | 2-5x faster | Improved throughput. |
| Contig N50/L50 | May be lower due to memory limits | Often improved or maintained | Better assembly continuity. |
| Genome Recovery | Complete | Nearly complete (>95%) | Minimal biological loss. |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Pre-Assembly Processing

| Item | Function & Relevance |
|---|---|
| Illumina Sequencing Kits (e.g., NovaSeq 6000, MiSeq Reagents) | Source of raw metagenomic reads. Kit version determines adapter sequences for trimming. |
| Trimmomatic Adapter FASTA Files (TruSeq2-PE.fa, TruSeq3-PE.fa, NexteraPE-PE.fa) | Contain adapter sequences for the ILLUMINACLIP step. Must match the sequencing library prep. |
| BBNorm (BBTools Suite) | Primary tool for in-silico read normalization. Efficient for large metagenomes. |
| FastQC | Standard for initial and post-trimming quality assessment. |
| MultiQC | Aggregates FastQC/Trimmomatic logs into a single report for multiple samples. |
| High-Performance Computing (HPC) Cluster | Essential for processing large, complex metagenomes through these CPU/memory-intensive steps. |

Visualized Workflows

Pre-Assembly Data Processing Pipeline

Core Functions of FastQC, Trimmomatic, and BBNorm

Command-Line Workflow for SPAdes on Single Genomes (Baseline)


This protocol details a standard command-line workflow for assembling single bacterial genomes using the SPAdes assembler (v3.15.5 as of late 2023). Within the broader thesis context, this forms the foundational baseline for comparing and contrasting the performance of SPAdes with metaSPAdes and MEGAHIT on complex metagenomic datasets. Proficiency in this single-genome workflow is essential for understanding the core algorithmic principles before applying more specialized metagenomic assemblers.

Application Notes

- Purpose & Positioning: The SPAdes (St. Petersburg genome assembler) algorithm is designed for assembling small to medium-sized, single-cell, and standard multi-cell bacterial genomes from Illumina paired-end, mate-pair, and single-read data. Its use of a multi-sized de Bruijn graph approach makes it highly accurate for isolate genomes, serving as a performance benchmark within the meta-omics workflow thesis.

- Key Considerations: SPAdes is memory-intensive and is designed for small genomes. For large genomes (>100 Mbp), consider alternative assemblers; the --meta flag is intended for metagenomic data, not for large isolate genomes. The quality of input reads is paramount; strict read trimming and correction are recommended pre-steps.

- Expected Outcomes: Contigs and scaffolds in FASTA format (contigs.fasta, scaffolds.fasta), assembly graphs (assembly_graph.fastg, assembly_graph_with_scaffolds.gfa), and a log file (spades.log) that includes the estimated insert size of each paired-end library.
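The benefit of a multi-sized de Bruijn graph can be illustrated with a toy graph builder. This Python sketch is not SPAdes' algorithm. It only shows that a short repeat creates a branching node at a small k but is resolved at a larger k, which is the motivation for combining several k values. Nodes are (k-1)-mers and edges are k-mers. Reverse complements are ignored, and the sequence is made up.

```python
from collections import defaultdict


def de_bruijn_graph(reads, k):
    """Toy de Bruijn graph: (k-1)-mer node -> set of successor (k-1)-mers."""
    graph = defaultdict(set)
    for read in reads:
        for i in range(len(read) - k + 1):
            kmer = read[i:i + k]
            graph[kmer[:-1]].add(kmer[1:])  # each k-mer is an edge between its prefix and suffix
    return graph


def branching_nodes(graph):
    """Nodes with more than one successor, i.e. ambiguous paths for the assembler."""
    return sorted(node for node, successors in graph.items() if len(successors) > 1)


genome = "TTACGTCCGGACGTAA"  # made-up sequence containing the repeat ACGT twice
for k in (4, 6):
    print(k, branching_nodes(de_bruijn_graph([genome], k)))
# prints:
# 4 ['CGT']
# 6 []
```

Small k values keep low-coverage regions connected but collapse repeats. Large k values span repeats but fragment where coverage is thin. Iterating over several k values combines both strengths.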

Experimental Protocol: SPAdes Assembly of a Bacterial Isolate

Sample & Data Preparation

- Source: Genomic DNA extracted from a bacterial pure culture.

- Sequencing: Illumina NovaSeq 6000, 2x150 bp paired-end library with ~350 bp insert size.

- Data: Demultiplexed raw reads in FASTQ format (sample_R1.fastq.gz, sample_R2.fastq.gz).

Quality Control and Read Correction

- Tool: Fastp v0.23.2.

Command:

Output: Trimmed, adapter-removed, and error-corrected read pairs.

Genome Assembly with SPAdes

- Tool: SPAdes v3.15.5.

Core Command:

Critical Parameters Explained:

-1, -2: Input trimmed read files.

--isolate: Optimizes the assembly for high-coverage isolate data; not compatible with --careful.

--cov-cutoff auto: Automatically removes low-coverage outliers.

-t: Number of computational threads.

-m: Memory limit in GB.

Post-Assembly Quality Assessment

- Tool: QUAST v5.2.0.

Command:

Output: Comprehensive report (report.html, report.txt) detailing contig counts, N50, L50, total assembly length, and GC content.
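The three tools in this protocol can be chained from a small Python driver. This sketch only builds argument lists and then runs them in order. The options used (fastp -i/-I/-o/-O, --detect_adapter_for_pe, --correction, -w, -h, -j; spades.py --isolate, --cov-cutoff, -t, -m, -o; quast.py -o) are standard CLI options of these tools. The directory layout, thread count, and memory value are hypothetical placeholders to adapt to your system.

```python
import os
import shlex
import subprocess


def isolate_pipeline(r1, r2, outdir="isolate_run", threads=16, mem_gb=64):
    """Build fastp -> SPAdes (--isolate) -> QUAST commands as argument lists."""
    t1 = os.path.join(outdir, "trimmed_R1.fastq.gz")
    t2 = os.path.join(outdir, "trimmed_R2.fastq.gz")
    fastp = [
        "fastp", "-i", r1, "-I", r2, "-o", t1, "-O", t2,
        "--detect_adapter_for_pe",  # adapter auto-detection for paired-end data
        "--correction",             # overlap-based base correction of read pairs
        "-w", str(threads),
        "-h", os.path.join(outdir, "fastp.html"),
        "-j", os.path.join(outdir, "fastp.json"),
    ]
    spades = [
        "spades.py", "-1", t1, "-2", t2,
        "--isolate", "--cov-cutoff", "auto",
        "-t", str(threads), "-m", str(mem_gb),
        "-o", os.path.join(outdir, "spades"),
    ]
    quast = [
        "quast.py", os.path.join(outdir, "spades", "scaffolds.fasta"),
        "-o", os.path.join(outdir, "quast"),
    ]
    return [fastp, spades, quast]


def run_pipeline(commands, outdir):
    """Run each command in order, stopping at the first failure."""
    os.makedirs(outdir, exist_ok=True)  # fastp writes its outputs here
    for cmd in commands:
        print("+", shlex.join(cmd))
        subprocess.run(cmd, check=True)
```

On a machine with all three tools on PATH, `run_pipeline(isolate_pipeline("sample_R1.fastq.gz", "sample_R2.fastq.gz"), "isolate_run")` runs the protocol end to end. Keeping commands as lists, rather than shell strings, avoids quoting problems with unusual file names.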

Data Presentation

Table 1: Comparative Assembly Metrics for E. coli K-12 Substr. MG1655 (Simulated 100x Coverage)

| Assembler | Version | # Contigs (≥500 bp) | Largest Contig (bp) | Total Length (bp) | N50 (bp) | L50 | % Reference Coverage |
|---|---|---|---|---|---|---|---|
| SPAdes | 3.15.5 | 72 | 281,136 | 4,641,658 | 137,147 | 11 | 99.8 |
| metaSPAdes | 3.15.5 | 85 | 254,988 | 4,639,212 | 124,876 | 12 | 99.7 |
| MEGAHIT | 1.2.9 | 102 | 217,455 | 4,635,901 | 98,322 | 15 | 99.5 |

Data sourced from recent benchmark studies (2023). SPAdes (isolate mode) provides the best contiguity for single genomes.

Table 2: Recommended SPAdes Parameters for Single-Genome Workflows

| Parameter | Typical Value | Function |
|---|---|---|
| -k | 21,33,55,77,99,127 | K-mer sizes (auto-selected if unspecified). |
| --cov-cutoff | auto | Removes erroneous low-coverage graph edges. |
| --isolate | N/A (flag) | Assumes a uniform, high-coverage dataset. |
| --careful | N/A (flag) | Runs MismatchCorrector to reduce mismatches/indels; not compatible with --isolate. |
| -m | 64-128 | RAM (GB) to use. Critical for large genomes. |
| -t | 8-16 | CPU threads for parallel computation. |

Mandatory Visualizations

Diagram Title: SPAdes Single-Genome Assembly Pipeline

Diagram Title: Assembler Roles in Metagenomics Thesis

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools & Resources for SPAdes Workflow

| Item | Function / Description | Source / Example |
|---|---|---|
| SPAdes Assembler | Core de Bruijn graph assembler for single-cell and isolate genomes. | https://github.com/ablab/spades |
| Fastp | Ultra-fast all-in-one FASTQ preprocessor for adapter trimming, quality filtering, and read correction. | https://github.com/OpenGene/fastp |
| QUAST | Quality Assessment Tool for evaluating and comparing genome assemblies. | https://github.com/ablab/quast |
| High-Performance Computing (HPC) Cluster | Essential for running memory-intensive assemblies (≥64 GB RAM recommended). | Local university HPC, AWS EC2 (r6i instances), Google Cloud. |
| Conda/Bioconda | Package manager for reproducible installation of bioinformatics software and dependencies. | https://bioconda.github.io/ |
| CheckM / BUSCO | For post-assembly evaluation of genome completeness and contamination (post-QUAST). | Used in downstream thesis analyses. |
| Illumina Sequencing Reagents | NovaSeq 6000 v1.5 reagent kits for generating standard 2x150 bp paired-end reads. | Illumina, Inc. (Catalog # 20028315) |

Within the broader thesis on SPAdes, metaSPAdes, and MEGAHIT assembly workflows for metagenomics research, this protocol focuses specifically on the implementation of metaSPAdes. metaSPAdes is a specialized assembler designed for metagenomic datasets, addressing challenges such as uneven sequencing depth and the presence of multiple, closely related genomes. This guide details optimal parameters and integrates the concept of co-binning to enhance genome recovery from complex microbial communities, which is critical for researchers and drug development professionals seeking to identify novel biosynthetic gene clusters or microbial targets.

Key Parameters for Metagenomic Datasets

Optimal parameter selection is crucial for balancing assembly continuity, accuracy, and computational resources. The following table summarizes the core and advanced parameters for metaSPAdes, based on current recommendations and the software's design for metagenomic data.

Table 1: Core and Advanced Parameters for metaSPAdes Assembly

| Parameter Flag | Default Value | Recommended Range for Metagenomes | Function & Rationale |
|---|---|---|---|
| -k | 21,33,55 | 21,33,55,77,99,127 (auto-selected) | K-mer sizes. A broader, odd-numbered range helps capture varying genomic complexities and abundances. |
| --only-assembler | Not set | Set only when reads are already error-corrected | Skips read error correction; use only if processing pre-corrected reads. |
| -m | 250 GB | 100-500+ GB | Memory limit in GB. Must be high for complex metagenomes to hold the de Bruijn graph. |
| -t | 16 | 16-64 | Number of computational threads. Scales with server capacity. |
| --tmp-dir | Inside the output directory | Specify a fast SSD path | Directory for temporary files. Critical for I/O performance on large datasets. |
| -o | None (required) | User-defined path | Path to store all output files, including contigs and scaffolds. |
| --meta | Not set in SPAdes | Always set (or run metaspades.py) | Crucial. Enables the metaSPAdes algorithm for metagenomic data. |
| --phred-offset | Auto-detected | 33 or auto | Quality score offset (33 for modern Illumina). Auto-detection is generally safe. |

Protocol: Standard metaSPAdes Assembly Workflow

Materials and Pre-assembly Preparation

- Input Data: Paired-end Illumina reads in FASTQ format (.fq or .fastq).

- Quality Control: Use FastQC v0.12.1+ for initial quality assessment. Trim adapters and low-quality bases using Trimmomatic v0.39 or fastp v0.23.4.

- Computational Resources: A high-memory (RAM) server or cluster node. For a 50-100 Gbases dataset, ≥500 GB RAM and 32+ CPU cores are recommended.

Step-by-Step Protocol

- Activate Environment: Ensure metaSPAdes (v3.15.5+) is installed, typically via conda (conda activate spades).

- Navigate to Output Directory: cd /path/to/your/project

- Execute metaSPAdes Command:

- Explanation: This command runs the metaSPAdes pipeline with 32 threads, a 500 GB memory limit, a specified temporary directory, and the essential --meta flag.

- Monitor Output: Key output files include:

- contigs.fasta: Final assembled contigs.

- scaffolds.fasta: Final scaffolds (preferred for downstream analysis).

- assembly_graph_with_scaffolds.gfa: Assembly graph in GFA format, used by graph-aware bin refinement and visualization tools.

- Assembly Evaluation: Assess quality using QUAST v5.2.0 (quast.py scaffolds.fasta -o quast_report). After binning, use CheckM2 to estimate the completeness and contamination of each MAG; CheckM2 does not require reference genomes.
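The assembly command described in this protocol (32 threads, a 500 GB limit, a temporary directory, --meta) can be built programmatically. The Python helper below is a hedged sketch: -1/-2, -t, -m, --tmp-dir, and -o are real SPAdes options, but the paths are hypothetical placeholders. The helper also checks that exactly one paired-end library is supplied, because metaSPAdes is documented to accept only a single short-read paired-end library.

```python
import os


def metaspades_cmd(r1_files, r2_files, outdir, tmp_dir, threads=32, mem_gb=500):
    """Argument list for `spades.py --meta` (equivalently `metaspades.py`)."""
    if len(r1_files) != 1 or len(r2_files) != 1:
        # metaSPAdes accepts a single paired-end short-read library;
        # lanes of the same library should be concatenated into one R1/R2 pair first.
        raise ValueError("metaSPAdes expects exactly one paired-end library")
    return [
        "spades.py", "--meta",
        "-1", r1_files[0], "-2", r2_files[0],
        "-t", str(threads),
        "-m", str(mem_gb),       # memory limit in GB
        "--tmp-dir", tmp_dir,    # ideally on fast local SSD
        "-o", outdir,
    ]


def key_outputs(outdir):
    """Paths of the main metaSPAdes outputs used downstream."""
    names = ["contigs.fasta", "scaffolds.fasta", "assembly_graph_with_scaffolds.gfa"]
    return {name: os.path.join(outdir, name) for name in names}
```

Pass the resulting list to subprocess.run(..., check=True) inside a scheduler job script. Use key_outputs(outdir) to locate the files for QUAST and for the binning steps that follow.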

Protocol: Co-binning with the metaSPAdes Assembly Graph

Co-binning improves metagenome-assembled genome (MAG) recovery by combining information from multiple binning algorithms; the assembly graph can additionally inform graph-aware refinement of the resulting bins.

Principle

Individual binners (e.g., MetaBAT2, MaxBin2, CONCOCT) use different features (sequence composition, abundance). A consensus of their results, optionally refined using the graph's connectivity, typically yields better bins than any single binner.

Detailed Co-binning Protocol

Inputs: scaffolds.fasta and assembly_graph_with_scaffolds.gfa from metaSPAdes; quality-filtered reads.

Tools Required: MetaBAT2, MaxBin2, CONCOCT, DAS_Tool.

Generate Abundance Profiles: Map reads back to scaffolds to create depth-of-coverage files.

Run Multiple Binners:

- MetaBAT2: jgi_summarize_bam_contig_depths --outputDepth depth.txt mapped.sorted.bam, then metabat2 -i scaffolds.fasta -a depth.txt -o metabat2_bins/bin

- MaxBin2: run_MaxBin.pl -contig scaffolds.fasta -abund maxbin_abund.txt -out maxbin2_bins/bin, where maxbin_abund.txt is a two-column (contig name, average depth) file derived from depth.txt.

- CONCOCT: Requires contig segmentation first (cut_up_fasta.py, etc.).

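Execute Co-binning with DAS_Tool: DAS_Tool takes a contig-to-bin table from each binner, scores the candidate bins using single-copy marker genes, and selects a non-redundant, high-scoring set of bins.

Output: A refined, non-redundant set of MAGs in das_tool_output_DASTool_bins/. Evaluate with CheckM2.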
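jgi_summarize_bam_contig_depths writes a multi-column table: contig name, length, total average depth, and then per-BAM columns. MaxBin2's -abund input is therefore usually prepared by extracting just the contig name and the total average depth. This minimal Python sketch performs that conversion. It assumes the standard contigName and totalAvgDepth header labels, and the file names are hypothetical.

```python
def depth_rows_to_abundance(lines):
    """Convert jgi depth-table lines into 'contig<TAB>depth' lines for MaxBin2."""
    rows = (line.rstrip("\n").split("\t") for line in lines if line.strip())
    header = next(rows)
    name_col = header.index("contigName")      # locate columns by name, not position
    depth_col = header.index("totalAvgDepth")
    return [f"{row[name_col]}\t{row[depth_col]}" for row in rows]


def convert_depth_file(depth_path="depth.txt", abund_path="maxbin_abund.txt"):
    """Write the MaxBin2 abundance file and return the number of contigs written."""
    with open(depth_path) as src:
        abundance_lines = depth_rows_to_abundance(src)
    with open(abund_path, "w") as dst:
        dst.write("\n".join(abundance_lines) + "\n")
    return len(abundance_lines)
```

Looking columns up by header name keeps the conversion correct even when depth.txt was generated from several BAM files, which adds extra per-sample columns.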

Visual Workflow

Diagram Title: metaSPAdes and Co-binning Workflow for Metagenomics

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions & Computational Tools

| Item | Category | Function & Rationale |
|---|---|---|
| Illumina Sequencing Kits (e.g., NovaSeq 6000) | Wet-lab Reagent | Generates the high-throughput, short-read paired-end data required for metagenomic assembly. |
| SPAdes/metaSPAdes Suite (v3.15.5+) | Software | Core assembler optimized for single-cell and metagenomic data. The --meta flag is essential. |
| Trimmomatic / fastp | Software | Performs critical pre-processing: removes adapters and low-quality bases to improve assembly accuracy. |
| Bowtie2 / SAMtools | Software | Maps reads back to assembled scaffolds to generate coverage profiles, essential for binning. |
| MetaBAT2, MaxBin2, CONCOCT | Software | Individual binning algorithms that use sequence composition and abundance to group contigs into genomes. |
| DAS_Tool | Software | Co-binning tool that scores bins from multiple binners with single-copy marker genes and selects a non-redundant set. |
| CheckM2 | Software | Rapidly assesses the completeness and contamination of recovered MAGs, crucial for quality control. |
| High-Performance Compute Cluster | Infrastructure | Provides the necessary RAM (≥500 GB) and CPU cores (≥32) to run memory-intensive assembly and binning steps. |
| Fast Solid-State Drive (SSD) | Infrastructure | Used for the --tmp-dir parameter; improves I/O performance for the temporary files written during read error correction. |

Application Notes: Parameter Optimization in Complex Metagenomes

Within the broader thesis workflow for metagenomic assembly—which critically evaluates SPAdes, metaSPAdes, and MEGAHIT—MEGAHIT stands out for its efficiency and scalability with large, diverse datasets. Its performance is highly tunable via two pivotal parameters: --k-list and --min-count. These parameters directly address the challenges of uneven sequencing depth and vast microbial diversity.

The Role of --k-list:

This parameter defines the progression of k-mer sizes used during the iterative de Bruijn graph construction. A wider range and finer gradation of k-mers can improve contiguity for genomes with varying abundances and GC content.
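MEGAHIT constrains the values accepted by --k-list. Its help text states that all k must be odd, lie in the range 15-255, and increase by at most 28 between steps. The Python sketch below checks a candidate list against those rules and expands a --k-min/--k-max/--k-step triple into an explicit list. The expansion assumes that k-max is appended when the step does not land on it exactly; confirm this against the k values reported in MEGAHIT's log for your version. The strictly-increasing check is an extra sanity test added here.

```python
def k_list_problems(ks):
    """Return human-readable problems with a MEGAHIT-style k-mer list (empty = OK)."""
    ks = list(ks)
    problems = []
    if ks != sorted(set(ks)):
        problems.append("k values must be strictly increasing")  # sanity check added here
    for k in ks:
        if k % 2 == 0:
            problems.append(f"k={k} is even (must be odd)")
        if not 15 <= k <= 255:
            problems.append(f"k={k} is outside 15-255")
    for prev, nxt in zip(ks, ks[1:]):
        if nxt - prev > 28:
            problems.append(f"step {prev}->{nxt} exceeds 28")
    return problems


def stepped_k_list(k_min, k_max, k_step):
    """Expand --k-min/--k-max/--k-step into an explicit list (assumed behaviour)."""
    ks = list(range(k_min, k_max + 1, k_step))
    if ks[-1] != k_max:
        ks.append(k_max)  # assumption: k_max is always included as the final k
    return ks


extended = [21, 29, 39, 49, 59, 69, 79, 89, 99, 109, 119, 127]
print(k_list_problems(extended))      # prints []
print(stepped_k_list(21, 127, 10))
```

Validating a k-list before submitting a long cluster job is cheaper than discovering a rejected parameter after the job has waited in the queue.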

The Role of --min-count:

This filter removes low-frequency k-mers from the initial graph, primarily mitigating the impact of sequencing errors. In metagenomics, it also acts as a coarse abundance filter, shaping which organisms' signals are incorporated into the assembly graph.
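MEGAHIT's help text describes --min-count as the minimum multiplicity for filtering (k_min+1)-mers. The effect can be sketched in Python by counting canonical k-mers, where a k-mer and its reverse complement are counted together, and keeping only those seen at least min_count times. This is a toy illustration on a tiny made-up read set, not MEGAHIT's succinct-graph implementation.

```python
from collections import Counter

COMPLEMENT = str.maketrans("ACGT", "TGCA")


def canonical(kmer):
    """Lexicographically smaller of a k-mer and its reverse complement."""
    return min(kmer, kmer.translate(COMPLEMENT)[::-1])


def count_kmers(reads, k):
    """Count canonical k-mers across all reads."""
    counts = Counter()
    for read in reads:
        for i in range(len(read) - k + 1):
            counts[canonical(read[i:i + k])] += 1
    return counts


def solid_kmers(counts, min_count=2):
    """K-mers retained by a --min-count style multiplicity filter."""
    return {kmer for kmer, n in counts.items() if n >= min_count}


reads = ["ACGTAC", "ACGTAC", "ACGTTC"]  # the third read differs by one base
counts = count_kmers(reads, 5)
print(sorted(solid_kmers(counts, 2)))   # prints ['ACGTA', 'CGTAC']
print(len(solid_kmers(counts, 1)))      # prints 4
```

With min_count=1 every k-mer survives, including singletons that may stem from a single sequencing error. Raising the threshold removes them, along with genuine k-mers from taxa sequenced at very low depth.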

Recent benchmarking studies (2023-2024) indicate that the default parameters of MEGAHIT are optimized for general use but are suboptimal for highly complex communities (e.g., soil, sediment) or for prioritizing rare biosphere members. The following table summarizes quantitative findings on parameter impact.

Table 1: Impact of MEGAHIT Parameters on Assembly Metrics for Diverse Communities

| Parameter & Tested Value | N50 (bp) | Total Assembly Size (Mbp) | # of Contigs ≥ 1 kbp | Representative Use-Case / Effect |
|---|---|---|---|---|
| Default (--k-list 21,29,39,59,79,99,119,141, --min-count 2) | 5,120 - 7,890 | 145 - 180 | 25,000 - 40,000 | Balanced approach for moderate-complexity samples (e.g., human gut). |
| Extended k-list (--k-list 21,29,39,49,59,69,79,89,99,109,119,127) | 6,850 - 9,230 | 155 - 195 | 22,000 - 35,000 | High-diversity communities; improves recovery of longer contigs from dominant and mid-abundance taxa. |
| Aggressive --min-count 3 | 6,100 - 8,550 | 95 - 130 | 15,000 - 25,000 | Low-biomass or high-host-DNA samples; reduces errors and very low-abundance microbial "noise." |
| Permissive --min-count 1 | 4,250 - 6,400 | 210 - 280 | 45,000 - 70,000 | Rare biosphere mining; maximizes sensitivity but dramatically increases fragmentation and potential errors. |
| Stepped k-mer progression (--k-min 21 --k-max 127 --k-step 10 --min-count 2) | 5,950 - 8,200 | 150 - 185 | 23,000 - 38,000 | Automated granular k-mer progression; useful for exploratory standardization across projects. |

Experimental Protocols

Protocol 2.1: Benchmarking Assembly Parameters for Soil Metagenomes

Objective: To determine the optimal --k-list and --min-count parameters for assembling highly diverse soil metagenomic data.

Materials:

- Illumina paired-end metagenomic sequencing data (e.g., 2x150bp).

- High-performance computing cluster with MEGAHIT v1.2.9 installed.

- Quality assessment tools: FastQC, MultiQC.

- Assembly assessment tools: QUAST v5.2, MetaQUAST.

Methodology:

- Data Preparation:

- Perform quality trimming and adapter removal using Trimmomatic or fastp.

- Generate quality reports for trimmed data.

Parameterized Assembly Execution:

- Execute MEGAHIT with each parameter set listed in Table 1.

Example command for extended k-list:

Example command for aggressive min-count:
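The two example runs can be generated from Table 1's parameter sets with a small builder. This Python sketch uses real MEGAHIT options (-1, -2, -t, -o, --k-list, --min-count), while the read and output paths are hypothetical. MEGAHIT refuses to write into an output directory that already exists, so each parameter set gets its own -o path.

```python
EXTENDED_K_LIST = [21, 29, 39, 49, 59, 69, 79, 89, 99, 109, 119, 127]


def megahit_cmd(r1, r2, outdir, k_list=None, min_count=None, threads=32):
    """Argument list for one MEGAHIT run; omitted options keep MEGAHIT's defaults."""
    cmd = ["megahit", "-1", r1, "-2", r2, "-t", str(threads), "-o", outdir]
    if k_list is not None:
        cmd += ["--k-list", ",".join(str(k) for k in k_list)]
    if min_count is not None:
        cmd += ["--min-count", str(min_count)]
    return cmd


# Hypothetical file names; one fresh output directory per parameter set.
runs = {
    "extended_klist": megahit_cmd("soil_R1.fq.gz", "soil_R2.fq.gz",
                                  "megahit_extended_klist", k_list=EXTENDED_K_LIST),
    "aggressive_mincount": megahit_cmd("soil_R1.fq.gz", "soil_R2.fq.gz",
                                       "megahit_mincount3", min_count=3),
}
for name, cmd in runs.items():
    print(name, " ".join(cmd))
```

Building every parameter set from one function keeps inputs and thread counts identical across runs. As a result, the differences in Table 1-style metrics reflect only the parameter being tested.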

Assembly Evaluation:

- Run MetaQUAST on all final assembly files (final.contigs.fa).

- Use the -r flag to provide a set of reference genomes (if available for the environment) for improved analysis.

- Collate metrics: N50, total length, largest contig, # contigs, # predicted genes (using MetaGeneMark).

Downstream Validation (Optional):

- Map reads back to each assembly using Bowtie2 to calculate read recruitment rates.

- Perform taxonomic profiling of contigs using CAT/BAT or Kaiju to assess community representation.

Protocol 2.2: Targeted Recovery of Low-Abundance Pathways

Objective: To assemble genes from rare taxa by selectively tuning --min-count.

Materials: As in Protocol 2.1, plus: HMMER, pathway-specific HMM profiles (e.g., from MetaCyc, KEGG).

Methodology:

- Execute two assemblies: one with --min-count 3 (stringent) and one with --min-count 1 (permissive).

- Predict genes on all contigs ≥ 1 kbp using Prodigal in metagenomic mode (-p meta).

- Search predicted protein sequences against a curated HMM database of target metabolic pathways (e.g., antibiotic resistance genes, secondary metabolite clusters).

- Compare the number, length, and taxonomic origin of unique pathway hits recovered by each assembly parameter set.
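The final comparison can be scripted once each assembly's predicted proteins have been searched with hmmsearch --tblout. This minimal Python sketch assumes the standard whitespace-delimited tblout layout: target name in column 1, query (profile) name in column 3, and full-sequence E-value in column 5. The 1e-5 E-value cut-off and the profile names in the test data are illustrative assumptions, not recommendations from the source.

```python
def hmm_hits(tblout_lines, max_evalue=1e-5):
    """(profile, protein) pairs from hmmsearch --tblout lines passing an E-value cut-off."""
    hits = set()
    for line in tblout_lines:
        if not line.strip() or line.startswith("#"):
            continue  # skip comment/header lines
        fields = line.split()
        target, query, full_evalue = fields[0], fields[2], float(fields[4])
        if full_evalue <= max_evalue:
            hits.add((query, target))
    return hits


def profiles_only_in(permissive_hits, standard_hits):
    """HMM profiles (pathway genes) detected only in the permissive assembly."""
    return {q for q, _ in permissive_hits} - {q for q, _ in standard_hits}
```

Usage: read each tblout file with open(...).readlines(), pass the lines to hmm_hits, and call profiles_only_in(permissive, stringent). This lists the pathway genes recovered only with --min-count 1. Each such gene's contig can then be taxonomically classified and checked for errors before it is trusted.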

Visualization of Workflow and Parameter Logic

MEGAHIT Assembly Logic & Parameter Strategy

How k-list and min-count Shape the Assembly

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for MEGAHIT Metagenomic Assembly Workflow

| Item/Category | Specific Product/Example | Function in Workflow |
|---|---|---|
| Sequencing Platform | Illumina NovaSeq 6000, NextSeq 2000 | Generates high-throughput, short-read (150-300 bp PE) data, the primary input for MEGAHIT. |
| Library Prep Kit | Illumina DNA Prep, Nextera XT Library Kit | Prepares metagenomic DNA fragments for sequencing with compatible adapters. |
| Quality Control Tool | Qubit 4 Fluorometer, Agilent TapeStation 4150 | Quantifies and assesses the size distribution of input DNA and final libraries pre-sequencing. |
| Computational Resource | HPC Cluster (SLURM/OpenPBS), Cloud (AWS EC2, GCP) | Provides the necessary CPU (≥16 cores) and RAM (≥128 GB for complex samples) for assembly. |
| Containerized Software | MEGAHIT Docker/Singularity image, Bioconda package | Ensures version control, reproducibility, and easy deployment of the assembly environment. |
| Co-assembly Binning Aid | 10x Genomics Linked Reads, Hi-C Kit (Proximo) | Provides long-range contiguity information to scaffold MEGAHIT contigs into improved metagenome-assembled genomes (MAGs). |
| Validation Dataset | ZymoBIOMICS Gut Microbiome Standard (D6320) | Provides a mock community with known genome sequences for benchmarking assembly accuracy and completeness. |

Within the thesis workflow for SPAdes, metaSPAdes, and MEGAHIT assembly in metagenomics research, post-assembly quality assessment is a critical step. It determines the reliability of derived contigs for downstream analyses like gene prediction, binning, and comparative genomics, which inform drug target discovery and microbial ecology. QUAST (Quality Assessment Tool for Genome Assemblies) and its metagenomic extension, MetaQUAST, are standard tools for this purpose. They provide comprehensive metrics that allow researchers to compare multiple assemblies, identify the best-performing assembler and parameters for their dataset, and flag potential assembly errors.

Core Metrics and Quantitative Data

QUAST and MetaQUAST evaluate assemblies based on several key metrics. The following table summarizes the primary quantitative outputs relevant to metagenomic contig assessment.

Table 1: Key Quality Metrics Reported by QUAST/MetaQUAST for Metagenomic Assembly Assessment

| Metric | Definition | Interpretation for Metagenomics |
|---|---|---|
| Total contigs | Total number of assembled contigs. | Lower numbers may indicate a better assembly, but must be considered together with N50. |
| Largest contig | Length (bp) of the longest contig. | Indicates the maximum continuity achieved. |
| Total length | Sum of lengths of all contigs. | Should be considered relative to expected genome size(s) and read data volume. |
| N50 | Length of the shortest contig in the set that contains the fewest (largest) contigs whose combined length represents at least 50% of the assembly. | Higher N50 indicates better assembly continuity. A primary measure of contiguity. |
| L50 | The smallest number of contigs whose combined length represents at least 50% of the assembly (i.e., the number of contigs in the N50 set). | Lower L50 indicates better assembly continuity. |
| # misassemblies | Number of positions in the contigs where the alignment implies a large-scale error (e.g., rearrangements, relocations). | Lower is better. Indicates structural correctness. Relies on a reference. |
| # mismatches per 100 kbp | Number of base mismatches per 100,000 aligned bases. | Lower is better. Indicates base-level accuracy. Relies on a reference. |
| # indels per 100 kbp | Number of insertions/deletions per 100,000 aligned bases. | Lower is better. Indicates base-level accuracy. Relies on a reference. |
| # predicted genes | Number of genes predicted on contigs (e.g., using MetaGeneMark). | Can be compared across assemblies; very low counts may indicate fragmented assemblies. |
| Genome fraction (%) | Percentage of reference genome bases covered by the assembly. | In metagenomics, reported for each provided reference. Higher indicates better recovery. |
| # operons | Number of completely recovered operons; reported only when operon coordinates are supplied for the reference. | Indicator of recovery of conserved, functionally important regions. |
| # partially unaligned contigs | Contigs where less than 50% of their length aligns to the reference. | May represent novel sequences, contamination, or misassemblies. |
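The N50 and L50 definitions above translate directly into code. This minimal Python sketch computes Nx/Lx from a list of contig lengths; the function name is hypothetical. By default QUAST only counts contigs of at least 500 bp, so filter the list first to reproduce its numbers.

```python
def nx_lx(lengths, fraction=0.5):
    """Return (Nx, Lx) for a list of contig lengths; fraction=0.5 gives (N50, L50)."""
    ordered = sorted(lengths, reverse=True)  # largest contigs first
    if not ordered:
        raise ValueError("no contigs")
    threshold = fraction * sum(ordered)
    running = 0
    for count, length in enumerate(ordered, start=1):
        running += length
        if running >= threshold:
            return length, count  # shortest contig in the set, size of the set
    return ordered[-1], len(ordered)  # only reached if fraction > 1


contigs = [100, 200, 300, 400, 500, 3000]
print(nx_lx([c for c in contigs if c >= 500]))  # prints (3000, 1)
```

For the lengths 500, 400, 300, 200, and 100 bp (1,500 bp in total), the two largest contigs already cover 900 bp ≥ 750 bp, so N50 = 400 bp and L50 = 2.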

Experimental Protocols

Protocol 3.1: Quality Assessment of a Single Metagenomic Assembly using MetaQUAST

Objective: To evaluate the quality of a metagenome assembly generated by SPAdes, metaSPAdes, or MEGAHIT in the absence of reference genomes.

Materials:

- Assembled contigs in FASTA format (e.g., contigs.fasta).

- High-performance computing cluster or server with Linux.

- Python (v3.3+).

- MetaQUAST installed (v5.2.0+).

Method:

- Installation: Install MetaQUAST via Conda.

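Basic Run: Execute MetaQUAST on the assembly file. The -o flag specifies the output directory. Without user-supplied references, MetaQUAST tries to identify and download related reference genomes automatically; this requires internet access and can be disabled with --max-ref-number 0.

Interpretation: Open the generated report.html file in a web browser. Analyze key metrics: total length, N50, L50, and total contigs. Review the interactive contig alignment viewer for potential anomalies.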

Protocol 3.2: Comparative Assessment of Multiple Assemblers with Reference Genomes

Objective: To compare assemblies from SPAdes, metaSPAdes, and MEGAHIT using known reference genomes to identify the optimal assembly for a mock community dataset.

Materials:

- Multiple assembly FASTA files (e.g., spades_contigs.fasta, metaspades_contigs.fasta, megahit_contigs.fasta).

- Reference genome FASTA files for species known to be in the mock community.

- MetaQUAST installed.

Method:

- Prepare References: Place all reference genome FASTA files in a directory (e.g., ref_genomes/).

- Execute Comparative Analysis: Run MetaQUAST with multiple assemblies and the reference directory. The -r flag points to the references.

- Analysis: Open report.html. Use the summary table to directly compare all metrics (N50, misassemblies, genome fraction) across assemblers. Identify which assembler delivers the best trade-off between contiguity (N50) and accuracy (misassemblies, genome fraction) for your data.
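The comparative run can be assembled programmatically so that the column labels in report.html match the assembler names. This Python sketch builds a metaquast.py argument list using its -o, -l (labels), -t, and -r options, with the example FASTA and directory names from this protocol. Passing a directory to -r assumes MetaQUAST accepts reference directories, so confirm this for your installed version. Omitting ref_dir gives the reference-free run of Protocol 3.1.

```python
def metaquast_cmd(assemblies, outdir, ref_dir=None, threads=16):
    """assemblies: mapping of label -> contig FASTA path (insertion order is kept)."""
    labels = list(assemblies)
    if any("," in label for label in labels):
        raise ValueError("labels are passed comma-separated and must not contain commas")
    cmd = ["metaquast.py", *assemblies.values(),
           "-o", outdir, "-l", ",".join(labels), "-t", str(threads)]
    if ref_dir is not None:
        cmd += ["-r", ref_dir]  # reference genomes for accuracy metrics
    return cmd


cmd = metaquast_cmd(
    {"SPAdes": "spades_contigs.fasta",
     "metaSPAdes": "metaspades_contigs.fasta",
     "MEGAHIT": "megahit_contigs.fasta"},
    "metaquast_compare",
    ref_dir="ref_genomes/",
)
print(" ".join(cmd))
```

Labelling each assembly explicitly avoids MetaQUAST's default labels, which are derived from file names. Those defaults become ambiguous when every assembler writes a file with the same name (for example, several contigs.fasta files).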

Visualization of Workflow

Title: QUAST/MetaQUAST in the Metagenomic Assembly Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Assembly Quality Assessment

| Item | Function in Quality Assessment |
|---|---|
| High-Quality Contig FASTA Files | The primary input from assemblers (SPAdes, MEGAHIT). Quality of input dictates the validity of the assessment. |
| Reference Genome Sequences (Optional but Recommended) | Used by MetaQUAST to calculate accuracy metrics (misassemblies, genome fraction). Crucial for mock community validation. |
| MetaQUAST Software (v5.2.0+) | The core analytical tool that computes all standard and metagenomic-specific assembly metrics. |
| Conda/Bioconda Package Manager | Enables reproducible, one-command installation of MetaQUAST and its dependencies (e.g., GeneMark, BLAST). |
| High-Performance Computing (HPC) Resources | MetaQUAST alignment to multiple references is computationally intensive; requires adequate CPU and memory. |
| Python (v3.3+) | A core dependency for running the MetaQUAST toolkit. |
| Modern Web Browser (Chrome, Firefox) | Required to view the interactive HTML reports with plots and contig viewers generated by QUAST/MetaQUAST. |

Following the assembly of metagenomic reads via SPAdes, metaSPAdes, or MEGAHIT, downstream analysis is critical for extracting biological insights. This protocol details the subsequent steps of gene prediction, functional annotation, and genome-resolved metagenomics through binning using MetaBAT2 or MaxBin2, framed within a comprehensive metagenomics research thesis.

Application Notes & Quantitative Data

The performance of binning tools is contingent on assembly quality, sequencing depth, and community complexity. Key metrics for evaluation include completeness, contamination, and strain heterogeneity as assessed by CheckM.

Table 1: Comparative Overview of Binning Tools

| Tool | Algorithm Principle | Key Inputs | Primary Strength | Typical Use Case |
|---|---|---|---|---|
| MetaBAT2 | Adaptive graph-based clustering (modified label propagation) of contig tetranucleotide frequency and abundance. | Assembly FASTA, per-contig depth table summarized from BAM alignments. | High specificity, low contamination in complex samples. | Large-scale, diverse metagenomes. |
| MaxBin2 | Expectation-Maximization algorithm using abundance and tetranucleotide frequency. | Assembly FASTA, abundance info (from coverM or BAM). | Effective for samples with varying abundance levels. | Time-series or multi-sample projects. |

Table 2: Benchmarking Data for Binning Performance (Representative Studies)

| Study (Source) | # of Samples | Tool(s) Compared | Result Summary (Key Metric) |
|---|---|---|---|
| Shaiber et al., 2020 | 1,700+ | MetaBAT2, MaxBin2, others | MetaBAT2 produced bins with 88.5% mean completeness and 3.8% mean contamination; MaxBin2 showed higher completeness (91.2%) but slightly elevated contamination (5.1%) in high-abundance bins. |
| CAMI II Challenge | Complex simulated | Multiple | MetaBAT2 excelled in contamination reduction. MaxBin2 was robust for genome recovery from varied abundances. |

Detailed Experimental Protocols

Gene Prediction & Functional Annotation Workflow

A. Gene Prediction on Metagenomic Assemblies

- Tool: `Prodigal` (metagenomic mode)

- Protocol:

  - Input: High-quality assembly (contigs > 500 bp recommended) from SPAdes/metaSPAdes/MEGAHIT.

  - Command: `prodigal -i metagenome_assembly.fna -o genes.coords -a protein_seqs.faa -d nucl_seqs.fna -p meta`

  - Output: Amino acid (`.faa`) and nucleotide (`.fna`) gene sequences.

  - Note: Alternative tools include `FragGeneScan` for shorter or error-prone reads.
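Applying the recommended length cutoff before Prodigal is a small filtering step. The dependency-free sketch below streams FASTA records and keeps those at least `min_len` bp long. The function names and example file names are hypothetical. Dedicated tools such as seqkit do the same job.

```python
def read_fasta(lines):
    """Yield (header, sequence) pairs from FASTA-formatted lines (file handle or list)."""
    header, chunks = None, []
    for line in lines:
        line = line.strip()
        if line.startswith(">"):
            if header is not None:
                yield header, "".join(chunks)
            header, chunks = line[1:], []
        elif header is not None and line:
            chunks.append(line)  # multi-line sequences are concatenated
    if header is not None:
        yield header, "".join(chunks)


def filter_by_length(lines, min_len=500):
    """Yield only (header, sequence) records whose sequence is at least min_len bp."""
    for header, seq in read_fasta(lines):
        if len(seq) >= min_len:
            yield header, seq


def write_fasta(records, handle, width=80):
    """Write (header, sequence) records to an open text handle, wrapping at `width` bases."""
    for header, seq in records:
        handle.write(f">{header}\n")
        for i in range(0, len(seq), width):
            handle.write(seq[i:i + width] + "\n")


# Usage (hypothetical file names; the output feeds the Prodigal command above):
# with open("contigs.fasta") as src, open("metagenome_assembly.fna", "w") as dst:
#     write_fasta(filter_by_length(src, min_len=500), dst)
```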

B. Functional Annotation

- Tool: `eggNOG-mapper` (for rapid orthology assignment) or `DRAM` (for comprehensive metabolism profiling).

- Protocol (eggNOG-mapper v2):

  - Install via pip or conda.

  - Run annotation: `emapper.py -i protein_seqs.faa -o eggnog_output --cpu 4 -m diamond --data_dir eggnog_db`

  - Output: COG, KEGG, GO, and Pfam assignments per gene.
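A quick way to summarize the annotation is to tally COG functional categories. The sketch below assumes the tab-separated `.emapper.annotations` layout of eggNOG-mapper 2.1.x. In that layout, comment lines start with `##` and the header row starts with `#query` and contains a `COG_category` column. Earlier releases name columns differently, so the column name is a parameter. Counting each letter of multi-letter codes (e.g., `KL`) separately is a design choice of this sketch.

```python
from collections import Counter


def count_cog_categories(lines, column="COG_category"):
    """Tally single-letter COG categories from eggNOG-mapper annotation lines.

    Genes without a category ('-' or empty) are counted under 'unassigned'.
    Multi-letter codes such as 'KL' add one count to each letter.
    """
    counts = Counter()
    col = None
    for line in lines:
        line = line.rstrip("\n")
        if not line or line.startswith("##"):
            continue  # skip blank lines and run metadata
        fields = line.split("\t")
        if line.startswith("#"):
            col = fields.index(column)  # header row: locate the column by name
            continue
        if col is None:
            raise ValueError(f"no header row containing {column!r} found")
        value = fields[col] if col < len(fields) else "-"
        if value in ("-", ""):
            counts["unassigned"] += 1
            continue
        for letter in value:
            counts[letter] += 1
    return counts


# Usage (hypothetical path derived from the -o prefix above):
# with open("eggnog_output.emapper.annotations") as fh:
#     print(count_cog_categories(fh).most_common(10))
```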

Genome Binning Protocol

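Prerequisite: Map the reads from each sample back to the assembly (e.g., with Bowtie2 or BWA) to produce sorted BAM files, then summarize per-contig coverage, for example with `jgi_summarize_bam_contig_depths --outputDepth depth.txt *.bam` (bundled with MetaBAT2).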

A. Binning with MetaBAT2

- Command: `metabat2 -i assembly.fna -a depth.txt -o bins_dir/bin -m 1500`

- Parameters: `-m`: Minimum contig length (recommended: 1500-2500 bp; the default is 2500 bp and values should be at least 1500 bp).

- Output: FASTA files for each putative Metagenome-Assembled Genome (MAG).

B. Binning with MaxBin2

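- Prepare abundance file: Use `coverM`, or derive one from the depth table produced by `jgi_summarize_bam_contig_depths`. MaxBin2 expects one tab-separated file per sample with two columns (contig ID, abundance); additional samples are passed with `-abund2`, `-abund3`, and so on, or listed in a file given to `-abund_list`.

- Command: `run_MaxBin.pl -contig assembly.fna -abund abundance_table.txt -out maxbin_out -thread 8`

- Output: Binned FASTA files and a summary file.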
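The depth table from `jgi_summarize_bam_contig_depths` has the columns `contigName`, `contigLen`, and `totalAvgDepth`, followed by a depth column and a `-var` variance column for each BAM. The sketch below splits that table into the two-column per-sample files MaxBin2 reads. The function names and output file names are hypothetical. It assumes the BAM column headers are usable as sample keys.

```python
import csv


def jgi_depth_to_maxbin(lines):
    """Split a jgi_summarize_bam_contig_depths table into per-sample abundance tables.

    Returns {bam_column_name: [(contig_id, mean_depth), ...]}, preserving contig order.
    """
    reader = csv.reader(lines, delimiter="\t")
    header = next(reader)
    if header[:3] != ["contigName", "contigLen", "totalAvgDepth"]:
        raise ValueError(f"unexpected header start: {header[:3]}")
    # Per-BAM depth columns follow the first three; skip their '-var' companions.
    depth_cols = [i for i in range(3, len(header)) if not header[i].endswith("-var")]
    tables = {header[i]: [] for i in depth_cols}
    for row in reader:
        if not row:
            continue
        for i in depth_cols:
            tables[header[i]].append((row[0], float(row[i])))
    return tables


def write_abundance(pairs, path):
    """Write one MaxBin2 abundance file: contig ID <tab> abundance per line."""
    with open(path, "w") as out:
        for contig, depth in pairs:
            out.write(f"{contig}\t{depth}\n")


# Usage (hypothetical paths): one file per sample, then list them for -abund_list.
# with open("depth.txt") as fh:
#     tables = jgi_depth_to_maxbin(fh)
# paths = []
# for n, pairs in enumerate(tables.values(), start=1):
#     paths.append(f"abund_sample{n}.txt")
#     write_abundance(pairs, paths[-1])
# with open("abund_list.txt", "w") as fh:
#     fh.write("\n".join(paths) + "\n")
```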

C. Post-Binning Refinement & Evaluation

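- Tool: `DAS Tool` (to consolidate bins from multiple tools) and `CheckM` (for quality assessment).

- Protocol (CheckM):

  - Run: `checkm lineage_wf -t 8 -x fa bins_dir checkm_out`, where `-x` sets the bin file extension (MetaBAT2 writes `.fa` files).

  - Quality Standards: Use the MIMAG standards (high-quality: >90% completeness, <5% contamination; medium-quality: ≥50% completeness, <10% contamination). The full MIMAG high-quality tier additionally requires the 23S, 16S, and 5S rRNA genes and at least 18 tRNAs, which CheckM does not assess.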
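To apply these thresholds consistently across many bins, the tiering rule can be written as a small function. This sketch uses the MIMAG bounds (>90%/<5% for high quality, ≥50%/<10% for medium, <50%/<10% for low). rRNA and tRNA evidence is optional input from a separate tool. When it is omitted, "high" reflects completeness and contamination only. `mimag_tier` is a hypothetical helper.

```python
def mimag_tier(completeness, contamination, rrnas_complete=None, trna_count=None):
    """Return 'high', 'medium', 'low', or 'unclassified' from MIMAG draft-genome thresholds.

    completeness, contamination: percentages, e.g. from CheckM.
    rrnas_complete: True if 23S, 16S and 5S rRNA genes were all found (None = not checked).
    trna_count: number of distinct tRNAs found (None = not checked).
    """
    if not 0 <= completeness <= 100 or contamination < 0:
        raise ValueError("percentages out of range")
    if contamination >= 10:
        return "unclassified"  # no MIMAG tier allows >= 10% contamination
    if completeness > 90 and contamination < 5:
        rrna_ok = rrnas_complete is None or rrnas_complete
        trna_ok = trna_count is None or trna_count >= 18
        # A bin that misses the rRNA/tRNA criteria still qualifies as medium quality.
        return "high" if rrna_ok and trna_ok else "medium"
    if completeness >= 50:
        return "medium"
    return "low"


# Usage: mimag_tier(95.0, 2.0) returns "high" (rRNA/tRNA criteria not checked).
```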

Diagrams

Full Metagenomics Workflow from Reads to Bins

Diagram Title: Metagenomics analysis workflow from assembly to MAGs.

Binning Algorithm Decision Logic

Diagram Title: Decision logic for selecting a binning tool.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools & Databases

| Item | Function / Purpose | Typical Source / Package |
|---|---|---|
| Prodigal | Fast, reliable gene prediction in bacterial/archaeal contigs. | Hyatt et al., 2010; conda install -c bioconda prodigal. |
| eggNOG DB | Hierarchical orthology database for functional annotation. | http://eggnog5.embl.de; download_eggnog_data.py. |
| DIAMOND | Ultra-fast protein aligner for comparing sequences to databases. | Buchfink et al., 2015; conda install -c bioconda diamond. |
| Bowtie2/BWA | Map sequencing reads back to contigs to generate coverage profiles. | Langmead & Salzberg, 2012; Li, 2013. |
| CheckM DB | Set of lineage-specific marker genes for assessing MAG quality. | Parks et al., 2015; checkm data setRoot. |
| GTDB-Tk DB | Reference database for taxonomic classification of MAGs. | Chaumeil et al., 2020; gtdbtk download. |

Solving Common Assembly Pitfalls: Optimization Strategies for Real-World Data

Diagnosing and Resolving High Fragmentation (N50 Issues)

Within the workflow of metagenome assembly using SPAdes, metaSPAdes, and MEGAHIT, achieving high contiguity (as measured by N50) is critical for accurate gene prediction, taxonomic classification, and metabolic pathway reconstruction. High fragmentation, characterized by a low N50, directly compromises downstream analyses essential for researchers and drug development professionals seeking to identify novel bioactive compounds or resistance genes. This document provides application notes and protocols for diagnosing the causes of high fragmentation in metagenomic assemblies and for implementing solutions.

Quantitative Data: Factors Affecting Assembly N50

The table below summarizes key factors and their typical quantitative impact on assembly N50 based on recent literature and benchmarking studies.

Table 1: Factors Influencing Metagenomic Assembly Fragmentation

| Factor | Conditions Linked to Low N50 | Conditions Linked to High N50 | Primary Mechanism |
|---|---|---|---|
| Sequencing Depth | < 10x coverage per genome | 20-50x+ coverage per genome | Higher coverage enables resolution of repeats and overlaps. |
| DNA Input Quality | DV200 < 30%, high shearing | DV200 > 50%, controlled fragment size | Degraded DNA prevents long, contiguous assemblies. |
| Read Length | Short-read (150-250 bp) | Long-read (10 kb+), Hybrid | Longer reads span repetitive regions. |
| Community Complexity | High (1000+ species), even | Low (10-100 species), uneven | High diversity reduces per-genome coverage. |
| Assembly Algorithm | Greedy extension approaches | de Bruijn graph with careful k-mer selection | Algorithm choice affects repeat resolution. |

Diagnostic Protocol: Identifying the Cause of Low N50

Objective: To systematically identify the primary cause(s) of high fragmentation in a given metagenomic assembly project.

Materials:

- Raw sequencing data (FASTQ files)

- Quality control reports (FastQC, MultiQC)

- Assembly statistics file (from QUAST, metaQUAST)

- Computing resources with adequate memory

Procedure:

- Calculate Assembly Metrics: Run `metaquast.py` on your assembly contigs to obtain N50, L50, total assembly size, and number of contigs.

- Assess Input Read Quality:

  - Use `FastQC` on raw reads. Note adapter content, per-base sequence quality, and sequence duplication levels.

  - Calculate average sequencing depth: (total bases) / (estimated community genome size).

- Profile Community Complexity:

  - Perform taxonomic profiling on raw reads using `Kraken2` or `MetaPhlAn`.

  - Assess species evenness from the profile. A long tail of low-abundance species suggests inherent assembly difficulty.

- Compare to Expected Benchmarks: Refer to Table 1. If read depth is >50x but N50 remains low, investigate read length or algorithmic issues. If depth is low (<15x), fragmentation is likely coverage-limited.
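The depth calculation, the evenness check, and the benchmark rules above can be combined into a quick triage script. The sketch below uses Pielou's evenness (Shannon entropy divided by ln S) as one common evenness measure; the protocol does not prescribe a specific metric, so this choice is an assumption. The 15x and 50x cut-offs are copied from the comparison step. The middle branch and all function names are hypothetical.

```python
import math


def average_depth(total_bases, community_size_bp):
    """Average depth = total sequenced bases / estimated community genome size (both in bp)."""
    if community_size_bp <= 0:
        raise ValueError("community size must be positive")
    return total_bases / community_size_bp


def pielou_evenness(abundances):
    """Pielou's evenness J = H' / ln(S) for a taxonomic profile.

    H' is the Shannon entropy of the relative abundances and S is the number of
    taxa with abundance > 0. J is near 1 for an even community and approaches 0
    when a few taxa dominate the profile.
    """
    values = [a for a in abundances if a > 0]
    if len(values) < 2:
        raise ValueError("need at least two taxa with positive abundance")
    total = sum(values)
    shannon = -sum((a / total) * math.log(a / total) for a in values)
    return shannon / math.log(len(values))


def diagnose_depth(avg_depth):
    """Apply the depth rules of thumb from the 'Compare to Expected Benchmarks' step."""
    if avg_depth < 15:
        return "coverage-limited: fragmentation is likely driven by low depth"
    if avg_depth > 50:
        return "depth adequate: investigate read length or assembler/k-mer settings"
    # Hypothetical middle branch; the protocol does not define this range.
    return "intermediate: check evenness and per-genome coverage before changing parameters"


# Worked example with hypothetical numbers: 20 million read pairs of 2 x 150 bp
# give 6e9 bases; over an estimated 200 Mbp community, average_depth(6e9, 2e8) = 30.0.
```

Average depth is a community-wide figure. A genome at 1% relative abundance receives roughly 1% of the bases, so rare members can be coverage-limited even when the average looks adequate.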

Resolution Protocols for High Fragmentation

Protocol 4.1: Optimizing Assembly Parameters for SPAdes/metaSPAdes

Objective: To improve N50 by tuning k-mer sizes and leveraging the multi-k-mer assembly strategy effectively.

Reagent Solutions & Computational Tools:

- metaSPAdes (v3.15.0+): Primary assembler with iterative k-mer building.

- Read Error Corrector (BayesHammer): Integrated within SPAdes.

- Mismatch Corrector: SPAdes' post-assembly polishing module, enabled with `--careful` (note that `--careful` is not supported in metagenomic mode).

Procedure:

- Employ Auto-k-mer Selection: For standard runs, keep the default `-k auto`, which lets the assembler choose k-mer sizes based on read length.

- Manual k-mer Specification: For challenging datasets, supply an explicit list of odd k-mer sizes (e.g., `-k 21,33,55,77` for 150 bp reads). SPAdes iterates over every listed k within a single run; to compare alternative k-mer sets, give each run its own output directory with `-o` and evaluate the results side by side (e.g., with MetaQUAST).

- Utilize the `--meta` Flag: Always use this flag (or the `metaspades.py` wrapper) for metagenomes; it drops the uniform-coverage assumption that single-genome SPAdes makes.

- Increase Computational Limits: If resources allow, increase `-m` (memory limit in GB) to prevent premature termination of graph construction.
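When several k-mer sets are being compared, validating them before launching long runs saves compute time. The sketch below builds a `spades.py --meta` argument list for one paired-end library. It uses the real options `-1`, `-2`, `-o`, `-t`, `-m`, and `-k`. It enforces the SPAdes requirement that k values be odd and below 128, and optionally checks them against read length. The helper name is hypothetical. The defaults mirror SPAdes' own defaults of 16 threads and 250 GB.

```python
def build_metaspades_cmd(r1, r2, out_dir, kmers=None, threads=16, memory_gb=250, read_length=None):
    """Return a spades.py --meta argument list for one paired-end library.

    kmers: None keeps SPAdes' automatic choice (-k auto); otherwise a list of
    k-mer sizes, which SPAdes requires to be odd and less than 128.
    read_length: optional; if given, every k must be shorter than the reads.
    """
    cmd = ["spades.py", "--meta", "-1", str(r1), "-2", str(r2), "-o", str(out_dir),
           "-t", str(threads), "-m", str(memory_gb)]
    if kmers is None:
        return cmd + ["-k", "auto"]
    ks = sorted(set(kmers))  # listed in ascending order, smallest k first
    if not ks:
        raise ValueError("kmers must not be empty")
    bad = [k for k in ks if k < 1 or k % 2 == 0 or k >= 128]
    if bad:
        raise ValueError(f"k-mer sizes must be odd and < 128, got {bad}")
    if read_length is not None and ks[-1] >= read_length:
        raise ValueError(f"largest k ({ks[-1]}) must be shorter than the reads ({read_length} bp)")
    return cmd + ["-k", ",".join(str(k) for k in ks)]


# Usage (hypothetical file names): one output directory per k-mer set.
# cmd = build_metaspades_cmd("R1.fastq.gz", "R2.fastq.gz", "spades_k21-77",
#                            kmers=[21, 33, 55, 77], read_length=150)
# subprocess.run(cmd, check=True)  # needs `import subprocess`
```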

Protocol 4.2: Hybrid Assembly with Long Reads

Objective: Dramatically increase N50 by integrating long-read (PacBio HiFi, Oxford Nanopore) data to scaffold short-read assemblies.

Reagent Solutions & Computational Tools:

- PacBio HiFi or Oxford Nanopore (ONT) Ultra-Long Reads: Long sequences that span repeats; HiFi reads additionally offer high per-base accuracy.

- MetaFlye: For initial long-read assembly.

- SPAdes in Hybrid Mode: For integrating short and long reads.

Procedure:

- Assemble Long Reads: Assemble filtered long reads with metaFlye (`flye --meta`), using the read-type option that matches the data (e.g., `--pacbio-hifi` or `--nano-hq`) and appropriate `--read-error` settings.

- Map Short Reads: Map quality-filtered short reads to the long-read assembly using `Bowtie2` or `BWA`.

- Polish Assembly: Polish the base calls of the long-read assembly using the short-read alignments with `Pilon` or `Racon`.

- Alternative Hybrid Path: Run `spades.py` directly with either `--pacbio` or `--nanopore` together with the short-read `-1`/`-2` arguments for an integrated hybrid assembly.
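Choosing the wrong read-type option is a common source of poor long-read assemblies. The sketch below maps a read type to the corresponding Flye option and builds a metagenome-mode command. It uses the Flye options `--pacbio-raw`, `--pacbio-hifi`, `--nano-raw`, `--nano-hq`, `--meta`, `--out-dir`, `--threads`, and `--read-error`, where `--read-error` takes a fraction such as 0.03. The helper and its short read-type keys are hypothetical. Flye writes the final contigs to `assembly.fasta` in the output directory, and that file is the input to the short-read mapping and polishing steps.

```python
# Hypothetical short names mapped to Flye's read-type options.
FLYE_READ_FLAGS = {
    "pacbio-raw": "--pacbio-raw",
    "pacbio-hifi": "--pacbio-hifi",
    "nano-raw": "--nano-raw",
    "nano-hq": "--nano-hq",
}


def build_metaflye_cmd(reads, read_type, out_dir, threads=16, read_error=None):
    """Return a Flye argument list for metagenome-mode (--meta) long-read assembly.

    reads: list of long-read FASTQ/FASTA paths (all of the same read type).
    read_error: optional expected error rate as a fraction, e.g. 0.03.
    """
    if read_type not in FLYE_READ_FLAGS:
        raise ValueError(f"unknown read type {read_type!r}; choose from {sorted(FLYE_READ_FLAGS)}")
    if not reads:
        raise ValueError("at least one read file is required")
    cmd = ["flye", FLYE_READ_FLAGS[read_type], *map(str, reads),
           "--meta", "--out-dir", str(out_dir), "--threads", str(threads)]
    if read_error is not None:
        if not 0 < read_error < 1:
            raise ValueError("read_error must be a fraction between 0 and 1")
        cmd += ["--read-error", str(read_error)]
    return cmd


# Usage (hypothetical paths):
# cmd = build_metaflye_cmd(["hifi_reads.fastq.gz"], "pacbio-hifi", "metaflye_out")
# subprocess.run(cmd, check=True)  # then polish metaflye_out/assembly.fasta
```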

Protocol 4.3: Pre-assembly Binning and Co-assembly

Objective: Reduce effective complexity by assembling related reads together.

Procedure:

- Taxonomic Binning of Reads: Use `Kraken2` to classify raw reads. Extract reads assigned to a target phylum or genus.

- Co-assembly: Assemble the binned read subsets independently using `metaSPAdes` or `MEGAHIT`.

- Merge Assemblies: Concatenate the resulting contig sets for downstream analysis. This often yields higher N50 for dominant taxa.
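Two small steps in this protocol are easy to script. The first is selecting reads by taxon from Kraken2's per-read output. The second is renaming contigs before concatenation. SPAdes names contigs `NODE_1_...` and MEGAHIT names them `k141_...`, so separately assembled subsets can share contig IDs. The sketch below assumes Kraken2's standard tab-separated per-read output: status (C/U), read ID, taxid, length, and k-mer LCA list. With `--use-names`, the taxid column becomes `name (taxid N)`. The sketch matches exact taxids only. To include descendant taxa, use KrakenTools' `extract_kraken_reads.py` with the Kraken2 report instead. Function names and the prefix scheme are hypothetical. The selected IDs can then be pulled from the FASTQ files, for example with `seqtk subseq`.

```python
def select_read_ids(kraken_lines, taxids):
    """Return IDs of reads Kraken2 classified to exactly one of the given taxids."""
    wanted = {str(t) for t in taxids}
    ids = set()
    for line in kraken_lines:
        fields = line.rstrip("\n").split("\t")
        if len(fields) < 3 or fields[0] != "C":
            continue  # skip unclassified (U) and malformed lines
        taxid = fields[2]
        if "(taxid " in taxid:  # output produced with --use-names
            taxid = taxid.rsplit("(taxid ", 1)[1].rstrip(")")
        if taxid in wanted:
            ids.add(fields[1])
    return ids


def prefixed_fasta_lines(lines, prefix):
    """Yield FASTA lines with every header renamed to '<prefix>_<original header>'."""
    for line in lines:
        line = line.rstrip("\n")
        if line.startswith(">"):
            yield f">{prefix}_{line[1:]}\n"
        else:
            yield line + "\n"


# Usage (hypothetical paths): merge per-taxon assemblies without ID clashes.
# with open("merged_contigs.fasta", "w") as out:
#     for prefix, path in [("Bacteroides", "bact/contigs.fasta"), ("Prevotella", "prev/contigs.fasta")]:
#         with open(path) as fh:
#             out.writelines(prefixed_fasta_lines(fh, prefix))
```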

Visualization of Workflows

Diagram 1: N50 Issue Diagnostic Workflow

Diagram 2: Hybrid Assembly Resolution Pathway

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials and Tools for Fragmentation Resolution

| Item | Function/Application | Example Product/Code |
|---|---|---|
| High Molecular Weight DNA Kit | To maximize input DNA length for long-read sequencing, directly improving assembly continuity. | Qiagen MagAttract HMW DNA Kit, PacBio SRE Kit |
| Duplex Sequencing Adapters | For generating highly accurate long reads (HiFi) which simplify the assembly graph. | PacBio SMRTbell Duplex Adapter Kit |
| Metagenomic Standard | To benchmark assembly performance against known genomes of varying abundance. | ZymoBIOMICS Microbial Community Standard |
| Ligation Sequencing Kit | For preparing DNA for Oxford Nanopore sequencing to generate ultra-long reads. | Oxford Nanopore SQK-LSK114 |
| Size Selection Beads | For precise selection of optimal DNA fragment lengths prior to library prep. | Beckman Coulter SPRIselect, Circulomics SRE |
| Error-Corrected Read Datasets | Pre-processed, high-accuracy reads from public repositories for method testing. | NCBI SRA (Accessions with "HiFi" or "CCS") |