Optimizing Molecular Diagnostics for Diabetic Foot: Integrating Biomarkers, Machine Learning, and Novel Targets

Abstract

This article synthesizes recent advances in molecular diagnostics for diabetic foot complications, addressing the critical need for precise, non-invasive tools. We outline the underlying molecular pathways and current diagnostic challenges, including the differentiation between soft tissue infection and osteomyelitis. We then review methodological innovations, highlighting explainable machine learning models for biomarker discovery and the validation of novel molecular targets such as SCUBE1 and RNF103-CHMP3. We further examine troubleshooting strategies for diagnostic optimization and compare emerging technologies with conventional methods. Written for researchers, scientists, and drug development professionals, this overview aims to bridge molecular discovery and clinical application, supporting improved diagnostic accuracy and personalized therapeutic strategies.

Unraveling Molecular Complexity: Pathophysiology and Current Diagnostic Hurdles in Diabetic Foot

FAQ: Troubleshooting Common Research Challenges

Q1: Our in vitro macrophage polarization assays under high glucose conditions are inconsistent. What are key factors to control?

A: Inconsistent macrophage polarization often stems from poorly defined glycemic conditions and contamination with endotoxins that skew results.

- Solution:

- Standardize Hyperglycemic Media: Prepare glucose solutions fresh and confirm concentrations with a glucometer. Include an osmotic control (e.g., mannitol added to normal-glucose medium to match the osmolality of the high-glucose condition) to rule out osmotic effects.

- Monitor for Endotoxins: Use only cell culture-grade reagents and screen serum for endotoxin levels (<0.01 EU/mL) to prevent unintended activation of inflammatory pathways.

- Validate Polarization States: Do not rely on a single marker. Use a combination for M1 (e.g., CD86, TNF-α, iNOS) and M2 (e.g., CD206, Arg1, IL-10) phenotypes via flow cytometry and RT-qPCR [1].
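RT-qPCR marker panels like the one above are often summarized as fold changes with the 2^-ΔΔCt method. The sketch below is a minimal illustration, assuming a single reference gene (GAPDH here, a hypothetical choice), technical-replicate Ct values averaged per gene, and close to 100% amplification efficiency for every primer pair. The Ct values are invented for illustration and are not experimental data; NOS2 and ARG1 are the gene symbols for iNOS and Arg1.

```python
from statistics import mean


def delta_delta_ct(target_ct, ref_ct, ctrl_target_ct, ctrl_ref_ct):
    """Fold change of a target gene versus a control condition (2^-ddCt method).

    Each argument is a list of technical-replicate Ct values. Assumes ~100%
    amplification efficiency for both the target and the reference gene.
    """
    d_ct_sample = mean(target_ct) - mean(ref_ct)  # normalize to the reference gene
    d_ct_control = mean(ctrl_target_ct) - mean(ctrl_ref_ct)
    dd_ct = d_ct_sample - d_ct_control  # compare treated vs. control condition
    return 2 ** (-dd_ct)


def panel_fold_changes(sample, control, ref="GAPDH"):
    """Fold change for every non-reference gene in a marker panel.

    `sample` and `control` map gene symbol -> list of Ct values.
    """
    return {
        gene: delta_delta_ct(sample[gene], sample[ref], control[gene], control[ref])
        for gene in sample
        if gene != ref
    }


# Illustrative Ct values only (not experimental data).
high_glucose = {"GAPDH": [18.0, 18.2], "NOS2": [24.0, 24.2], "ARG1": [27.0, 27.2]}
normal_glucose = {"GAPDH": [18.1, 18.1], "NOS2": [26.1, 26.1], "ARG1": [26.1, 26.1]}

fold_changes = panel_fold_changes(high_glucose, normal_glucose)
print({gene: round(fc, 2) for gene, fc in fold_changes.items()})  # {'NOS2': 4.0, 'ARG1': 0.5}
```

A rise in NOS2 with a fall in ARG1 relative to the normal-glucose control would be consistent with an M1 shift, but, as noted above, it should be confirmed with the other markers and by flow cytometry.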

Q2: When creating a rodent DFU model, how do we distinguish between impaired healing due to neuropathy versus ischemia?

A: Disentangling these contributors requires specific surgical and assessment techniques.

- Solution:

- Employ Selective Procedures: For a neuropathic model, induce diabetes with streptozotocin (STZ) and confirm the development of neuropathy via sensory testing (e.g., von Frey filaments). For a combined neuro-ischemic model, follow STZ induction with a precise femoral or iliac artery ligation [1].

- Multimodal Confirmation: Quantify ischemia with laser Doppler perfusion imaging and confirm neuropathy by measuring reduced motor and sensory nerve conduction velocity [2] [3].

- Histological Endpoints: Analyze wound tissue for neuronal markers (e.g., PGP9.5 for nerve density) and vascular markers (e.g., CD31 for endothelial cells) to objectively assess the degree of neural and vascular deficit [1].
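Laser Doppler readings are commonly reported as the ratio of perfusion in the ligated limb to the contralateral limb, which partly normalizes for between-animal and between-session variation. A minimal sketch, assuming the mean perfusion units (PU) per region of interest have already been exported from the imaging software; all values are hypothetical.

```python
def perfusion_ratio(ischemic_pu, contralateral_pu):
    """Ischemic-to-contralateral perfusion ratio from mean perfusion units (PU)."""
    if contralateral_pu <= 0:
        raise ValueError("contralateral perfusion must be positive")
    return ischemic_pu / contralateral_pu


def recovery_curve(scans):
    """Perfusion ratio at each time point, ordered by day.

    `scans` maps day -> (ischemic_pu, contralateral_pu), e.g. the mean PU inside
    a region of interest drawn over each hind paw.
    """
    return {
        day: round(perfusion_ratio(isc, con), 3)
        for day, (isc, con) in sorted(scans.items())
    }


# Hypothetical mean PU values for one STZ-diabetic animal; day 0 = pre-ligation baseline.
scans = {14: (520.0, 830.0), 0: (820.0, 860.0), 1: (210.0, 840.0), 7: (380.0, 850.0)}
print(recovery_curve(scans))
```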

Q3: What is the best approach for isolating high-quality RNA from human DFU tissue for transcriptomic studies?

A: DFU tissue is often necrotic, contaminated, and rich in RNases, making RNA integrity a major challenge.

- Solution:

- Rapid Processing: Snap-freeze tissue biopsies immediately in liquid nitrogen and store at -80°C. If immediate freezing is not possible, place samples in an RNase-inhibiting stabilization solution at the time of collection. Avoid multiple freeze-thaw cycles.

- Robust Lysis: Use a commercial lysis buffer containing strong chaotropic salts and β-mercaptoethanol to inactivate RNases. Mechanical homogenization (e.g., bead beating) is essential for complete tissue disruption.

- Quality Control: Always assess the RNA Integrity Number (RIN) with a bioanalyzer, and proceed with sequencing or microarray analysis only if RIN > 7.0 [4].
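The RIN cutoff is easy to enforce programmatically before samples go to library preparation. This is a minimal sketch; the sample IDs and RIN values are hypothetical, and treating a missing RIN as a failure is a design choice rather than part of the protocol.

```python
RIN_THRESHOLD = 7.0  # minimum RIN from the protocol (strictly greater than)


def rin_gate(samples, threshold=RIN_THRESHOLD):
    """Split samples into those that pass and fail the RIN cutoff.

    `samples` maps sample ID -> RIN from the bioanalyzer. A missing RIN (None)
    counts as a failure instead of being passed silently.
    """
    passed, failed = [], []
    for sample_id, rin in samples.items():
        if rin is not None and rin > threshold:
            passed.append(sample_id)
        else:
            failed.append(sample_id)
    return passed, failed


# Hypothetical biopsy IDs and RIN values.
batch = {"DFU_01": 8.1, "DFU_02": 6.4, "DFU_03": 7.0, "DFU_04": None, "CTRL_01": 9.2}
passed, failed = rin_gate(batch)
print("proceed to sequencing:", passed)  # ['DFU_01', 'CTRL_01']
print("re-extract or exclude:", failed)  # ['DFU_02', 'DFU_03', 'DFU_04']
```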

Q4: Which machine learning model is most effective for identifying biomarker genes from DFU transcriptomic data?

A: No single model is universally "best"; a consensus approach from multiple algorithms is most robust.

- Solution:

- Apply Multiple Algorithms: Run your dataset through several models, such as LASSO (for feature selection to avoid overfitting), Random Forest (to assess variable importance), and Support Vector Machine-Recursive Feature Elimination (SVM-RFE) [1] [4].

- Identify Consensus Genes: Select genes that are consistently ranked as important across all models for further validation. This was the strategy used to identify core genes like SAMHD1, DPYSL2, SCUBE1, and RNF103-CHMP3 [1] [4].

- Validate Independently: Test the predictive power of your candidate genes on a separate, independent transcriptomic dataset (e.g., from a public repository like GEO) [4].
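Once each algorithm has produced its output (e.g., exported from glmnet, randomForest, or an SVM-RFE run in R), the consensus step reduces to a set intersection. The sketch below assumes each method's genes are available as a best-first list; the optional top-k cutoff and the placeholder gene names are hypothetical.

```python
def consensus_genes(method_outputs, k=None):
    """Genes selected by every method.

    `method_outputs` maps method name -> gene list ordered best-first. If `k` is
    given, only each method's top-k genes are compared; genes[:None] keeps the
    whole list when `k` is None.
    """
    selections = [set(genes[:k]) for genes in method_outputs.values()]
    if not selections:
        return set()
    return set.intersection(*selections)


# Hypothetical outputs: LASSO lists its non-zero-coefficient genes,
# Random Forest and SVM-RFE list genes by importance ranking.
outputs = {
    "LASSO": ["GENE_1", "GENE_2", "GENE_3", "GENE_4"],
    "RandomForest": ["GENE_2", "GENE_5", "GENE_1", "GENE_3", "GENE_6"],
    "SVM-RFE": ["GENE_1", "GENE_3", "GENE_2", "GENE_7"],
}
print(sorted(consensus_genes(outputs, k=3)))  # ['GENE_1', 'GENE_2']
print(sorted(consensus_genes(outputs)))       # ['GENE_1', 'GENE_2', 'GENE_3']
```

A top-k cutoff makes the consensus stricter; without one, any gene that every method retains at all is kept.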

Core Experimental Protocols

Protocol: Multi-Omics Integration for Biomarker Discovery

This protocol outlines the workflow for identifying core molecular targets by integrating transcriptomic data and machine learning, as employed in recent studies [1] [4].

- Objective: To identify and validate key biomarker genes and potential drug targets for DFU.

- Materials:

- Datasets: Publicly available DFU transcriptome data from GEO (e.g., GSE134431, GSE80178, GSE147890).

- Software: R with the packages limma (differential expression), WGCNA (co-expression networks), randomForest, glmnet (LASSO), e1071 (SVM), and clusterProfiler (enrichment analysis).

- Procedure:

- Data Acquisition & Preprocessing: Download raw data from GEO. Perform background correction, normalization, and batch effect removal.

- Differential Expression Analysis: Using the limma package, identify genes with significant expression changes (e.g., |log2FC| > 1, adjusted p-value < 0.05) between DFU and control samples.

- Weighted Gene Co-expression Network Analysis (WGCNA): Construct a co-expression network to identify modules of highly correlated genes. Correlate modules with DFU traits to find the most relevant module.

- Intersection Analysis: Take the intersection of genes from the significant WGCNA module and the differentially expressed genes (DEGs) to obtain a high-confidence gene set.

- Machine Learning Screening:

- Apply LASSO regression to shrink coefficients and select non-redundant features.

- Use Random Forest to rank genes by their importance in classifying DFU.

- Use SVM-RFE to recursively remove features with the smallest ranking criteria.

- Validation: Validate the expression and diagnostic value of the final core genes using ROC curve analysis on an independent test dataset.

- Enrichment Analysis: Perform GO and KEGG pathway analysis on the core gene set to interpret their biological functions.
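The differential-expression filter and the intersection step can be expressed compactly. limma's topTable() already reports Benjamini-Hochberg (BH) adjusted p-values, so this Python sketch only re-implements the logic for illustration, assuming a table of (gene, log2FC, raw p-value) has been exported and the genes of the trait-associated WGCNA module are known. Gene names and values are placeholders.

```python
def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0  # also caps adjusted values at 1
    # Walk from the largest p-value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def select_degs(table, lfc_cutoff=1.0, fdr_cutoff=0.05):
    """Genes passing |log2FC| > lfc_cutoff and BH-adjusted p < fdr_cutoff.

    `table` is a list of (gene, log2fc, raw_p) tuples.
    """
    adjusted = benjamini_hochberg([p for _, _, p in table])
    return {
        gene
        for (gene, lfc, _), q in zip(table, adjusted)
        if abs(lfc) > lfc_cutoff and q < fdr_cutoff
    }


# Placeholder results exported from a differential-expression run.
de_table = [
    ("GENE_1", 2.3, 0.0001),
    ("GENE_2", -1.6, 0.004),
    ("GENE_3", 0.4, 0.0002),
    ("GENE_4", 1.8, 0.2),
    ("GENE_5", -2.1, 0.045),
]
wgcna_module = {"GENE_1", "GENE_2", "GENE_5", "GENE_8"}  # trait-correlated module

degs = select_degs(de_table)
high_confidence = degs & wgcna_module
print(sorted(high_confidence))  # ['GENE_1', 'GENE_2']
```

In this toy table GENE_5 has a raw p-value below 0.05 but an adjusted value above it, which is why the cutoff should be applied to the adjusted column.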

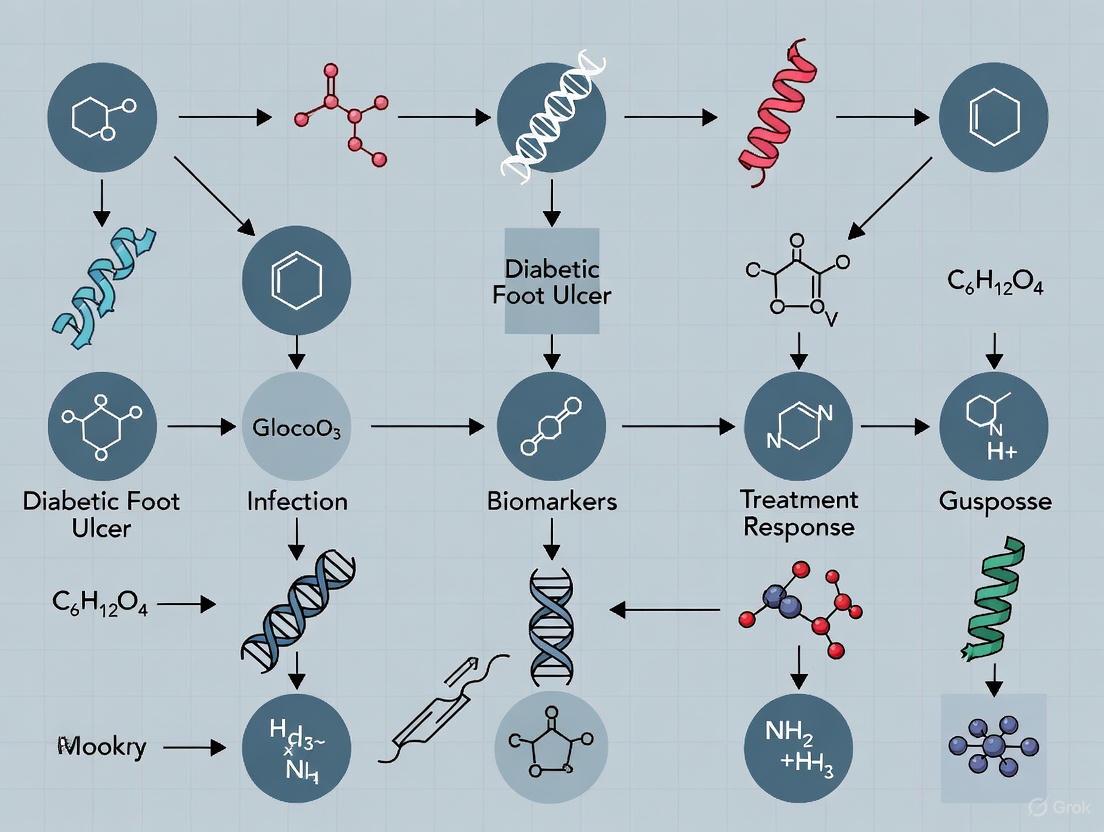

The following diagram visualizes this integrated bioinformatics workflow:

Protocol: Molecular Docking for Therapeutic Compound Screening

This protocol describes how to computationally assess the binding potential of a natural compound like quercetin to proteins encoded by core DFU target genes [1].

- Objective: To evaluate the binding affinity and stability of quercetin with target proteins (e.g., SAMHD1).

- Materials:

- Software: AutoDock Vina, PyMOL, Python.

- Ligand Structure: 3D chemical structure of quercetin (e.g., from PubChem in SDF format).

- Protein Structure: Crystal structure of the target protein (e.g., from Protein Data Bank, PDB).

- Procedure:

- Protein Preparation: Download the PDB file. Remove water molecules and heteroatoms. Add polar hydrogens and compute Gasteiger charges.

- Ligand Preparation: Convert the quercetin SDF to PDBQT format, setting rotatable bonds.

- Grid Box Definition: Define a 3D grid box around the protein's known active site. If the active site is unknown, perform a blind docking over the entire protein surface.

- Molecular Docking: Run the docking simulation in AutoDock Vina. Increase the exhaustiveness setting (default 8) for a more thorough search, and generate multiple binding poses.

- Analysis: Analyze the output for binding energy (in kcal/mol; lower values indicate stronger binding). Visually inspect the best poses in PyMOL to identify key hydrogen bonds and hydrophobic interactions.
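The grid-box definition can be scripted so that it is reproducible. A minimal sketch, assuming you already have the Cartesian coordinates (in Å) of atoms lining the known active site: it centers a box on those atoms, pads each side, and renders a config file in the key = value format that AutoDock Vina reads through its --config option. The coordinates, file names, padding, and exhaustiveness value are hypothetical.

```python
def grid_box(coords, padding=8.0):
    """Center and edge lengths (Å) of a box enclosing `coords` plus padding per side.

    `coords` is a list of (x, y, z) tuples, e.g. atoms of active-site residues.
    """
    if not coords:
        raise ValueError("need at least one coordinate")
    xs, ys, zs = zip(*coords)
    center = tuple((min(a) + max(a)) / 2 for a in (xs, ys, zs))
    size = tuple((max(a) - min(a)) + 2 * padding for a in (xs, ys, zs))
    return center, size


def vina_config(receptor, ligand, center, size, exhaustiveness=16, num_modes=9):
    """Render a Vina config file (key = value lines) for use with `vina --config`."""
    lines = [f"receptor = {receptor}", f"ligand = {ligand}"]
    lines += [f"center_{axis} = {value:.3f}" for axis, value in zip("xyz", center)]
    lines += [f"size_{axis} = {value:.3f}" for axis, value in zip("xyz", size)]
    lines += [f"exhaustiveness = {exhaustiveness}", f"num_modes = {num_modes}"]
    return "\n".join(lines) + "\n"


# Hypothetical active-site atom coordinates (Å) from a prepared receptor file.
site = [(10.0, 4.0, -2.0), (14.0, 6.0, 0.0), (12.0, 9.0, 2.0)]
center, size = grid_box(site, padding=8.0)
print(vina_config("target_protein.pdbqt", "quercetin.pdbqt", center, size))
```

Saved as, for example, conf.txt, the output could be passed to Vina with vina --config conf.txt. The padding is a judgment call that trades search volume against the risk of truncating the pocket.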

Key Signaling Pathways in DFU Pathogenesis

The following diagram summarizes the core dysfunctional signaling pathways contributing to the pathophysiological triad in DFU, as detailed in the research [2] [3] [5].

Research Reagent Solutions

The following table compiles key reagents, datasets, and software tools essential for researching the DFU pathophysiological triad.

Table 1: Essential Research Resources for Investigating DFU Pathogenesis

| Category | Reagent / Resource | Specific Example | Primary Function in DFU Research |
|---|---|---|---|
| Transcriptomic Data | GEO Datasets | GSE80178, GSE134431, GSE147890 [1] | Provide human DFU gene expression profiles for bioinformatics analysis and biomarker discovery. |
| Single-Cell Data | GEO Datasets | GSE165816, GSE223964 [1] | Enable cell-type-specific resolution of gene expression in DFU, crucial for understanding immune and endothelial contributions. |
| Machine Learning Tools | R Packages | randomForest, glmnet, e1071 [1] [4] | Identify key biomarker genes from high-dimensional transcriptomic data and build diagnostic classifiers. |
| Bioinformatics Suites | R Packages | limma, WGCNA, clusterProfiler [1] [4] | Perform differential expression, co-expression network analysis, and functional enrichment. |
| Molecular Docking | Software Suite | AutoDock 1.5.7, PyMOL [1] | Simulate and visualize interactions between potential therapeutic compounds (e.g., quercetin) and target proteins. |
| Validated Core Targets | Protein/Gene Targets | SAMHD1, DPYSL2 [1] | Macrophage-modulating targets implicated in quercetin's therapeutic mechanism; require antibodies for IHC/IF validation. |
| Validated Core Targets | Protein/Gene Targets | SCUBE1, RNF103-CHMP3 [4] | Biomarkers associated with immune cell infiltration and extracellular matrix interactions; potential diagnostic targets. |
| Animal Modeling | Chemical Inducer | Streptozotocin (STZ) [1] | Induces hyperglycemia in rodent models, replicating the metabolic dysfunction central to DFU development. |

Quantitative Data Synthesis

The following tables consolidate key quantitative findings from recent omics and experimental studies to facilitate comparison and hypothesis generation.

Table 2: Core Biomarker Genes Identified via Machine Learning in DFU Studies

| Gene Symbol | Log2FC Trend in DFU | Proposed Primary Function | Associated Cell Types | Identification Method |
|---|---|---|---|---|
| SAMHD1 | Upregulated | Macrophage modulation; putative quercetin target [1] | Macrophages [1] | WGCNA + RF, LASSO, XGBoost, SVM [1] |
| DPYSL2 | Upregulated | Macrophage & vascular endothelial cell modulation [1] | Macrophages, Vascular Endothelial Cells [1] | WGCNA + RF, LASSO, XGBoost, SVM [1] |
| SCUBE1 | Downregulated (post-cure) | Immune regulation; inflammatory response [4] | NK Cells, Macrophages [4] | LASSO + SVM-RFE [4] |
| RNF103-CHMP3 | Downregulated (post-cure) | Extracellular interactions; vesicular trafficking [4] | NK Cells, Macrophages [4] | LASSO + SVM-RFE [4] |

Table 3: Key Pathophysiological Pathways and Their Molecular Mediators in DFU

| Pathway / Process | Key Molecular Mediators | Experimental Evidence | Functional Consequence |
|---|---|---|---|
| Polyol Pathway | Aldose Reductase, Sorbitol Dehydrogenase, Fructose [2] [3] | Increased flux in hyperglycemia; NADPH depletion; oxidative stress [2] [3] | Neuronal damage, impaired nerve conduction [2] [3] |
| PKC Activation | Diacylglycerol (DAG), PKC-β, PKC-δ isoforms [2] | Increased DAG in vascular tissue; altered gene expression [2] | Vascular dysfunction, reduced blood flow, angiogenesis defects [2] |
| AGE/RAGE Signaling | Advanced Glycation End-products (AGEs), RAGE receptor [2] [5] | Binds RAGE, increasing inflammatory mediators and ROS [2] | Sustained inflammation, nerve & vascular damage [2] [5] |
| Immune Cell Dysregulation | Macrophages (M1/M2 imbalance), Neutrophils, IL-17 [1] [2] | Single-cell RNA-seq shows specific expression of core genes in macrophages; impaired phagocytosis [1] [2] | Failure to resolve inflammation, chronic non-healing wounds [1] [2] |

FAQ: What are the key host inflammatory markers for differentiating osteomyelitis from soft tissue infection, and what are their diagnostic thresholds?

The Erythrocyte Sedimentation Rate (ESR) is a central host inflammatory marker for this differentiation. A recent meta-analysis provides clear quantitative thresholds for its use in diagnostic workflows, particularly for diabetic foot osteomyelitis (DFO).

Table 1: Diagnostic Performance of ESR for Diabetic Foot Osteomyelitis

| ESR Cutoff Value (mm/h) | Sensitivity | Specificity | Recommended Use Case |
|---|---|---|---|
| 51.6 | 80% | 67% | Optimal pooled cutoff for preliminary screening [6] |
| 70.0 | 61% | 83% | Higher specificity; recommended by IWGDF for screening DFO [6] |

Troubleshooting Guide: If your experimental results using these thresholds show high sensitivity but low specificity, consider the following:

- Patient Factors: Be aware that ESR is a nonspecific marker. Conditions like rheumatoid arthritis or other inflammatory states can cause elevated ESR independent of infection [7].

- Integrated Diagnosis: ESR should not be used in isolation. Combine quantitative ESR data with other evidence, such as a positive "probe-to-bone" test or imaging findings, to increase diagnostic certainty [8] [7].
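To check how a published cutoff behaves in your own cohort, recompute sensitivity, specificity, and the Youden index (J = sensitivity + specificity - 1). The sketch below treats an ESR at or above the cutoff as positive (an assumed convention at the boundary) and uses a hypothetical eight-patient cohort. For the pooled operating points in the table, J is 0.47 at 51.6 mm/h and 0.44 at 70 mm/h.

```python
def sens_spec(esr_values, has_dfo, cutoff):
    """Sensitivity and specificity when ESR >= cutoff (mm/h) is called positive."""
    tp = fn = tn = fp = 0
    for esr, dfo in zip(esr_values, has_dfo):
        positive = esr >= cutoff
        if dfo and positive:
            tp += 1
        elif dfo:
            fn += 1
        elif positive:
            fp += 1
        else:
            tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("cohort needs both DFO-positive and DFO-negative patients")
    return tp / (tp + fn), tn / (tn + fp)


def youden_index(sensitivity, specificity):
    """Youden's J statistic: sensitivity + specificity - 1."""
    return sensitivity + specificity - 1


# Published pooled operating points from the table above.
for cutoff, se, sp in [(51.6, 0.80, 0.67), (70.0, 0.61, 0.83)]:
    print(f"{cutoff} mm/h: J = {youden_index(se, sp):.2f}")

# Hypothetical local cohort: ESR (mm/h) and reference-standard DFO status.
esr = [28, 40, 49, 55, 62, 71, 88, 102]
dfo = [False, False, True, False, True, True, False, True]
for cutoff in (51.6, 70.0):
    se, sp = sens_spec(esr, dfo, cutoff)
    print(f"local cohort at {cutoff} mm/h: sensitivity {se:.2f}, specificity {sp:.2f}")
```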

FAQ: What are the primary bacterial immune evasion strategies specific to osteomyelitis?

The pathogen Staphylococcus aureus utilizes distinct molecular mechanisms to persist in bone that are less relevant in soft tissue infections. Understanding these is key to developing targeted diagnostics and therapies.

Key Mechanisms:

- Biofilm Formation: Bacteria encase themselves in a protective matrix on bone and implants, conferring resistance to antibiotics and immune cells [9].

- Intracellular Persistence: S. aureus can survive inside non-professional phagocytes, such as osteoblasts, creating a protected reservoir [10] [9].

- Invasion of the Osteocyte Lacuno-Canalicular Network (OLCN): This is a critical differentiator. S. aureus can invade the microscopic canalicular network that houses osteocytes, using the dense bone matrix as a physical barrier to evade the host immune system. This mechanism explains the persistent and recurrent nature of chronic osteomyelitis [9].

FAQ: How does the host's metabolic immune response differ between soft tissue and bone infections?

Single-cell RNA sequencing (scRNA-seq) studies reveal that metabolic reprogramming of immune and structural cells is a hallmark of non-healing diabetic foot ulcers (DFUs) and is central to the pathogenesis of osteomyelitis.

Key Metabolic Signatures: Research identifies three interconnected metabolic states in DFUs: hypoxia, glycolysis, and lactylation [11]. In M1 macrophages, the shift to glycolysis leads to lactate accumulation, which drives histone lactylation and thereby regulates pro-inflammatory gene expression [11] [12].

Differentiating Workflow: The diagram below outlines a protocol to characterize these metabolic differences in patient samples.

Experimental Protocol: Metabolic State Characterization via scRNA-seq

- Sample Acquisition & Preparation: Obtain foot skin samples from healthy non-diabetic individuals and patients with non-healing DFUs (with and without suspected osteomyelitis). Process tissue into single-cell suspensions [11].

- scRNA-seq Library Construction: Use the 10x Genomics platform for droplet-based single-cell capture. Generate raw count matrices for all samples [11].

- Quality Control & Normalization: Filter out cells with <200 or >6,000 detected genes or >10% mitochondrial gene content. Log-normalize the filtered gene-cell matrix [11].

- Data Integration & Clustering: Perform PCA-based dimensionality reduction. Use the Louvain algorithm for cell clustering and annotate cell types (e.g., keratinocytes, fibroblasts, macrophages) using canonical markers [11].

- Metabolic Signature Scoring: Calculate single-cell enrichment scores for hypoxia, glycolysis, and lactylation gene sets using the AUCell R package (v1.28.0) [11].

- Downstream Analysis:

- Perform KEGG/GO enrichment analysis to identify dysregulated pathways.

- Conduct pseudotime trajectory analysis using Monocle3 (v1.3.7) to map metabolic shifts during disease progression [11].
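The QC thresholds and log-normalization step above can be illustrated without a single-cell framework. The sketch assumes per-cell counts are held as gene-to-UMI dictionaries and that mitochondrial genes carry the human "MT-" prefix. It mirrors the description of Seurat's LogNormalize method (counts scaled to 10,000 per cell, then the natural log of 1 + x) but is not Seurat's implementation, which operates on sparse matrices.

```python
import math


def passes_qc(counts, min_genes=200, max_genes=6000, max_mito_pct=10.0):
    """Keep cells with 200-6,000 detected genes and <= 10% mitochondrial UMIs.

    `counts` maps gene symbol -> UMI count for one cell; mitochondrial genes are
    assumed to use the human "MT-" prefix.
    """
    total = sum(counts.values())
    if total == 0:
        return False
    detected = sum(1 for c in counts.values() if c > 0)
    mito = sum(c for gene, c in counts.items() if gene.upper().startswith("MT-"))
    mito_pct = 100.0 * mito / total
    return min_genes <= detected <= max_genes and mito_pct <= max_mito_pct


def log_normalize(counts, scale_factor=10_000):
    """Scale counts to `scale_factor` per cell, then apply natural log(1 + x)."""
    total = sum(counts.values())
    return {gene: math.log1p(c / total * scale_factor) for gene, c in counts.items()}


# Synthetic cells for illustration only.
good_cell = {f"GENE{i}": 5 for i in range(300)}
good_cell["MT-CO1"] = 50  # about 3% mitochondrial
sparse_cell = {f"GENE{i}": 1 for i in range(150)}  # too few genes detected
stressed_cell = {f"GENE{i}": 1 for i in range(300)}
stressed_cell["MT-ND1"] = 100  # 25% mitochondrial

cells = {"good": good_cell, "sparse": sparse_cell, "stressed": stressed_cell}
kept = {name: log_normalize(c) for name, c in cells.items() if passes_qc(c)}
print(sorted(kept))  # ['good']
```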

FAQ: How can I model the macrophage polarization imbalance that contributes to bone destruction in osteomyelitis?

The persistence of pro-inflammatory M1 macrophages over anti-inflammatory M2 macrophages drives chronic inflammation and bone resorption in osteomyelitis. This polarization is directly regulated by mitochondrial metabolism [12].

Molecular Mechanisms:

- M1 Macrophages (Pro-inflammatory): Rely on glycolysis. HIF-1α upregulates GLUT1 and hexokinase 2. A disrupted TCA cycle leads to accumulation of succinate, which stabilizes HIF-1α and promotes ROS production, creating a pro-inflammatory feedback loop [12].

- M2 Macrophages (Anti-inflammatory): Rely on oxidative phosphorylation (OXPHOS) and fatty acid oxidation (FAO) for energy. An intact TCA cycle and glutamine metabolism support anti-inflammatory gene expression [12].

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents for Studying Metabolic Regulation in Osteomyelitis

| Reagent / Assay | Function in Experiment | Key Molecular Targets |
|---|---|---|
| AUCell R Package | Calculating single-cell metabolic enrichment scores from scRNA-seq data [11] | Hypoxia, glycolysis, and lactylation gene sets |
| Seurat R Package | scRNA-seq data processing, normalization, clustering, and cell type annotation [11] | Canonical cell markers (e.g., CD86 for M1, CD163 for M2) |
| Monocle3 | Pseudotime trajectory analysis to model cellular state transitions [11] | Gene expression changes over inferred time |
| Anti-HIF-1α Antibody | Detecting the key regulator of M1 glycolysis and inflammation (e.g., by Western blot or IHC/IF) [12] | Hypoxia-Inducible Factor 1-alpha (HIF-1α) |
| 2-Deoxy-D-Glucose (2-DG) | Glycolysis inhibition to shift polarization from M1 to M2 [12] | Hexokinase |
| Recombinant IL-4 | Polarizing macrophages toward M2 phenotype in vitro [12] | IL-4 Receptor |
| RANKL | Stimulating osteoclast differentiation in co-culture models [12] | Receptor Activator of NF-κB Ligand |

Frequently Asked Questions (FAQs) for Diagnostic Challenges in Diabetic Foot Osteomyelitis Research

FAQ 1: What are the specific clinical limitations of percutaneous bone biopsy for diagnosing diabetic foot osteomyelitis (DFO)?

While bone biopsy with culture is the reference standard for identifying causative pathogens in DFO, its clinical application faces several limitations [13] [14]:

- Invasiveness and Patient Risk: Because a needle must be inserted into the bone, the procedure can cause pain and carries a risk of infection, bleeding, or injury to surrounding structures; it may be contraindicated in patients with certain bleeding disorders [15].

- Feasibility and Access: The procedure is perceived as cumbersome and too invasive for widespread routine use, which limits its application in clinical practice [13] [14].

- Diagnostic Delays: Final culture results can take five to seven days, delaying the initiation of targeted therapy [15].

FAQ 2: How does the microbiological concordance between deep tissue cultures and bone biopsy impact diagnostic reliability?

A 2025 comparative diagnostic study found only moderate concordance between deep tissue and bone biopsy cultures [13].

- The overall concordance rate was 51.8%.

- Concordance was highest for Staphylococcus aureus (44.4%) but substantially lower for Gram-negative bacteria (31.9%) and other Gram-positive microorganisms (24.2%).

- In 16.5% of cases, bone cultures were positive when deep tissue cultures were negative, indicating a significant rate of potential false negatives if relying solely on deep tissue sampling [13].
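The study's exact counting rules are not reproduced here, so the sketch below assumes one plausible definition: for each patient, every organism isolated from either specimen is counted once as concordant (found in both), tissue-only, or bone-only, and percentages are taken over all such patient-organism pairs. The patient data are hypothetical, and the output is not expected to match the published percentages.

```python
from collections import Counter


def culture_concordance(paired_results, organism=None):
    """Percent of (patient, organism) pairs that are concordant, tissue-only or bone-only.

    `paired_results` maps patient ID -> (tissue_isolates, bone_isolates), each a
    set of organism names. If `organism` is given, only that organism is scored.
    """
    tally = Counter()
    for tissue, bone in paired_results.values():
        if organism is not None:
            tissue, bone = tissue & {organism}, bone & {organism}
        tally["concordant"] += len(tissue & bone)
        tally["tissue_only"] += len(tissue - bone)
        tally["bone_only"] += len(bone - tissue)
    total = sum(tally.values())
    if total == 0:
        return {}
    return {key: 100.0 * tally[key] / total for key in ("concordant", "tissue_only", "bone_only")}


# Hypothetical paired culture results.
pairs = {
    "P1": ({"S. aureus"}, {"S. aureus"}),
    "P2": ({"S. aureus", "E. coli"}, {"S. aureus"}),
    "P3": (set(), {"P. aeruginosa"}),
    "P4": ({"E. faecalis"}, {"E. faecalis", "S. aureus"}),
}
print(culture_concordance(pairs))
print(culture_concordance(pairs, organism="S. aureus"))
```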

FAQ 3: What are the key specificity challenges with MRI in diagnosing DFO?

MRI, while excellent for detecting bone marrow edema, faces specificity challenges because it cannot always distinguish between infection (osteomyelitis) and other non-infectious inflammatory conditions that cause similar fluid shifts and edema, such as Charcot neuro-osteoarthropathy, recent surgery, or traumatic fractures [14].

FAQ 4: What are the analytical limitations of molecular diagnostics like PCR and Whole Genome Sequencing (WGS) for pathogen detection?

Molecular methods, despite their speed, can have a higher limit of detection (LOD) for heteroresistant infections (mixed drug-susceptible and resistant populations) compared to phenotypic culture methods [16].

- A 2024 study on Mycobacterium tuberculosis heteroresistance demonstrated that the agar proportion method (a phenotypic gold standard) could detect a resistant minority population at a proportion of 1%.

- In comparison, the LOD was 10% for both WGS and GeneXpert MTB/RIF Ultra, and 60% for the standard GeneXpert MTB/RIF assay [16]. This indicates that low levels of resistant pathogens might be missed by molecular assays.

FAQ 5: How is artificial intelligence (AI) being applied to address diagnostic challenges in diabetic foot care?

AI and machine learning are showing promise in several areas to complement existing diagnostics [17] [18] [19]:

- Automated Wound Assessment: Deep learning models can segment and classify tissues in diabetic foot ulcer images (e.g., granulation, necrosis, gangrene) with high accuracy, aiding in standardized Wagner grading [18].

- Risk Stratification: Machine learning models analyze biomechanical data from wearable insoles or thermal images to stratify patients based on ulceration risk, enabling early intervention [19].

- Improving Specificity: AI algorithms are being developed to integrate multiple data types (e.g., images, biomechanics, biomarkers) to improve diagnostic specificity beyond what a single modality like MRI can achieve alone [17].

Troubleshooting Guides

Problem 1: Low Concordance Between Deep Tissue and Bone Biopsy Cultures

| Potential Cause | Solution | Rationale |
|---|---|---|
| Polymicrobial Infection | Use molecular methods (e.g., 16S rRNA PCR) on the bone sample to identify fastidious or difficult-to-culture organisms missed by standard cultures. | Deep tissue cultures may not accurately represent the true pathogen profile within the bone, particularly for Gram-negative and polymicrobial infections [13]. |
| Sampling Through Ulcer Bed | Ensure percutaneous bone biopsy is obtained through aseptic skin adjacent to the ulcer, not through the ulcer bed itself. | Sampling through the ulcer bed can capture colonizing bacteria that are not the true causative pathogens of the osteomyelitis, leading to misleading results [14]. |
| Prior Antibiotic Use | Obtain cultures before initiating or after a sufficient washout period of antibiotic therapy. | Even sub-therapeutic antibiotic levels can suppress bacterial growth in cultures, yielding false-negative results. |

Problem 2: Differentiating Osteomyelitis from Charcot Neuro-osteoarthropathy on MRI

| Potential Cause | Solution | Rationale |

|---|---|---|

| Overlapping Imaging Features | Correlate MRI findings with clinical signs (e.g., presence of an open wound, probing to bone, local inflammation) and serologic markers (e.g., ESR, CRP). | Both conditions can present with bone marrow edema, joint effusions, and soft tissue swelling on MRI. Clinical context is essential for accurate interpretation [14]. |

| Lack of Specific Sequences | Utilize advanced sequences like Diffusion-Weighted Imaging (DWI) and Dynamic Contrast-Enhanced (DCE) perfusion. | Research suggests these sequences may help differentiate infected bone from neuropathic edema by assessing tissue cellularity and vascularity, though they are not yet universally standardized for this purpose. |

Problem 3: Molecular Diagnostic Results Do Not Align with Phenotypic Culture/Susceptibility

| Potential Cause | Solution | Rationale |
|---|---|---|
| Heteroresistance | Use a phenotypic reference method (e.g., agar proportion method) to confirm the presence of a resistant subpopulation. | Molecular tests may fail to detect resistant subpopulations that are below their limit of detection (LOD), leading to a discrepancy where culture shows resistance but molecular methods indicate susceptibility [16]. |
| Silent Mutations | Perform functional assays to confirm the phenotypic impact of any genetic mutations identified. | Not all genetic mutations detected by sequencing confer an actual change in antibiotic susceptibility. |
| Contamination | Strictly adhere to sterile sampling techniques and include negative controls in molecular workflows. | Contaminating DNA during sample collection or processing can lead to false-positive results in highly sensitive molecular assays. |

Table 1: Microbiological Concordance Between Deep Tissue and Bone Biopsy Cultures in DFO Diagnosis (n=107) [13]

| Metric | Result |
|---|---|
| Overall Concordance | 51.8% |
| Concordance for Staphylococcus aureus | 44.4% |
| Concordance for Gram-negative bacteria | 31.9% |
| Concordance for other Gram-positive microorganisms | 24.2% |
| Pathogens isolated only from deep tissue | 21.2% |
| Pathogens isolated only from bone (missed by deep tissue) | 16.5% |

Table 2: Limit of Detection (LOD) Comparison for Heteroresistance Identification [16]

| Diagnostic Method | Limit of Detection (LOD) for Minority Resistant Population |
|---|---|
| Agar Proportion Method (Phenotypic Gold Standard) | 1% |
| Whole Genome Sequencing (WGS) | 10% |
| GeneXpert MTB/RIF Ultra | 10% |
| GeneXpert MTB/RIF | 60% |

Table 3: Performance of Deep Learning Models in DFU Image Segmentation and Classification [18]

| Model | Mean Intersection over Union (IoU) | Wagner Grade Classification Accuracy | Area Under the Curve (AUC) |
|---|---|---|---|
| Mask2Former | 65% | 91.85% | 0.9429 |
| Deeplabv3plus | 62% | Not Reported | Not Reported |
| Swin-Transformer | 52% | Not Reported | Not Reported |

Experimental Protocols

Protocol 1: Percutaneous Bone Biopsy for Microbiological Culture in DFO

This protocol is based on the methodology described in the BeBoP randomized controlled trial [14].

1. Pre-Procedure Preparation:

- Imaging Guidance: Identify the site of infected bone using MRI, FDG-PET/CT, or plain X-ray.

- Patient Assessment: Check blood coagulation parameters and adjust anticoagulant medication if necessary. Obtain informed consent [15].

- Anesthesia: Use local anesthesia at the biopsy site. Conscious sedation may be considered based on patient need.

2. Biopsy Procedure:

- Aseptic Technique: Sterilize a wide area of skin surrounding the biopsy site.

- Biopsy Needle: Use an 11-gauge or similar bone biopsy needle.

- Sampling Path: Insert the needle through intact, anesthetized skin adjacent to the ulcer. Do not pass the needle through the ulcer bed to avoid contamination with colonizing flora [14].

- Sample Collection: Obtain a core sample of the bone lesion.

- Sample Handling: Aseptically divide the bone sample. Place one portion in a sterile container for microbiological culture and another in a separate container for molecular analysis (if planned).

3. Post-Procedure Care:

- Apply pressure to the site to prevent bleeding and cover with a sterile bandage.

- Monitor the patient for several hours for potential complications.

- Transport the sample immediately to the microbiology laboratory.

Protocol 2: Deep Learning-Based Segmentation of Diabetic Foot Ulcer Images

This protocol is adapted from a 2025 study that achieved a mean IoU of 65% using the Mask2Former model [18].

1. Data Curation and Preprocessing:

- Image Collection: Collect a dataset of DFU images from patient records. Ensure images have sufficient resolution and clarity.

- Expert Annotation: Have experienced clinicians manually annotate (label) the images using software like Labelme. Annotations should include:

- Ulcer boundary

- Periwound erythema

- Internal wound components: granulation tissue, necrotic tissue, exposed tendon, exposed bone, gangrene.

- Data Standardization: Resize all images to a uniform dimension (e.g., 1024x1024 pixels) using bilinear interpolation.

- Data Augmentation: Apply transformations to increase dataset size and model robustness, including:

- Brightness and contrast adjustment

- Horizontal and vertical flipping

- Image transposition

2. Model Training and Validation:

- Model Selection: Choose a segmentation architecture such as Mask2Former, Deeplabv3plus, or Swin-Transformer. Pre-trained weights (e.g., from ImageNet) are recommended as a starting point.

- Dataset Splitting: Randomly split the annotated dataset into a training set (e.g., 80%) and a test set (e.g., 20%), and hold out part of the training set as a validation set.

- Model Fine-Tuning: Train (fine-tune) the selected model on the DFU training set. Monitor the loss and accuracy on the training and validation sets to detect overfitting, and keep the test set untouched until the final evaluation.

- Performance Evaluation: Use the held-out test set to evaluate the final model's performance using metrics such as Intersection over Union (IoU), Dice coefficient, accuracy, and Area Under the Curve (AUC).
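Most segmentation frameworks compute these metrics internally; the dependency-free sketch below is a reference for spot-checking values on small masks. It assumes masks are nested lists (0/1 for a single class, or integer class labels per pixel), scores two empty masks as a perfect match, and averages IoU only over classes present in either map. These conventions are assumptions; how the cited study averaged its mean IoU (per class, per image, or both) is not specified here.

```python
def iou_and_dice(pred, truth):
    """IoU and Dice for two same-shaped binary masks (lists of rows of 0/1).

    Two empty masks are scored as a perfect match (1.0, 1.0) by convention.
    """
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    inter = union = pred_sum = truth_sum = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            inter += p & t
            union += p | t
            pred_sum += p
            truth_sum += t
    if union == 0:
        return 1.0, 1.0
    return inter / union, 2 * inter / (pred_sum + truth_sum)


def mean_iou(pred_labels, true_labels, classes):
    """Mean IoU over classes present in either label map (integer class per pixel)."""
    scores = []
    for c in classes:
        pm = [[int(v == c) for v in row] for row in pred_labels]
        tm = [[int(v == c) for v in row] for row in true_labels]
        if any(map(any, pm)) or any(map(any, tm)):
            scores.append(iou_and_dice(pm, tm)[0])
    return sum(scores) / len(scores) if scores else float("nan")


# Hypothetical 2x3 label maps: 0 = background, 1 = granulation, 2 = necrosis.
pred_labels = [[0, 1, 1], [2, 2, 0]]
true_labels = [[0, 1, 0], [2, 2, 2]]
print(round(mean_iou(pred_labels, true_labels, classes=[0, 1, 2]), 3))  # 0.5
```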

Diagnostic and Research Workflow Visualization

DFO Diagnostic Pathway

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Research Materials for DFO Diagnostic Studies

| Item | Function/Application in Research |
|---|---|
| 11-Gauge Bone Biopsy Needle | For percutaneous collection of bone specimens for both microbiological and molecular analysis [14]. |
| Mannitol Salt Agar (MSA) | A selective growth medium used for the isolation of Staphylococcus aureus from clinical samples [20]. |
| HiCrome-Rapid MRSA Agar | A chromogenic medium for the selective and differential identification of methicillin-resistant Staphylococcus aureus (MRSA) [20]. |
| Primers for mecA and nuc genes | Essential reagents for multiplex PCR to genetically confirm the presence of S. aureus and its methicillin resistance gene [20]. |
| Labelme Software | An open-source tool for manual annotation and segmentation of diabetic foot ulcer images to create ground-truth datasets for AI model training [18]. |
| Pre-trained Deep Learning Models (e.g., Mask2Former) | Neural network architectures with weights pre-trained on large public datasets (e.g., ImageNet), which can be fine-tuned for specific medical image segmentation tasks, reducing required data and training time [18]. |
| Multiplex PCR Panels | Molecular diagnostic kits capable of simultaneously detecting a syndromic panel of common bacterial pathogens and antibiotic resistance genes from a single sample [21]. |

Diabetic foot osteomyelitis (DFO) is a common and severe complication of diabetic foot infections, present in approximately 20% of patients with diabetic foot infections and 50% of those with severe infections [6]. Timely and accurate diagnosis is critical for preventing catastrophic outcomes, including lower-limb amputation. When optimizing molecular diagnostics for diabetic foot research, conventional clinical tools such as the Probe-to-Bone (PTB) test and Erythrocyte Sedimentation Rate (ESR) measurement remain foundational. They serve as rapid, accessible first-line tests that can guide the need for more advanced (and often more costly and invasive) molecular and imaging diagnostics. This technical support document provides researchers and drug development professionals with a rigorous, evidence-based framework for implementing and evaluating these conventional tools within their experimental and diagnostic workflows.

Diagnostic Performance at a Glance

The following tables summarize the aggregated diagnostic accuracy data for the Probe-to-Bone test and Erythrocyte Sedimentation Rate, providing a quick reference for expected performance metrics.

Table 1: Diagnostic Accuracy of the Probe-to-Bone Test for Diabetic Foot Osteomyelitis

| Metric | Value (95% CI where reported) | Study Context |
|---|---|---|
| Sensitivity | 0.87 (0.75 - 0.93) [22] | Systematic Review & Meta-Analysis |
| Specificity | 0.83 (0.65 - 0.93) [22] | Systematic Review & Meta-Analysis |
| Positive Predictive Value | 0.57 [23] | Cohort with 12% OM prevalence |
| Negative Predictive Value | 0.98 [23] | Cohort with 12% OM prevalence |
| Positive Likelihood Ratio | 4.41 [24] | Validation against bone histology |
| Negative Likelihood Ratio | 0.02 [24] | Validation against bone histology |

Table 2: Diagnostic Accuracy of ESR for Diabetic Foot Osteomyelitis

| Metric | Value | Context / Model |
|---|---|---|
| Area Under the Curve (AUC) | 0.71 [6] | Hierarchical Summary ROC (HSROC) Model |
| Summary Sensitivity | 0.76 [6] | HSROC Model |
| Summary Specificity | 0.73 [6] | HSROC Model |
| Optimal Pooled Cutoff | 51.6 mm/h [6] | DICS Model (Youden Index) |
| Sensitivity at 51.6 mm/h | 0.80 [6] | DICS Model |
| Specificity at 51.6 mm/h | 0.67 [6] | DICS Model |
| Sensitivity at 70 mm/h | 0.61 [6] | GLMM Prediction |
| Specificity at 70 mm/h | 0.83 [6] | GLMM Prediction |
| Accuracy Designation | "Fair" [25] | ROC AUC 0.70 (95% CI: 0.62-0.79) |

Detailed Experimental Protocols

Probe-to-Bone Test Protocol

The following workflow outlines the standardized procedure for performing and validating the Probe-to-Bone test, based on a prospective study using bone histology as the reference standard [24].

Objective: To clinically diagnose osteomyelitis in a diabetic foot ulcer by detecting exposed bone [24].

Materials:

- Sterile, blunt metal probe (e.g., surgical instrument)

- Sterile saline and gauze

- Personal protective equipment (PPE)

Step-by-Step Procedure:

- Patient Preparation: Position the patient comfortably. Explain the procedure.

- Ulcer Cleaning: Briefly clean the ulcer surface with sterile saline and gauze to remove debris and exudate [24].

- Probing Technique: Using the sterile blunt probe, gently explore the base of the ulcer and any associated sinus tracts. Apply minimal pressure [24].

- Interpretation: The test is considered positive if a hard, gritty surface that does not yield to the probe (presumed to be bone) is palpated. The test is negative if no such hard surface is encountered [24] [23].

- Documentation: Record the finding in the patient's record. A positive test should trigger a referral for confirmatory testing.

Key Validation Data: In a study of 132 wounds with a high prevalence (79.5%) of osteomyelitis confirmed by bone histology, the PTB test demonstrated an efficiency of 94%, sensitivity of 98%, and specificity of 78% [24]. A separate meta-analysis reported pooled sensitivity and specificity of 0.87 and 0.83, respectively [22].
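The "efficiency" reported above is overall accuracy. Unlike the likelihood ratios, accuracy depends on prevalence as well as on sensitivity and specificity. The minimal Python sketch below uses only the rounded values quoted in this section and shows how both quantities follow from them. Small differences from the published likelihood ratios (4.41 and 0.02) are expected because the inputs are rounded.

```python
def accuracy(sensitivity, specificity, prevalence):
    """Overall accuracy ("efficiency"): prevalence-weighted average of sensitivity and specificity."""
    return prevalence * sensitivity + (1 - prevalence) * specificity


def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-); neither depends on prevalence."""
    lr_pos = sensitivity / (1 - specificity)
    lr_neg = (1 - sensitivity) / specificity
    return lr_pos, lr_neg


# Rounded values from the histology-validated PTB cohort (prevalence 79.5%) [24]
acc = accuracy(0.98, 0.78, 0.795)
lr_pos, lr_neg = likelihood_ratios(0.98, 0.78)
print(f"accuracy={acc:.3f}  LR+={lr_pos:.2f}  LR-={lr_neg:.3f}")
```

With these inputs the accuracy comes out at about 0.94, matching the reported 94%. The likelihood ratios come out at about 4.45 and 0.026.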

ESR Measurement Protocol for DFO Suspicion

The diagram below illustrates the role of ESR in the diagnostic pathway for suspected diabetic foot osteomyelitis.

Objective: To measure the erythrocyte sedimentation rate as an inflammatory marker to aid in the diagnosis of diabetic foot osteomyelitis [25] [6].

Materials:

- Venous blood collection kit (needle, tourniquet, etc.)

- Vials containing Ethylenediaminetetraacetic acid (EDTA) anticoagulant

- Equipment for ESR analysis (e.g., automated analyzer using the modified Westergren method) [25]

Step-by-Step Procedure:

- Blood Collection: Draw a 6 mL peripheral blood sample under aseptic conditions and transfer it to a vial containing EDTA anticoagulant [25].

- Sample Analysis: Analyze the blood sample using a validated method. The modified Westergren method is commonly used and reported [25].

- Interpretation: Interpret the result against predefined cutoffs. A recent meta-analysis recommends a cutoff of 51.6 mm/h as optimal for screening, with a sensitivity of 80% and specificity of 67% [6]. The traditional cutoff of 70 mm/h offers higher specificity (83%) but lower sensitivity (61%) [6].

- Contextualization: Always interpret the ESR value in the context of the clinical presentation and other diagnostic tests. An elevated ESR should increase the index of suspicion for DFO and warrant further investigation.

Key Validation Data: A 2025 meta-analysis of 12 studies (1,674 subjects) determined the summary AUC for ESR in diagnosing DFO to be 0.71, with a sensitivity of 0.76 and specificity of 0.73 [6]. Another cross-sectional study reported an AUC of 0.70 for ESR, classifying its accuracy as "fair" [25].

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Reagents and Materials for Diagnostic Validation Studies

| Item | Function / Application in Research | Specification / Standardization |
|---|---|---|
| Sterile Surgical Probe | Performing the PTB test to detect bone exposure in ulcers. | Blunt metal instrument; sterilization between uses is critical [24]. |
| EDTA Blood Collection Tubes | Anticoagulation of venous blood samples for subsequent ESR analysis. | Standard 6 mL draw volume [25]. |
| ESR Analyzer & Kits | Quantifying the erythrocyte sedimentation rate. | Adherence to standardized methods (e.g., modified Westergren) [25]. |
| Bone Biopsy Instrumentation | Obtaining bone specimens for histopathological analysis (reference standard). | Requires surgical intervention; samples preserved in 10% buffered formalin [24]. |
| Semmes-Weinstein Monofilament | Assessing peripheral neuropathy, a key risk factor for DFU and DFO. | 5.07 / 10 gram monofilament for standardized testing [24]. |
| Microbiology Transport Medium | Transporting soft tissue and bone specimens for microbial culture. | Sterile vessel with transport medium (e.g., Copan Innovation) [24]. |

Frequently Asked Questions (FAQs) & Troubleshooting

Q1: The PTB test shows high sensitivity in studies, but my clinical team finds it has a low positive predictive value. What is the explanation for this discrepancy?

A: This is a classic example of the impact of disease prevalence on predictive values. The PTB test's positive predictive value (PPV) is highly dependent on the underlying prevalence of osteomyelitis in the studied population [23]. In a population with a low prevalence of osteomyelitis (e.g., 12%), even a highly specific test will yield a lower PPV. In the referenced study, with a 12% prevalence, the PPV was 57%, meaning almost half of the positive tests were false positives. However, the negative predictive value (NPV) remained very high (98%), making it an excellent "rule-out" tool [23]. In high-prevalence settings (e.g., >70%), the PPV rises significantly [24] [22].
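The prevalence effect follows directly from Bayes' theorem. The sketch below is illustrative only: it reuses the pooled sensitivity and specificity from Table 1 as inputs, so it does not reproduce the cohort in [23]. That cohort's own specificity differed, which is why its reported PPV was 0.57. What the sketch does show is the trend: PPV climbs steeply with prevalence while NPV falls.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Bayes' theorem: return (PPV, NPV) for a given pre-test prevalence."""
    tp = sensitivity * prevalence                # true positives (as proportions)
    fp = (1 - specificity) * (1 - prevalence)    # false positives
    tn = specificity * (1 - prevalence)          # true negatives
    fn = (1 - sensitivity) * prevalence          # false negatives
    return tp / (tp + fp), tn / (tn + fn)


# Illustrative inputs: pooled PTB sensitivity/specificity from Table 1 [22]
for prev in (0.12, 0.50, 0.795):
    ppv, npv = predictive_values(0.87, 0.83, prev)
    print(f"prevalence={prev:.1%}  PPV={ppv:.2f}  NPV={npv:.2f}")
```

At 12% prevalence this gives a PPV of about 0.41 with an NPV near 0.98. At 79.5% prevalence it gives a PPV of about 0.95 with an NPV of about 0.62.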

Q2: When validating ESR in our patient cohort, what is the single most evidence-based cutoff value we should use to define a positive test for osteomyelitis?

A: A 2025 systematic review and meta-analysis addressed this question directly. It used advanced modeling (the DICS model) to calculate an optimal pooled cutoff. The study recommends 51.6 mm/h as the optimal cutoff, balancing sensitivity (80%) and specificity (67%) for screening purposes [6]. If your research priority is to maximize specificity (e.g., for patient enrollment in a clinical trial), the traditional cutoff of 70 mm/h (specificity 83%) may be more appropriate, at the cost of lower sensitivity (61%) [6].
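Youden's J (sensitivity + specificity - 1) is the criterion named for the pooled cutoff in Table 2. The sketch below scores the two reported operating points and finds a Youden-optimal cutoff in a single cohort. This is a simple per-cohort search, not the DICS pooling model used in [6]. The `best_cutoff` helper and its toy data are hypothetical, and the helper assumes both outcome classes are present.

```python
def youden_j(sensitivity, specificity):
    """Youden's J = sensitivity + specificity - 1 (0 = uninformative, 1 = perfect)."""
    return sensitivity + specificity - 1


def best_cutoff(values, labels):
    """Return (cutoff, J) maximizing Youden's J; a result is positive if value >= cutoff.

    values: ESR results (mm/h); labels: 1 = osteomyelitis by the reference standard, 0 = not.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best = (None, -1.0)
    for cut in sorted(set(values)):
        tp = sum(1 for v, y in zip(values, labels) if v >= cut and y == 1)
        tn = sum(1 for v, y in zip(values, labels) if v < cut and y == 0)
        j = youden_j(tp / n_pos, tn / n_neg)
        if j > best[1]:  # strict ">" keeps the lowest cutoff among ties
            best = (cut, j)
    return best


# Operating points reported in the meta-analysis [6]
print(round(youden_j(0.80, 0.67), 2))  # 51.6 mm/h -> 0.47
print(round(youden_j(0.61, 0.83), 2))  # 70 mm/h   -> 0.44
```

The higher J at 51.6 mm/h reflects the balanced screening trade-off. Moving to 70 mm/h buys specificity at a larger cost in sensitivity.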

Q3: How does the diagnostic accuracy of ESR compare to C-Reactive Protein (CRP) for detecting DFO?

A: Both are acute-phase reactants with modest accuracy for DFO. In direct comparison, ESR has performed slightly better. One cross-sectional study found an AUC of 0.70 ("fair" accuracy) for ESR versus 0.67 ("poor" accuracy) for CRP. The same study reported an optimal CRP cutoff of 35 mg/L, with a sensitivity of 76% and specificity of 55% [25]. Because CRP rises and falls more rapidly than ESR, it may be more useful for monitoring treatment response than for initial diagnosis.
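An AUC such as 0.70 has a concrete meaning. It is the probability that a randomly chosen osteomyelitis case scores higher than a randomly chosen non-osteomyelitis case (the Mann-Whitney formulation). The minimal sketch below uses hypothetical ESR values. Formally comparing two correlated AUCs measured on the same patients (e.g., ESR vs. CRP) usually calls for DeLong's test, which is not shown here.

```python
def auc_mann_whitney(pos_scores, neg_scores):
    """Empirical ROC AUC: P(case score > non-case score), with ties counted as 0.5."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical ESR values (mm/h)
om_cases = [80, 65, 50]        # osteomyelitis by reference standard
soft_tissue_only = [40, 55, 30]
print(round(auc_mann_whitney(om_cases, soft_tissue_only), 3))  # 8 of 9 pairs ordered correctly
```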

Q4: What is the recommended reference standard against which we should validate new molecular diagnostics for DFO?

A: The most definitive reference standard is bone histopathology. The consensus criteria for diagnosis include the presence of inflammatory cell infiltrate (e.g., lymphocytes, plasma cells, neutrophils), bone necrosis, and reactive bone neoformation [24]. Bone culture is also used, often in conjunction with histology. While MRI is a highly sensitive imaging modality, it is still often validated against histology as the ultimate benchmark [24] [6]. Your experimental protocols should clearly state the chosen reference standard.

Technical Troubleshooting Guides

Troubleshooting SCUBE1 Expression Analysis

Problem: Inconsistent SCUBE1 detection in DFU patient samples via qRT-PCR

| Problem Area | Possible Cause | Solution | Verification |
|---|---|---|---|
| Low RNA Quality | Degraded RNA from necrotic DFU tissue | Implement rigorous RNA integrity number (RIN) assessment; accept only samples with RIN >7.0 | Bioanalyzer electropherogram shows intact 18S and 28S ribosomal RNA peaks |
| Low Abundance Target | SCUBE1 significantly downregulated in cured DFU [26] [4] | Use a highly sensitive detection chemistry (TaqMan rather than SYBR Green); increase RNA input to 100 ng per reaction | Standard curve from a dilution series shows amplification efficiency of 90-105% |
| Sample Heterogeneity | Varying degrees of immune cell infiltration in biopsy sites | Standardize biopsy location; use single-cell RNA sequencing for cellular resolution | Single-cell validation shows SCUBE1 expression primarily in NK cells and macrophages [26] |
| Data Normalization | Unstable reference genes in pathological tissue | Validate reference genes (e.g., GAPDH, β-actin) using geNorm or NormFinder; use multiple reference genes | Coefficient of variation <0.2 across sample groups after normalization |
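The efficiency check and the multi-reference normalization in the table above can be computed directly. In the sketch below, efficiency comes from the slope of Ct against log10(input) in a dilution series. Relative expression uses the 2^-ΔΔCt method against the mean Ct of several reference genes; averaging Ct values is equivalent to taking the geometric mean of reference quantities. The sketch assumes near-100% efficiency for every assay, and all Ct values are hypothetical.

```python
def amplification_efficiency(log10_input, ct_values):
    """Efficiency from a standard curve: E = 10**(-1/slope) - 1 (1.0 = 100%)."""
    n = len(log10_input)
    mean_x = sum(log10_input) / n
    mean_y = sum(ct_values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(log10_input, ct_values))
    sxx = sum((x - mean_x) ** 2 for x in log10_input)
    slope = sxy / sxx  # ordinary least-squares slope of Ct vs. log10(input)
    return 10 ** (-1 / slope) - 1


def relative_expression(ct_target, ct_refs, ct_target_cal, ct_refs_cal):
    """Fold change by 2^-ddCt, normalizing to the mean Ct of multiple reference genes.

    Assumes ~100% amplification efficiency for target and reference assays.
    """
    d_ct_sample = ct_target - sum(ct_refs) / len(ct_refs)
    d_ct_cal = ct_target_cal - sum(ct_refs_cal) / len(ct_refs_cal)
    return 2 ** -(d_ct_sample - d_ct_cal)


# Hypothetical 10-fold dilution series (log10 ng input) and Ct values
print(round(amplification_efficiency([0, 1, 2, 3], [30.1, 26.8, 23.4, 20.1]), 3))
# Hypothetical SCUBE1 Ct in a sample vs. a calibrator, two reference genes each
print(relative_expression(28.0, [18.0, 20.0], 26.0, [18.0, 20.0]))  # 0.25
```

For a ten-fold series, a slope near -3.32 corresponds to 100% efficiency. The 90-105% window in the table corresponds to slopes of roughly -3.59 to -3.21.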

Problem: Poor SCUBE1 antibody performance in Western blotting

| Problem Area | Possible Cause | Solution | Verification |
|---|---|---|---|
| Protein Extraction | SCUBE1 is a secreted/membrane-associated protein [27] | Use a detergent-based lysis buffer (e.g., 1% Triton X-100) with mild sonication; include protease inhibitors | Detection of a positive control (recombinant SCUBE1) confirms extraction efficiency |
| Glycosylation Issues | Extensive N-glycosylation in the spacer region alters mobility [27] | Treat samples with PNGase F; expect a mobility shift from ~100 kDa to ~80 kDa | Sharp band appears after deglycosylation |
| Specificity | Non-specific binding in complex wound tissue | Include a peptide competition control; use blocking buffer with 5% BSA + 5% normal serum | Signal abolished with competing peptide |

Troubleshooting RNF103-CHMP3 Functional Studies

Problem: High variability in extracellular interaction assays for RNF103-CHMP3

| Problem Area | Possible Cause | Solution | Verification |
|---|---|---|---|
| Cellular Model | Endogenous expression interferes with overexpression | Use CRISPR/Cas9 knockout cell line before transfection; confirm knockout via sequencing | Western blot shows complete absence of endogenous protein |
| Assay Timing | Dynamic changes during epithelial-mesenchymal transition | Perform time-course experiments (0, 6, 12, 24, 48h) post-wounding in scratch assay | Phase-contrast microscopy shows consistent migration patterns |
| Cell-Cell Communication | Disruption of extracellular matrix interactions [26] | Include ECM components (collagen I, fibronectin) in coating; measure soluble factors in conditioned media | Proteomic analysis of secretome identifies interaction partners |

Frequently Asked Questions (FAQs)

Q1: What is the clinical relevance of SCUBE1 and RNF103-CHMP3 as therapeutic targets in diabetic foot ulcers?

SCUBE1 and RNF103-CHMP3 represent promising therapeutic targets because they were identified as significantly downregulated in patients who were successfully cured of DFU, suggesting their expression patterns are closely linked to healing response [26] [4]. SCUBE1 plays a role in immune regulation, particularly in the body's response to inflammation and infection, which are critical factors in DFU pathogenesis [26]. RNF103-CHMP3 is involved in extracellular interactions, suggesting importance in cellular communication and tissue repair mechanisms [26]. Their discovery offers new theoretical foundations and molecular targets for DFU diagnosis and treatment optimization [26] [4].

Q2: What are the recommended experimental models for studying SCUBE1 function in DFU pathogenesis?

For in vitro studies, primary human keratinocytes or fibroblast cell lines under hyperglycemic conditions (25 mM glucose) can model diabetic skin. Oxidative stress can be induced with H₂O₂ (0.3 mM) to examine SCUBE1's protective role, as demonstrated in granulosa cells [28]. For immune function studies, co-culture systems with macrophages (e.g., THP-1 cells) allow investigation of SCUBE1's role in immune cell infiltration [26]. For in vivo work, diabetic mouse models (e.g., db/db mice) with excisional wounds are widely used. Single-cell RNA sequencing of wound tissue can pinpoint the cellular sources of SCUBE1 expression, which has been localized to NK cells and macrophages in DFU [26].

Q3: How does RNF103-CHMP3 influence extracellular interactions in the DFU microenvironment?

While the precise mechanisms are still under investigation, RNF103-CHMP3 has been associated with extracellular interactions that are crucial for proper cellular communication during wound healing [26]. As a protein potentially involved in endosomal sorting and membrane trafficking (inferred from the CHMP3 domain), it may regulate the secretion of extracellular matrix components or signaling molecules that facilitate cell-cell communication. In the dysfunctional DFU microenvironment, downregulation of RNF103-CHMP3 may disrupt these critical extracellular interactions, impairing the coordinated cellular responses needed for effective tissue repair [26].

Q4: What computational approaches are available for identifying additional targets like SCUBE1 and RNF103-CHMP3?

SCUBE1 and RNF103-CHMP3 were originally identified by machine learning analysis of transcriptome data from the GEO dataset GSE230426 [26] [4]. This integrated approach combined differential expression analysis (the limma package in R, with thresholds of |logFC| > 1 and p < 0.05) with machine learning algorithms, including LASSO regression and SVM-RFE, for feature selection [26]. Validation in independent datasets (GSE80178, GSE165816) confirmed the findings [26] [4]. Similar workflows can be applied to uncover novel targets in DFU, adding methods such as weighted gene co-expression network analysis (WGCNA) [29] [30] and single-cell RNA sequencing analysis [11].

Q5: What are the key considerations for validating SCUBE1 and RNF103-CHMP3 as diagnostic biomarkers?

- Analytical Validation: Establish reliable detection assays (qRT-PCR, ELISA) with determined precision, accuracy, and sensitivity. Define reference ranges in appropriate control populations [26].

- Clinical Validation: Correlate expression levels with DFU severity (e.g., Wagner grade), healing trajectory, and clinical outcomes in prospective cohorts [26] [4].

- Specificity Assessment: Evaluate expression patterns in other wound etiologies to ensure specificity to DFU pathophysiology.

- Sample Standardization: Standardize sample collection procedures (e.g., biopsy location, RNA stabilization) because DFU tissue is heterogeneous [26].

Research Reagent Solutions

Key Reagents for SCUBE1 and RNF103-CHMP3 Research

| Reagent Category | Specific Product/Assay | Function/Application | Key Considerations |
|---|---|---|---|
| Detection Antibodies | Anti-SCUBE1 (Bioss, bs-9903R) [28] | IHC, WB for protein localization and expression | Validate with peptide competition; note glycosylation state in WB |
| | Anti-RNF103-CHMP3 | IP, IF for protein interaction studies | Confirm specificity in knockout cell lines |
| Recombinant Proteins | rhSCUBE1 (Abnova) [28] | Functional studies (e.g., 5 ng/mL pretreatment) | Test bioactivity in migration/proliferation assays |
| Cell Lines | KGN granulosa cell line [28] | Model for oxidative stress studies | Adapt for DFU research with hyperglycemic conditions |
| | Primary human keratinocytes | Relevant DFU cell type for mechanistic studies | Use early passages (P3-P5) for consistency |
| Animal Models | db/db mice | In vivo wound healing studies | Monitor blood glucose >350 mg/dL before wounding |
| Critical Assays | Single-cell RNA-seq [26] [11] | Cellular resolution of target expression | Process fresh tissue; target 10,000 cells/sample |
| | AUCell analysis [11] | Metabolic state assessment (hypoxia, glycolysis) | Use hallmark gene sets from GSEA |

Experimental Protocols

Transcriptomic Analysis Pipeline for Target Identification

This protocol follows the methodology that successfully identified SCUBE1 and RNF103-CHMP3 [26] [4].

Step 1: Data Acquisition and Preprocessing

- Download DFU transcriptome data from GEO (e.g., GSE230426, GSE80178, GSE165816)

- Perform quality control: check reported RNA integrity (RIN >7.0) for RNA-seq data and the presence of internal controls for arrays

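- Normalize data: RMA for microarray data; for RNA-seq, TPM/FPKM for visualization, with voom-transformed raw counts generally preferred for limma-based differential expression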

- Batch effect correction: Use ComBat or sva package in R [30]

Step 2: Differential Expression Analysis

- Use the limma package in R with thresholds of |logFC| > 1 and adjusted p-value < 0.05 [26]

- Generate volcano plots using ggplot2 package

- Expect roughly 403-500 differentially expressed genes, the range reported in DFU studies [30]; the exact number depends on the dataset and thresholds
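The Step 2 thresholds combine a fold-change cutoff with a false-discovery-rate cutoff. limma's `topTable` already reports Benjamini-Hochberg adjusted p-values (`adj.P.Val`) by default. The sketch below only makes that filter explicit: it implements the BH adjustment in plain Python and applies both thresholds to a results table whose keys mimic topTable's `logFC` and `P.Value` columns. Gene names and values are hypothetical placeholders.

```python
def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values (FDR), returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity of adjusted values
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def filter_degs(table, lfc_cutoff=1.0, fdr_cutoff=0.05):
    """Keep genes with |logFC| > lfc_cutoff and BH-adjusted p < fdr_cutoff."""
    adj = benjamini_hochberg([row["P.Value"] for row in table])
    return [
        row["gene"]
        for row, q in zip(table, adj)
        if abs(row["logFC"]) > lfc_cutoff and q < fdr_cutoff
    ]


# Hypothetical limma-style results
results = [
    {"gene": "GENE_A", "logFC": -2.1, "P.Value": 0.01},
    {"gene": "GENE_B", "logFC": 1.5, "P.Value": 0.04},   # fails FDR after adjustment
    {"gene": "GENE_C", "logFC": -0.4, "P.Value": 0.03},  # fails fold-change cutoff
    {"gene": "GENE_D", "logFC": 3.0, "P.Value": 0.5},
]
print(filter_degs(results))  # ['GENE_A']
```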

Step 3: Enrichment Analysis

- Perform GO, KEGG, and Disease Ontology enrichment using clusterProfiler [26]

- Focus on immune regulation, extracellular matrix, and cell communication pathways

- Identify significantly enriched pathways (p-value <0.05, FDR <0.1)
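Over-representation analysis of the kind run by clusterProfiler (e.g., `enrichGO`, `enrichKEGG`) is based on a one-sided hypergeometric test per gene set, followed by multiple-testing correction. The sketch below shows only the per-term test in plain Python. It is not clusterProfiler's implementation, and the counts in the usage line are hypothetical.

```python
from math import comb


def ora_pvalue(n_universe, n_pathway, n_deg, n_overlap):
    """One-sided hypergeometric P(X >= n_overlap) for DEG over-representation in a gene set.

    n_universe: background genes tested; n_pathway: background genes annotated to the term;
    n_deg: DEGs within the background; n_overlap: DEGs annotated to the term.
    """
    total = comb(n_universe, n_deg)
    upper = min(n_pathway, n_deg)
    tail = sum(
        comb(n_pathway, k) * comb(n_universe - n_pathway, n_deg - k)
        for k in range(n_overlap, upper + 1)
    )
    return tail / total


# Hypothetical term: 20,000 background genes, 200 in the pathway, 450 DEGs, 15 overlapping
# (about 4.5 overlapping genes would be expected by chance)
print(ora_pvalue(20000, 200, 450, 15))
```

The per-term p-values would then be adjusted (e.g., with Benjamini-Hochberg) before applying the FDR < 0.1 threshold above.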

Step 4: Machine Learning Feature Selection

- Apply LASSO regression (glmnet package) for dimensionality reduction [26] [30]

- Implement SVM-RFE algorithm for feature ranking [26]

- Use 10-fold cross-validation to determine optimal parameters [30]

- Identify top candidate genes (e.g., SCUBE1, RNF103-CHMP3) [26]
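The referenced workflow used R (glmnet and an SVM-RFE implementation). The sketch below is a Python translation of Step 4 using scikit-learn, on a synthetic matrix standing in for expression data. It selects genes with L1-penalized logistic regression (LASSO-type, with the penalty chosen by 10-fold cross-validation), ranks them with recursive feature elimination on a linear SVM, and keeps the intersection. Intersecting the two lists is a common convention rather than a detail confirmed for the original study, and the matrix size and `n_features_to_select=5` are arbitrary assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegressionCV
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for an expression matrix: 60 samples x 30 genes, 5 informative
X, y = make_classification(
    n_samples=60, n_features=30, n_informative=5, n_redundant=0, random_state=0
)
X = StandardScaler().fit_transform(X)

# LASSO-penalized logistic regression; inverse penalty strength C chosen by 10-fold CV
lasso = LogisticRegressionCV(
    Cs=20, cv=10, penalty="l1", solver="liblinear", scoring="roc_auc", random_state=0
)
lasso.fit(X, y)
lasso_genes = set(np.flatnonzero(lasso.coef_[0]).tolist())  # non-zero coefficients

# SVM-RFE: repeatedly drop the feature with the smallest linear-SVM weight
rfe = RFE(SVC(kernel="linear"), n_features_to_select=5, step=1)
rfe.fit(X, y)
svm_genes = set(np.flatnonzero(rfe.support_).tolist())

# Candidate genes supported by both methods
consensus = sorted(lasso_genes & svm_genes)
print("LASSO:", sorted(lasso_genes))
print("SVM-RFE:", sorted(svm_genes))
print("Consensus:", consensus)
```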

Step 5: Validation

- Validate key genes in independent datasets (e.g., GSE80178) [26]

- Examine expression at single-cell level in datasets like GSE165816 [26] [11]

- Correlate gene expression with clinical outcomes (healing status)

SCUBE1 Functional Analysis in Oxidative Stress Model

This protocol adapts SCUBE1 oxidative stress protection assessment for DFU-relevant cell types [28].

Step 1: Cell Culture and Treatment

- Culture relevant cells (keratinocytes, fibroblasts) in high glucose (25mM) DMEM/F12 with 10% FBS

- Plate cells in 6-well plates (2×10^5 cells/well) for apoptosis analysis or 96-well plates (5×10^3 cells/well) for viability

- Pretreat experimental group with 5ng/mL rhSCUBE1 for 24h [28]

- Induce oxidative stress with 0.3mM H₂O₂ for 24h [28]
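Working concentrations are easy to mis-specify when switching between units. The sketch below converts glucose between mM and mg/dL, using a molar mass of 180.16 g/mol (1 mM = 18.016 mg/dL), and applies C1V1 = C2V2 for dilutions. The stock concentrations in the usage lines are hypothetical; substitute your own.

```python
GLUCOSE_MW = 180.16  # g/mol, D-glucose


def glucose_mm_to_mg_dl(mm):
    """1 mmol/L glucose = 18.016 mg/dL."""
    return mm * GLUCOSE_MW / 10


def glucose_mg_dl_to_mm(mg_dl):
    return mg_dl * 10 / GLUCOSE_MW


def stock_volume(stock_conc, final_conc, final_volume):
    """C1*V1 = C2*V2 -> V1; both concentrations must use the same units."""
    return final_conc * final_volume / stock_conc


print(round(glucose_mm_to_mg_dl(25), 1))   # 25 mM culture medium -> 450.4 mg/dL
print(round(glucose_mg_dl_to_mm(350), 1))  # 350 mg/dL db/db threshold -> 19.4 mM
# Hypothetical 100 mM H2O2 working stock diluted to 0.3 mM in 10 mL: volume in mL
print(stock_volume(100, 0.3, 10))
# Hypothetical 10,000 ng/mL rhSCUBE1 stock diluted to 5 ng/mL in 2 mL: volume in mL
print(stock_volume(10000, 5, 2))
```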

Step 2: Viability and Apoptosis Assessment

- MTT assay: Add 0.5mg/mL MTT, incubate 4h, dissolve in DMSO, measure 570nm absorbance

- Annexin V/PI staining: Analyze by flow cytometry within 1h of staining

- Caspase-3 activity: Use fluorometric assay with DEVD-AFC substrate

Step 3: ROS and Mitochondrial Function

- Intracellular ROS: Load cells with 10μM DCFH-DA for 30min, measure fluorescence (Ex/Em 485/535nm)

- Mitochondrial membrane potential: Stain with 5μg/mL JC-1 for 15min, calculate red/green fluorescence ratio

Step 4: Western Blot Analysis

- Extract proteins with RIPA buffer + protease inhibitors

- Separate 30μg protein on 10% SDS-PAGE, transfer to PVDF

- Block with 5% non-fat milk, incubate with primary antibodies (1:1000) overnight at 4°C

- Target: Bcl-2, Bax, cleaved caspase-3, p53 [28]

- Incubate with HRP-conjugated secondary antibodies (1:5000), develop with ECL

Step 5: Data Analysis

- Normalize viability to untreated control (set as 100%)

- Express apoptosis as % Annexin V-positive cells

- Quantify Western blots by densitometry, normalize to β-actin

- Statistical analysis: One-way ANOVA with Tukey's post-hoc test, p<0.05 significant
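The normalization and ANOVA steps above can be sketched in plain Python. Viability is expressed relative to the mean untreated-control absorbance; subtracting a blank (medium-only) absorbance is an optional assumption, not part of the original protocol. The one-way ANOVA F statistic is computed from between- and within-group mean squares. P-values and Tukey's post-hoc comparisons should come from a statistics package (e.g., R's `aov()` and `TukeyHSD()`, or `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd` in Python).

```python
from statistics import mean


def percent_viability(sample_od, control_ods, blank_od=0.0):
    """MTT viability (%) relative to the untreated-control mean, after optional blank subtraction."""
    return 100 * (sample_od - blank_od) / (mean(control_ods) - blank_od)


def one_way_anova_f(groups):
    """F statistic for a one-way ANOVA: between-group MS / within-group MS."""
    all_values = [v for g in groups for v in g]
    grand = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical % viability for control, H2O2, and rhSCUBE1 + H2O2 (triplicates)
groups = [[100, 97, 103], [52, 58, 55], [74, 79, 71]]
print(round(one_way_anova_f(groups), 1))
```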

Single-Cell RNA Sequencing Analysis for Cellular Localization

This protocol validates cell-type specific expression of targets like SCUBE1 and RNF103-CHMP3 [26] [11].

Step 1: Sample Preparation and Sequencing

- Process fresh DFU biopsies (3-5mm) within 30min of collection

- Digest tissue with collagenase IV (1mg/mL) for 45min at 37°C with agitation

- Filter through 40μm strainer, resuspend in PBS + 0.04% BSA

- Target cell viability >85% before loading on 10X Chromium

- Sequence to a minimum depth of 50,000 reads per cell

Step 2: Data Processing and Quality Control

- Process raw data using Cell Ranger (10X Genomics)

- Filter out cells with <200 or >6,000 detected genes, or >10% mitochondrial content [11]

- Normalize using SCTransform (Seurat) or comparable methods

- Integrate multiple samples using Harmony or CCA anchors
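The cell-level QC thresholds above amount to a simple per-cell rule. In practice this is done with Seurat's `subset()` or an equivalent. The sketch below is a plain-Python illustration on a hypothetical barcode-to-counts mapping, assuming human gene symbols in which mitochondrial genes carry the `MT-` prefix.

```python
def passes_qc(n_genes, pct_mito, min_genes=200, max_genes=6000, max_mito=10.0):
    """Keep a cell only if 200 <= detected genes <= 6,000 and mitochondrial reads <= 10%."""
    return min_genes <= n_genes <= max_genes and pct_mito <= max_mito


def qc_filter(cells):
    """cells: hypothetical mapping barcode -> {gene symbol: UMI count}; returns kept barcodes."""
    kept = []
    for barcode, counts in cells.items():
        total = sum(counts.values())
        n_genes = sum(1 for c in counts.values() if c > 0)
        mito = sum(c for g, c in counts.items() if g.startswith("MT-"))  # human MT- prefix
        pct_mito = 100 * mito / total if total else 100.0
        if passes_qc(n_genes, pct_mito):
            kept.append(barcode)
    return kept
```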

Step 3: Cell Clustering and Annotation

- Perform PCA and UMAP dimensionality reduction

- Cluster cells using Louvain algorithm (resolution 0.5-1.2) [11]

- Annotate cell types using canonical markers:

- Keratinocytes: KRT5, KRT14, KRT10

- Fibroblasts: DCN, COL1A1, PDPN

- Endothelial: PECAM1, VWF

- Immune: PTPRC (CD45)

- Macrophages: CD68, CD163, MRC1

- T cells: CD3D, CD3E

- NK cells: NKG7, GNLY [26]
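A first-pass annotation can score each cluster by the average expression of each marker set listed above and take the best match. The sketch below assumes per-cluster mean normalized expression is already available as a dictionary (hypothetical input). PTPRC is left out of the scoring because it marks all immune cells rather than a subset. Automated labels like these should still be checked manually, for example against feature plots.

```python
MARKERS = {
    "Keratinocytes": ["KRT5", "KRT14", "KRT10"],
    "Fibroblasts": ["DCN", "COL1A1", "PDPN"],
    "Endothelial": ["PECAM1", "VWF"],
    "Macrophages": ["CD68", "CD163", "MRC1"],
    "T cells": ["CD3D", "CD3E"],
    "NK cells": ["NKG7", "GNLY"],
}


def annotate_cluster(mean_expr):
    """Label a cluster by the marker set with the highest average expression.

    mean_expr: gene -> average normalized expression in the cluster (missing genes count as 0).
    """
    scores = {
        cell_type: sum(mean_expr.get(g, 0.0) for g in genes) / len(genes)
        for cell_type, genes in MARKERS.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical cluster profile
label, scores = annotate_cluster({"NKG7": 3.0, "GNLY": 2.5, "CD3D": 0.5, "PTPRC": 2.0})
print(label)  # NK cells
```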

Step 4: Target Gene Expression Analysis

- Extract expression values for SCUBE1 and RNF103-CHMP3

- Visualize using feature plots, violin plots, and dot plots

- Compare expression between cell types and conditions (non-healing vs. healing)

- Validate findings with immunohistochemistry on parallel tissue sections

Step 5: Cellular Communication Analysis

- Use CellChat or NicheNet to infer intercellular communication

- Identify altered signaling pathways in DFU vs. normal skin

- Focus on pathways involving SCUBE1 and RNF103-CHMP3

Next-Generation Diagnostic Tools: From Biomarker Panels to Explainable AI Models

FAQs and Troubleshooting Guides

Data Acquisition and Preprocessing

Q1: What are the critical inclusion and exclusion criteria for patient data when building a dataset to differentiate Diabetic Foot Infection (DFI) from Osteomyelitis (OM)?

A: Ensuring a clean, well-defined cohort is paramount for model generalizability. Adhere to the following criteria based on established study designs [31]:

- Inclusion Criteria:

- Patients aged 18 years or older.

- A confirmed diagnosis of diabetes mellitus.

- A definitive final diagnosis of either DFI or OM, based on a composite reference standard that can include clinical findings, imaging (e.g., MRI), laboratory results, and bone biopsy or surgical debridement findings.

- Exclusion Criteria:

- Primary foot pathology unrelated to DFI/OM (e.g., Charcot neuroarthropathy without infection, major trauma).

- Concurrent systemic conditions that confound biomarker interpretation (e.g., active autoimmune disease, sepsis from another source, active malignancy).

- Incompleteness of the core routine blood biomarker dataset (e.g., >30% missing values for key variables).

Q2: My dataset has missing values for some biomarkers. How should I handle this?

A: The handling of missing data is a critical step in the preprocessing pipeline.

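- Best Practice: First, investigate the pattern of missingness. Imputation is appropriate when values are missing completely at random (MCAR) or missing at random (MAR). If missingness depends on the unobserved value itself (missing not at random, MNAR), imputation can bias results.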

- Recommended Technique: For routine laboratory biomarkers, multiple imputation or k-nearest neighbors (KNN) imputation are robust methods. However, if the missing data is extensive (e.g., exceeding 30% for a specific biomarker in your dataset), consider excluding that variable or patient record, as was done in the referenced study [31].

- Troubleshooting: If model performance is poor, check the impact of your imputation method. Sensitivity analysis (comparing complete-case analysis with imputed results) is highly recommended.
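The two-stage approach above can be sketched with NumPy and scikit-learn's `KNNImputer`. First, biomarkers whose missing fraction exceeds 30% are dropped; then the remaining gaps are filled by k-nearest-neighbor imputation. The values below are hypothetical. In a real pipeline, standardize features before KNN imputation (distances are scale-sensitive) and fit the imputer on the training set only.

```python
import numpy as np
from sklearn.impute import KNNImputer


def drop_sparse_columns(X, names, max_missing=0.30):
    """Drop biomarkers whose fraction of missing values exceeds max_missing."""
    frac_missing = np.isnan(X).mean(axis=0)
    keep = frac_missing <= max_missing
    return X[:, keep], [n for n, k in zip(names, keep) if k]


# Hypothetical cohort: HbA1c (%), ESR (mm/h), Albumin (g/dL), Sodium (mmol/L)
names = ["HbA1c", "ESR", "Albumin", "Sodium"]
X = np.array([
    [8.1, 60.0, 3.2, np.nan],
    [9.5, np.nan, 2.8, 135.0],
    [7.2, 35.0, 3.9, np.nan],
    [np.nan, 80.0, 2.5, 138.0],
    [10.1, 95.0, 2.2, np.nan],
])

X_kept, kept_names = drop_sparse_columns(X, names)  # Sodium (60% missing) is dropped
X_imputed = KNNImputer(n_neighbors=2).fit_transform(X_kept)
print(kept_names)
print(X_imputed.round(1))
```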

Model Development and Training

Q3: Which machine learning algorithms are most effective for building a diagnostic model with these biomarkers?

A: Multiple classifiers should be evaluated and compared. A recent large-scale study found the LightGBM (Light Gradient Boosting Machine) model to be the top-performing algorithm for this specific task, outperforming others when using a compact set of routine biomarkers [31].

- Recommended Workflow:

- Train Multiple Models: Experiment with a suite of models, including Random Forests, Support Vector Machines (SVM), and XGBoost, in addition to LightGBM.

- Evaluate Performance: Use the Area Under the Receiver Operating Characteristic Curve (AUC) as a primary metric, supplemented by the Brier score for calibration.

- Select Final Model: Choose the model that demonstrates the highest AUC and is well-calibrated on your internal validation set.
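The comparison workflow above can be sketched with scikit-learn's `cross_validate`, scoring each candidate by cross-validated AUC and Brier score. The data are synthetic stand-ins for six routine biomarkers. LightGBM is not included so that the sketch runs with scikit-learn alone. If the `lightgbm` package is installed, its scikit-learn-compatible `LGBMClassifier` can be added to the `models` dictionary.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for six routine biomarkers (hypothetical data)
X, y = make_classification(n_samples=300, n_features=6, n_informative=4, random_state=0)

models = {
    "LogisticRegression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "RandomForest": RandomForestClassifier(n_estimators=200, random_state=0),
    "SVM": make_pipeline(StandardScaler(), SVC(probability=True, random_state=0)),
}

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
results = {}
for name, model in models.items():
    scores = cross_validate(model, X, y, cv=cv, scoring=("roc_auc", "neg_brier_score"))
    auc = scores["test_roc_auc"].mean()
    brier = -scores["test_neg_brier_score"].mean()  # scikit-learn reports it negated
    results[name] = (auc, brier)
    print(f"{name:>18}: AUC={auc:.3f}  Brier={brier:.3f}")
```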

Q4: How can I ensure my model is trustworthy and not a "black box" for clinicians?

A: Model interpretability is non-negotiable for clinical adoption. Integrate Explainable AI (XAI) techniques directly into your workflow.

- Solution: Employ SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations). These tools quantify the contribution of each biomarker (e.g., HbA1c, ESR) to individual predictions [31]. This allows a clinician to see why a case was classified as DFI or OM, fostering trust and providing actionable insights.

Model Validation and Deployment

Q5: What is the gold standard for validating the performance of my diagnostic model?

A: Beyond standard internal validation (e.g., train-test split or cross-validation), external validation is critical.

- Protocol: Hold out data from a different clinical center or geographic location as an independent external validation cohort. A model that achieves an AUC of 0.942 on an external cohort, as demonstrated in recent research, provides strong evidence of generalizability and robustness [31].

- Troubleshooting: A significant performance drop in external validation indicates overfitting to your development data or underlying population differences. Revisit feature selection and model regularization.

Q6: How can I make my model accessible for other researchers and clinicians?

A: Develop a user-friendly, publicly accessible tool.

- Proven Method: Create a web-based calculator. This allows users to input the six key biomarkers (Age, HbA1c, Creatinine, Albumin, ESR, Sodium) and receive a risk prediction for OM vs. DFI. This approach translates your research into a low-cost, clinically applicable tool, especially useful in resource-limited settings [31].

Experimental Protocols

Protocol: Developing and Validating an Explainable ML Model for DFI/OM Differentiation

This protocol outlines the methodology for building a diagnostic model based on a successful two-center study [31].

1. Objective: To develop and validate an explainable machine learning model using routine blood biomarkers (Age, HbA1c, Creatinine, Albumin, ESR, Sodium) to accurately differentiate between Diabetic Foot Infection (DFI) and Osteomyelitis (OM).

2. Materials and Dataset Preparation

- Data Collection: Collect retrospective data from patient electronic health records. Ensure ethical approval and data anonymization.

- Cohort Definition: Define DFI and OM cases based on a composite reference standard (e.g., clinical exam, imaging, bone biopsy). Apply strict inclusion/exclusion criteria [31].

- Feature Selection: Extract the six key biomarkers. Normalize numerical values (e.g., Z-score standardization).

3. Machine Learning Workflow

- Data Partitioning: Split the dataset from Center 1 into a training set (75%) and an internal validation set (25%). Use data from Center 2 as a hold-out external validation set.

- Model Training: Train multiple ML classifiers (LightGBM, Random Forest, SVM, etc.) on the training set using 5-fold cross-validation.

- Hyperparameter Tuning: Optimize model parameters (e.g., Bayesian optimization or grid search) within the cross-validation on the training set, so that the internal validation set remains untouched for evaluation.

- Model Evaluation: Evaluate the final selected model on the internal and external validation sets. Key metrics include AUC, accuracy, sensitivity, specificity, and Brier score.
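Two details in the workflow above are easy to get wrong: partitioning by center, and fitting the Z-score parameters on the training set only so that no validation information leaks into preprocessing. The sketch below illustrates both on a hypothetical list of patient records. It uses a simple random split; a split stratified by outcome would usually be preferable.

```python
import random
from statistics import mean, stdev


def split_by_center(records, dev_center, ext_center, train_frac=0.75, seed=0):
    """Development center -> shuffled train/internal split; other center -> external hold-out."""
    dev = [r for r in records if r["center"] == dev_center]
    external = [r for r in records if r["center"] == ext_center]
    rng = random.Random(seed)
    rng.shuffle(dev)
    n_train = round(train_frac * len(dev))
    return dev[:n_train], dev[n_train:], external


def fit_zscore(train, features):
    """Estimate mean/SD per feature on the training set only."""
    return {
        f: (mean([r[f] for r in train]), stdev([r[f] for r in train])) for f in features
    }


def apply_zscore(rows, params):
    """Apply training-set parameters to any split (train, internal, or external)."""
    return [{f: (r[f] - mu) / sd for f, (mu, sd) in params.items()} for r in rows]


# Hypothetical records: 8 patients at center 1, 4 at center 2
records = [{"center": 1, "ESR": float(40 + 5 * i)} for i in range(8)]
records += [{"center": 2, "ESR": float(60 + 10 * i)} for i in range(4)]
train, internal, external = split_by_center(records, dev_center=1, ext_center=2)
params = fit_zscore(train, ["ESR"])
external_z = apply_zscore(external, params)
```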

4. Explainability and Clinical Translation

- Explainable AI (XAI): Apply SHAP analysis to the final model to generate global feature importance plots and local explanations for individual predictions.

- Deployment: Develop a web calculator using a framework like Flask or Shiny to host the model for public use.

Table 1: Performance Metrics of a LightGBM Model for Differentiating DFI from OM on an External Validation Cohort (n=341) [31]

| Metric | Value (95% Confidence Interval) |
|---|---|
| Area Under the Curve (AUC) | 0.942 (0.936 - 0.950) |
| Sensitivity | Not specified in results |
| Specificity | Not specified in results |
| Brier Score (Lower is better) | Not specified in results |

Table 2: Key Biomarkers and Their Hypothesized Pathophysiological Roles in DFI/OM [31]

| Biomarker | Biological Function & Rationale for Inclusion |
|---|---|
| HbA1c | Reflects long-term glycemic control. Hyperglycemia impairs immune function and wound healing, increasing susceptibility to severe infection. |
| ESR | A non-specific marker of inflammation. Typically elevated in both DFI and OM, but levels may vary with severity and bone involvement. |
| Creatinine | Indicator of renal function. Renal impairment can alter drug pharmacokinetics (antibiotics) and is a comorbidity in diabetic patients. |
| Albumin | A marker of nutritional status and systemic inflammation. Low levels are associated with poorer healing outcomes and increased morbidity. |

Research Reagent Solutions

Table 3: Essential Materials for ML-Based Diagnostic Model Development

| Item | Function/Description |
|---|---|
| Clinical Data Repository | Anonymized electronic health records from patients with confirmed DFI or OM. |
| Computing Environment | Python or R programming environment with libraries (e.g., scikit-learn, LightGBM, SHAP, pandas). |
| Statistical Software | R or Python for data preprocessing, statistical analysis, and generation of performance metrics. |
| Web Development Framework | Flask (Python) or Shiny (R) for building an interactive web interface to deploy the final model. |

Workflow and Pathway Diagrams

Diagram 1: End-to-end workflow for developing and deploying an explainable ML model.

Diagram 2: Logical relationship between model prediction and explainable AI for clinical support.

Troubleshooting Guide: SHAP and LIME for Diabetic Foot Diagnostics

Frequently Asked Questions

Q1: Why do my SHAP values show unexpected feature importance rankings that don't match clinical understanding?

This commonly occurs due to highly correlated molecular features in diabetic foot ulcer (DFU) datasets. When features are strongly correlated, SHAP values might distribute importance in ways that appear counterintuitive [32] [33]. The VeriStrat test case study found correlations between features ranging from 0.310 to 0.996, which significantly affected importance distributions [33].

Solution:

- Calculate exact Shapley values instead of approximations when possible, especially with smaller datasets [32]

- Use Shapley-based interaction indices (SIIs, STIIs) to identify feature interactions [33]

- Apply domain knowledge to evaluate if correlated features represent similar biological pathways
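With only a handful of biomarkers, exact Shapley values are cheap to compute by enumerating every coalition. The cost grows as 2^n in the number of features. The sketch below uses a deliberately simple value function, `baseline_value_fn` (hypothetical): features outside a coalition are set to a single baseline value. The SHAP library's explainers use other value functions and algorithms (e.g., background distributions, TreeSHAP), so numbers will not match exactly. The toy model includes an interaction term to show that exact Shapley values split interaction credit equally and sum to f(x) - f(baseline).

```python
from itertools import combinations
from math import factorial


def exact_shapley(value, n_features):
    """Exact Shapley values by enumerating all coalitions (cost grows as 2**n_features).

    value: function mapping a frozenset of feature indices to a model output.
    """
    n = n_features
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def baseline_value_fn(model, patient, baseline):
    """Hypothetical value function: features outside the coalition take baseline values."""
    def value(coalition):
        x = [patient[j] if j in coalition else baseline[j] for j in range(len(patient))]
        return model(x)
    return value


def toy_model(x):
    """Toy risk score with an interaction between the two features."""
    return 2 * x[0] + x[1] + x[0] * x[1]


phi = exact_shapley(baseline_value_fn(toy_model, [1, 1], [0, 0]), 2)
print(phi)  # [2.5, 1.5]; the interaction term (1) is split equally
```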

Q2: My LIME explanations are unstable - they change significantly with each run for the same patient. How can I increase reliability?

LIME generates explanations by sampling perturbed instances around your prediction, and this randomness can cause instability, particularly with complex molecular data [34] [35].

Solution:

- Increase the number of perturbed samples LIME generates (the `num_samples` argument of `explain_instance`) [35]

- Set a random seed (for example, the `random_state` argument of `LimeTabularExplainer`) for reproducible explanations

- For tabular data, keep `discretize_continuous=True` to create more stable categorical features [35]

- Consider using SHAP for more mathematically grounded explanations [36]
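The two levers above (sample count and seed) can be illustrated with a simplified, LIME-style local surrogate for continuous features: Gaussian perturbations around the patient, an exponential proximity kernel, and a weighted linear fit. This is a sketch under stated assumptions, not the `lime` package's implementation (its tabular sampling differs, for example when it discretizes continuous features); the classifier `toy_prob`, the kernel-width default, and the patient values are hypothetical.

```python
import math
import random


def _solve(a, b):
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * sol[c] for c in range(r + 1, n))
        sol[r] = (m[r][n] - acc) / m[r][r]
    return sol


def local_surrogate(predict, x, scales, num_samples=5000, kernel_width=None, seed=0):
    """Simplified LIME-style explanation for continuous features.

    Samples Gaussian perturbations around x, weights them by proximity, and
    fits a weighted linear model. Returns one coefficient per feature, in
    standardized units. A fixed seed makes the result reproducible.
    """
    rng = random.Random(seed)
    d = len(x)
    if kernel_width is None:
        kernel_width = 0.75 * math.sqrt(d)  # assumption; tune for your data
    rows, ys, ws = [], [], []
    for _ in range(num_samples):
        u = [rng.gauss(0.0, 1.0) for _ in range(d)]      # standardized offsets
        z = [x[j] + u[j] * scales[j] for j in range(d)]  # perturbed patient
        ws.append(math.exp(-sum(v * v for v in u) / kernel_width ** 2))
        rows.append([1.0] + u)                           # intercept + features
        ys.append(predict(z))
    p = d + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[k] for r, w in zip(rows, ws)) for k in range(p)]
            for i in range(p)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, ws, ys)) for i in range(p)]
    return _solve(xtwx, xtwy)[1:]


def toy_prob(z):  # hypothetical classifier probability
    return 1.0 / (1.0 + math.exp(-(1.5 * z[0] - 0.8 * z[1])))


patient = [0.2, 1.0]
scales = [1.0, 1.0]
run1 = local_surrogate(toy_prob, patient, scales, num_samples=2000, seed=42)
run2 = local_surrogate(toy_prob, patient, scales, num_samples=2000, seed=42)
print(run1, run1 == run2)
```

With the same seed the explanation is identical; with different seeds the coefficients for a nonlinear model shift, and that spread typically narrows as `num_samples` grows.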

Q3: Which XAI method is better for explaining differential diagnosis of diabetic foot infections versus osteomyelitis?

In a recent two-center study on differentiating DFI from OM, SHAP provided more quantitative insight into biomarker contributions, while LIME offered intuitive local explanations [37]. The study's LightGBM model, built on six key biomarkers, achieved an AUC of 0.942 in external validation [37].

Solution:

- Use SHAP for global model interpretability and understanding overall feature importance

- Use LIME for case-specific explanations to present to clinical colleagues

- Implement both methods as complementary approaches

Q4: How can I extract global model understanding from local explanation methods?

Both SHAP and LIME can be aggregated to provide global insights [34] [35].

Solution for SHAP:

- Calculate mean absolute SHAP values across all predictions for global feature importance

- Plot SHAP summary charts showing feature impact versus value

Solution for LIME:

- Implement "Submodular Pick" methodology to select diverse, representative explanations [35]

- Aggregate local explanations across multiple patient subgroups

- Create frequency analysis of top features across numerous local explanations
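Both aggregation routes above can be sketched in a few lines, assuming the local attributions (SHAP values or LIME weights) have already been collected into a patients-by-features matrix. The attribution numbers and feature names below are hypothetical and illustrative only. This is plain averaging and counting, not the Submodular Pick procedure, which instead selects a small, diverse set of explanations.

```python
from collections import Counter


def mean_abs_importance(attributions, feature_names):
    """Global importance: mean absolute attribution per feature across patients."""
    n = len(attributions)
    totals = [0.0] * len(feature_names)
    for row in attributions:
        for j, value in enumerate(row):
            totals[j] += abs(value)
    scores = {name: totals[j] / n for j, name in enumerate(feature_names)}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


def top_k_frequency(attributions, feature_names, k=2):
    """Count how often each feature is among a patient's top-k |attributions|."""
    counts = Counter()
    for row in attributions:
        ranked = sorted(range(len(row)), key=lambda j: abs(row[j]), reverse=True)
        counts.update(feature_names[j] for j in ranked[:k])
    return counts


# Hypothetical local attributions (rows = patients, columns = features).
names = ["feature_A", "feature_B", "feature_C"]
local = [[0.5, -0.2, 0.1],
         [-0.4, 0.3, 0.0],
         [0.6, -0.1, 0.2]]
print(mean_abs_importance(local, names))
print(top_k_frequency(local, names, k=2))
```

Taking the absolute value before averaging keeps features that push some patients up and others down from cancelling out.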

Performance Comparison Table: XAI Methods in Clinical Diagnostics

Table 1: Quantitative performance of XAI-enhanced models in diabetic complications research

| Study Focus | Best Performing Model | Reported Performance | XAI Method Used | Key Features Identified |
|---|---|---|---|---|
| Diabetic Foot Ulcer Identification [38] | Siamese Neural Network (SNN) | 98.76% accuracy, 99.3% precision, 97.7% recall, 98.5% F1-score | Grad-CAM, SHAP, LIME | Heat map localization for visual interpretation |
| Differential Diagnosis: DFI vs. Osteomyelitis [37] | LightGBM | AUC: 0.942 (external validation) | SHAP, LIME | Age, HbA1c, Creatinine, Albumin, ESR, Sodium |
| Molecular Diagnostic Test (VeriStrat) [32] [33] | 7-Nearest Neighbor | Clinical validation in 40,000+ samples | Exact Shapley Values | 8 proteomic features with varying importance per sample |

Table 2: Technical comparison of SHAP vs. LIME for clinical applications

| Characteristic | SHAP | LIME |
|---|---|---|
| Explanation Scope | Global & local interpretability | Focus on local interpretability |
| Mathematical Foundation | Game theory (Shapley values) | Local surrogate models |
| Computational Demand | Higher for exact calculations | Generally faster |
| Data Type Compatibility | Tabular, text, images | Tabular, text, images |
| Clinical Implementation | Quantitative contribution scores | Intuitive "push-pull" explanations |
| Handling of Correlated Features | Can be challenging with approximations | Affected by perturbation strategy |

Experimental Protocols for Diabetic Foot Ulcer Research

Protocol 1: Implementing SHAP for Molecular Diagnostic Patterns

Materials Required:

- Pre-trained ML model for DFU classification

- Patient dataset with molecular features

- SHAP Python library (`pip install shap`)

Procedure:

- Load and prepare your trained model and preprocessing pipeline

- Initialize an appropriate SHAP explainer: `TreeExplainer` for tree-based models, `KernelExplainer` for model-agnostic applications, or `DeepExplainer` for neural networks

- Calculate SHAP values on a representative data sample

- Generate a global feature importance plot

- Create individual force plots for specific patient explanations

- Calculate mean absolute SHAP values for overall feature ranking

Troubleshooting Tip: For small datasets (<1000 samples), use exact Shapley value calculation instead of approximations to avoid qualitative differences in explanations [32].
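Whether exact calculation is affordable can be estimated before running anything. Exact enumeration evaluates 2^M coalitions per patient for M features, so the feature count, more than the patient count, drives the cost. The sketch below assumes each coalition's value is computed once and reused, and that each value averages over `n_background` reference rows (1 means a single baseline); the panel sizes in the usage lines are illustrative.

```python
def exact_shapley_model_calls(n_features, n_patients, n_background=1):
    """Model evaluations needed for exact Shapley values with cached coalitions.

    Each patient has 2**n_features coalitions; each coalition value is an
    average over n_background reference rows (1 = a single baseline).
    """
    return n_patients * (2 ** n_features) * n_background


print(exact_shapley_model_calls(6, 1000))   # six-biomarker panel
print(exact_shapley_model_calls(8, 1000))   # eight proteomic features
print(exact_shapley_model_calls(30, 1000))  # a wide molecular panel
```

Small routine-lab or proteomic panels stay in the tens or hundreds of thousands of model calls, whereas a 30-feature panel already needs on the order of 10^12, which is where approximate explainers become necessary.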

Protocol 2: LIME for Case-Specific DFU Explanations

Materials Required:

- Trained classification model with a `predict_proba` method

- LIME Python library (`pip install lime`)

- Individual patient case data

Procedure:

- Initialize a LIME tabular explainer (`LimeTabularExplainer`) with training data statistics

- Generate an explanation for a specific case

- Visualize the explanation

- Save the explanation as HTML for clinical reporting
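For clinical reporting, a compact table is sometimes easier to review than a full interactive page. The hypothetical helper below renders (feature description, weight) pairs, the shape returned by lime's `Explanation.as_list()`, as a minimal HTML table ordered by absolute weight. It is an illustrative sketch, not part of the lime API; the patient ID, label, and weights are made up.

```python
from html import escape


def explanation_to_html(patient_id, predicted_label, weights):
    """Render (feature_description, weight) pairs as a compact HTML table.

    Rows are ordered by absolute weight. Positive weights support the
    explained class; negative weights oppose it.
    """
    rows = []
    for desc, w in sorted(weights, key=lambda dw: abs(dw[1]), reverse=True):
        direction = "supports" if w > 0 else "opposes" if w < 0 else "neutral"
        rows.append(
            f"<tr><td>{escape(desc)}</td><td>{w:+.3f}</td><td>{direction}</td></tr>"
        )
    header = "<tr><th>Feature condition</th><th>Weight</th><th>Direction</th></tr>"
    title = f"<h3>Patient {escape(str(patient_id))}: {escape(predicted_label)}</h3>"
    return "\n".join([title, "<table>", header, *rows, "</table>"])


# Hypothetical pairs in the (description, weight) shape of Explanation.as_list()
example = [("feature_B <= 0.40", -0.12), ("feature_A > 1.20", 0.31)]
report = explanation_to_html("case-001", "predicted class: OM", example)
print(report)
```

Escaping the feature descriptions matters because LIME's discretized conditions contain `<` and `>` characters that would otherwise break the markup.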

Troubleshooting Tip: If explanations seem sparse or incomplete, adjust the `num_features` parameter and try different `feature_selection` methods (`'auto'`, `'lasso_path'`, or `'none'`) [35].

Research Reagent Solutions for Diabetic Foot Ulcer Diagnostics

Table 3: Essential materials and computational tools for XAI experiments

| Resource Type | Specific Tool/Resource | Application in DFU Research |
|---|---|---|
| Programming Libraries | SHAP (Python library) | Quantitative feature contribution analysis for molecular markers |
| Programming Libraries | LIME (Python library) | Case-by-case explanation generation for clinical review |
| Model Architectures | Siamese Neural Networks [38] | DFU image classification with 98.76% accuracy |
| Model Architectures | LightGBM [37] | Differential diagnosis of foot infections with high AUC |
| Clinical Validation Tools | Grad-CAM heat maps [38] | Visual localization of ulcer features in image data |
| Biomarker Panels | Routine clinical and blood tests [37] | Six-predictor panel (age, HbA1c, creatinine, albumin, ESR, sodium) |
| Molecular Assays | Mass spectrometry proteomics [33] | VeriStrat test with 8 protein features for classification |

Workflow Visualization

XAI Clinical Diagnostics Workflow

Correlation Challenges in Molecular Data

FAQs: Technical Guidance for Researchers

FAQ 1: What are the top-performing deep learning architectures for diabetic foot ulcer (DFU) segmentation, and how do they compare quantitatively?

The performance of deep learning models for DFU segmentation is typically evaluated using metrics like Intersection over Union (IoU) and Dice coefficient. Below is a comparative analysis of leading architectures.

Table 1: Performance Comparison of DFU Segmentation Models

| Model Architecture | Reported Performance | Key Strengths | Notable Applications/Studies |
|---|---|---|---|
| Mask2Former | Mean IoU: 65% [18] | Best overall performance; excels in multi-label recognition and global feature modeling [18]. | Segmentation and classification of periwound erythema, ulcer boundaries, and internal tissues (granulation, necrotic tissue, etc.) [18]. |
| Deeplabv3plus | Mean IoU: 62% [18] | Well-established CNN-based model; widespread application in semantic segmentation [18]. | Served as a baseline model in performance comparisons [18]. |
| UFOS-Net | Dice: 0.7745 [39] | Incorporates an Enhanced Multi-scale Segmentation (EMS) block; optimized for small-scale mask identification [39]. | Ranked highly on the DFUC2022 leaderboard; validated on the SRRSH-DF dataset [39]. |
| Swin-Transformer | Mean IoU: 52% [18] | Leverages Transformer architecture to handle long-range dependencies [18]. | Suitable for recognizing complex features in DFU images [18]. |
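Because the table mixes IoU and Dice, it helps to see how both are computed from the same pair of binary masks. The sketch below assumes masks are equal-sized 2D lists of 0/1; treating two empty masks as perfect agreement is a convention, not a standard. The example masks are hypothetical.

```python
def iou_and_dice(pred, truth):
    """IoU and Dice for two binary masks given as equal-sized 2D lists of 0/1."""
    inter = pred_area = truth_area = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            inter += p & t
            pred_area += p
            truth_area += t
    union = pred_area + truth_area - inter
    if union == 0:  # both masks empty: treated as perfect agreement (a convention)
        return 1.0, 1.0
    iou = inter / union
    dice = 2 * inter / (pred_area + truth_area)
    return iou, dice


pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
iou, dice = iou_and_dice(pred, truth)
print(f"IoU={iou:.3f}, Dice={dice:.3f}")
```

For a single mask pair, Dice = 2·IoU / (1 + IoU), so Dice is always at least as large as IoU. Averages over many images do not convert exactly with this identity, so a mean Dice and a mean IoU from different studies are only roughly comparable.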

FAQ 2: How can I address the challenge of limited and imbalanced DFU image datasets in my model training?

Data augmentation is a cornerstone technique for mitigating dataset limitations. Beyond standard methods (rotation, flipping), researchers have developed tailored strategies for DFU images.

- Multi-Operator Data Augmentation (MODA): This method simultaneously applies multiple augmentation operators specifically chosen for DFU characteristics, significantly expanding the dataset and improving model generalization [39].

- Standard Augmentation Techniques: The following five techniques are commonly employed to enhance model robustness [18]:

- Brightness Adjustment

- Contrast Adjustment

- Horizontal Flip

- Vertical Flip

- Transposition
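The five standard operations can be sketched on a grayscale image stored as a 2D list of 8-bit values; real pipelines typically use an image library on RGB arrays, so this is a stand-in for illustration. The key design point is that geometric operations (flips, transposition) must be applied to the segmentation mask as well, while photometric ones (brightness, contrast) change only the image. MODA's operator selection is not reproduced here, and the sample image and offsets are hypothetical.

```python
def adjust_brightness(img, delta):
    """Add a constant offset to every pixel, clipped to the 8-bit range."""
    return [[min(255, max(0, v + delta)) for v in row] for row in img]


def adjust_contrast(img, factor):
    """Scale pixel deviations from the image mean, clipped to the 8-bit range."""
    flat = [v for row in img for v in row]
    mean = sum(flat) / len(flat)
    return [[min(255, max(0, round(mean + factor * (v - mean)))) for v in row]
            for row in img]


def hflip(img):
    return [row[::-1] for row in img]


def vflip(img):
    return img[::-1]


def transpose(img):
    return [list(col) for col in zip(*img)]


GEOMETRIC = {hflip, vflip, transpose}


def augment_pair(img, mask, ops):
    """Apply ops to an image; geometric ops are also applied to its mask."""
    for op in ops:
        img = op(img)
        if op in GEOMETRIC:
            mask = op(mask)
    return img, mask


image = [[10, 20], [30, 40]]
mask = [[0, 1], [0, 0]]
aug_img, aug_mask = augment_pair(image, mask, [lambda im: adjust_brightness(im, 20), hflip])
print(aug_img, aug_mask)
```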

FAQ 3: My model confuses ulcer areas with healthy skin of similar color. How can I improve segmentation accuracy for complex lesions?

This is a common challenge, particularly when model parameters are reduced for efficiency [39]. Consider these approaches:

- Architecture Enhancement: Integrate modules designed for detailed feature extraction. The EMS Block in UFOS-Net, which uses depthwise separable convolutions, helps capture more comprehensive features with a reduced parameter count, improving the handling of texture details [39].

- Advanced Feature Fusion: Leverage models that incorporate small-scale feature fusion, which can enhance the identification of subtle boundaries between ulcers and normal skin [39].

- Hybrid Feature Extraction: For classification tasks, combining handcrafted features (e.g., ORB, LBP) with deep features from pretrained CNNs can create a more robust and noise-resistant model, as demonstrated in frameworks for plantar thermograms [40].
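The parameter saving from depthwise separable convolutions follows from simple arithmetic: a standard k×k convolution needs k·k·C_in·C_out weights, whereas a depthwise k×k convolution (one filter per input channel) followed by a 1×1 pointwise convolution needs k·k·C_in + C_in·C_out. The channel sizes below are hypothetical and are not taken from the EMS block; bias terms are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k standard convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """k x k depthwise conv (one filter per input channel) + 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out


std = standard_conv_params(3, 64, 128)
sep = depthwise_separable_params(3, 64, 128)
print(std, sep, round(std / sep, 1))
```

For a 3×3 layer mapping 64 to 128 channels this is roughly an eightfold reduction, which is why such blocks can widen feature extraction without a proportional growth in model size.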

FAQ 4: How is the Wagner classification system integrated into deep learning models for automated DFU grading?

The Wagner classification system provides a standardized framework for assessing ulcer severity. In deep learning, it is used as the ground truth for training and evaluating classification models.

- Adaptation for Model Training: Models are trained to categorize DFU images into Wagner grades. For instance, one study grouped Wagner grades 1-2 into a single category due to similar treatment strategies, and trained the Mask2Former model to classify wounds into W1-2, W3, and W4 [18].

- Model Performance: In such a task, the Mask2Former model achieved an accuracy of 0.9185 and an Area Under the Curve (AUC) of 0.9429 [18].

- System Overview:

- Grade 0: No open lesions [41] [42].

- Grade 1: Superficial ulcer limited to the skin [41] [42].

- Grade 2: Deeper ulcer extending to ligaments and muscle [41] [42].

- Grade 3: Deep ulcer with abscess, osteomyelitis (bone infection), or joint sepsis [41] [42].

- Grade 4: Partial gangrene in the forefoot [41] [42].

- Grade 5: Extensive gangrene involving the entire foot [41] [42].
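Turning Wagner annotations into training labels under the grouping described above can be sketched as a small lookup. The cited study reports the classes W1-2, W3, and W4; how it handled grades 0 and 5 is not stated here, so excluding them (returning `None`) is an assumption of this sketch, as are the class indices and the sample annotations.

```python
# Grouping described above: grades 1 and 2 share a class. Grades 0 and 5 fall
# outside this three-class scheme and are excluded (an assumption).
WAGNER_TO_CLASS = {1: "W1-2", 2: "W1-2", 3: "W3", 4: "W4"}
CLASS_INDEX = {"W1-2": 0, "W3": 1, "W4": 2}


def wagner_label(grade):
    """Map a Wagner grade (0-5) to a training class index, or None if excluded."""
    if grade not in range(6):
        raise ValueError(f"Wagner grade must be 0-5, got {grade!r}")
    cls = WAGNER_TO_CLASS.get(grade)
    return None if cls is None else CLASS_INDEX[cls]


annotated = [1, 2, 3, 4, 0, 5, 2]  # hypothetical image-level annotations
labels = [wagner_label(g) for g in annotated]
print(labels)
```

Rejecting out-of-range grades early catches annotation typos before they silently become a wrong class.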

Troubleshooting Guides

Issue 1: Poor Segmentation Performance on Small or Complex Ulcer Regions

- Symptoms: Low IoU or Dice scores for specific tissue types like tendons or periwound erythema; failure to detect subtle lesion boundaries.

- Investigation Checklist:

- Examine the distribution of your annotated masks. Are small-scale ulcers adequately represented?

- Verify the model's decoder. Does it incorporate multi-scale feature fusion to preserve details from early layers?

- Inspect the data augmentation pipeline. Are you using strategies like MODA to increase the diversity of complex lesion appearances? [39]

- Resolution Steps:

- Model Selection: Prioritize architectures known for detail capture, such as UFOS-Net with its EMS block [39] or Mask2Former for its strong multi-class performance [18].

- Data Curation: Consider using more comprehensive datasets like SRRSH-DF [39] or the DFUC 2022 dataset [43], which contain a wide spectrum of pathological features.

- Post-Processing: Apply contour refinement algorithms (e.g., active contours) to the model's raw output to better align with clinical delineations [43].
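The first checklist item (are small-scale ulcers adequately represented?) can be quantified by measuring each annotated mask's area as a fraction of the image. In this sketch the 5% cut-off for "small" and the synthetic square masks are hypothetical choices for illustration.

```python
def mask_area_fraction(mask):
    """Fraction of pixels labelled as ulcer (1) in a binary 2D mask."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


def small_mask_share(masks, threshold=0.05):
    """Share of masks whose ulcer area is below `threshold` of the image."""
    small = sum(1 for m in masks if mask_area_fraction(m) < threshold)
    return small / len(masks)


def square_mask(size, side):
    """Synthetic size x size mask with a side x side ulcer in one corner."""
    return [[1 if r < side and c < side else 0 for c in range(size)]
            for r in range(size)]


masks = [square_mask(10, 1), square_mask(10, 2), square_mask(10, 5)]
print(small_mask_share(masks))
```

If the share of small masks in training is far below what appears in clinical images, targeted resampling or augmentation of those cases is a reasonable first step before changing the architecture.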

Issue 2: Model Fails to Generalize to Images from Different Sources

- Symptoms: High accuracy on the test set from the same source as training data, but significant performance drop on external datasets or new hospital data.

- Investigation Checklist:

- Check for domain shift. Are the new images from different devices, lighting conditions, or patient demographics?

- Review your preprocessing. Have you standardized image scaling (e.g., using bilinear interpolation to a fixed size like 1024x1024)? [18]

- Assess the training data. Does your dataset encompass the variability found in real-world clinical settings? [39]

- Resolution Steps:

- Data Augmentation: Aggressively employ photometric augmentations (brightness, contrast adjustments) to simulate domain variation [18].

- Transfer Learning: Fine-tune models that were pre-trained on large, diverse natural image datasets (e.g., ImageNet) [18].

- Hybrid Models: Explore frameworks that fuse traditional feature descriptors (like ORB) with deep learning features, as they can be more robust to image distortions [40].
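Standardizing image size with bilinear interpolation, as mentioned in the checklist, works by blending the four nearest input pixels according to the fractional source coordinate. The sketch below uses a corner-aligned grid (output corners map exactly onto input corners); many image libraries use a half-pixel-centre convention instead, so their values differ slightly. A real pipeline would resize to the fixed size, e.g. 1024×1024; the 2×2 example is only for checking the arithmetic.

```python
def resize_bilinear(img, out_h, out_w):
    """Resize a 2D grayscale image (list of rows) with bilinear interpolation.

    Uses a corner-aligned grid: output corners map exactly onto input corners.
    """
    in_h, in_w = len(img), len(img[0])

    def src_coord(i, out_n, in_n):
        return i * (in_n - 1) / (out_n - 1) if out_n > 1 else 0.0

    out = []
    for i in range(out_h):
        y = src_coord(i, out_h, in_h)
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = src_coord(j, out_w, in_w)
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


small = [[0, 10], [20, 30]]
print(resize_bilinear(small, 3, 3))
```

Interpolation suits images, but annotation masks are usually resized with nearest-neighbour sampling so that labels stay binary.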

Issue 3: High Computational Cost and Model Size Hindering Deployment

- Symptoms: Long training and inference times; model too large for target hardware (e.g., mobile devices or edge computers).

- Investigation Checklist: